458: Cloudflare's GPU Suitcases, Microsoft + Oracle, Palmer Luckey, Minecraft, Bing, GenAI Lawsuits, Industrial APIs, and Ocean's Eleven

"Ma'am, listen to the thunder."

When you are listening to someone you respect, treat their whispers like screams.

—Ramit Sethi

🤠 The quote above reminds me of a great scene in Deadwood:

(the best TV show ever — if you’ve never seen it, the scene won’t work as well out of context, but it’s still good)

Wild Bill Hickok:

You know the sound of thunder, don't you, Mrs. Garrett?

Alma Garrett:

Of course.

Wild Bill Hickok:

Can you imagine that sound if I asked you to?

Alma Garrett:

Yes I can, Mr. Hickok.

Wild Bill Hickok:

Your husband and me had this talk, and I told him to head home to avoid a dark result. But I didn't say it in thunder.

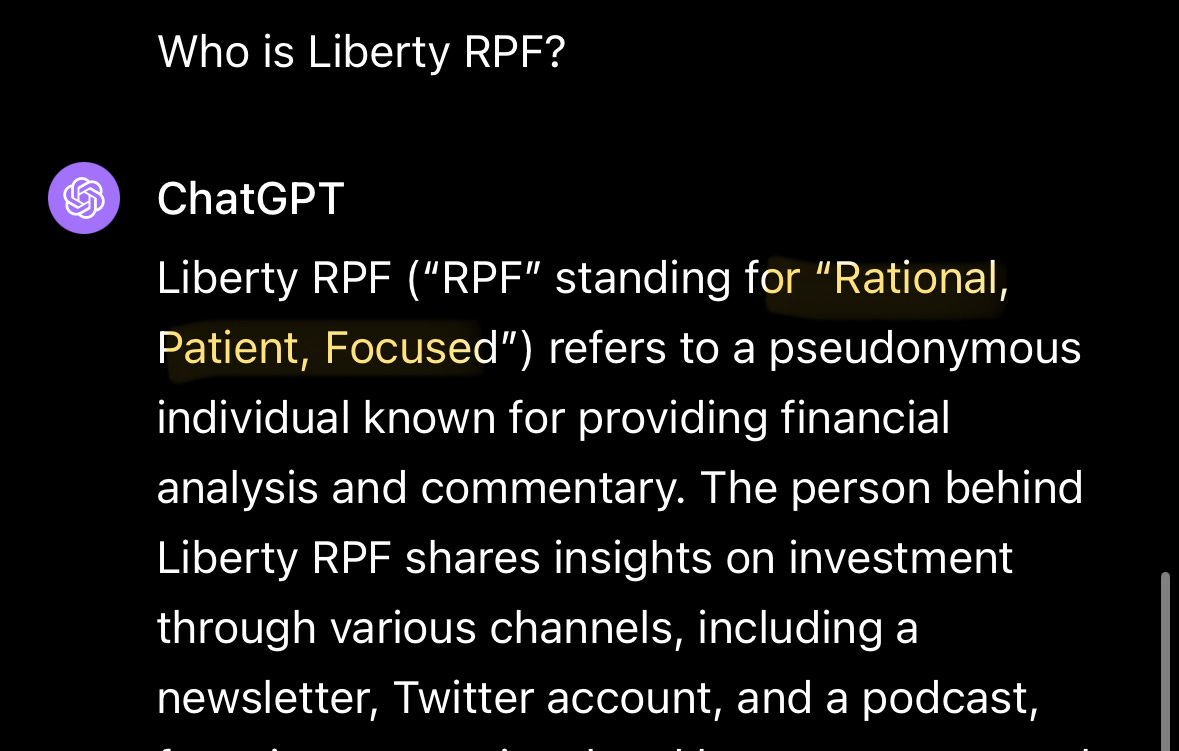

Ma'am, listen to the thunder.

🤖💭 GPT-4 Turbo hallucinated something about me, and it's almost better than the real answer, even though it would be a bit pretentious if I had written it about myself. I guess it's OK to be aspirational sometimes… (do *you* know what RPF stands for?)

🔌🚘🔋I’ve been driving the EV6 for a couple of days, and I have to say, the leap from my old car is like going from a horse carriage to a spaceship. 🐴 🛸

Or to use a nerdier metaphor:

Going from an internal combustion engine to the instant torque of electric motors is like when computers went from spinning hard drives to SSDs. You can’t go back!

On the first night after we picked up the car, I drove around a bit with my family. The kids were more excited than on Christmas morning. 🎁🎄🎅🏻

In fact, before bed, they asked my wife to read them the car’s user manual!

I’m not kidding! My oldest boy was very keen on learning about every feature and capability of the car, including how to change the color of the cabin’s ambient LED lighting and how the various drive modes work.

It's still too early for a full review, but so far I'm very impressed by the engineering and design decisions made by Kia. Fifteen or 20 years ago, I wouldn't have guessed that this company would get this good.

🏦 💰 Liberty Capital 💳 💴

⛅️⏳Cloudflare’s Long-Term Thinking, GPU Edition

CEO Matthew Prince:

We also announced Workers AI to put powerful AI inference within milliseconds of every Internet user.

We believe inference is the biggest opportunity in AI and inference tasks will largely be run on end devices and connectivity clouds like Cloudflare.

Right now, there are members of the Cloudflare team traveling the world with suitcases full of GPUs, installing them throughout our network. We have inference-optimized GPUs running in 75 cities worldwide as of the end of October, we are well on our way of hitting a goal of 100 by the end of 2023.

By the end of 2024, we expect to have inference-optimized GPUs running in nearly every location where Cloudflare operates worldwide, making us easily the most widely distributed cloud-AI inference platform.

I have no idea how big the edge inference opportunity will ultimately turn out to be.

I suppose there will be many use cases where latency matters yet on-device compute isn't an option for whatever reason (power envelope, cost, etc.). But how big is that?

¯\_(ツ)_/¯

It's very hard to predict in advance, since AI is moving fast and a lot of innovation is on the horizon… But it's not a bad idea to position yourself to benefit if the demand materializes. If it doesn't, the GPUs will still be valuable, even if they command a lower premium.

We've been planning for this for the last 6 years, expecting that at some point, we hit the crossover, where deploying inference-optimized GPUs made sense. To that end, starting 6 years ago, we intentionally left one or more PCI slots in every server we built, empty. When the demand and the technology made sense, we started deploying. That means we can use our existing server infrastructure and just add GPU cards, allowing us to add this capability while still staying within our forecast CapEx envelope. And customers are excited. [...]

for people who are paying close attention almost 3 years ago, we actually did an announcement with NVIDIA that was a trial balloon, kind of in the space to see how much demand there was. And at the time, there wasn't a ton of demand, but we could see how the models are improving. Inference is improving.

I remember when they announced that. It seemed like a “uh, kinda cool” thing.

Fast-forward to today: everybody is scouring the world for GPUs, and it looks pretty prescient (see what I wrote below about Microsoft going to Oracle for extra GPUs).

We knew that this was something which was coming. And so we learned from that first thing. I think we've built a really strong relationship with the NVIDIA team in part because of that, and some of the work that we've done with them in the networking space. [...]

we will have a mix of GPUs. Today, we're standardized around NVIDIA, but we're good friends with the folks at AMD and Intel and Qualcomm. We're all doing interesting things and different models from what we've seen perform differently on different types of GPUs that are out there. And so I think you'll find every flavor under the sun of -- from expensive to cheap delivered across the network.

I like how their tech stack is basically a modular fractal.

Every server can run every product and can host hardware from multiple vendors. Even their software stack is cross-compiled for both ARM and x86, so they can buy whatever provides the best ROI at the moment and play one vendor against another. Tasks can be shifted dynamically between data centers depending on load and network conditions. It's very flexible and expandable.

It’s a very cool system to study.