464: Twilio's Big Crunch, Larry & Sergey, Munger, Paying Up, Nvidia, Cablecos vs Telecoms, Copilot, Winter Tires, and Light & Magic

"I would find it tragic if..."

There have never been so many opportunities to be told what to think by people who don’t think. —Stoic Emperor

😎⚛️ In 2023-2024, bragging rights go to whoever has the bigger order of Nvidia H100s and H200s. Rizz is being GPU-rich (there’s a chart making the rounds showing Microsoft and Meta with 150,000 H100s on order each, dwarfing everyone else).

I hope that in 2025-2026, bragging rights will go to whoever orders the most Westinghouse AP1000s…

🛀💭1️⃣ 👏 A few days ago, I was talking to Jim (💚 🥃 🎩) about Life, The Universe, and Everything (as we usually do). He mentioned he’s always looking for things to “root for” instead of “root against”.

This got me thinking about how that’s probably easier for us science & tech nerds because the arrow of progress is relatively clear and the benefits are very tangible.

If, say, politics is your thing, then sure, there are still things to root for and against, but there’s very little real progress and very few real answers. It’s largely cyclical and revolves around personalities. Is “politics” better now than 50 or 100 or 200 years ago? Or is it mostly the same play being repeated over and over with different actors and costumes? (don’t misunderstand me, I’m not saying societies can’t progress — there’s been plenty of that — I’m talking about the daily game of politics itself)

Now look at aerospace or particle physics or semiconductors or software or bioinformatics…

🛀💭2️⃣ 🏗️🛠️ Something else I discussed with Jim is the difference between the generation right after WWII and subsequent generations.

Did they *build everything* because they couldn’t look back, so they had to look forward? So much of what we rely on today was built or started by them.

In recent times, at least in the West, we’ve looked back at decades of mostly peace and prosperity, so we expect it to keep going, as if it’s the natural order of things.

But coming out of WWI, the Great Depression, and WWII, one after the other, it was very clear how bad things could get. That probably promoted more agency and motivated the most talented people to contribute to building a better future.

🚢⚓️ We just passed 21,000 crew members on this steamboat!

That feels like a lot, but numbers have this strange tendency of rapidly becoming abstract, so I did an image search for “crowd of 21,000 people” and oh boy! 😯

Zooming out and looking back once in a while is a good reminder of the power of sticking-to-it.

On the left side of that graph, I had no idea what I was doing or where any of this was going, but I knew I was having fun — on the right side, I still mostly don’t know what I’m doing, but after around 500 Editions (if you count podcasts and special editions), I’m at least making novel mistakes and building on a bunch of shared knowledge that we have together (especially if you’ve been reading for a while — if not, just stick-to-it and that’ll come too!).

🏦 💰 Liberty Capital 💳 💴

📞 Twilio’s Big Crunch 📲 🗜️

I don’t mean to single out this company specifically, but they serve as a good example of what happened to many 2021 darlings.

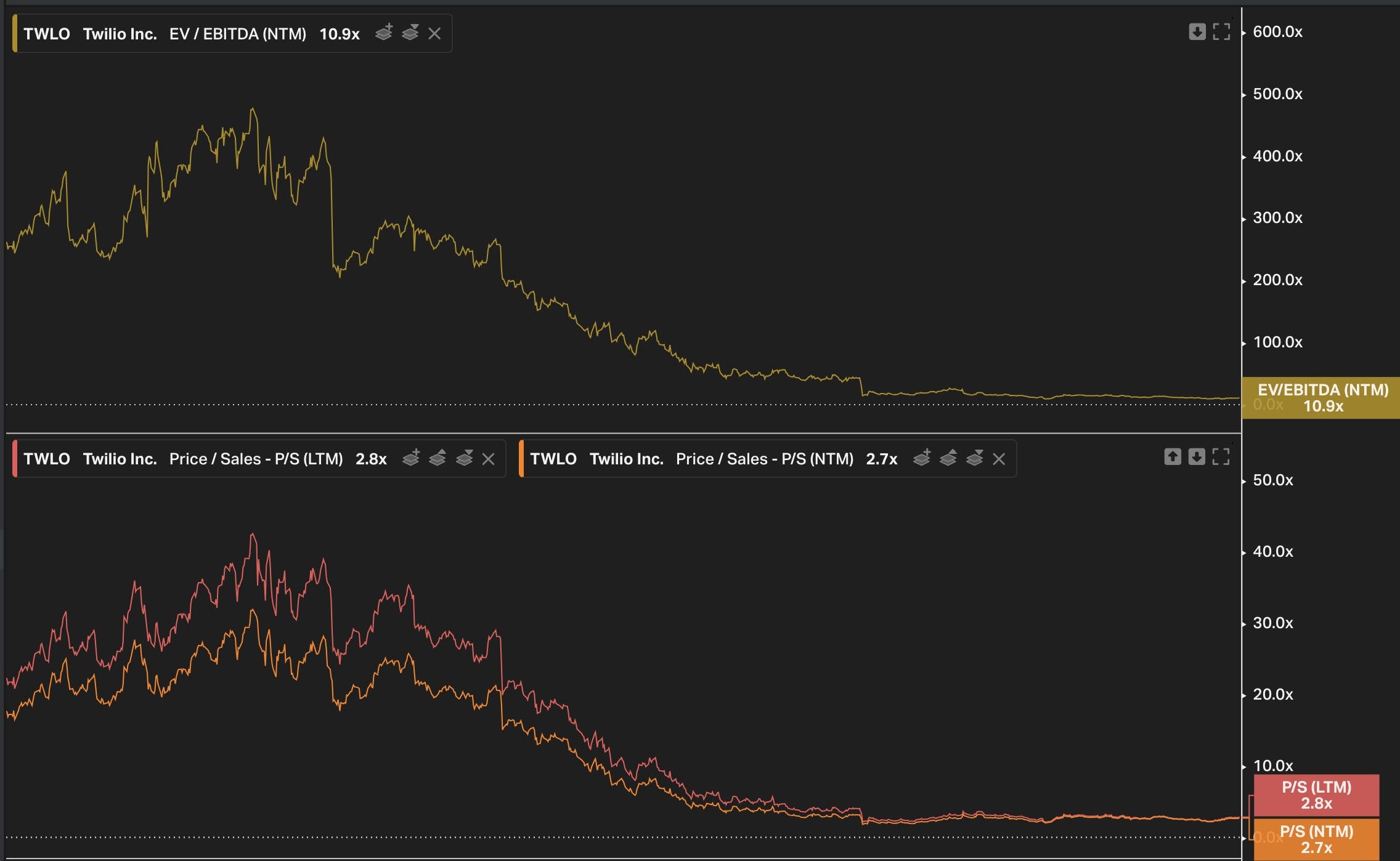

In less than 3 years, TWLO's valuation decreased from 475x EV/EBITDA to 10.8x EV/EBITDA! And from 42.5x sales to 2.8x sales!

Talk about multiple compression!
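For a sense of scale, here's the arithmetic on those two multiples as a small Python sketch (the only inputs are the figures quoted above; the helper names are just for illustration):

```python
# Twilio (TWLO) valuation multiples quoted above: 2021 peak vs. today.
PEAK = {"EV/EBITDA": 475.0, "EV/Sales": 42.5}
NOW = {"EV/EBITDA": 10.8, "EV/Sales": 2.8}


def compression_factor(before: float, after: float) -> float:
    """How many times smaller the multiple got (before / after)."""
    return before / after


def pct_decline(before: float, after: float) -> float:
    """Percentage drop in the multiple, going from `before` to `after`."""
    return (before - after) / before * 100


for metric in PEAK:
    f = compression_factor(PEAK[metric], NOW[metric])
    d = pct_decline(PEAK[metric], NOW[metric])
    print(f"{metric}: {PEAK[metric]:g}x -> {NOW[metric]:g}x = {f:.1f}x compression ({d:.1f}% lower)")
```

That prints roughly 44x compression (97.7% lower) on EV/EBITDA and 15.2x (93.4% lower) on EV/Sales. This compares multiples only: the underlying EBITDA and sales estimates moved over the same period too.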

As MBI points out, it’s worse than it seems because NTM EBITDA overstates profitability since NTM numbers add back share-based compensation (and SBC is *very* high at Twilio…).

Twilio has *never* achieved positive EBITDA margins.
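To see why the SBC add-back flatters the picture, here's a minimal Python sketch with made-up round numbers (hypothetical, NOT Twilio's actual financials). It approximates EBIT with GAAP operating income:

```python
# HYPOTHETICAL figures in $ millions, chosen only to illustrate the mechanics.
# These are NOT Twilio's actual financials.
REVENUE = 1_000.0
OPERATING_INCOME = -150.0   # GAAP operating income, with SBC expensed
D_AND_A = 80.0              # depreciation & amortization
SBC = 200.0                 # stock-based compensation


def ebitda(op_income: float, d_and_a: float) -> float:
    """EBITDA from GAAP operating income: SBC stays in as a cost."""
    return op_income + d_and_a


def adjusted_ebitda(op_income: float, d_and_a: float, sbc: float) -> float:
    """'Adjusted' EBITDA: SBC is added back on top of D&A."""
    return ebitda(op_income, d_and_a) + sbc


def margin_pct(value: float, revenue: float) -> float:
    """Value as a percentage of revenue."""
    return value / revenue * 100


plain = ebitda(OPERATING_INCOME, D_AND_A)
adjusted = adjusted_ebitda(OPERATING_INCOME, D_AND_A, SBC)
print(f"EBITDA (SBC as a cost): {plain:+.0f}M, margin {margin_pct(plain, REVENUE):+.1f}%")
print(f"Adjusted EBITDA (SBC added back): {adjusted:+.0f}M, margin {margin_pct(adjusted, REVENUE):+.1f}%")
```

Same hypothetical business, but the margin flips from -7% to +13%, and any EV/EBITDA multiple computed on the adjusted figure looks cheaper than the economics justify, since SBC is still a real cost to shareholders, paid in dilution rather than cash.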

🧠 The Benefits of an Open Mind and Genuine Curiosity 🕵️♂️

I’m still processing Munger’s departure. At some point, I’ll have more to say.

My friend MBI (🇧🇩🇺🇸) writes:

Munger met Buffett at the age of 35. How many people actually meet their best friends after 30?

Munger met Li Lu when he was nearly 80 years old. How many people actually form deep intellectual partnerships at such an age?

If there's anything I want to learn from his life, it is to live life with intense curiosity and be open to the idea that the best years may be ahead of me. Such belief, even if it proves to be wrong, is deeply optimistic and may lead to a more fun and interesting life.

Very well said 💚 🥃

I would find it tragic if my best days were all clustered in my youth, and then I progressively crystallized into an inflexible person, no longer interested in learning or genuinely connecting with others.

See also:

I haven’t had time to listen to it yet, but I’ve seen enough highlights from the transcripts in one of my group chats to know it’s worth listening to. I’ll have more to say once I’ve heard the whole thing.

🍫 Munger’s definition of “paying up for quality” 💝

Whenever Munger’s influence on Buffett is brought up, one of the major points is how he turned Buffett from a cigar-butt investor to someone who paid up for quality.

As is usually the case, reality is more complex.

Even Munger said that Buffett was going in a more Phil Fisher direction on his own and would probably have ended up in the same place without him.

But it’s also worth remembering that Buffett and Munger’s definition of “paying up” isn’t what a lot of “quality investors” with loose discipline on price think of when they consider “paying up”.

Friend-of-the-show John Huber points out (💚 🥃):

Munger’s definition of “paying up” was a lot cheaper than I think many realize. They paid 6x pretax earnings for See’s Candies, which was growing.

Corporate tax rate was 48% in 1972, but See’s was even cheaper than it appeared. I believe they bought it thinking they could raise prices and see earnings grow 50% in the first year, which would have made it a 14% yield in the first year (7x after-tax earnings). Snowball has the details on valuation.

Of course, the context of what the multiples and interest rates were at the time must be considered, but it’s still a good reminder of how disciplined they were on price.
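Here's a back-of-the-envelope version of that See's math as a Python sketch. It uses only the quote's inputs (6x pretax earnings, a 48% tax rate, an assumed 50% first-year earnings jump) and simplifies everything else away, so treat it as illustrative rather than a reconstruction of the actual deal:

```python
# Inputs taken from the quote; everything else is deliberately simplified.
PRICE_TO_PRETAX = 6.0    # paid 6x pretax earnings
TAX_RATE = 0.48          # 1972 corporate tax rate
YEAR_ONE_GROWTH = 0.50   # assumed earnings jump from raising prices


def after_tax_yield(price_to_pretax: float, tax_rate: float, growth: float = 0.0) -> float:
    """After-tax earnings yield on the purchase price, given pretax-earnings growth."""
    pretax_yield = (1 + growth) / price_to_pretax
    return pretax_yield * (1 - tax_rate)


at_purchase = after_tax_yield(PRICE_TO_PRETAX, TAX_RATE)
year_one = after_tax_yield(PRICE_TO_PRETAX, TAX_RATE, YEAR_ONE_GROWTH)

print(f"Pretax yield at purchase:    {1 / PRICE_TO_PRETAX:.1%}")
print(f"After-tax yield at purchase: {at_purchase:.1%} (~{1 / at_purchase:.1f}x after-tax earnings)")
print(f"After-tax yield in year one: {year_one:.1%} (~{1 / year_one:.1f}x after-tax earnings)")
```

Under these simplified assumptions, the purchase works out to about an 8.7% after-tax yield (~11.5x after-tax earnings) on day one and about 13% (~7.7x) after the assumed first-year jump, in the same neighborhood as the ~14% / 7x in the quote. The exact figure depends on the earnings base and tax details used.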

🚨 Larry & Sergey Watch, Edition #37 🥸🥸

Here is your semi-regular reminder that Larry Page and Sergey Brin are two of the wealthiest and most powerful people in the world, the real power behind Google and all that this implies (touching billions of people’s daily lives + steering their AI efforts), yet we rarely hear about them anymore and I have no idea what they’re up to.

Page’s Wikipedia entry has almost no info on what he’s been up to after 2019, and Brin’s has fewer details than those of most B-movie actors.

📢 US Commerce Secretary with a clear message for Nvidia 🇨🇳✋🚚📦🤖🤖🤖🤖🤖

I recently told you that I had some concerns regarding Nvidia’s relationship with China. Although they are most likely following the letter of the law, I’m uncertain about the spirit.

US Commerce Secretary Gina Raimondo seems to agree:

“We cannot let China get these chips. Period,” she said at the Reagan National Defense Forum in Simi Valley, California, on Saturday. “We’re going to deny them our most cutting-edge technology.” [...]

“I know there are CEOs of chip companies in this audience who were a little cranky with me when I did that because you’re losing revenue,” she said. “Such is life. Protecting our national security matters more than short-term revenue.” [...]

Raimondo called out Nvidia Corp., which designed chips specifically for the Chinese market after the US imposed its initial round of curbs in October 2022.

“If you redesign a chip around a particular cut line that enables them to do AI, I’m going to control it the very next day,” Raimondo said. (Source)

She went on to say that the Department of Commerce needs more resources to properly enforce export controls (“I have a $200 million budget. That’s like the cost of a few fighter jets. Come on,” she said. “If we’re serious, let’s go fund this operation like it needs to be funded.” “Every day China wakes up trying to figure out how to do an end run around our export controls... which means every minute of every day, we have to wake up tightening those controls and being more serious about enforcement with our allies,” she said).

It wouldn’t surprise me if a bunch of A100s and H100s (and soon H200s) end up in China after being officially sold in other countries, or if big GPU clusters are being built in some countries to be operated by Chinese entities (possibly through shell companies and various proxies).

📺 🤜💥🤛📞 Interview: Craig Moffett: Cablecos vs Telecoms

I enjoyed this discussion between friend-of-the-show Bill Brewster (💚 🥃) and Craig Moffett on the telecommunications industry and the various competitive dynamics at play as new technologies reshape the industry (5G, Fixed Wireless, Fiber-to-the-home, DOCSIS 3.1 and 4.0, Starlink, etc.).

To me, the most interesting part was the explanation of why cable companies may have a cost advantage over telecoms when it comes to wireless even though they are mostly using the telecoms’ infrastructure via MVNOs.

It’s not intuitive at first glance but makes a lot of sense when you look at the details.

Related: Ex-Charter CEO Tom Rutledge sold $100m worth of Charter stock on November 21st. Yesterday, the company announced deteriorating KPIs at an investor conference and the stock fell around 9%. Coincidence? 🤨


🚚📦📦📦 US Domestic Parcel Delivery Market Share 📫

“2023 will mark the 11th consecutive year of market share loss for UPS/FedEx combined.” (h/t Kourosh)

🧪🔬 Liberty Labs 🧬 🔭

🎓 Andrej Karpathy: The Busy Person’s Intro to Large Language Models 🤖

Wanna level up your understanding of what is going on inside these magical software black boxes? This is a great primer by one of the top practitioners in the field.

🤖 Top Generalist AI Models can Beat Specialist Models at their Specialities 🥼🩻

It’s easy to have the intuition that generalist models will be pretty good at everything and that specialist models that are fine-tuned on a specific domain will be much better at that thing, but a study looking at medical models like Google’s Med-PaLM 2 vs GPT-4 found that with proper prompting the generalist model can outperform the medical model:

We find that prompting innovation can unlock deeper specialist capabilities and show that GPT-4 easily tops prior leading results for medical question-answering datasets. The prompt engineering methods we explore are general purpose, and make no specific use of domain expertise, removing the need for expert-curated content [...]

The method outperforms state-of-the-art specialist models such as Med-PaLM 2 by a large margin with an order of magnitude fewer calls to the model. Steering GPT-4 with Medprompt achieves a 27% reduction in error rate on the MedQA dataset (USMLE exam) over the best methods to date achieved with specialist models, and surpasses a score of 90% for the first time. Moving beyond medical challenge problems, we show the power of Medprompt to generalize to other domains and provide evidence for the broad applicability of the approach via studies of the strategy on competency exams in electrical engineering, machine learning, philosophy, accounting, law, nursing, and clinical psychology.

Is this an early sign that there will be few winners and that the same cutting-edge models will be at the front of the pack in multiple verticals?

Presumably, a fine-tuned GPT-4 should do even better than the generalist model with Medprompt, so does it all come down to having the smartest foundation model, with everything else just building on it? 🤔
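Note that a “27% reduction in error rate” is relative, not 27 percentage points. A small Python sketch of the arithmetic (hypothetical helper names; the only inputs taken from the quote are the 27% and the 90% mark):

```python
def relative_error_reduction(old_acc: float, new_acc: float) -> float:
    """Fraction by which the error rate (1 - accuracy) shrank."""
    old_err, new_err = 1 - old_acc, 1 - new_acc
    return (old_err - new_err) / old_err


def min_prior_accuracy(target_acc: float, reduction: float) -> float:
    """Lowest prior accuracy from which a relative `reduction` in error reaches `target_acc`."""
    # new_err = old_err * (1 - reduction) must not exceed 1 - target_acc
    return 1 - (1 - target_acc) / (1 - reduction)


floor = min_prior_accuracy(target_acc=0.90, reduction=0.27)
print(f"Prior best must already have been above ~{floor:.1%}")

# Hypothetical example: going from 80% to 85.4% accuracy is also a 27% error reduction.
print(f"{relative_error_reduction(0.80, 0.854):.0%}")
```

In other words, near the top of the scale, a large relative error reduction corresponds to a gain of only a few percentage points of accuracy (here, from somewhere above ~86.3% to above 90%).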

🛞❄️ This is How Much Difference Winter Tires Make 🤕

Recently, I bought winter tires for our new EV6, which turned into quite a rabbit hole.

Going in, I didn’t know much about tires — I knew they were very important, but I had never taken the time to learn, so I mostly bought whatever was cheapest…

But now, after learning about the various trade-offs of different types of tires, finding great review sources and technical primers on the characteristics of modern tires, etc., I can never go back to low-quality tires (they don’t even save you much money since they don’t last as long, and what is your family’s safety worth?).

What’s frustrating is that all this knowledge doesn’t have anywhere to go. I don’t need new tires for a few years! Oh well, it was fun to learn ¯\_(ツ)_/¯

👀📎🤖 Microsoft is Updating Copilot to use GPT-4 Turbo with 128k Context Window

We all knew it was coming:

Copilot will soon be able to respond using OpenAI’s latest GPT-4 Turbo model, which essentially means it will “see” more data thanks to a 128K context window. This larger context window will allow Copilot to better understand queries and offer better responses. “This model is currently being tested with select users and will be widely integrated into Copilot in the coming weeks.”

The larger context window will probably make a bigger difference here than with ChatGPT since Copilot inherently works with longer documents.
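To get a feel for what 128K tokens means in practice, here's a rough Python sketch that checks whether a document plus a prompt fits in the window. The ~4 characters-per-token ratio is a commonly cited rule of thumb for English text, used here as an assumption (a real tokenizer gives exact counts), and the tokens reserved for the reply are an arbitrary illustrative value:

```python
# ASSUMPTION: ~4 characters of English text per token (rule of thumb, not a tokenizer).
CHARS_PER_TOKEN = 4
CONTEXT_WINDOW = 128_000   # GPT-4 Turbo's advertised context window, in tokens


def estimate_tokens(text: str) -> int:
    """Rough token estimate: character count divided by 4, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def fits(document: str, prompt: str, reserved_for_reply: int = 4_000,
         window: int = CONTEXT_WINDOW) -> bool:
    """True if document + prompt + room for the reply fit in the context window."""
    needed = estimate_tokens(document) + estimate_tokens(prompt) + reserved_for_reply
    return needed <= window


print(fits("x" * 400_000, "Summarize this document."))
print(fits("x" * 600_000, "Summarize this document."))
```

Under that heuristic, a ~400,000-character document (~100,000 tokens) fits with room to spare, while ~600,000 characters (~150,000 tokens) would need to be split or summarized in chunks.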

The Copilot code interpreter is also getting an upgrade.

🎨 🎭 Liberty Studio 👩🎨 🎥

Light & Magic (2022, mini-series on Disney+)

I really enjoyed this 6-parter on the special effects industry broadly and Industrial Light & Magic more specifically.

It all began with the production of ‘Star Wars’ in 1975. The amount of innovation and invention that came out of that film is pretty incredible.

Also, gotta love how Lucas described what he wanted as Kubrick’s ‘2001: A Space Odyssey’, but kinetic and fast-moving.

The documentary series shows history through the biggest films that ILM worked on, creating a very interesting arc from the analog era of Star Wars and Indiana Jones to films like Terminator 2 and Jurassic Park, marking the Big Bang of the digital era.

It’s impressive to see how George Lucas was the prime moving force for so much from so early. From the very start, he pushed for digital editing, digital sound, digital special effects, etc. It seems obvious now, but it didn’t at the time.

Pixar was spun off from his efforts (and sold to Steve Jobs).

The human stories of that fast-changing industry feel familiar.

People spent years building skills that within a few years became mostly obsolete.

While some were celebrating the rapid progress on the digital side, others were mourning the death of the model shop and of building things with their hands.

Multiple people mention that they expected things to change, but that it happened way faster than they predicted — which is a common refrain when it comes to AI these days.

https://x.com/nearcyan/status/1732509656478880039?s=20

Light & Magic sounds cool!

I think you'd like Jurassic Punk, which is a pretty cool documentary about the guy who effectively kickstarted the CGI revolution in the early '90s while working on The Abyss, Terminator 2, and Jurassic Park (I actually watched it on the plane to the OSV retreat ✈️)