533: Apple's Two-Trillion Product, Zuck on Llama 4, TSMC, Samsung, SK Hynix, Intel, AWS AI, Closed-Loop Platforms, Coffee, and Military Innovation

"millions of creators are dancing to the same tune"

Luck is statistics taken personally.

—Penn Jillette

☕️ Some caffeine news:

I bought a new grinder. I returned my malfunctioning Fellow Opus, which was otherwise nice (I wish it had worked out), but after that problem I stopped trusting the design, so I decided against replacing it. That left me unsure what to get: every model has different trade-offs, and I wasn’t sure which way to go.

For a while, my top choice was the Timemore Sculptor 064S (photo 👆). It looks a bit like a sewing machine, which is an acquired taste, but it has strong reviews and seems well-designed and durable.

However, at $600+, it’s expensive and felt like overkill for an espresso newbie…

I then almost went in the opposite direction with the Breville Smart Grinder Pro, which is more of an entry-level grinder. I was very close to ordering it (it was on sale!), but after some final due diligence, I changed my mind and got the Baratza Encore ESP instead 👇

The Encore ESP is almost identical in design to the Baratza Encore that I’ve been using for 10+ years, but it has better M2 burrs and 20 extra steps for espresso grinding. There’s a clever mechanism that makes those “ESP” steps move by a few microns for precise adjustment, and when you go beyond those, the burrs start moving in larger increments. Oh, and it’s 20% faster than the regular Encore.

Based on the reviews, the Encore ESP grinds more consistently and has less retention than the Breville, making my decision easy.

I’ll provide an update after I’ve used it for some time. Stay caffeinated and stay tuned!

🎙️🎧🚨🔌🤖 And now for a different kind of energy than caffeine, my new podcast with Mark Nelson is out:

Mark drops many truth bombs 💣💣💣 I hope you enjoy it!

🏦 💰 Business & Investing 💳 💴

📱🍎 The Two Trillion Dollars Product 💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰💰

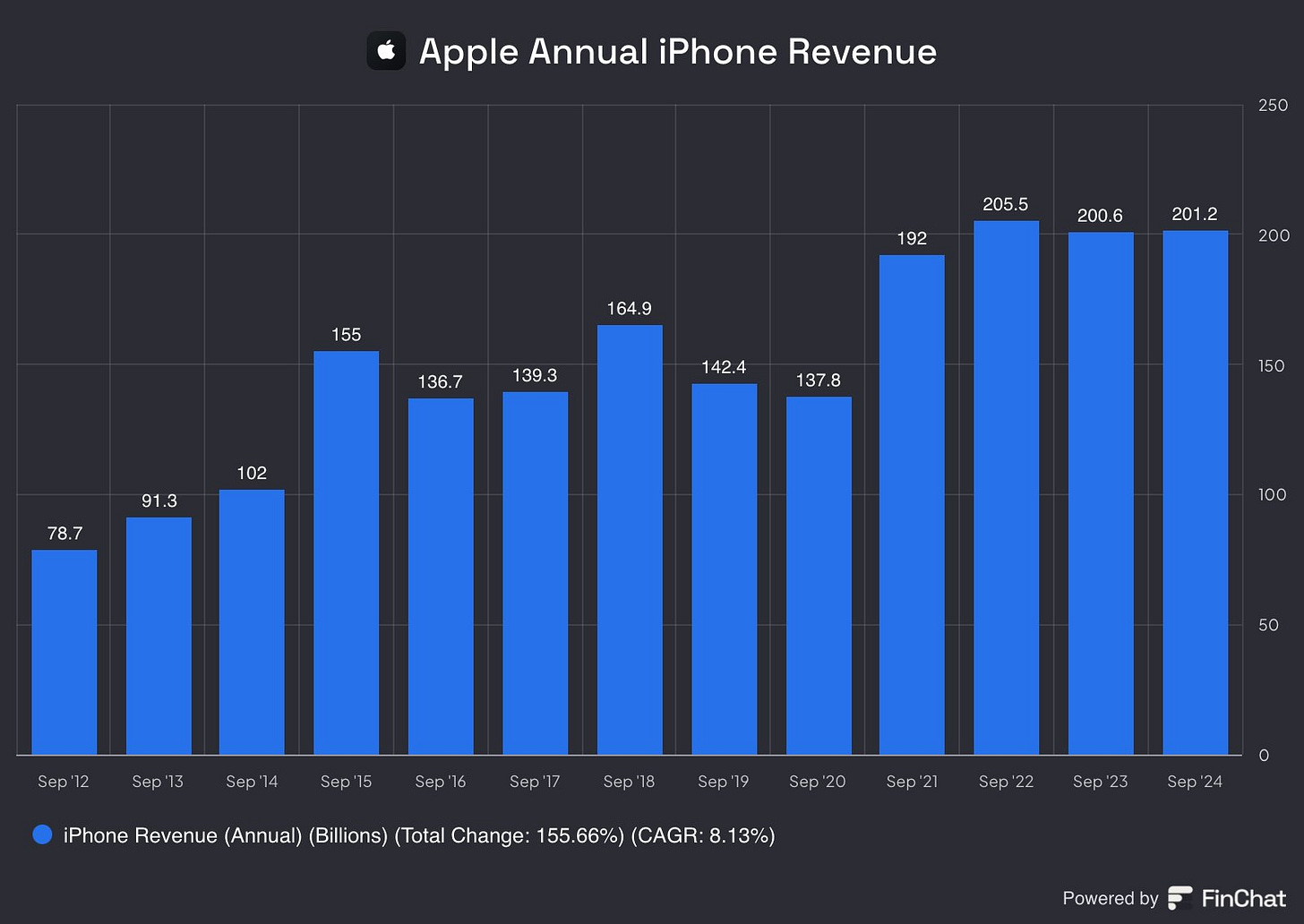

This chart was recently posted by Evan with the caption:

"Apple has now sold $937 billion worth of iPhones over the last 5 years."

This made me curious about the cumulative revenue from the iPhone since its launch in 2007: just the phone itself, not the service revenue related to it, or the ecosystem benefits of people buying iPhones and then buying other Apple products.

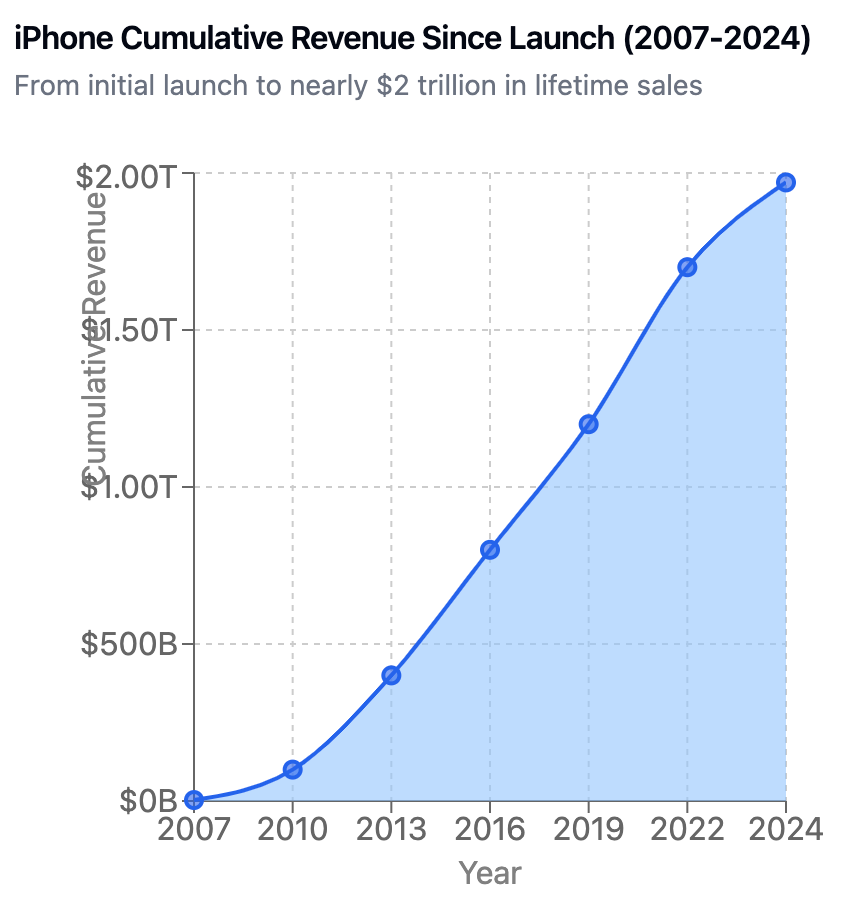

My napkin estimate falls in the range of $1.65 trillion to $2 trillion (🤯)

That’s a lot of moolah! 🌾

The best estimates for iPhone-related services fall in the range of ~$600-700 billion if you include App Store revenue and a proportional share of other services.

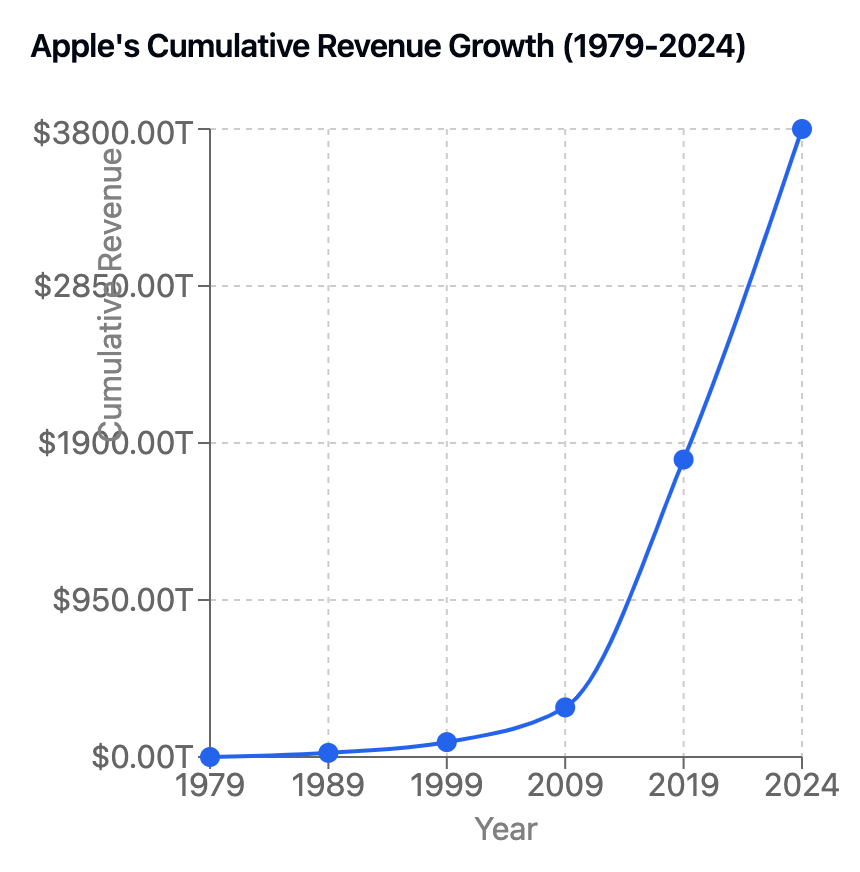

For fun, I tried to estimate the total cumulative revenue for all of Apple since it was founded (🍏 1976-2024 🍎). I got ~$3.8 trillion (🤯🤯🤯)

Apple trivia:

It took Apple a little under 25 years to reach its first $100 billion in cumulative revenue

Since the holiday quarter in FY2021, it has generated at least that much revenue ($100bn) each holiday quarter 🎄🎁

More than half of its historical revenue has been generated in the last 5 years

The iPhone era (2007 to now) accounts for roughly 80% of all revenue in Apple's history 📲

iPhones sold: Over 2 billion worldwide since launch (Apple hasn’t reported units since 2018, but we can estimate them from revenue)

(all these numbers could be wrong, double-check the math before using this in your presentation at HBS)
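The "estimate based on revenue" step can be sketched in a few lines: divide each year's iPhone revenue by an assumed average selling price (ASP) and add up the results. This is a minimal sketch under that assumption, not necessarily how the figure above was produced; the function name `estimate_units` and every input number are hypothetical placeholders, not Apple data.

```python
# Napkin sketch: estimate unit sales from revenue when units aren't reported.
# Assumption: units ~= revenue / average selling price (ASP), year by year.
# The numbers in the example are hypothetical placeholders, not Apple's data.

def estimate_units(revenue_by_year: dict[int, float], asp_by_year: dict[int, float]) -> float:
    """Sum revenue / ASP for each year in revenue_by_year.

    Every year with revenue needs a positive ASP, otherwise ValueError is raised.
    """
    total = 0.0
    for year, revenue in revenue_by_year.items():
        asp = asp_by_year.get(year)
        if asp is None or asp <= 0:
            raise ValueError(f"missing or invalid ASP for {year}")
        total += revenue / asp
    return total


if __name__ == "__main__":
    # Hypothetical inputs: two years at $200B revenue and an $800 ASP each.
    revenue = {2022: 200e9, 2023: 200e9}
    asp = {2022: 800.0, 2023: 800.0}
    units = estimate_units(revenue, asp)
    print(f"{units / 1e6:.0f} million units")
```

The result is only as good as the ASP assumption, which is the hard part: ASPs have shifted over the years as the lineup changed, so applying one flat ASP to every year would skew the total.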

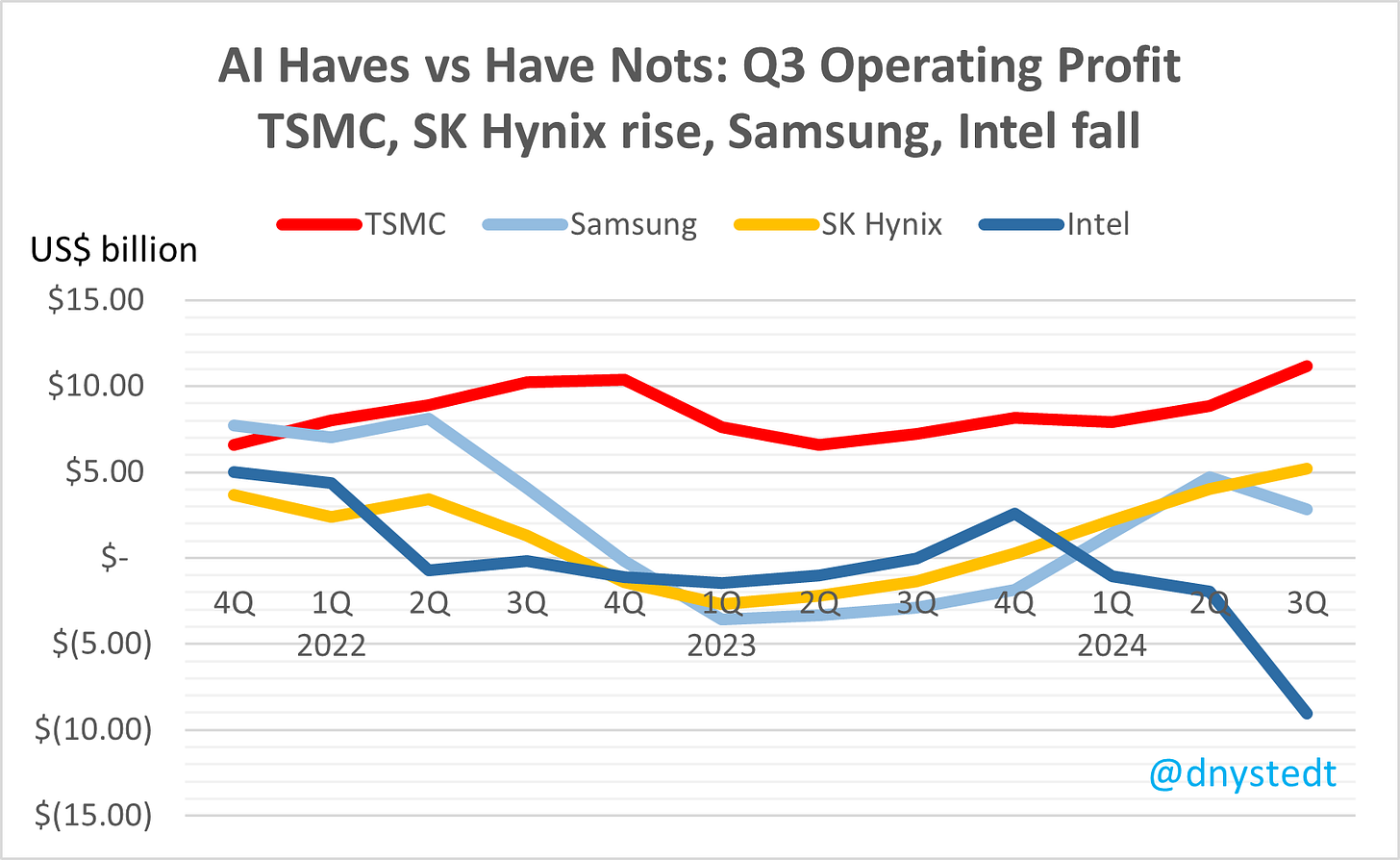

TSMC, Samsung, SK Hynix, and Intel — The Big Four of Semi Fabbing 🐜 🏗️

The graph above is worth a thousand words when it comes to Intel and Samsung’s troubles.

Dan Nystedt calls it ‘the AI haves vs have-nots’ (which reminds me of friend-of-the-show Dylan Patel’s ‘GPU rich’ and ‘GPU poor’) as TSMC and SK Hynix separated from Samsung and Intel.

Here’s revenue:

TSMC: $23.50 billion

Samsung’s chip division: $21.58 billion

Intel: $13.28 billion

SK Hynix: $12.96 billion

And here’s operating profit:

TSMC: $11.16 billion

SK Hynix: $5.18 billion

Samsung’s chip division: $2.85 billion

Intel: Loss ($9.06 billion)

Ouch 😬
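Dividing operating profit by revenue makes the gap easier to see. A minimal Python sketch using only the figures listed above (in billions of USD, with Intel's operating loss entered as a negative number):

```python
# Operating margin = operating profit / revenue, using the quoted figures
# (USD billions; Intel's operating loss is entered as a negative number).
revenue = {
    "TSMC": 23.50,
    "Samsung (chips)": 21.58,
    "Intel": 13.28,
    "SK Hynix": 12.96,
}
operating_profit = {
    "TSMC": 11.16,
    "SK Hynix": 5.18,
    "Samsung (chips)": 2.85,
    "Intel": -9.06,
}


def operating_margin(company: str) -> float:
    """Return operating margin as a fraction of revenue."""
    return operating_profit[company] / revenue[company]


# Print companies from highest to lowest margin.
for name in sorted(revenue, key=operating_margin, reverse=True):
    print(f"{name:<16} {operating_margin(name):>7.1%}")
```

That works out to roughly 47.5% for TSMC, 40.0% for SK Hynix, 13.2% for Samsung's chip division, and -68.2% for Intel.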

🇰🇷🐜🛑 ‘Samsung to shut down 50% of foundry production lines to cut costs’ 🤕 🤒

Speaking of Samsung…

Samsung Electronics’ semiconductor division is temporarily shutting down production lines at its foundry facilities to reduce costs. Analysts estimate the chipmaker’s foundry business recorded losses amounting to 1 trillion won in the third quarter, prompting the company to take cost-cutting measures by deactivating parts of its production lines.

Samsung Electronics has already shut down over 30% of its 4nm, 5nm, and 7nm foundry production lines at Pyeongtaek Line 2 (P2) and Line 3 (P3) and plans to expand the shutdown to about 50% of its facilities by the end of the year.

🤖 Andy Jassy on AWS’ AI Business, H200s, Trainium 2 📈

From the Q3 call:

AWS's AI business is a multibillion-dollar revenue run rate business that continues to grow at a triple-digit year-over-year percentage and is growing more than three times faster at this stage of its evolution as AWS itself grew, and we felt like AWS grew pretty quickly. We talk about our AI offering as three macro layers of the stack, with each layer being a giant opportunity and each is progressing rapidly.

🚀

They say they were first to offer Nvidia’s improved Hopper:

we were the first major cloud provider to offer NVIDIA's H200 GPUs through our EC2 P5e instances

And it sounds like Trainium 2 is seeing better-than-expected demand:

The second version of Trainium, Trainium2 is starting to ramp up in the next few weeks and will be very compelling for customers on price performance.

We're seeing significant interest in these chips, and we've gone back to our manufacturing partners multiple times to produce much more than we'd originally planned.

For more highlights from Amazon’s Q3, check out MBI’s great piece.

I find their advertising efforts particularly impressive. They’ve gone from almost nothing a few years ago to coming ever closer to competing directly with Google and Meta at scale.

🐦🔒✋🛑 🚧 Twitter is not a discovery platform anymore + The TikTokification of every Closed-Loop Platform 🤔

Twitter used to be great for discovery (for finding all kinds of interesting articles, websites, videos, newsletters, podcasts, etc.), but now the site appears focused on keeping users scrolling by preventing them from leaving the platform.

The “For You” feed, which most users default to, increasingly displays engagement bait from accounts you don’t follow and political garbage unless users have actively muted about a hundred different words.

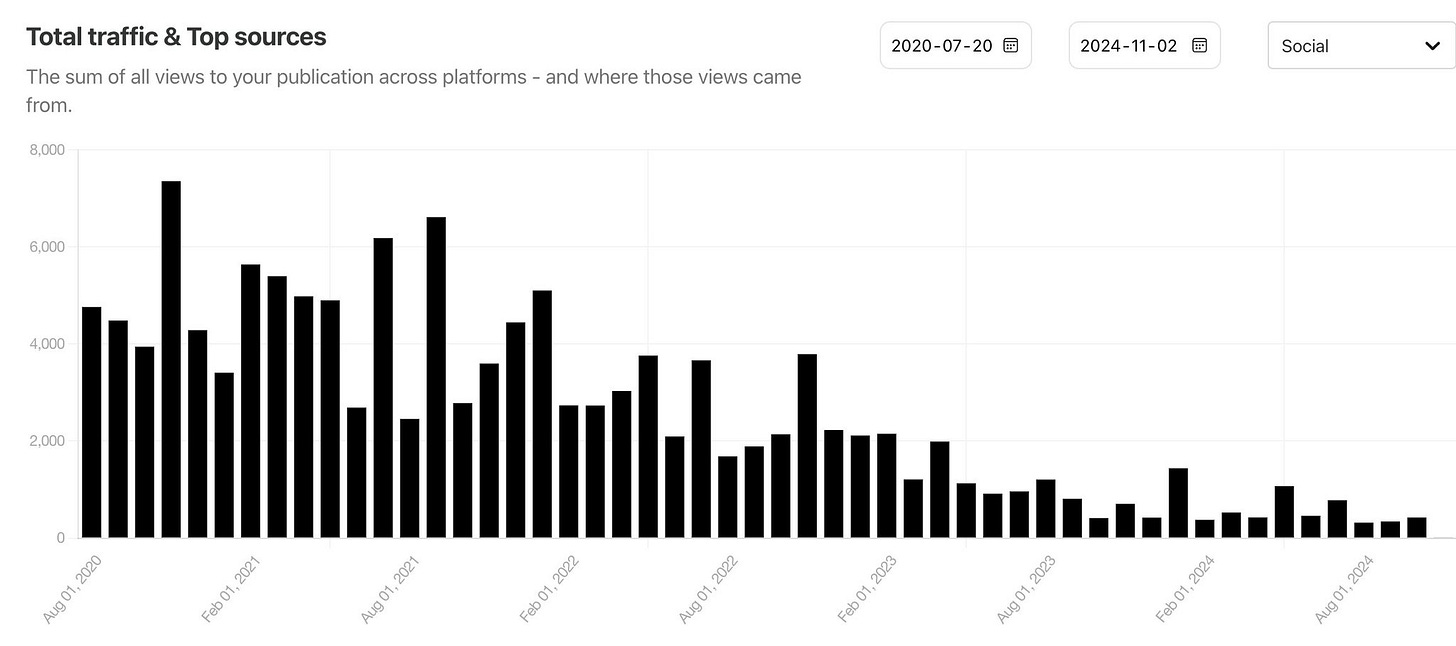

This platform containment strategy is evident in the data:

The graph shows traffic from social sources to this steamboat 🚢⚓️ (i.e., almost all from Twitter, since that’s where I’m active). Despite growing my subscriber base *tenfold*, I now receive less Twitter traffic than when I had a couple thousand subscribers, making the decline even more significant than it appears.
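To see why the decline is bigger than it looks, let T be Twitter referral traffic and S the subscriber count, measured at an earlier point ("then") and today ("now"). These symbols are introduced here only for illustration. Comparing referrals per subscriber:

$$
\frac{T_{\text{now}}/S_{\text{now}}}{T_{\text{then}}/S_{\text{then}}}
= \frac{T_{\text{now}}}{T_{\text{then}}}\cdot\frac{S_{\text{then}}}{S_{\text{now}}}
< 1 \cdot \frac{1}{10} = \frac{1}{10}
$$

since $T_{\text{now}} < T_{\text{then}}$ and $S_{\text{now}} \approx 10\,S_{\text{then}}$. In other words, Twitter traffic per subscriber has fallen by more than 90%.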

I miss Twitter’s former role as a central hub for the open web. It was a symbiotic relationship — by helping people discover great stuff, it allowed people to *create* more great stuff. It made it more viable to color outside the lines.

Now that social platforms are all increasingly closed-loop walled gardens, content creation is largely confined to YouTube, Instagram, Twitter, and TikTok. This consolidation makes everything more homogeneous and limits experimentation to the parameters set by a few mega-corporations.

Algorithmic changes on YouTube/Instagram/TikTok very directly SHAPE what kind of content is being made and what kind of content ISN’T being made. If longer videos are rewarded for a while, everyone’s videos get longer. Oh, now they’re pushing short-form to compete with TikTok? Everybody makes TikTok-style shorts. It’s why every thumbnail looks the same…

This algorithmic control means millions of creators are dancing to the same tune. This can’t be good.

These platforms are the new middlemen between creators and their fans, putting up an increasing number of toll booths over time.

Currently, Reddit and Substack are the primary platforms that still facilitate discovery of content across the web.

But now that Reddit is a public company, there are concerns it might turn into yet another closed loop…

🧪🔬 Science & Technology 🧬 🔭

🗣️ Christopher Kirchhoff: Military Innovation and the Future of War 🔮

I enjoyed this interview with Kirchhoff. In fact, I ordered his book halfway through.

If you have any interest in the way weapons systems are created, the incentive system for the big defense primes versus the new breed of private-sector upstarts (Anduril, Shield AI, SpaceX, etc.), and how commercial technology is increasingly outpacing traditional military development, this should be right up your alley.

You can get the video and audio versions as well as the transcript here:

On the cover of Unit X, we have two images. One is of the iPhone; the other is of the F-35 fighter, the most advanced fifth-generation stealth fighter in the world.

What most people in America don’t realize is that these technologies are produced from completely separate technology ecosystems, and they work very differently under very different incentives. The design of the F-35 was actually frozen in 2001 when the Pentagon awarded the production contract to Lockheed Martin. It didn’t become operational until 2016. That’s a long time in technology years. Look at the iPhone. Its processor is changed every year.

They also go into autonomous weapons and drone warfare and how Ukraine has rapidly grown a fairly decentralized drone industry that can adapt to new countermeasures within days.

🦙🤖 Zuckerberg: Llama 4 is Training on Cluster “Bigger than anything else” (implying more than 100k H100s)

The arms race for training clusters isn’t slowing down:

Zuckerberg told investors and analysts on an earnings call, with an initial launch expected early next year. “We're training the Llama 4 models on a cluster that is bigger than 100,000 H100s, or bigger than anything that I've seen reported for what others are doing,” Zuckerberg said, referring to the Nvidia chips popular for training AI systems. “I expect that the smaller Llama 4 models will be ready first.” [...]

In March, Meta and Nvidia shared details about clusters of about 25,000 H100s that were used to develop Llama 3. In July, Elon Musk touted his xAI venture having worked with X and Nvidia to set up 100,000 H100s. “It’s the most powerful AI training cluster in the world!”

Friend-of-the-show Dylan Patel has estimated that a cluster of 100,000 H100 chips would require 150 megawatts of power, so we’re fast approaching the gigawatt scale, which Mark Nelson and I discussed. 🔌🤖
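To put Dylan's estimate in perspective, here is a back-of-envelope sketch. It assumes (our assumption, not his) that power scales linearly with GPU count, so the implied per-GPU figure stays fixed as clusters grow; the helper names are made up for illustration.

```python
# Back-of-envelope scaling of the quoted estimate:
# 100,000 H100s -> ~150 MW for the whole cluster.
# Assumption: power scales linearly with GPU count, so the implied all-in
# watts per GPU (chip plus its share of servers, networking, cooling) is constant.

CLUSTER_GPUS = 100_000
CLUSTER_WATTS = 150e6  # 150 megawatts

watts_per_gpu = CLUSTER_WATTS / CLUSTER_GPUS  # implied all-in watts per H100


def cluster_megawatts(num_gpus: int) -> float:
    """Estimated cluster power in megawatts under the linear assumption."""
    return num_gpus * watts_per_gpu / 1e6


def gpus_for_gigawatts(gigawatts: float) -> int:
    """Roughly how many H100-class GPUs a given power budget could feed."""
    return round(gigawatts * 1e9 / watts_per_gpu)


print(f"Implied all-in power per GPU: {watts_per_gpu:,.0f} W")
print(f"25,000 H100s (Llama 3 scale): ~{cluster_megawatts(25_000):.1f} MW")
print(f"GPUs that 1 GW could power: ~{gpus_for_gigawatts(1.0):,}")
```

The implied ~1,500 W per GPU is roughly double an H100 SXM's 700 W rated power, presumably because the estimate includes servers, networking, and cooling. Under the linear assumption, a gigawatt corresponds to roughly two-thirds of a million H100-class GPUs.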

🛀💭 🤖🧠💾 Thought Experiment: The Theoretical Minimum Storage & Compute Requirements for AGI? 🤔

Let's assume that AGI is possible and that we can create a human-level AGI that can do anything a human can through a computer 👩‍💻

I’m fascinated by how small a “mind” could be in digital format, ones and zeros, as a file. If we assume compression and sparsity and all the tricks we know and even some that we don’t, what’s the minimum storage that such a model could require, in theory?

How about compute? Let’s assume we want AI to run in “real-time”, or at the same speed as a similarly smart human.

What’s the minimum amount of compute that could achieve this, in theory?

We could use whatever the human brain does as a starting point, but evolution hasn’t optimized us just for efficiency; there’s a lot of evolutionary pressure for other things, like survivability, robustness, reproductive drive, and such. If the goal were just to make the smallest human-equivalent model, running in real time and using the least possible compute… what would that require? We might find requirements surprisingly different from those of our biological implementation.
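Even if the real answer is out of reach, the napkin math has a simple shape if one assumes (a big assumption) that the "mind" is a dense neural network: storage is parameters times bits per parameter, and real-time compute is roughly 2 FLOPs per parameter per token times the desired speed, a common rule of thumb for dense transformers. The parameter count, bit width, and tokens-per-second values below are hypothetical knobs, not estimates of what AGI requires.

```python
# Napkin framing for the thought experiment, under one loud assumption:
# the "mind" is a dense neural network with a known parameter count.
# All inputs below are hypothetical knobs, not estimates of AGI requirements.


def storage_bytes(num_params: float, bits_per_param: float) -> float:
    """Size of the weights alone: parameters x bits, converted to bytes."""
    return num_params * bits_per_param / 8


def realtime_flops(num_params: float, tokens_per_second: float) -> float:
    """Forward-pass compute per second using the ~2 FLOPs per parameter per
    token rule of thumb for dense transformers (ignores attention cost)."""
    return 2 * num_params * tokens_per_second


if __name__ == "__main__":
    params = 1e12  # hypothetical: one trillion parameters
    bits = 4       # hypothetical: 4-bit quantized weights
    speed = 10     # hypothetical: ~10 tokens/s as "human-speed" output
    print(f"Storage: {storage_bytes(params, bits) / 1e9:,.0f} GB")
    print(f"Compute: {realtime_flops(params, speed):.1e} FLOP/s")
```

With those placeholder inputs, the sketch prints 500 GB and 2.0e+13 FLOP/s. The thought experiment then becomes: how few parameters, at how few bits, could a human-equivalent model get away with?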

While this question remains impossible to answer at this point, good questions are fun to think about precisely because the answers are beyond our reach.

🎨 🎭 The Arts & History 👩‍🎨 🎥

‘Portrait of a Lady on Fire’ (2019, original French title: Le Portrait de la Jeune Fille en Feu) 👩‍🎨🎨🔥👱🏻‍♀️

Yes, the above is a shot from the film. It looks *really* good.

They are able to ‘sculpt’ actors with light, color, and movement in a way that I’ve rarely seen.

It all feels very real and personal — like you’re there — partly because they don’t use music other than what is diegetic (so when there is music, it stands out and is particularly powerful!).

And they shot the whole thing in high dynamic range digital 8K with really vivid colors to contrast with the period costumes and beautiful locations, which we’re used to seeing in more washed-out 35mm film grain.

It works for me!

This is a slow film, all about character and small moments. It’s also about creation and art, and the relationship between the subject and the artist. It may not be for you, but if that’s your kind of thing, I recommend it.

Here’s a fairly high-level summary that could be considered mildly spoilery (only read it if you’re unsure about checking the film out and need a bit more to make a decision):

Set in France in the late 18th century, Portrait of a Lady on Fire tells the story of a passionate love affair between Marianne, a talented female painter, and Héloïse, a young aristocrat soon to be married. Marianne is commissioned to paint Héloïse's wedding portrait in secret, as Héloïse refuses to pose, leading her to observe her subject by day and paint by night. As the two women spend time together on an isolated Brittany estate, their initial artist-subject dynamic evolves into an intimate relationship, creating a powerful exploration of forbidden love, artistic creation, and the female gaze in a time when women's freedoms were severely limited.
