55: My Nvidia Q3 Highlights, John Malone Interview, Facebook AI Censorship, FANG Squeeze, TSMC + Google & AMD, EV/TAM, John Carmack, What is Taste?, Psychedelics in Canada, and the Queen's Gambit

"a data center infrastructure on a chip"

Please try to keep an open mind—not only to let new ideas in, but also to let the old ideas out!

—Larry Gonick, Cartoon History of the Universe III

What is taste, and is it transferable across domains?

Is someone with good taste in film and music more likely to have good taste in companies and people (and vice versa)? Is taste more transferable for those who are better at extracting abstract patterns, and less so for those who compartmentalize and stay at the surface level? If so, is abstracting principles and patterns a skill that can be developed, or is it mostly innate?

¯\_(ツ)_/¯

Whatever the answers to these questions, the instrumental and intrinsic value of taste is probably one of those things that are often overlooked because they aren’t quantifiable.

I certainly wish I had better taste. I mean, I think I’m ok in a few fields for an amateur, but I’m nowhere near the real pros in music and film and business and science… If only I could be like a character in Gilmore Girls or High Fidelity, with perfect encyclopedic knowledge of all eras of music and film.

❄︎ We had around 15-20cm/6-8 inches of snow last night. Meanwhile, my friend Rishi is surfing. At the same time I’m waking up, someone is falling asleep. As I make my tea, someone is falling in love for the first time, and someone else is signing divorce papers. A teen is discovering their new favorite band that they’ll still listen to when they’re 50, and someone learns that they have pancreatic cancer. The diversity of the human experience is worth remembering and feeling, once in a while…

Investing & Business

A Word From Our Sponsor

At the confluence of the Covid pandemic, unprecedented government actions, and the U.S. presidential elections, Q3 2020 was an earnings season like no other.

Last week fundamental research platform Sentieo hosted a Q3 earnings review webinar, including selected Q3 trends across sectors, overall market and sector performance, as well as an update on their election ideas.

If you missed the live webinar, you can catch the replay at Sentieo.com.

Malone & Faber’s Bromance Continues (from November 19, 2020)

There’s another short clip (3 minutes) here. CNBC appears to be holding the full interview hostage and not releasing it freely like they usually do… If you are a paying CNBC Pro sub, you can watch the full thing here.

Nvidia Q3 Call Highlights

Nvidia recently reported its Q3 earnings. To recap, the numbers looked like this:

Overall revenue: +57% year-over-year (+22% sequentially)

Gaming revenue: +37%

Data-center revenue: +162%

GAAP gross margin: 62.6%

Here’s some stuff that stood out to me in the transcript. First, on gaming:

While we had anticipated strong demand [for the new gaming GPUs], it exceeded even our bullish expectations. Given industry-wide capacity constraints and long cycle times, it may take a few more months for product availability to catch up with demand.

So gaming revenue would have been even higher if it weren’t supply-constrained.

Gaming laptop demand was also strong with double-digit year-on-year growth for the 11th quarter in a row

record gaming console revenue on strong demand for the Nintendo Switch.

we continue to grow our cloud gaming service, GeForce NOW, which has doubled in the past 7 months to reach over 5 million registered users. GeForce NOW is unique as an open platform that connects to popular game stores including Steam, Epic Games and Ubisoft Connect, allowing gamers access to the titles they already own. 750 games are currently available on GFN, the most of any cloud gaming platforms, including 75 free-to-play games, with more games added every Thursday [...] GFN's reach continues to expand through our telco partners in a growing list of countries including Japan, Korea, Taiwan, Russia and Saudi Arabia

On data-center:

Over the past weeks, Amazon Web Services, Oracle Cloud Infrastructure and Alibaba Cloud announced general availability of the A100 following Google Cloud Platform and Microsoft Azure. A100 adoption by vertical industries drove strong growth as we began shipments to server OEM partners, whose broad enterprise channels reach a large number of end customers.

began shipping NVIDIA DGX SuperPOD, the first turnkey AI infrastructure. These range from 20 to 140 DGX A100 systems interconnected with Mellanox's HDR InfiniBand networking and enable customers to install incredibly powerful AI supercomputers in just a few weeks' time

we have announced plans to build an 80-node DGX SuperPOD with 400 petaflops of AI performance called Cambridge-1, which will be the U.K.'s fastest AI supercomputer

400 petaflops is legit. Check out the latest Top 500 list of supercomputers around the world.
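A quick back-of-envelope check on those numbers, as a sketch: dividing the quoted 400 petaflops across the 80 nodes gives the per-system figure. One caveat worth keeping in mind is that Nvidia’s “AI performance” figures are based on the lower-precision math used for neural networks, while the Top 500 list ranks machines on double-precision (FP64) Linpack, so the two aren’t directly comparable.

```python
# Back-of-envelope check on the Cambridge-1 figures quoted above.
TOTAL_AI_PETAFLOPS = 400  # "400 petaflops of AI performance"
NUM_NODES = 80            # "an 80-node DGX SuperPOD"

per_node = TOTAL_AI_PETAFLOPS / NUM_NODES  # petaflops per DGX A100 system
print(f"{per_node:.1f} petaflops of AI performance per node")  # 5.0
```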

In Q3, the A100 swept the industry standard MLPerf benchmark for AI inference performance following our sweep in the prior quarter's MLPerf benchmark for AI training. Notably, our performance lead in AI inference actually extended compared with last year's benchmark. For example, in the ResNet-50 test for image recognition, our A100 GPU beat CPU-only system by 30x this year versus 6x last year. Additionally, A100 outperformed CPUs by up to 237x in the newly added recommender test, which represents some of the most complex and widely used AI models on the Internet.

I wrote about this in edition #42, if you want more details and graphs.

We estimate that NVIDIA's installed GPU capacity for inference across the 7 largest public clouds now exceeds that of the aggregate CPU capacity in the cloud, a testament to the tremendous performance and TCO advantage of our GPUs.

Mellanox had another record quarter with double-digit sequential growth well ahead of our expectations, contributing 13% of overall company revenue. The upside reflected sales to a China OEM that will not recur in Q4. As a result, we expect a meaningful sequential revenue decline for Mellanox in Q4, though still growing 30% from last year

in October, we unveiled the BlueField-2 DPU, or data processing unit, a new kind of processor which offloads critical networking, storage and security tasks from the CPU. A single BlueField-2 DPU can deliver the same data center services that consume up to 125 CPU cores. This frees up valuable CPU cores to run a wide range of other enterprise applications

We believe that over time, DPUs will ship on millions of servers, unlocking a $10 billion total addressable market. BlueField-2 is sampling now with major hyperscale customers and will be integrated into the enterprise server offerings of major OEMs

we also announced the next-generation NVIDIA Mellanox 400-gigabit per second InfiniBand architecture

Said in the voice of Doc Brown: Four hundred gigabits!

On margins:

Q3 GAAP gross margin was 62.6%, and non-GAAP gross margin was 65.5%. GAAP gross margin declined year-on-year, primarily due to charges related to the Mellanox acquisition partially offset by product mix [...]

Non-GAAP gross margins increased by 140 basis points year-on-year, reflecting a shift in product mix with higher Data Center sales, including the contribution from Mellanox
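The gap between the GAAP and non-GAAP figures, and the year-ago margin implied by the 140 bps increase, are quick to work out. A minimal sketch using only the quoted numbers (the year-ago figure is derived here by subtraction, not taken from the call):

```python
# Margin figures quoted above, in percent.
gaap_gm = 62.6
non_gaap_gm = 65.5
yoy_change_bps = 140  # non-GAAP gross margin increase, year-on-year


def to_bps(points: float) -> int:
    """Convert percentage points to basis points (1 point = 100 bps)."""
    return round(points * 100)


gap_bps = to_bps(non_gaap_gm - gaap_gm)
implied_prior_non_gaap = non_gaap_gm - yoy_change_bps / 100

print(f"GAAP vs non-GAAP gap: {gap_bps} bps")  # 290 bps
print(f"Implied year-ago non-GAAP gross margin: {implied_prior_non_gaap:.1f}%")  # 64.1%
```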

Jensen on the opportunity with inference compute:

Inference is one of the hardest computer science problems. Compiling these gigantic neural network computational graphs into a target device is really, really -- has proven to be really, really hard. The models are diverse, ranging from vision to language to speech. And there are so many different types of models being created. The model sizes are doubling every couple of months [...]

This is the early innings, and I think this is going to be our largest near-term growth opportunity. So we're really firing on all cylinders there, between the A100s ramping in the cloud, A100s beginning to ramp in enterprise, and all of our inference initiatives are really doing great. [...]

The vast majority of the world's inference is done on CPUs. And nothing is better than the whole world recognizing that the best way forward is to do inference on accelerators

On Ampere A100:

A100 is our first generation of GPUs that does several things at the same time. It's universal. We position it as a universal because it's able to do all of the applications that we in the past had to have multiple GPUs to do. It does training well. It does inference incredibly well. It does high-performance computing. It does data analytics. [...]

we're going to ramp into all of the world's clouds [...]

we're starting to ramp into enterprise, which in my estimation long term will still be the largest growth opportunity for us, turning every industry… every company into AIs and augment it with AI and bringing the iPhone moment to all of the world's largest industries

On supply-constraints:

Our growth is -- in the near term is more affected by the cycle time of manufacturing and flexibility of supply. We are in a good shape to -- and all of our supply is -- informs our guidance. But we would appreciate shorter cycle times. We would appreciate more agile supply chains. But the world is constrained at the moment.

Jensen’s vision for the data-center of the future and for Mellanox/DPUs:

Long term, every computer in the world will be built like a data center, and every node of a data center is going to be a data center in itself. And the reason for that is because we want the attack surface to be basically 0. And today, most of the data centers are only protected as a periphery.

But in the future, if you would like cloud computing to be the architecture for everything and every data center is multi-tenant, every data center is secure, then you're going to have to secure every single node. And each one of those nodes are going to be a software-defined networking, software-defined storage, and it's going to have per application security.

And so the processing that is -- that it will need to offload the CPU is really quite significant. In fact, we believe that somewhere between 20% to 40% of today's data centers, cloud data centers is the capacity, the throughput, the computational load is consumed running basically the infrastructure overhead.

And that's what the DPU is intended -- was designed to do. We're going to offload that, number one. And number two, we're going to make every single application secure. And confidential computing, zero trust computing, that will become a reality. [...]

I believe therefore that every single server in the world will have a DPU inside someday just because we care so much about security and just because we care so much about throughput and TCO. [...] It's a programmable data center on a chip, think of it that way, a data center infrastructure on a chip.
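If you take Jensen’s 20% to 40% estimate at face value, the payoff from offloading is bigger than it first looks: freeing a fraction f of a server’s capacity doesn’t add f, it multiplies application capacity by 1 / (1 - f). A minimal sketch, assuming (optimistically) that the DPU absorbs all of that overhead and nothing else changes:

```python
def capacity_multiplier(overhead_fraction: float) -> float:
    """Application capacity gained if ALL infrastructure overhead is offloaded.

    If a fraction f of capacity goes to overhead, apps get (1 - f) of it today
    and 1.0 after a perfect offload, so capacity scales by 1 / (1 - f).
    """
    if not 0 <= overhead_fraction < 1:
        raise ValueError("overhead_fraction must be in [0, 1)")
    return 1 / (1 - overhead_fraction)


# Jensen's quoted range: 20% to 40% of cloud data center capacity is overhead.
for overhead in (0.20, 0.40):
    print(f"{overhead:.0%} overhead offloaded -> "
          f"{capacity_multiplier(overhead):.2f}x application capacity")
# 20% overhead offloaded -> 1.25x application capacity
# 40% overhead offloaded -> 1.67x application capacity
```

That’s roughly a 1.25x to 1.67x gain on the same servers, before counting what the DPUs themselves cost, which is presumably why Jensen frames it in TCO terms.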

That’s it. Hope it wasn’t too long.

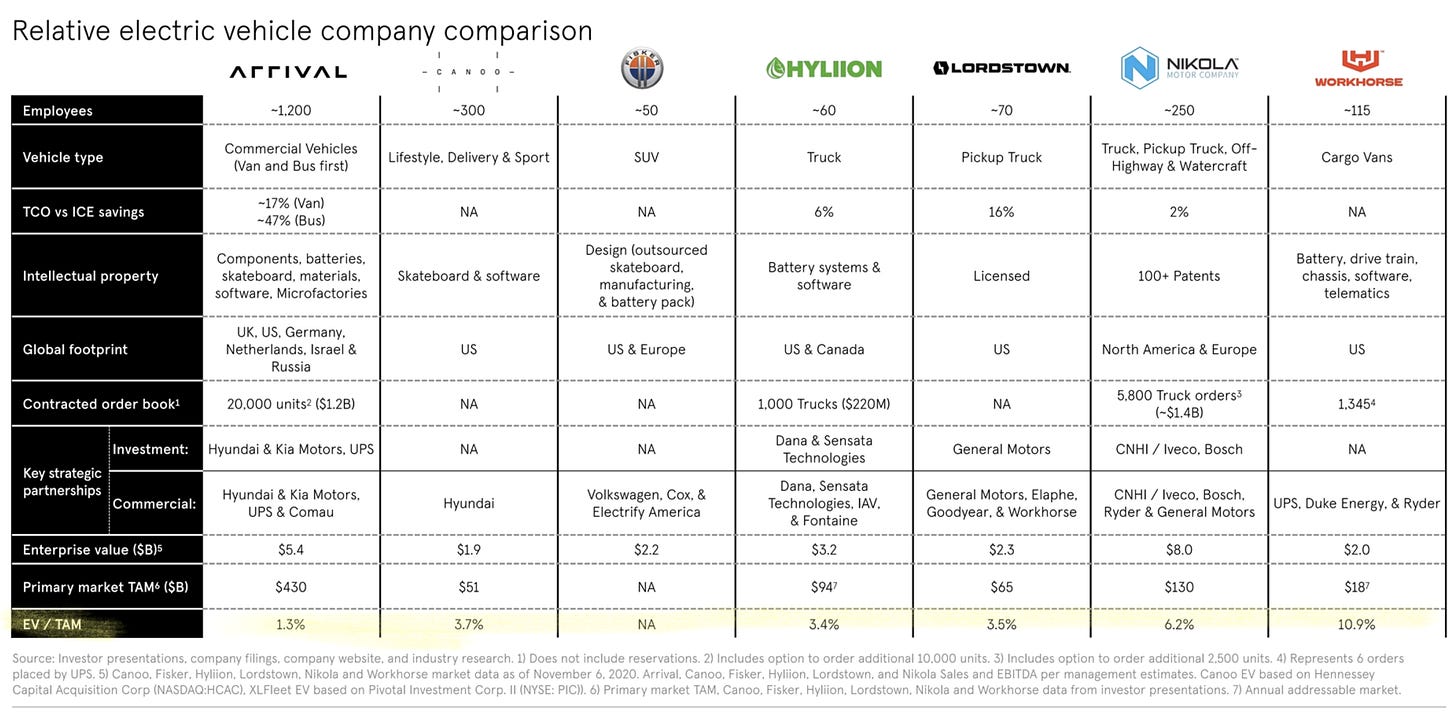

‘EV / TAM, because why not?’

Facebook’s AI-Censorship

Facebook removed 22.1 million pieces of hate speech content on its namesake social network in the third quarter, 94.7% of which was detected automatically by artificial intelligence models.

I wonder what the false positive rate is 🤔
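For a sense of scale, here’s the arithmetic on the figures quoted above. The error shares are purely hypothetical, since the numbers don’t include one. Strictly speaking, the share of removals that were mistakes is the false discovery rate (1 minus precision), which is a different quantity from the false positive rate (the share of benign posts that get wrongly flagged).

```python
# Figures quoted above for Facebook's Q3 hate speech removals.
removed_total = 22_100_000  # pieces of content removed
ai_detected_share = 0.947   # share detected automatically by AI models

ai_detected = removed_total * ai_detected_share
print(f"Detected by AI: ~{ai_detected / 1e6:.1f}M pieces")

# HYPOTHETICAL error shares: the quoted figures don't include one.
for error_share in (0.01, 0.05, 0.10):
    wrongly_removed = ai_detected * error_share
    print(f"If {error_share:.0%} of AI removals were mistakes: "
          f"~{wrongly_removed / 1e6:.2f}M pieces")
```

Even a 1% error share would mean roughly 200,000 wrongly removed pieces in a single quarter.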

SimSearchNet++ is one of the newest models Facebook uses to find harmful content. The AI, detailed today, automatically detects cases when an image flagged as containing hate speech is reuploaded elsewhere in an altered form. The company said that the AI is better at overcoming image manipulations such as crops and blurs than the earlier model it used for the task.

A lot of it seems to be finding re-uploads of things that have been flagged by humans.
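Finding altered re-uploads of already-flagged images is a classic near-duplicate detection problem. Facebook hasn’t said how SimSearchNet++ works beyond what’s quoted above, so here’s a much simpler, classic stand-in (not Facebook’s method): an “average hash” that turns an image into one bit per pixel, compared by Hamming distance. It assumes images are already shrunk to the same tiny grayscale grid, and the 3-bit threshold and the `is_reupload` helper are hypothetical choices for illustration. A hash like this survives uniform brightness changes but tends to break under crops, which is the kind of manipulation learned models are supposed to handle better.

```python
from typing import List

Image = List[List[int]]  # tiny grayscale image, pixel values 0-255


def average_hash(img: Image) -> int:
    """Pack one bit per pixel: 1 if the pixel is brighter than the mean."""
    pixels = [p for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def is_reupload(candidate: Image, flagged: List[Image], max_distance: int = 3) -> bool:
    """True if the candidate's hash is close to any already-flagged image's hash."""
    h = average_hash(candidate)
    return any(hamming(h, average_hash(f)) <= max_distance for f in flagged)


# Hypothetical 4x4 images standing in for downscaled photos.
flagged = [
    [10, 200, 10, 200],
    [200, 10, 200, 10],
    [10, 200, 10, 200],
    [200, 10, 200, 10],
]
brightened = [[min(255, p + 30) for p in row] for row in flagged]
unrelated = [
    [255, 255, 0, 0],
    [255, 255, 0, 0],
    [0, 0, 255, 255],
    [0, 0, 255, 255],
]

print(is_reupload(brightened, [flagged]))  # True
print(is_reupload(unrelated, [flagged]))   # False
```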

Facebook today also detailed RoBERTa and XLM-R, two other new models developed by its engineers. RoBERTa is a natural-language processing model that has been shown to deliver “state of the art performance,” while XLM-R likewise promises industry-leading speeds for cross-lingual understanding. Cross-lingual understanding is the process wherein a model is trained in one language and then applies its learnings to other languages, an important feature for applications such as translation services. (Source)

The language issue must be a big challenge, because not only do you need to translate, but you also need a cultural translation of what “hate speech” is. Just as slang varies more across languages than basic words (house, car, apple) do, I suspect that what qualifies as hate speech has a lot of local variation, based more on the context in locals’ minds than on the words on the page.

But that’s just my guess, I don’t really know how they do any of this ¯\_(ツ)_/¯

YouTube Squeezing the Lemon & Thoughts on FANGY Big Tech…

Don’t be surprised if you start seeing ads on videos made by smaller YouTube creators. The video-sharing website has updated its Terms of Service, and it includes a new section that gives it the right to monetize videos from channels not big enough to be part of its Partner Program. That doesn’t mean new creators can start earning from their videos right away, though — YouTube said in a forum post explaining the changes to its ToS that non-YPP members won’t be getting a cut from those ads. (Source)

YouTube has been ratcheting up the ad load on its site for a while, and now the annoyance factor is getting pretty high. Most videos have two pre-roll ads, and longer videos also have mid-roll ads. Now even videos from smaller channels, which creators can’t monetize, will have them… I feel like ad-blockers will keep getting more popular.

And as But What For? writes:

This is a weird weird incentive now in place for YouTube to push off letting people into the Partner Program.

Unless they can justify to themselves that the money will motivate this YouTuber more, they have no incentive to add them to the program...

Shareholders of Google may like a heavy ad load, but users, not so much. And while that latter detail may not matter financially in the short-term, eventually, it usually does.

I think it’s too bad that we’re in the part of the lifecycle for most big tech where a large fraction of the changes that they make are now making products/experiences worse and more annoying to users. For many years, things tended to get better as most of the organic growth came from iterative improvements and new experiences, features, and products, rather than more intense monetization of existing assets. I’m not saying it’s 100% one thing or the other now, but things are starting to lean more to that side.

Amazon is putting more and more “sponsored” listings in search results, Facebook’s ad load on its properties is getting really high and now they’re putting shopping tabs where your personal notifications used to be (“a dark pattern is a user interface that has been carefully crafted to trick users into doing things”), Google has mostly removed organic search results from above the fold on mobile and most laptops (and a lot of regular people think they’re clicking on search results, not on ads, because they kind of look the same — see the dark pattern link above), Apple is blocking game-streaming from its App Store to retain control, etc.

Kind of weird to think that Microsoft may now be one of the most customer-friendly big techs (I wouldn’t have believed it 15 years ago)…

You can only do so much of that before a large fraction of your customers move from “annoyed” to “actively disliking you”. I’m not talking about now, but what about two more years of this trend? Five years? At some point your site feels like Yahoo’s old homepage or MySpace, or people start calling you the Borg, like Micro$oft at peak hatred. I hope they have the self-awareness and self-control to pull back before going too far.

Incentives Warp Perception

An aside on this, on the whole “you are the easiest person to fool” principle…

I know many people reading this will be shareholders of at least one of these big tech companies. You have to be very honest with yourself about how much you really like what they do, their products, and services, because it’s very easy to lie to yourself when you have a monetary interest in something.

I noticed this in myself when I owned some FB stock a few years ago. After a while, I became convinced that the blue app I had never used much was actually pretty good, and that I had just never set it up properly (I muted a bunch of annoying people, followed a bunch of techy and sciency publications, etc.). I started using Instagram more too. And while it was true that my feed got better once I put a bit more effort into it, I realized I had been fooling myself after I sold the stock and my usage and opinion of the apps quickly declined by about 90% (I sold not because I thought it would do badly long-term, but because I didn’t want to own it anymore).

We all think that if we lied to ourselves, we’d be smart enough to detect it, but if it were that easy, it wouldn’t be such a widespread and pernicious problem. As humans, our brain architectures are a lot more similar than not (and the fact that we focus so much on the differences is just a variant of the ‘narcissism of small differences’), so we’re unlikely to be entirely immune to problems that affect a lot of people.

Ben Graham Follow-Up

I shared some stuff about Ben Graham in edition #54 (waitwaitwait, have I written 54 of these things in 4 months? It just kind of hit me…), and it sparked some interesting conversations.

Here’s some follow-up via John Huber (hi John!), and something he wrote in 2014:

Graham’s philosophy has always been synonymous with the “margin of safety” concept. And this extended to a focus on the balance sheet and stocks selling below their liquidation value. This represented one of Graham’s main investment strategies. But in his writing (here and also in his other books), he gives a surprisingly strong amount of weight to what he calls “The Earnings Record”.

He seems to say that while assets are important, it’s the earning power that truly creates value over time… sounds more like Buffett than Graham. This doesn’t exactly jive with his famous net-net strategy…

In our email conversation, I wrote:

Graham was a much more subtle mind than most people know, AND it’s unfair to hold it against him that things that worked in his day don’t work today. It’s like calling Alexander Hamilton dumb for not knowing about DNA...

If he were alive today, who knows how Ben Graham would be investing, but I doubt he’d have stayed entirely static in his approach almost a century later.

Bill Brewster Throwing His Hat in the Ring (Again)

A lot of you may know Bill from his co-hosting of Value After Hours with Tobias Carlisle and Jake Taylor. He’s got a new audio project, and clearly it’s a labor of love. I’ve enjoyed the first episode:

The format is quite different from the vast majority of finance-related podcasts. It’s a lot more like a real conversation than a formal interview.

There are topics (this one has longer sections on QVC and Formula 1 and touches on Charter and other businesses), but a lot of it is more about the meta-level of investing: the psychology of it, finding what works for you even if others don’t find it appealing (QVC anyone?), lessons learned over time, what you can stick with, respecting other styles even if they’re not for you, etc.

In the end, it leans more to being about the guest as a personality than about the topic(s). I think it’s a cool addition to the finance podcast ecosystem and I’m looking forward to seeing where Bill takes it over time.

Science & Technology

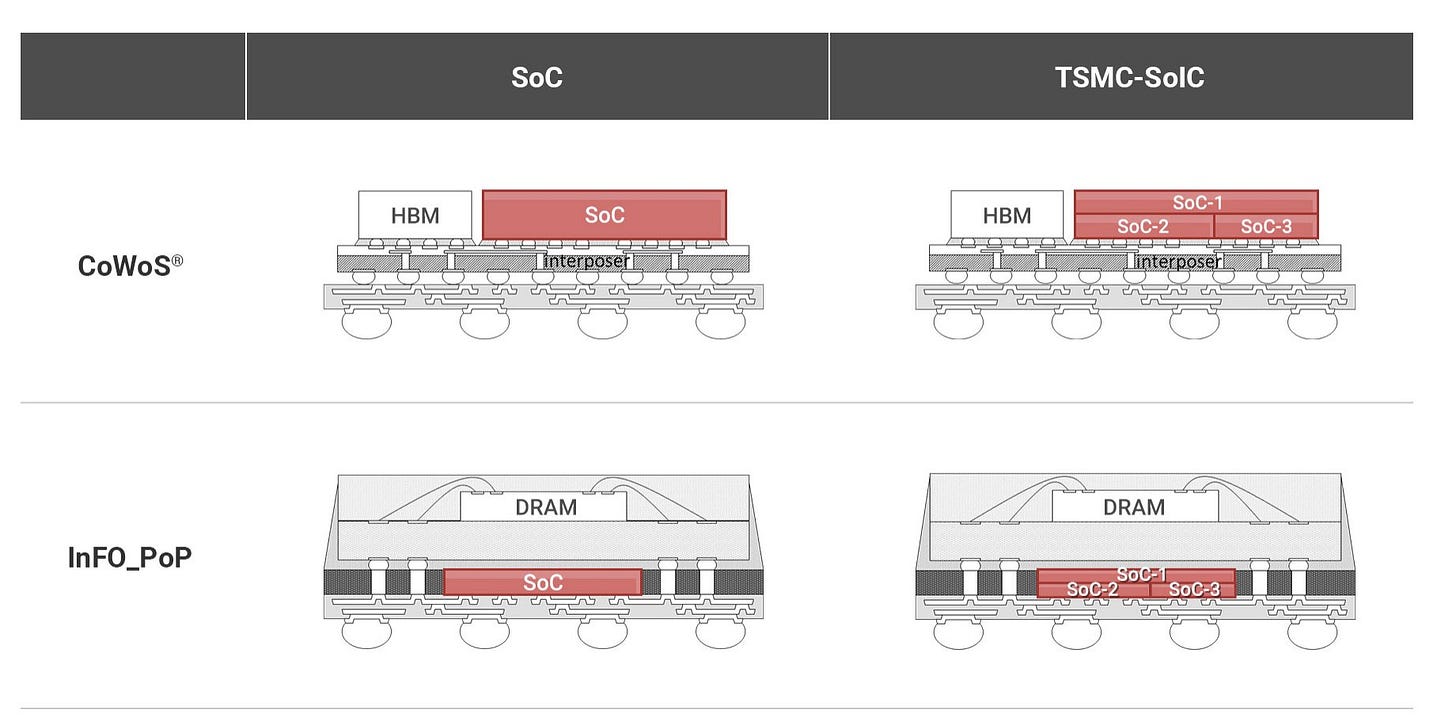

Google and AMD Lining up for TSMC's SoIC Packaging Tech

3D/vertical stacking of the various sub-components of systems-on-chips (SoCs) is hot and getting hotter:

TSMC is now taking chip packaging vertically and horizontally, using a new 3-D technology that it dubs SoIC. It makes it possible to stack and link several different types of chips -- such as processors, memory and sensors -- into one package, according to the company. This approach makes the whole chipset smaller, more powerful and more energy-efficient.

TSMC plans to employ its new 3D stacking technology at a chip packaging plant it is building in the Taiwanese city of Miaoli, people with knowledge of the matter told Nikkei. Google and Advanced Micro Devices, Intel's smaller rival, will be among its first customers for SoIC chips, the sources added, and are helping TSMC to test and certify them. Construction on the plant is slated for completion next year, with mass production to begin in 2022. [...]

[TSMC] has previously left the chip packaging services to a wide range of specialized players, including ASE Technology Holding, Amkor and Powertech, as well as rising government-backed players in China, such as Jiangsu Changjiang Electronics Technology, Tongfu Microelectronics, and Tianshui Huatian Technology Co.

With its SoIC technology, however, TSMC is set to lock its premium customers into its ecosystem for chip packaging as well (Source)

This is what it looks like (on the right):

If you’re not sure what you’re looking at, this is a side view of a package. The red rectangles are the SoC: note how on the right it’s just one thing, while on the left you have three different chips basically stacked on top of each other. And on the bottom row, you have the DRAM memory on top too. (Source of that.)

These Are the Shower Thoughts of John Carmack

The spinal cord has about the same number of neural connections as the optic nerve — to your brain, all of your body from the neck down is worth about one eye.

Total I/O from the brain is only a few million signals, and the bandwidth isn’t very high. The “brain in a jar” of SF could run over modern WiFi. (Source)
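Carmack’s WiFi quip is easy to sanity-check with a back-of-envelope calculation. Every input below is an assumption for illustration (how many channels, how often each updates, how many bits per update), not a neuroscience measurement:

```python
# Back-of-envelope brain I/O bandwidth. Every input is an ASSUMPTION chosen
# for illustration, not a measurement.
NUM_CHANNELS = 3_000_000  # "a few million signals" (Carmack's rough figure)
UPDATES_PER_SEC = 100     # assumed generous max update rate per channel
BITS_PER_UPDATE = 1       # assume each update is just "fired / didn't fire"

bits_per_sec = NUM_CHANNELS * UPDATES_PER_SEC * BITS_PER_UPDATE
print(f"~{bits_per_sec / 1e6:.0f} Mbit/s")  # ~300 Mbit/s
```

Even with generous assumptions, that lands around 300 Mbit/s, which is in the same ballpark as a fast modern Wi-Fi link.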

Carmack is a childhood hero of mine, the coder who wrote the 3D engines for Doom and Quake, among others. I still can’t believe what he was able to make run on a 486 at 33MHz with 4 megs of RAM…

Someday, I need to write more about Doom (a game that still has an active mapping and modding community to this day, btw). I was a map-maker, played LANs all night in my parents’ basement with my friends, and generally thought of little else as a 12-year-old. I still have the cardboard box of the original that I bought on floppy disks…

Psychedelics Research Boom, Canada Edition

amid the growing buzz around psychedelics, there’s been an exponential explosion in research into LSD, psilocybin, MDMA and ketamine to treat conditions such as depression, post-traumatic stress disorder (PTSD), anxiety and substance-use disorder. This comes at a time when Canadians are experiencing more mental health challenges due to the COVID-19 pandemic. [...]

On Oct. 22, Numinus Wellness Inc., a Vancouver-based company researching and developing psychedelics for psychotherapy, announced it has completed the first legal harvest of magic mushrooms by a public company in Canada. It is the first publicly traded company to be granted a licence by Health Canada to conduct research into extracting psilocybin from mushrooms for research purposes.

Earlier this year in Toronto, Field Trip Health opened what has been called the “first psychedelic-enhanced psychotherapy centre in Canada,” using ketamine, an anesthetic and psychedelic that can provide out-of-body experiences, to treat depression as well as PTSD.

Toronto’s Mind Medicine Inc. is studying the use of LSD (lysergic acid diethylamide) to treat anxiety, as well as the effectiveness of microdoses of LSD in treating adult attention deficit hyperactivity disorder.

The Arts & History

Ok, that’s funny…

The serious point here is that when I was growing up, gaming was almost only for kids because the adults didn’t have gaming around when they were growing up and few adults picked it up later in life. That’s why gaming was so associated with kids. But that was an accident of history, not a “natural” state of things.

Now we’re seeing that a lot of that generation just kept gaming as they aged, and if you project that forward, it’ll be interesting to see, in a few decades, how many 70- to 80-year-olds have been gaming for 60 to 70 years and are still playing. (Source)

(Yes, I know, plenty of people of all ages game — I meant as a more mainstream thing, and for the types of big games that we don’t necessarily associate with these cohorts right now. Say, less Candy Crush and more Call of Duty…)

The Queen’s Gambit (episode 2)

Saw the second episode of 'The Queen's Gambit' (Netflix, 2020).

Excellent. Really enjoyable and avoids most of the tropes, while hinting at them so you kind of pre-live them in your head in expectation, and then it goes somewhere else.

I like the obvious attention to detail paid to the mannerisms of the chess players (Garry Kasparov and Bruce Pandolfini were consultants and helped make both the chess and the players more realistic).

The Queen’s Gambit (episodes 3-4)

After writing the above, I watched episodes 3 and 4.

As others have said, it keeps getting better. Very well made. The way Anya Taylor-Joy embodies the character is striking. She has kind of an iconic look in some scenes, the way her hair is sculpted and the way the wardrobe makes her look. Haven’t found the right way to describe it, but it’s a good effect. Ankur Parikh on Twitter compared it to Audrey Tautou in ‘Le Fabuleux Destin d’Amélie Poulain’ (2001, known as just ‘Amelie’ in the US), and I think there’s some of that.

Very good cinematography, and almost every supporting actor gets a moment. Good stuff. Looking forward to the last 3 episodes.

Villeneuve’s ‘Dune’ Pushed Back to October 2021

I missed this bit of news when it came out, but apparently the release was moved from December 2020 to next fall. It makes sense with the pandemic, but I’m still a bit disappointed, as I’m really looking forward to it.

h/t my friend JPV

TikTok’s Distributed, Crowdsourced ‘Ratatouille’ Musical

I recently re-watched ‘Ratatouille’ (Pixar, 2007) with my kids and posted about it here.

Something remarkable is happening on TikTok. While Broadway is shut down and schools have gone virtual, theater kids and professionals alike are collaborating on the app to create a fully socially distanced musical about one particular muse: Remy the rat from Pixar’s 13-year-old Francophilia flick Ratatouille. Composers, singers, actors, musicians, dancers, and set designers have come together to write and perform original songs for a Ratatouille musical that does not exist on any stage, but is alive and ever-growing on TikTok.

The whole story is too long to recount here, but you can get all the context and see the videos of the project here (you can scroll to the bottom to see one of the latest versions of a song).