551: Anti-Charisma, Claude Sonnet 3.7, Huawei's Chips, Microsoft, SMRs, Mag7, Markel, Fairfax, Berkshire, Quantum Archeology, and J.S. Bach

"identity without physical continuity"

One should expect to find the genius, and the defects, of the human mind in its creations, as one always finds the autobiography of the artist in the art-work.

—Robert Anton Wilson

🛀💭🚢 🔄 I’ve been thinking about the 'Ship of Theseus' paradox (if every plank has been replaced over the years, is it still the same ship?) and I came up with a couple of fun thought experiments:

A communal urban graffiti wall 🎨 that has existed for decades in the same location but has had its entire surface completely repainted thousands of times. Every molecule of the original paint is long gone, the concrete base has been resurfaced multiple times, and even the surrounding building structure has been partially rebuilt.

The artwork is changing constantly. Artists are painting over previous works, sometimes incorporating elements or honoring styles of what came before.

Residents of the city recognize it as a cultural landmark. City records still refer to it as the same "Free Wall" that began in the 1970s, though not a single pigment particle from those early days remains, and the artistic styles have evolved dramatically.

What persists is the cultural significance — identity without physical continuity.

The Eternal Wine: A renowned 300-year-old wine estate that still produces the "Château Éternel" 🍷 vintage.

The original vineyard's grapevines were destroyed by phylloxera in the 1800s, then the replacement vines were later also wiped out by fungal diseases. The modern grapes are entirely different, chosen specifically to better resist modern invasive pests.

The original cellar collapsed in 1923, the oak forests that supplied the barrel wood were harvested to extinction, and the traditional fermentation techniques were abandoned after modernization. The soil chemistry has been completely altered through centuries of different fertilization methods, and the microbiome responsible for the wine's unique terroir has evolved into an entirely different mix of microorganisms.

Chemical analysis could confirm that today's "Château Éternel" shares not a single molecular compound profile with bottles from the 1700s. The flavor profile has transformed into something that would be unrecognizable to the drinkers of that era.

Yet through unbroken production it remains the "authentic" Château Éternel. Wine critics and historians still regard it as the same historic wine despite nothing—not grapes, soil, climate, production methods, flavor, or even ownership family—remaining from the original. ¯\_(ツ)_/¯

💧🧐 I’m starting to research home reverse osmosis filtration systems. Or as an alternative, Berkey seems to make pretty good gravity filters, but I’m not sure about the countertop space requirements. Stay tuned.

🎬 I saw ‘Anora’ (2024) and really liked it.

Fair warning: It’s not for everyone. I’ve seen it described as a cross between ‘Pretty Woman’ (1990) and ‘Uncut Gems’ (2019), and that’s not a bad starting point.

I’ll share my review soon.

🏦 💰 Business & Investing 💳 💴

📊 The U-shaped Theory of Charisma and Anti-Charisma 🧲

A large part of business and investing is understanding humans: trying to decide who to bet on (and, second order, who others will bet on, work for, support with capital, etc). If it were all just cold arithmetic, the spreadsheets would’ve taken over more than they already have.

Thinking about how social influence works, I noticed something interesting: there seems to be a U-shaped curve when it comes to charisma.

At one end of the spectrum, you have traditionally charismatic figures like Barack Obama, Richard Branson, or Steve Jobs. Great communicators who know just what to say, and how to say it, to make you feel a certain way.

At the opposite end, you find anti-charismatic figures who achieve similar levels of influence, but through a different route. Think early Mark Zuckerberg (awkward and robotic), young Bill Gates (back when he was in hardcore uncool nerd mode), or in the arts, Andy Warhol (I was surprised to learn that he was shy, spoke in a monotone, often gave monosyllabic answers, and was generally non-expressive). Their social awkwardness (or deliberate distance?) somehow becomes magnetic — but only when paired with exceptional talent or vision 🧠

They stand out precisely because they do extraordinary things while being different from the more traditional smooth-talking-but-ultimately-empty-suit CEOs.

The ‘valley of normal’ tends to generate less cultural impact and social magnetism. It's the extremes that captivate. 📉📈🧲

🔍 When anti-charisma actually works

The anti-charisma side of the curve only works when backed by genuine exceptional ability.

Young Gates and Zuckerberg were compelling because they were changing the world. Anti-charisma thrives in fields where concrete achievements can be measured and speak for themselves (technology, arts, innovation, engineering, investing, mathematics) rather than in areas where the product is the relationship, where social capital and human connection matter most (politics, entertainment, sales, middle management, hosting a TV show, whatever).

🔄 Crossing the U-curve

Some people have crossed from one end of the spectrum to the other.

Warren Buffett transformed from an awkward genius with limited social graces to the smooth "folksy oracle" persona of the last few decades. Bezos went from tweed Star Trek to Terminator. Zuck has similarly been transforming right before our eyes.

Sometimes it happens organically as someone matures, and other times it’s more deliberate and cynical (hiring PR teams, etc). It usually coincides with the need to communicate to wider audiences to achieve goals and reach new summits. 🏔️

💎 The authenticity premium

Both ends of the U-curve share one critical feature: perceived authenticity.

Traditional charisma works when it feels genuine rather than manipulative. Anti-charisma works precisely because it appears unfiltered and raw.

This explains why audiences often forgive or even celebrate the social quirks of anti-charismatic figures while growing suspicious of polished but inauthentic valley folks. We have built-in BS detectors that respond to authenticity, whatever form it takes. 🧠

⚠️ The dark side of anti-charisma

Be careful though — this dynamic works for frauds too!

Mike Pearson of Valeant was celebrated partly because his anti-charisma created an impression of authenticity (the gruff, unpolished presentation). "He's too focused on business to care about that stuff" was part of the sales tactic.

Sometimes what looks like authenticity is itself a performance. The line between genuine anti-charisma and someone playing a role (the carefully crafted deception of Elizabeth Holmes, for example) can be surprisingly thin, and the gullible are left holding the bag 💸

I’m sure there are other examples of anti-charisma, let me know what comes to your mind 🤔

🐜🇨🇳 Huawei Doubles AI Chip Yields to 40%, Fabbing Them is Now Profitable 💸🫷

This is a big deal for China, which is facing US export controls on advanced semiconductors:

Huawei has significantly improved the amount of advanced artificial intelligence chips it can produce, in a key breakthrough that supports China’s push to create its own advanced semiconductors.

The Chinese conglomerate has increased the “yield” — the percentage of functional chips made on its production line — of its latest AI chips to close to 40 per cent, according to two people with knowledge of the matter. That represents a doubling from 20 per cent about a year ago.

The move represents an important advance for Huawei, which has been rolling out its latest Ascend 910C processors, which offer better performance than its previous 910B product.

The 40% yields apparently make the chips profitable for Huawei, and their goal is now to further increase yields to 60%, closer to the industry average.
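To see why doubling yield matters so much for profitability, here's a back-of-the-napkin sketch. The wafer cost and dies-per-wafer numbers are hypothetical placeholders I picked to make the arithmetic easy (they are not Huawei's actual figures); only the 20%, 40%, and 60% yields come from the article.

```python
def cost_per_good_chip(wafer_cost, dies_per_wafer, yield_rate):
    """Cost of one functional chip when only a fraction of the dies work."""
    if not 0 < yield_rate <= 1:
        raise ValueError("yield_rate must be in (0, 1]")
    good_dies = dies_per_wafer * yield_rate
    return wafer_cost / good_dies


# Hypothetical inputs, chosen only to keep the numbers round.
WAFER_COST = 10_000.0
DIES_PER_WAFER = 50

# Yields from the article: a year ago, today, and the stated goal.
for yield_rate in (0.20, 0.40, 0.60):
    cost = cost_per_good_chip(WAFER_COST, DIES_PER_WAFER, yield_rate)
    print(f"yield {yield_rate:.0%}: {cost:,.0f} per good chip")
```

Whatever the real wafer cost is, the ratio holds: going from 20% to 40% yield cuts the cost of each working chip in half, and reaching 60% would cut it to a third of the original.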

China is still behind the leading nodes that TSMC is producing, and its lack of access to ASML’s extreme ultraviolet (EUV) lithography remains a big roadblock. But it can partly get around that through 1) clever optimizations, as DeepSeek has shown, and 2) its sheer ability to put shovels in the ground and build stuff.

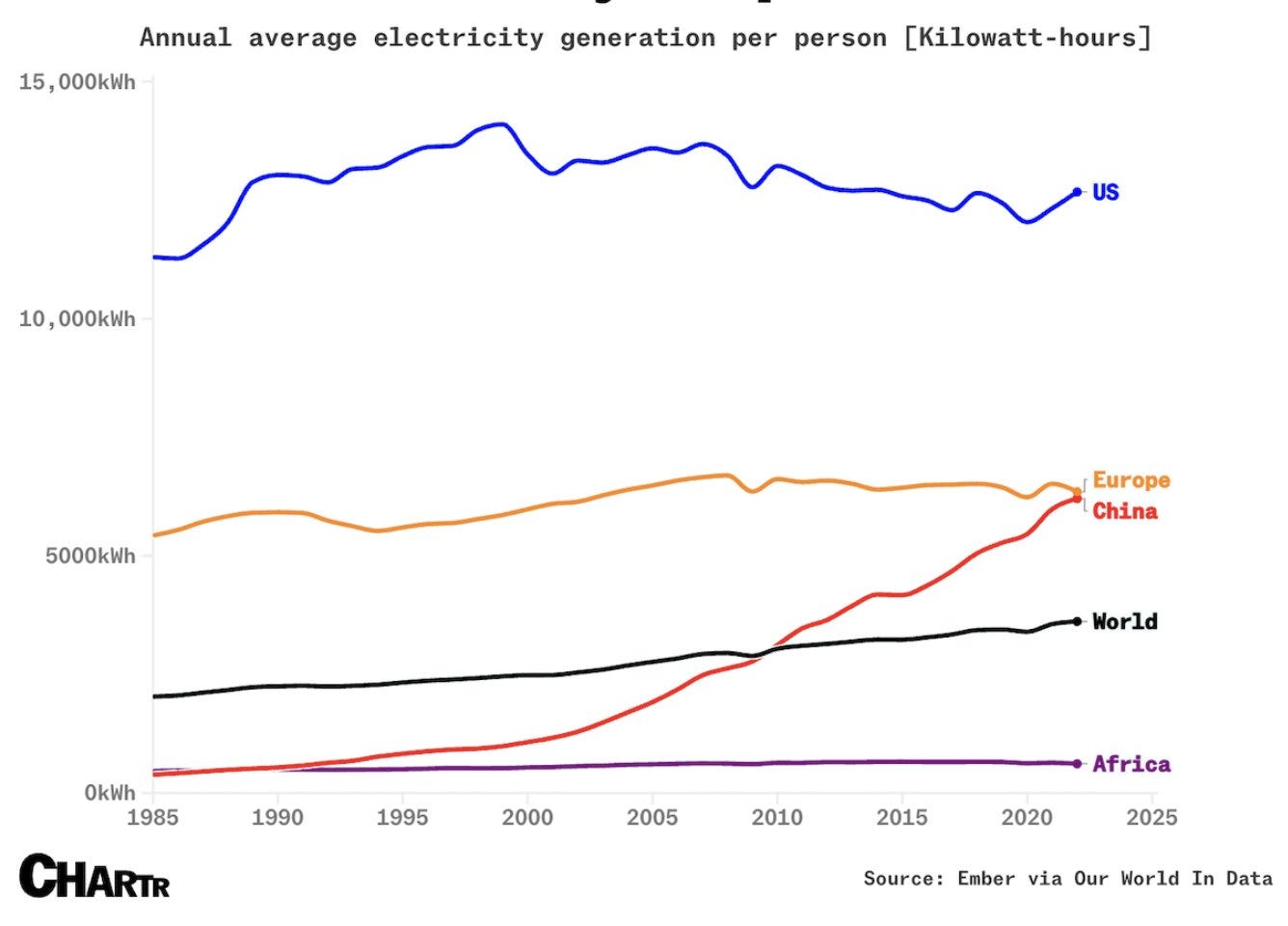

It doesn’t matter too much if it takes them more GPUs and more energy than Western AI firms that have access to TSMC/Nvidia chips if the ability to build data-centers and power plants becomes the real bottleneck. That’s a race that China has a much better chance of being competitive in, even while operating at a disadvantage.

Right now, the Huawei chips are mostly competitive for inference, not training. Nvidia is still selling plenty of H20s (there are reports that Tencent, Alibaba, and ByteDance have "significantly increased" orders of the H20 after DeepSeek came out), at least for now — we can probably expect more export controls to hit soon. But Huawei is working on making its Ascend chips better for training by improving interconnectivity, memory speeds, software, and other critical components.

🤖💭 Microsoft Offers o1 Reasoning for Free in Copilot

The commoditization of AI continues apace, with reasoning models and voice mode now becoming increasingly available for free:

Microsoft made OpenAI’s o1 reasoning model free for all Copilot users last month, and now it’s providing unlimited use of this model and Copilot’s voice capabilities to everyone. Previously, both Think Deeper (powered by o1) and Voice in Copilot had limits for free users, but Microsoft is removing these today to allow Copilot users to have extended conversations with the company’s AI assistant.

I don’t know if it’s DeepSeek putting pressure on them or just competition from other reasoning models (Gemini 2.0 Flash Thinking, Grok 3, and now Anthropic’s Claude 3.7).

Are we witnessing LLMs becoming bifurcated? Are consumer LLMs destined to become free utilities (ad-supported), while paid tiers evolve to focus primarily on enterprise requirements like data security, guaranteed uptime, and compliance features? ☑️ ☑️ ☑️

⚛️ Holtec wants to build 10GW of Small Modular Nuclear Reactors in the US, Starting with Palisades in Michigan ⚛️

Good news!

The owner of the Palisades nuclear plant, Holtec International, signed a strategic agreement with Hyundai Engineering and Construction on Tuesday to build a 10 gigawatt fleet of small modular reactors in North America, starting with two units at the Palisades site. Holtec is aiming to bring the original Palisades reactor back online in October subject to approval by the Nuclear Regulatory Commission. It would be the first restart of a closed nuclear plant in U.S. history.

The first, but not the last, I hope!

The Palisades plant permanently closed in 2022, part of a wave of reactor shutdowns as nuclear struggled to compete against cheap natural gas. Palisades started commercial operations in 1971. The plant has a generating capacity of 800 megawatts, enough to power hundreds of thousands of homes. The two 300-megawatt small modular reactors would nearly double the plant’s capacity.
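A quick sanity check on the capacity numbers in that quote. The 800 MW and 2 x 300 MW figures come from the article; the fleet-size estimate at the end assumes every unit in the 10 GW fleet would be a 300 MW reactor, which is my assumption and not something the article states.

```python
EXISTING_REACTOR_MW = 800   # original Palisades reactor (from the article)
SMR_UNIT_MW = 300           # each small modular reactor (from the article)
PLANNED_SMR_UNITS = 2       # units planned for the Palisades site

added_mw = SMR_UNIT_MW * PLANNED_SMR_UNITS
site_total_mw = EXISTING_REACTOR_MW + added_mw
growth_factor = site_total_mw / EXISTING_REACTOR_MW

print(f"Site capacity: {EXISTING_REACTOR_MW} MW -> {site_total_mw} MW ({growth_factor:.2f}x)")

# Assumption (not in the article): the whole 10 GW fleet uses 300 MW units.
FLEET_TARGET_MW = 10_000
fleet_units = FLEET_TARGET_MW / SMR_UNIT_MW
print(f"A 10 GW fleet would need roughly {fleet_units:.0f} such units")
```

So "nearly double" works out to about 1.75x for the site, and under the 300 MW assumption the full fleet would be somewhere in the low thirties of units.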

It’s just insane that we have built these amazing assets that can generate gigawatts of clean electricity 24/7, and they can be periodically refurbished, like Ships of Theseus (again), making their useful lives open-ended — but we’re shutting them down and letting them rot… 😫

Hopefully, the recent realization that we need lots of reliable power for AI and for manufacturing is going to sharpen minds and get us back on the smarter path.

Brooklyn Investor on Mag7, Markel, Fairfax, and Berkshire

One of my inspirations for starting this newsletter is Brooklyn Investor. I really like how he thinks things through via writing and takes the reader along with him.

I’ve been reading him since 2011, and over that 14-year period he’s taken long breaks, but he recently published a couple of pieces and I wanted to highlight interesting parts:

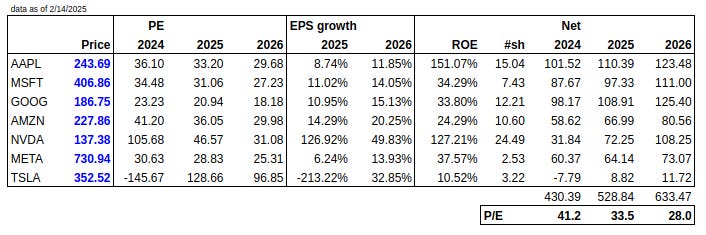

Let’s say the Mag 7 was a modern-day, techful version of a conglomerate like BRK. Its subsidiaries have grown tremendously in the past 5 and 10 years. Earnings will collectively grow 23% in 2025 and 19% in 2026 (willful suspension of disbelief may be key here), and look at the high ROE of each division (OK, I was too lazy to calculate a weighted average).

And this conglomerate is trading for 34x earnings! Or less than 30x if you don’t want NVDA and TSLA. Think about that for a second. How many ideas with those metrics can you find? Let us know in the comments!

He’s clear in the extended writeup that this isn’t a recommendation to buy Mag 7, but this ‘virtual conglomerate’ is an interesting way to think about it and do a ‘sanity check’ on valuation.

Of course, things are always more complex than the napkin math — there are changes in these businesses as capex needs increase because of AI, etc.

Second post:

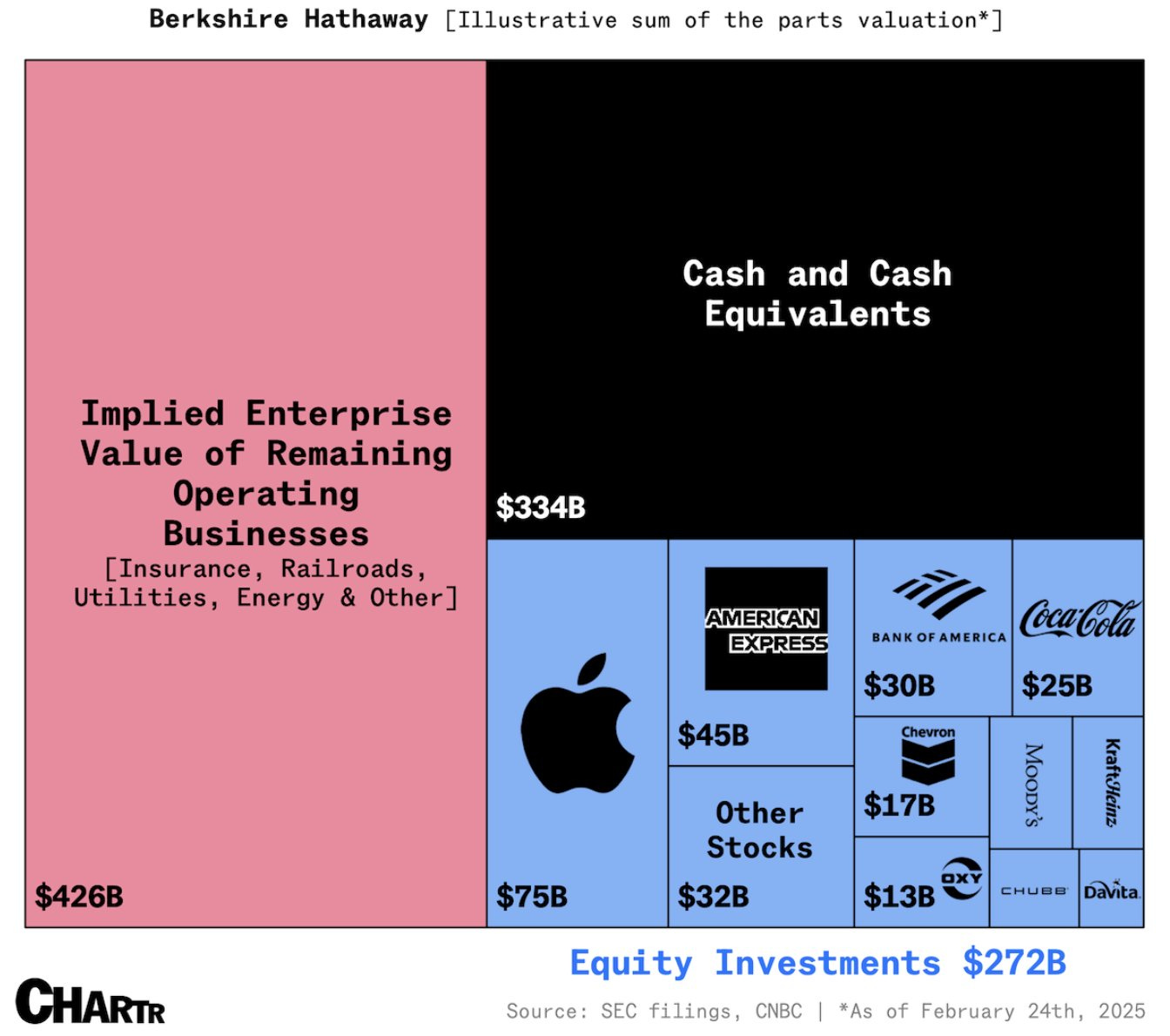

BRK has $650 billion in shareholders’ equity, and $272 billion is invested in stocks. Sure, that looks low, but a lot of BRK net worth is tied up in equity of their wholly-owned/consolidated and equity-method investments. For example, the Railroad, Utilities and Energy segment has equity of $130 billion. That’s as much ‘equity’ as stocks (but just not marked to market every day). That brings up the total equity exposure to $402 billion.

The last time the Manufacturing, Services and Retail had their own balance sheet reported was in 2016, and then, it was $92 billion. It is probably much higher than that now due to acquisitions since then. But let’s just say it’s $92 billion. Add that and we get to $494 billion.

And then there are the equity method holdings like KHC and OXY. Just because they are not included in stock holdings section does not make them any less ‘equity’. Together, they’re on the books at $31 billion, so that takes us up to $525 billion. Yes, market value is lower than that, but since we are comparing this to book value, we will use carrying value. That’s already 80% of BRK’s shareholders equity exposed to equity. Not quite the conservatism you would imagine from the $300+ billion of cash on the books.
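The running total in that passage is easy to lose track of, so here is the same arithmetic as a small sketch. All figures are in billions of dollars and come straight from the quoted text; the labels are my own shorthand.

```python
# Figures in billions of USD, taken from the quoted passage.
SHAREHOLDERS_EQUITY = 650

equity_like_holdings = [
    ("Listed stocks", 272),
    ("Railroad, Utilities and Energy equity", 130),
    ("Manufacturing, Services and Retail (2016 figure)", 92),
    ("Equity-method holdings such as KHC and OXY", 31),
]

running_total = 0
for label, amount in equity_like_holdings:
    running_total += amount
    print(f"{label:<50} +{amount:>4}  ->  {running_total:>4}")

exposure = running_total / SHAREHOLDERS_EQUITY
print(f"Equity exposure: {exposure:.1%} of shareholders' equity")
```

The steps match the quote (402, then 494, then 525), and 525 out of 650 is a bit under 81%, which is where the "80%" comes from. Since the $92 billion is a 2016 number, the real figure is probably higher.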

Another way to look at it, based on market cap:

🧪🔬 Science & Technology 🧬 🔭

🎁🤖 Claude Sonnet 3.7 is out! (hybrid reasoning model)

My most anticipated model is out, and so far, I’m impressed!

Anthropic confirmed that Sonnet 3.7 *isn’t* a 10^26 FLOP model, unlike Grok 3, so it’s still part of the broad GPT-4 generation in size. That’s probably the reason they didn’t call it “4.0”. That makes me very curious to see what Anthropic can do with an order of magnitude more training compute for their next big release 🤔

But whatever secret sauce Anthropic is using to improve this generation, it appears to be working, at least based on benchmarks and my early hands-on testing. I suspect that they have larger, uneconomic Opus-class models that they use internally to create high-quality synthetic data for the smaller models.

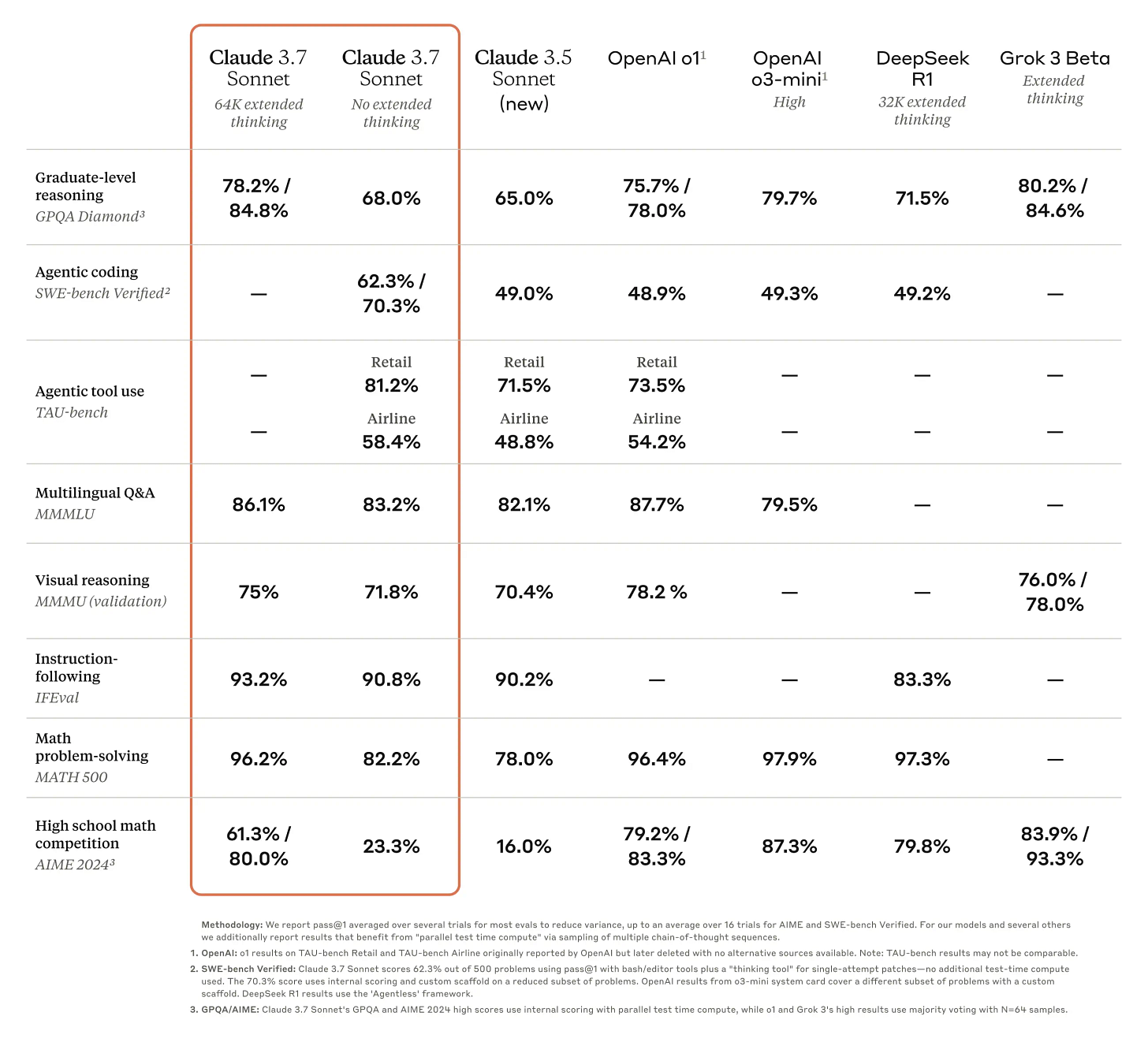

The first thing to note is that Claude Sonnet 3.7 has a new “Extended Thinking” mode that allows it to do test-time compute and reason through things in a scratch pad. This is similar to OpenAI’s o1 and o3 models and DeepSeek’s R1.

Two scaling laws are now firmly in the driver’s seat of AI: 1) Bigger training = better performance, and 2) More processing time = better reasoning. It's like having both a bigger brain and more time to think.

For some things, this extra reasoning may not make a big difference, but for the most complex problems, it’s a big deal: On the MATH500 benchmark, Sonnet 3.7 went from 82.2% to 96.2% thanks to the reasoning mode, and on the AIME 2024 high school math competition benchmark, it went from 23.3% to the 61.3-80% range (depending on exact methodology). That’s a huge improvement!

Anthropic says it wants to give users of the API version the ability to tune how long they want the model to think, which is nice and something I was asking for last year.

More good news:

Anthropic also says Claude 3.7 Sonnet will refuse to answer questions less often than its previous models, claiming the model is capable of making more nuanced distinctions between harmful and benign prompts. Anthropic says it reduced unnecessary refusals by 45% compared to Claude 3.5 Sonnet.

Another benefit is that the model’s knowledge has been updated to the end of October 2024.

I also like the transparency of making the system prompt public. These are the invisible instructions given to Claude to shape how it answers and its personality.

Sonnet 3.7 isn’t ranked yet on the LLM Chatbot Arena Leaderboard. I wonder where it’ll settle 🤔

Something very important: AI isn't just about automation. These models are good enough that we're entering the era of AI as genuine intellectual partner. Organizations stuck in the "let's automate this task" mindset are going to miss the forest for the trees.

Too many people try these tools but keep their old habits — they use them like they’re a search engine, instead of a partner to iterate back-and-forth with. They don’t realize that you could ask the same question 5 times and get 5 different answers, and that how you phrase a prompt can have a huge influence on the quality of the results.

In fact, the qualities that make someone a good brainstorming partner with humans are probably pretty similar to those that make someone good at getting the most out of AI 🤔

🪄🔮 Quantum Archeology 🌌🧠

This is kind of a sci-fi concept, but very cool:

Quantum archaeology suggests that nothing truly disappears; it just gets scrambled into an impossibly complex puzzle. Every interaction, thought, and moment leaves its mark on the fabric of reality, preserved in a vast web of quantum information. It's not that the past is gone—it's that we haven't yet learned to read the evidence written into the very structure of space-time itself.

Imagine a detective story where the crime scene is all of history, and every particle in the universe is a witness holding a tiny piece of the truth.

This opens up a possibility that sounds like science fiction but follows from actual physics: if we could develop technology sophisticated enough to decode these quantum signatures, we might be able to reconstruct any person from any moment in history, complete with their memories and consciousness. It's as if the universe keeps a perfect backup of everything that ever happens, and we're just beginning to learn how to access it (some future fusion of information theory, quantum computing, and technologies we haven't yet imagined).

The implications are profound: the past is more like encrypted data than permanently erased, just waiting for the right key to unlock it.

As I said, it’s science fiction. Don’t expect anything like this any time soon. But if the laws of physics allow it… well! Perhaps someday, some way.

🎨 🎭 The Arts & History 👩🎨 🎥

🎼🔬 J.S. Bach’s Music Analyzed with Tools from Physics and Claude Shannon’s Information Theory 🎵 ⚗️

I love when separate fields collide.

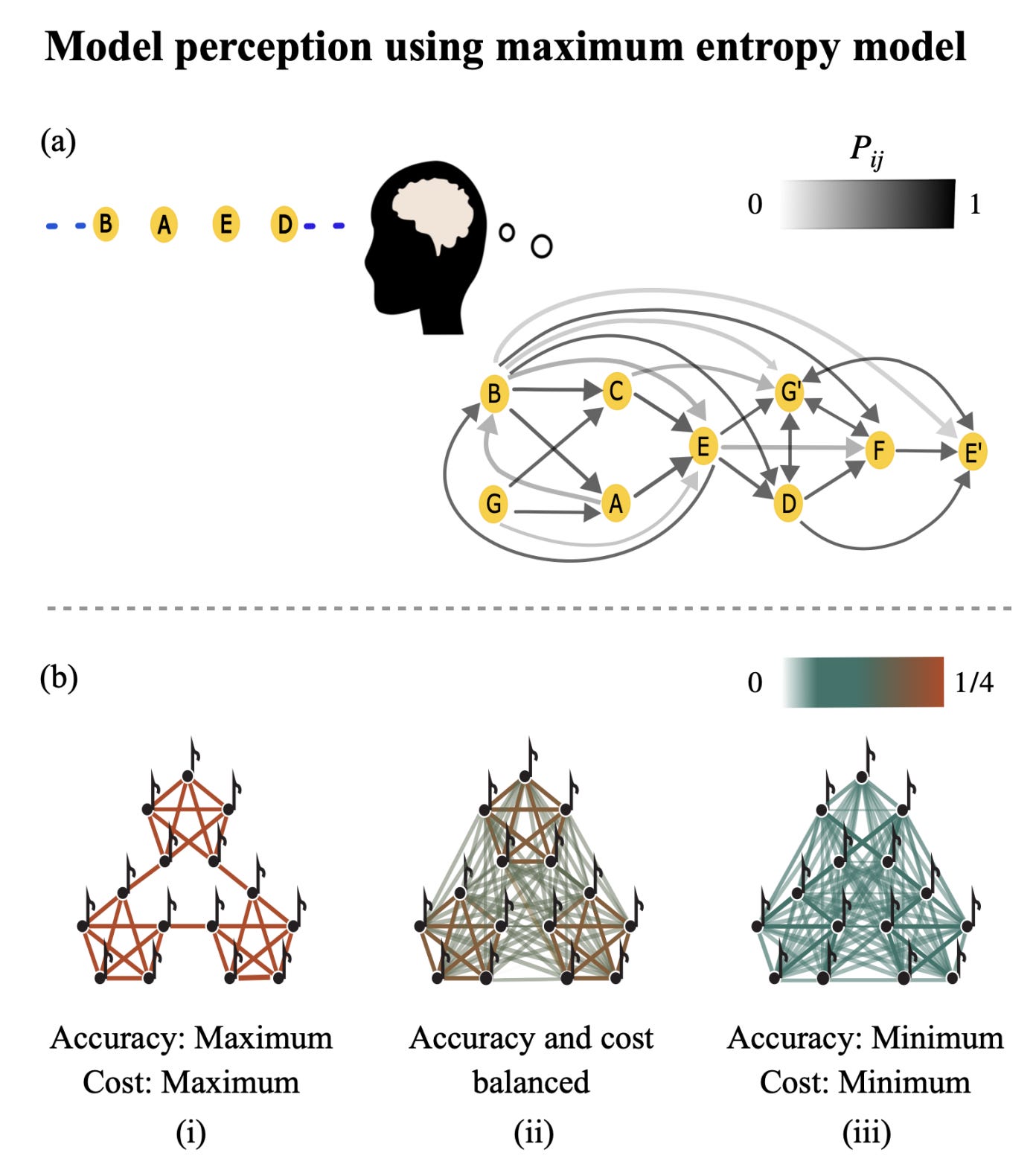

Researchers took Bach's compositions and turned them into complex networks, with each note becoming a node and each transition an edge. They analyzed 337 pieces, from his stately chorales to those amazing fugues.

It's like watching someone apply modern data science to decode the DNA of musical genius:

Baroque German composer Johann Sebastian Bach produced music that is so scrupulously structured that it’s often compared to math. [...]

“We used tools from physics without making assumptions about the musical pieces, just starting with this simple representation and seeing what that can tell us about the information that is being conveyed.”

Researchers quantified the information content of everything from simple sequences to tangled networks using information entropy, a concept introduced by mathematician Claude Shannon in 1948. [...]
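To make the "notes as nodes, transitions as edges" idea concrete, here's a minimal sketch in Python. It is a simplified illustration, not the researchers' actual method: the melody is a made-up toy sequence (not Bach), the function names are mine, and the entropy measure is just the average Shannon entropy of the "what note comes next?" distribution, weighted by how often each note starts a transition.

```python
from collections import Counter, defaultdict
from math import log2


def build_transition_network(notes):
    """Map each note to a Counter of the notes that directly follow it."""
    edges = defaultdict(Counter)
    for src, dst in zip(notes, notes[1:]):
        edges[src][dst] += 1
    return edges


def transition_entropy(edges):
    """Average Shannon entropy (in bits) of the next-note distribution,
    weighted by how often each source note starts a transition."""
    total = sum(sum(counts.values()) for counts in edges.values())
    entropy = 0.0
    for counts in edges.values():
        out = sum(counts.values())
        node_entropy = -sum((n / out) * log2(n / out) for n in counts.values())
        entropy += (out / total) * node_entropy
    return entropy


# Toy melody for illustration only (hypothetical, not taken from any Bach piece).
melody = ["C", "D", "E", "C", "E", "D", "C", "D"]
network = build_transition_network(melody)

for note, followers in sorted(network.items()):
    print(note, "->", dict(followers))
print(f"Entropy: {transition_entropy(network):.3f} bits per transition")
```

A perfectly predictable sequence (say, A-B-A-B forever) would score zero bits, while a sequence where any note can follow any other with equal odds would score the maximum. Interesting music lives somewhere in between.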

What did they find? 🧮 🎻

That Bach wasn't just a musical genius — he was practically a cognitive scientist before the field existed! 🧠

His compositions are structured to accommodate human cognitive biases in information processing. It's like he was intuitively solving an optimization problem: how to pack maximum complexity and beauty into music while keeping it learnable by the human brain.

This reminds me of how elite chess players can memorize board positions much better than novices, but only when the positions make strategic sense. ♟️

He partly solved it with an abundance of transitive triangular clusters (where note A transitions to B, B transitions to C, and A also transitions to C), which make the music more learnable while maintaining the complexity that makes Bach... well, Bach.

These structures create a kind of cognitive scaffolding that helps listeners build accurate mental models of the music without sacrificing information richness. He somehow found the mathematical sweet spot between predictability and surprise that appears optimized for human cognitive processing, potentially explaining why his music still hits so hard centuries later, despite its apparent mathematical complexity.
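For the curious, a transitive triangle is simple to detect once the music is a set of directed edges. This is a small sketch under my own assumptions: the note sequence is a toy example (not Bach), the function names are hypothetical, and repeated notes are ignored so that self-loops don't count.

```python
from itertools import permutations


def directed_edges(notes):
    """Set of (from_note, to_note) transitions, ignoring repeated notes."""
    return {(a, b) for a, b in zip(notes, notes[1:]) if a != b}


def transitive_triangles(edges):
    """All (a, b, c) where a->b, b->c, and the shortcut a->c all exist."""
    nodes = {note for edge in edges for note in edge}
    return sorted(
        (a, b, c)
        for a, b, c in permutations(nodes, 3)
        if (a, b) in edges and (b, c) in edges and (a, c) in edges
    )


# Toy sequence for illustration only (hypothetical, not from a Bach piece).
toy = ["C", "E", "G", "C", "G"]
edges = directed_edges(toy)
print(transitive_triangles(edges))
```

In the toy sequence, C goes to E, E goes to G, and C also goes straight to G, so (C, E, G) is the one transitive triangle. The brute-force check over all ordered triples is fine for a handful of notes; a real analysis over hundreds of pieces would want something more efficient.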

The full paper is here if you want to dig in deeper.

And if you are new to Bach, I recommend starting with Glenn Gould’s 1955 Goldberg Variations. It's a masterclass in mathematical beauty turned into music 🎹

I used a Berkey for a few years. I stopped using it because I wasn't sure if it was doing anything (was the water going through the filters or leaking through the seals?) and remembering to clean the filters was a hassle. I've been using a countertop distiller for over two years and like the experience much better. It has a similar footprint but you need an outlet nearby.

Great issue, Liberty! I'm interested to hear your findings on the water filters. Ever since our town discovered PFAS in the water, I've been looking into solutions. I was told that reverse osmosis is the only effective treatment, but I ended up opting for delivered water that already goes through that process.

Also, regarding Anti-Charisma… Bill Belichick immediately came to mind. He's an absolute football genius who sits at the far end of the U-curve. Most coaches are on the traditionally charismatic side.