571: Jensen's Uber for GPUs, OpenAI Wants Microsoft to Sleep on the Couch, xAI Burn Rate, Meta's $100m AI Engineers, Coffee & Health, Noise & Sleep, and Bob Dylan

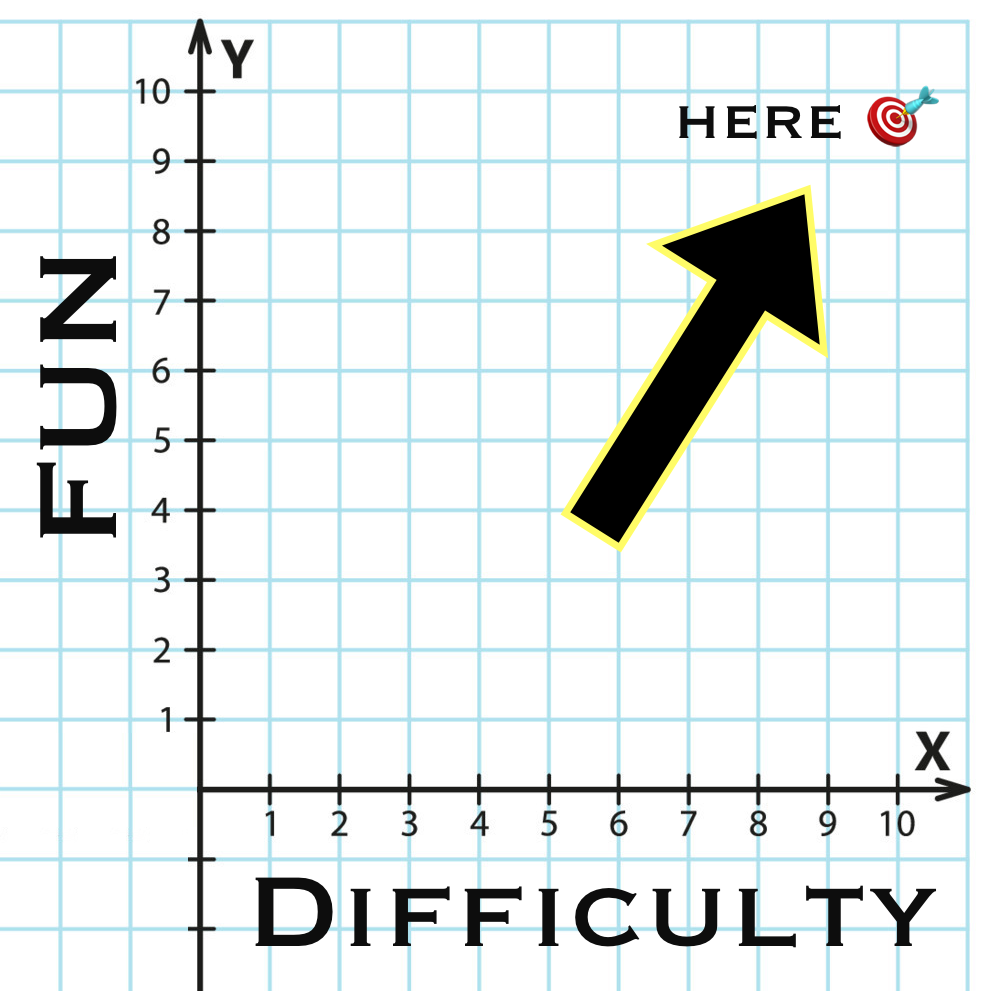

"Let’s call it the Asymmetric Enjoyment Filter."

A great life is nothing more than a series of days well lived strung together like a string of pearls.

—Robin S. Sharma

🛀💭 If it was easy, everyone would do it.

But the distinction that matters most for success isn’t between easy and hard.

It’s between what you find fun and what you don’t.

I’m sure there are some hard things you genuinely enjoy.

That’s a good place to look for opportunities.

Those are the things you’ll stick with long-term, outwork others on, think about after hours, and actively research beyond the point where others have given up or gotten distracted by the next shiny thing.

I know I don’t have to tell you why long-term, sustained interest matters: Compounding. 📈

Ideally, figure out what almost everyone else finds boring and hard, but that you find energizing and, not easy, but… easier.

A challenge is good — you don’t want it to be *too* easy, otherwise you’ll eventually get bored 🥱 — but if it’s relatively easier for you than for others, that’s a real advantage.

Let’s call it the Asymmetric Enjoyment Filter.

🎸🔍😅 I’m in trouble!

As I wrote recently, I bought an acoustic guitar after 20+ years of not playing… and I’ve already started looking at electric guitars 😬

Playing every day has made me realize a few things:

The reasons I gave it up back then no longer apply, but I never revisited the decision. (What else should I revisit? 🤔)

The acoustic guitar was the easiest way to test whether I wanted to get back to playing, but at heart, I’m more of an electric guy. However, it’ll be great to have both.

Know thyself: Some things get better when you put some pressure on yourself, others get worse. When I was younger, I judged myself harshly as a guitar player, comparing myself to others. It frustrated me, made me lose interest, and took the fun out of practice. A bad mindset can ruin a good thing.

There are SO MANY more resources online for autodidacts now. It was very different for a fan of fairly underground music in the early days of the web. I couldn’t find most of what I looked for, and the quality was very hit & miss.

Nowadays, I don’t care if I ever get ‘good’ at my hobbies. I just enjoy learning new things and slowly growing my skills.

I feel *intrinsic pleasure and satisfaction* when I hold the guitar, when I play melodies and chords.

It’s like the Rafael Nadal line, “I love to hit the ball” 🎾

I don’t have any goal other than having fun.

Which makes me play a lot more.

Which explains why I’ve made so much progress in just a couple of weeks 😃

I’m not kidding myself, I know I’m in the honeymoon period, and I won’t always be this excited. But I feel like it’ll stay a great long-term hobby. My passion for music has never faded, and I still get a kick when I nail a new melody, chord progression, or riff after struggling with it for a while.

That’s plenty for me.

I’m not chasing mastery. I’m just chasing joy.

Soon, I’ll show you the electric guitar that I’m considering buying. It’s not in stock anywhere, so I’ll have to wait a while to get it, but hey, something to look forward to…

💚 🥃 🙏☺️ If you’re a free sub, I hope you’ll decide to become a paid supporter.

You’ll unlock access to paid editions, the private Discord, and get invited to the next supporter-only Zoom Q&As with me.

If you get just one good idea 💡 per year, it’ll more than pay for itself. If it makes you curious about new things, that’s priceless.

Can't upgrade right now? Share something you enjoyed here with someone who might like it too. Drop a screenshot and a link in your group chat! 💬

🏦 💰 Liberty Capital 💳 💴

😠🗯️🛋️ Redrawing the Prenup: OpenAI Wants Microsoft to Sleep on the Couch 🤔

The honeymoon period of 2022-2023 between Sam Altman and Satya Nadella is definitely over. They’ve gone from power couple to power struggle 💔

As OpenAI’s star has risen ⭐, the balance of power between the senior and junior partners has shifted, and the two have increasingly found themselves at odds.

Last year, we saw Microsoft hit its limit on how many tens of billions it was willing to invest in capex to provide OpenAI with compute. That dissolved the initial Azure exclusivity and opened the door for OpenAI to seek other deep-pocketed moneybags to fund the Stargate project (*cue Masayoshi Son and Larry Ellison*) 💰💰💰

The latest matrimonial dispute has to do with what happens when OpenAI converts to a for-profit (still with a non-profit board and a weird structure, but cleaner than what they have now).

How much of the new entity will Microsoft own? How will the existing IP rights and profit-sharing agreements be interpreted going forward?

OpenAI wants Microsoft, the startup’s biggest outside shareholder, to have a roughly 33% stake in the reshaped unit in exchange for foregoing its rights to future profits, according to a person who spoke to OpenAI executives.

OpenAI also wants to modify existing clauses in its contract with Microsoft that give the software firm exclusive rights to host OpenAI models in its cloud, and it wants to exempt a planned $3 billion stock acquisition of AI coding startup Windsurf from the existing contract between the parties that grants Microsoft access to OpenAI intellectual property, according to that person and another person who spoke to Microsoft executives about it. (Source)

Windsurf competes with GitHub Copilot, even if they’re not quite the same thing. But once OpenAI integrates it with its Codex stack, I think they’ll be much more head-to-head.

So that’s another vector of friction and competition between the partners. 💥🥊

The current partnership gives Microsoft access to all of OpenAI’s IP. From their point of view, the coding data from Windsurf could be extremely valuable to further differentiate/improve GitHub Copilot, which has been falling behind new entrants like Cursor.

Early on, these coding AI tools were mostly front-ends for third-party foundation models (like Anthropic’s Claude, which has been particularly good at coding). But over time, first-party user data and internally developed models have been adding a lot of value on top, managing the complex orchestration required to build more advanced software and differentiating these tools from standalone models. 💻 💾

Microsoft would love to get its hands on that for the same reason that OpenAI wants to keep it to itself.

Microsoft has a lot of leverage because, well, they acted as a kind of Super VC that provided OpenAI a crapload of funding & compute back when OpenAI was tiny, had no revenue, and everything was still uncertain (back in ye olden days of 2019).

Their track record at the time? Mostly building AIs to play Dota 2 and doing reinforcement learning for robotic hands. 🕹️

The largest LLM they had was GPT-2.

GPT-3 would only come out for the general public two years later in November 2021 (though there was researcher/dev access earlier).

It’s always easy to rewrite history with the benefit of hindsight and say that someone got an obviously good deal when they invested, but that’s usually unfair. There are probably plenty of alternate histories where OpenAI fails or doesn’t partner with Microsoft and Google or someone else takes the lead.

OpenAI is apparently considering complaining to the feds to try to pressure Microsoft:

But the negotiations have been so difficult that in recent weeks, OpenAI’s executives have discussed what they view as a nuclear option: accusing Microsoft of anticompetitive behavior during their partnership, people familiar with the matter said. That effort could involve seeking federal regulatory review of the terms of the contract for potential violations of antitrust law, as well as a public campaign, the people said.

Was this story leaked by OpenAI as part of their negotiating tactics? Will they do it?

Would Microsoft agree to renegotiate? Maybe trade some profit-sharing for more equity in the new entity? Or accept tighter IP access in exchange for removing the fuzzy AGI clause?

¯\_(ツ)_/¯

But if they can’t work it out, things could escalate… I’m not sure what a full breakup between Microsoft and OpenAI could look like, but it could be painful.

And distracting, for both sides, at a time when focus and execution matter most and one misstep can make you fall behind (just ask Zuck about Llama 4).

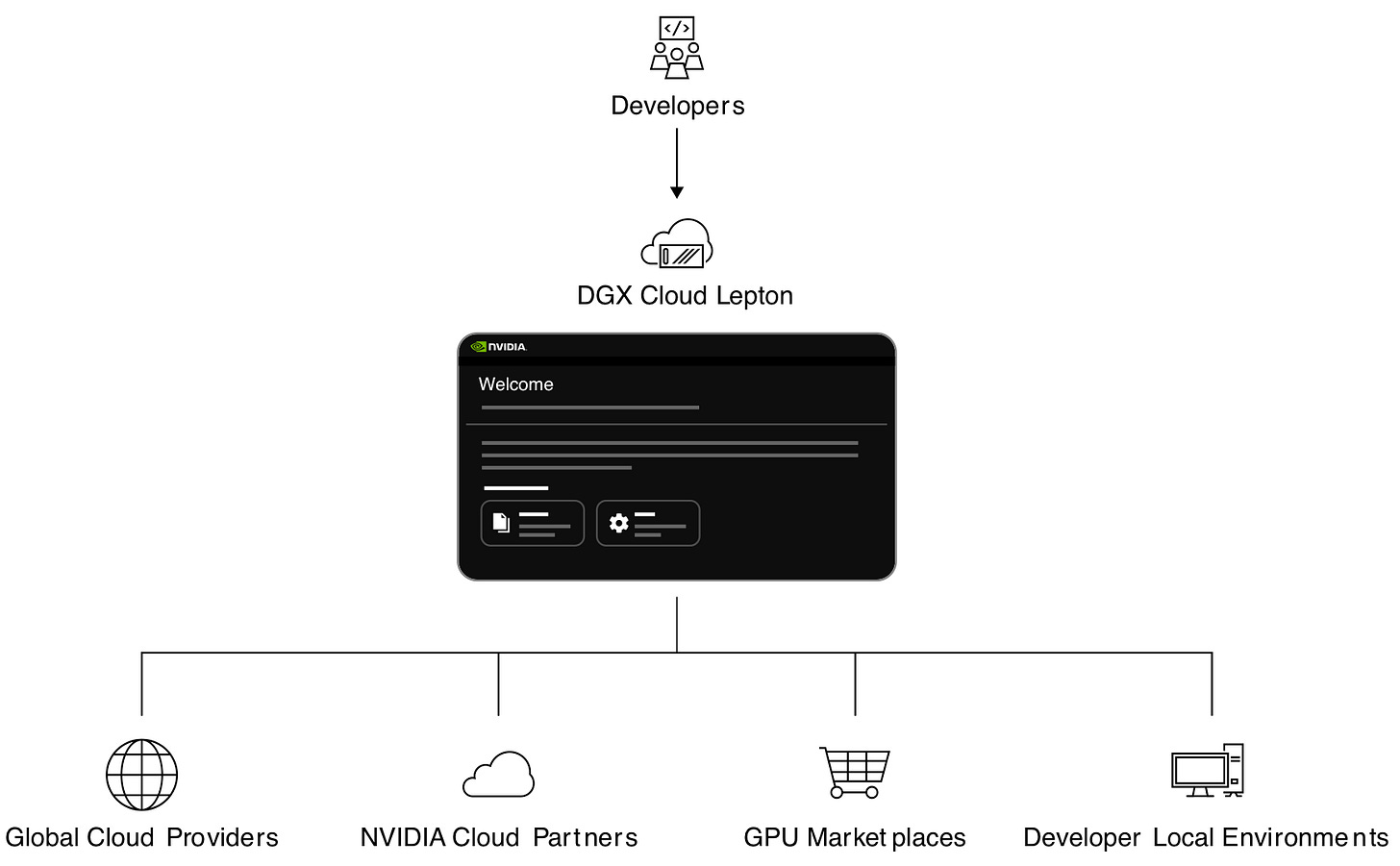

😎☁️🔀🤖🤖🤖 Nvidia DGX Lepton: Jensen’s Plan to Commoditize the GPU Clouds (can they fight back?)

Everybody in the AI compute ecosystem needs to play nice with Nvidia because they desperately need its GPUs. This allows Jensen to muscle into various parts of the stack and mess with profit pools and customer relationships in ways that benefit Nvidia, often to the detriment of its customers. 💪

DGX Lepton is a good example of the classic “commoditize your complements” strategy. It’s basically a two-sided marketplace that abstracts away the various GPU clouds (hyperscalers and neoclouds) into one platform orchestrated by Nvidia.

Customers who need access to GPUs could go to it and be allocated compute at various clouds based on availability and price, with Nvidia doing the routing. This is convenient for them because they don’t lock themselves into one specific provider, don’t have to manage multi-cloud deployments themselves, and get the benefit of clouds competing on price.

BUT

From the cloud providers’ POV, this kind of sucks. What used to be a direct relationship with customers now becomes Nvidia’s relationship. 🤝

This weakens their cross-selling and lock-in potential, on top of now having even more competition, which should pressure their margins.
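To make the routing idea concrete, here’s a minimal sketch of how a marketplace like Lepton *might* fill a GPU request across several clouds. It’s purely illustrative: Nvidia hasn’t published how Lepton allocates capacity, so the `Offer` type, the `route` function, the cheapest-first rule, and the cloud names and prices are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Offer:
    """One cloud's listed capacity (hypothetical fields, not a real Lepton API)."""
    cloud: str
    price_per_gpu_hour: float
    available_gpus: int


def route(request_gpus: int, offers: list[Offer]) -> list[tuple[str, int]]:
    """Fill a GPU request from the cheapest offers first.

    Returns (cloud, gpus) pairs. Raises ValueError if total supply is short.
    """
    allocation: list[tuple[str, int]] = []
    remaining = request_gpus
    # Cheapest-first ordering is what makes the clouds compete on price.
    for offer in sorted(offers, key=lambda o: o.price_per_gpu_hour):
        if remaining == 0:
            break
        take = min(remaining, offer.available_gpus)
        if take > 0:
            allocation.append((offer.cloud, take))
            remaining -= take
    if remaining > 0:
        raise ValueError(f"not enough capacity: {remaining} GPUs unfilled")
    return allocation


# Hypothetical clouds and prices, for illustration only.
offers = [
    Offer("cloud_a", 2.50, 64),
    Offer("cloud_b", 2.00, 48),
    Offer("cloud_c", 3.00, 500),
]
print(route(100, offers))  # [('cloud_b', 48), ('cloud_a', 52)]
```

Note that the customer only ever talks to the router; which cloud actually serves the job is decided in the middle, which is exactly why the clouds lose the direct relationship.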

Friend-of-the-show Dylan Patel has a great analogy:

Just like how Uber/Lyft is a platform for connecting customers to drivers, DGX Lepton does the same. Famously, Uber/Lyft turned stable taxi driver income into the low margin gig economy workers. DGX Lepton could have the same effect on neoclouds.

From Nvidia’s point of view, this is great in two main ways:

They can get some margin on Lepton directly. 💵

By increasing competition between the various (neo)clouds, they reduce prices to users, which should increase demand for compute → which means they sell more high-margin GPUs. 💰

And if Lepton takes off, Nvidia may become one of the biggest customers at the various clouds, steering a big pool of demand, making it hard for any one of them not to participate.

But if the clouds can coordinate before Lepton scales, they may be able to keep it from gaining adoption at all… but Jensen has leverage: he controls GPU allocations!

So far, more than a dozen GPU cloud providers have agreed to make servers available to the new Nvidia service, DGX Cloud Lepton. They include CoreWeave and Nebius, whose businesses are among the most mature in the field.

It’ll be interesting to watch.

If I were Jensen, I would make Lepton a free service, at least at first, to get as much adoption as possible. Making it paid out of the gate only lowers the odds it succeeds at all.

🧑‍💻👨‍💻 ChatGPT has More Web Traffic than Wikipedia 🌐

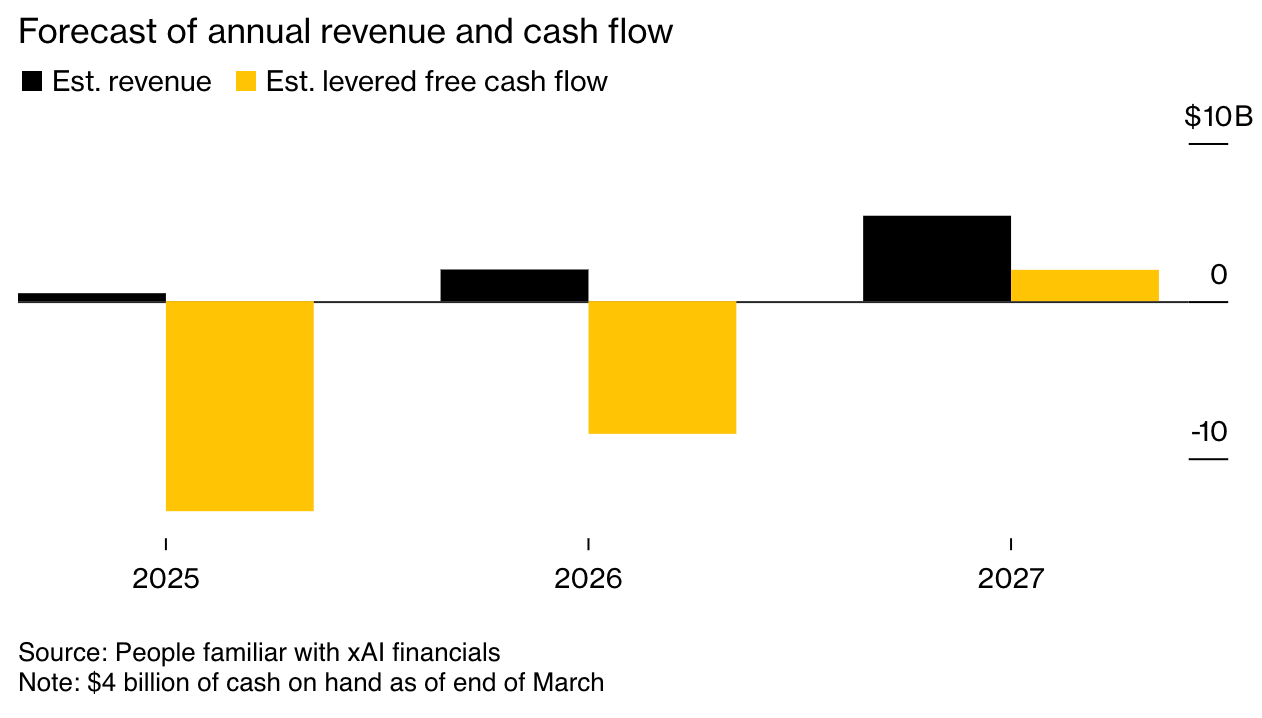

🩻🔍🕵️‍♂️ The State of xAI and Grok: Big Burn Rate, Small Traction 💸 🤔

It feels like Grok has failed to get consumer traction AND hasn't specialized into a profitable niche like, for example, Anthropic is doing with coding.

No, explaining tweets doesn't count as a profitable niche… 🐦🔍🤖

I have to say, it’s not looking good for them right now. Even though Musk has historically been a magician 🪄 at fundraising, the more time passes, the harder it’ll be for them to keep up with OpenAI and Google, especially as we enter the era of multi-gigawatt data centers (more on that below).

Elon Musk’s artificial intelligence startup xAI is trying to raise $9.3 billion in debt and equity, but even before the money is in the bank the company has plans to spend more than half of it in just the next three months

Even if 100% of forecast revenue fell to the bottom line, it doesn’t look like it would be enough money to fund the kind of capex that will be required to stay at the frontier:

[xAI] expects to burn through about $13 billion over the course of 2025, or more than $1 billion each month [...]

Between its founding in 2023 and June of this year, xAI raised $14 billion of equity, people briefed on the financials said.

Of that, just $4 billion was left at the beginning of the first quarter, and the company expected to spend almost all of that remaining money in the second quarter
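The arithmetic behind that concern is easy to sketch. Assuming the roughly $13 billion 2025 figure is net cash burn and stays constant month to month (both simplifications; real spending is lumpy and front-loaded, as the “more than half in three months” detail suggests), the $9.3 billion raise would last well under a year. The function names are illustrative.

```python
def monthly_burn(annual_burn: float) -> float:
    """Average monthly cash burn, assuming spending is spread evenly."""
    return annual_burn / 12


def runway_months(cash: float, burn_per_month: float) -> float:
    """Months until cash runs out at a constant net burn rate."""
    if burn_per_month <= 0:
        return float("inf")  # not burning cash, so no runway limit
    return cash / burn_per_month


burn = monthly_burn(13e9)            # about $1.08B per month
months = runway_months(9.3e9, burn)  # about 8.6 months
print(f"{burn / 1e9:.2f}B/month, {months:.1f} months of runway")
```

Front-loading the spend, as the quote describes, would only shorten that runway.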

Who knows, maybe they will pull a breakthrough, leap-forward model out of a hat 🎩🐇, and something about it will give them a sustainable advantage, or at least one that takes others a long time to catch up with.

They need time to drive adoption and let users habituate, to build stickiness.

Unlike with Grok 3, which was in the spotlight for a short moment… and then most people forgot about it and moved on to o3 and Gemini 2.5.

But somehow I doubt it.

I don’t think Elon has been very focused on xAI in the past 6 months, so I’m not sure they’ve had the drive and pace they had before Musk’s adventures in government.

💵 Sam Altman: ‘Meta offered OpenAI staff $100 million bonuses’ 💵

Well, I guess that’s pretty cheap compared to hiring Alexandr Wang 😅

I suspect it may be considerably easier for Zuck to poach from xAI than from OpenAI, Anthropic, or Google, but that’s just a guess.

💡 Thoughts on Measuring Compute in Watts 🔌⚡☁️

A short observation that will seem super obvious to some, but I don’t think everyone has considered it.

Lately, we’ve been measuring compute at the very large data centers required for AI in terms of power (watts)…

xAI’s Colossus data center is being expanded to 1.2 gigawatts, OpenAI’s Stargate will be 5 GW when it gets to phase 5, the UAE project will also reach 5 GW at maturity, ‘Humain’ in Saudi Arabia is aiming to eventually hit 6.6 GW, etc.

This is a useful proxy for scale, but let’s not confuse power draw with actual compute output.

The same 1 GW of electricity can power vastly different amounts of compute over time.

1 GW of Hopper GPUs isn’t the same as 1 GW of Blackwell GPUs, and going forward, Rubin and Feynman GPUs will deliver even more FLOPS per watt.
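Put as a rough formula, delivered compute is facility power, divided by PUE (power usage effectiveness: total facility power over the power that actually reaches the IT equipment), times FLOP/s per watt. The sketch below assumes that simple model; the efficiency values are hypothetical relative units, not real Hopper or Blackwell specs, and it ignores networking, storage, and utilization.

```python
def delivered_flops(facility_power_w: float, flops_per_watt: float, pue: float = 1.0) -> float:
    """Rough FLOP/s a site can deliver.

    pue >= 1.0 is total facility power divided by IT power, so
    facility_power_w / pue is the power left for the chips themselves.
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    it_power_w = facility_power_w / pue
    return it_power_w * flops_per_watt


ONE_GW = 1e9
# Hypothetical relative efficiencies: the newer generation does 2.5x the work per watt.
older_gen = delivered_flops(ONE_GW, flops_per_watt=1.0, pue=1.25)
newer_gen = delivered_flops(ONE_GW, flops_per_watt=2.5, pue=1.25)
print(newer_gen / older_gen)  # 2.5
```

Same gigawatt, same cooling overhead, very different output: the headline GW number only tells you the ceiling on what the chips can draw.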

It’ll be interesting to see where the optimal balance lands over time between keeping older GPUs around and upgrading to new ones. Energy is becoming a bigger bottleneck than anything else, but how tight that bottleneck is varies greatly by country (e.g., China vs. USA).

In other words, in a place where energy is abundant and fast to build, it may make sense to keep fully depreciated GPUs around for a while for inference on less demanding tasks. But if energy is scarce and expensive and takes a long time to build, ripping them out and replacing them with the latest hot chips may make the most sense. 🏗️🚧
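The keep-versus-replace tradeoff can be sketched as a toy model with a hard site power cap. It’s an assumption-heavy simplification: it only counts throughput, ignores electricity and capex costs, and uses hypothetical relative efficiencies. `Fleet` and `throughput` are illustrative names, not anyone’s actual planning tool.

```python
from dataclasses import dataclass


@dataclass
class Fleet:
    old_power_w: float  # power drawn by the existing, fully depreciated GPUs
    eff_old: float      # FLOP/s per watt, older generation (hypothetical units)
    eff_new: float      # FLOP/s per watt, newer generation (hypothetical units)


def throughput(fleet: Fleet, site_power_w: float, new_power_w: float, retire_old: bool) -> float:
    """Total FLOP/s under a hard site power cap.

    new_power_w is the power rating of the new GPUs we bought; they can only
    run on whatever power the old fleet leaves free.
    """
    old_w = 0.0 if retire_old else min(fleet.old_power_w, site_power_w)
    usable_new_w = min(new_power_w, max(site_power_w - old_w, 0.0))
    return old_w * fleet.eff_old + usable_new_w * fleet.eff_new


fleet = Fleet(old_power_w=1e9, eff_old=1.0, eff_new=2.5)

# Scarce power: the site is capped at 1 GW, all of it used by the old fleet.
print(throughput(fleet, 1e9, 1e9, retire_old=False))  # 1000000000.0
print(throughput(fleet, 1e9, 1e9, retire_old=True))   # 2500000000.0

# Abundant power: another 1 GW can be built, so the old GPUs can stay.
print(throughput(fleet, 2e9, 1e9, retire_old=False))  # 3500000000.0
print(throughput(fleet, 2e9, 1e9, retire_old=True))   # 2500000000.0
```

Under a binding cap, ripping out the old fleet wins; with room to add power, keeping it wins. That is the country-by-country split, in miniature.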

🧪🔬 Liberty Labs 🧬 🔭

☕ Deep Dive on the Health Impact of Coffee 🩺🫀🧠

Pour yourself a hot cup and check out this distillation of the current science on coffee by Dr. Rhonda Patrick.

It may make you want another cup, but keep it early in the day and skip the sugar!

🙈💾🕸️👴 AI’s Holy Grail: Dealing With the Massive Mission-Critical COBOL Codebase 😬🏦💵💵💵💵

So much of the world runs on ancient code and ancient mainframes, and with every passing year, fewer and fewer people even know these systems and languages. 🪦

Efforts to modernize ancient code are ALWAYS way harder, take way longer, and cost way more than expected.

Maybe AI can help?

If you know what COBOL is at all, you probably know this is a big deal:

Morgan Stanley is now aiming artificial intelligence at one of enterprise software’s biggest pain points, and one it said Big Tech hasn’t quite nailed yet: helping rewrite old, outdated code into modern coding languages.

In January, the company rolled out a tool known as DevGen.AI, built in-house on OpenAI’s GPT models. It can translate legacy code from languages like Cobol into plain English specs that developers can then use to rewrite it.

So far this year it’s reviewed nine million lines of code, saving developers 280,000 hours [...]

Morgan Stanley, he said, was able to train the tool on its own code base, including languages that are no longer, or never were, in widespread use.

Now the company’s roughly 15,000 developers, based around the world, can use it for a range of tasks including translating legacy code into plain English specs, isolating sections of existing code for regulatory enquiries and other asks, and even fully translating smaller sections of legacy code into modern code. (Source)

They’re not at the stage yet where the AI can automatically translate from these ancient languages to a modern language on its own — at least not to the level of reliability required by these specific use cases…

It’s very delicate when the code is running the back-office of a bank with hundreds of billions moving around daily.

But that’ll come.
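One small example of why “delicate” is the right word: COBOL money fields are fixed-point decimals, and a `COMPUTE` without the `ROUNDED` phrase truncates extra decimal places when it stores the result. A naive translation to binary floats with rounding can silently move a cent here and there. The COBOL fragment and both Python functions below are hypothetical illustrations, not Morgan Stanley’s code, and real COBOL compilers have their own rules for intermediate precision.

```python
from decimal import Decimal, ROUND_DOWN

# Hypothetical COBOL fragment being translated (V marks an implied decimal point):
#   01 BALANCE   PIC 9(7)V99.
#   01 RATE      PIC 9V9(4).
#   01 INTEREST  PIC 9(7)V99.
#   COMPUTE INTEREST = BALANCE * RATE.
# No ROUNDED phrase, so digits beyond two decimal places are truncated on store.

CENTS = Decimal("0.01")


def interest_faithful(balance: Decimal, rate: Decimal) -> Decimal:
    """Exact decimal math, truncated to cents like the COBOL receiving field."""
    return (balance * rate).quantize(CENTS, rounding=ROUND_DOWN)


def interest_naive(balance: float, rate: float) -> float:
    """A plausible but wrong translation: binary floats plus rounding."""
    return round(balance * rate, 2)


print(interest_faithful(Decimal("1234.56"), Decimal("0.0375")))  # 46.29
print(interest_naive(1234.56, 0.0375))                            # 46.3
```

One cent on one record looks harmless; across millions of accounts and nightly batch runs, it’s a reconciliation nightmare.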

😴 The Impact of Noise on Sleep Quality: The Billion-Dollar Public Health Blind Spot 🌃🔊

Reducing noise in cities as an easy win on quality-of-life has been one of my minor crusades for a while. 🛡️⚔️

Andrej Karpathy recently had a good post on what he noticed when it comes to his own sleep quality:

My sleep scores during recent travel were in the 90s. Now back in SF I am consistently back down to 70s, 80s.

I am increasingly convinced that this is due to traffic noise from a nearby road/intersection where I live - every ~10min, a car, truck, bus, or motorcycle with a very loud engine passes by (some are 10X louder than others). In the later less deep stages of sleep, it is much easier to wake and then much harder to go back to sleep.

And that’s while wearing earplugs!

People suggested that to him, and he replied:

Funny that people are suggesting earplugs to me. I've slept with earplugs my entire life and always assumed everyone else obviously does too haha.

He continues:

More generally I think noise pollution (esp early hours) come at a huge societal cost that is not correctly accounted for. E.g. I wouldn't be too surprised if a single motorcycle riding through a neighborhood at 6am creates millions of dollars in damages in the form of hundreds - thousands of people who are more groggy, more moody, less creative, less energetic for the whole day, and more sick in the long term (cardiovascular, metabolic, cognitive). And I think that many people, like me, might not be aware that this happening for a long time because 1) they don't measure their sleep carefully, and 2) your brain isn't fully conscious when waking and isn't able to make a lasting note / association in that state. I really wish future versions of Whoop (or Oura or etc.) would explicitly track and correlate noise to sleep, and raise this to the population.

It's not just traffic, e.g. in SF, as I recently found out, it is ok by law to begin arbitrarily loud road work or construction starting 7am. Same for leaf blowers and a number of other ways of getting up to 100dB.

His point about the societal damages caused by a single loud motorcycle riding around during normal sleeping hours should be highlighted. Same for early super-loud diesel garbage trucks or whatever. 🏍️

Hundreds — if not thousands — of people getting worse sleep and then being tired the next day, or even just having their cognitive functions impaired in subtle ways, can indeed be worth millions. 🥱

Now multiply that by all sources of nocturnal noise, and it has to add up to billions in damage 💸

And how do you even measure the impact on creativity? How many good ideas were never had because someone slept poorly? ¯\_(ツ)_/¯

And the cumulative health-related costs over a lifetime also have to be significant 🏥
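The “billions” claim is a Fermi estimate, and it’s easy to see how one composes: for each noise source, multiply the people it disturbs by the cost of one bad night per person and the number of nights, then sum over sources. Every input below is a placeholder picked for round numbers, not a measured value, and the names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class NoiseSource:
    name: str
    people_disturbed_per_night: float  # placeholder, not measured
    cost_per_bad_night: float          # placeholder dollar cost per person
    nights_per_year: int


def annual_damage(sources: list[NoiseSource]) -> float:
    """Sum of people x cost x nights across all noise sources."""
    return sum(
        s.people_disturbed_per_night * s.cost_per_bad_night * s.nights_per_year
        for s in sources
    )


# Placeholder inputs for one hypothetical neighborhood, for illustration only.
sources = [
    NoiseSource("early motorcycle", 1_000, 50.0, 365),
    NoiseSource("7am road work", 2_000, 50.0, 100),
]
print(f"${annual_damage(sources):,.0f} per year")  # $28,250,000 per year
```

Scale the same sum across every source and every city and the total climbs fast; the hard part, as the questions above suggest, is pinning down the per-person cost.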

I ran a few Deep Research sessions and there are a number of studies that have tried to isolate noise and show depressing outcomes for cohorts of people who sleep in noisy environments, with increased risk across all of mental health (e.g. depression, bipolar disorders, Alzheimer's incidence) but also a lot more broadly, e.g. cardiovascular disease, diabetes.

One thing I’m planning: the next time windows need to be replaced on my house, I’ll pay up for better insulated acoustic glass (or whatever makes sense).

I don’t think it’ll solve everything, but it can only help.

🎨 🎭 Liberty Studio 👩‍🎨 🎥

🗣️💬 Two Hours of Bob Dylan Anecdotes

Enough said!
