573: Zuck's Recruiting Strategy, Cursor is Crushing GitHub, Anthropic Fair Use, ZIRP Mortgages, Meta AI Pivot, China’s Grid as a Weapon, and Amazon's Megastructure

"when it comes to the *truly generational* top talent"

It is not because things are difficult that we do not dare; it is because we do not dare that things are difficult.

–Seneca

🤗🫂🤝 Hug it out!

People will optimize their protein intake, chase early-morning sunlight exposure, fine-tune vitamin D3 levels, and track deep sleep cycles like they’re managing an investment portfolio.

But many live with a chronic touch deficit, often without consciously realizing it. Or if they do, without recognizing just how fundamental it is to our biology. It isn’t merely a ‘nice to have,’ it’s foundational.

Human physical touch functions as an ongoing neurobiological calibration system.

When others touch us, specialized mechanoreceptors in our skin called C-tactile afferents activate somatosensory pathways that help the brain maintain its ‘body schema,’ our living neural map of where we exist in space and who we are.

As social animals, this process triggers the release of oxytocin and serotonin, key neuromodulators essential for trust, calmness, and social bonding, which are crucial for survival in cooperative groups. These are not just pleasant feelings, they’re chemical signals that enable survival. They affect countless aspects of our lives.

The importance of touch explains why astronauts 🧑🚀 who spend time in zero-gravity report distortions in their sense of embodiment despite constant visual feedback: microgravity causes persistent sensory mismatches that create sensory-conflict illusions. It’s why premature infants 👶 show normalized stress hormones after skin-to-skin contact, and why prolonged touch deprivation can contribute to depersonalization. Long-term solitary confinement is considered cruel for a variety of reasons, but that’s one of ‘em.

Conversation and visual cues like eye contact 👀 and facial expressions provide critical emotional and cognitive grounding and connection, but touch is especially effective at recalibrating the fundamental neural architecture that defines where "you" end and the world begins and how you relate to others in your social life.

This sensory recalibration grounds us in physical reality and provides a direct reminder of our place in the world in a way other interactions can’t quite replicate.

💚 🥃 🙏☺️ If you’re a free sub, I hope you’ll decide to become a paid supporter.

You’ll unlock access to paid editions, the private Discord, and get invited to the next supporter-only Zoom Q&As with me.

If you get just one good idea 💡 per year, it’ll more than pay for itself. If it makes you curious about new things, that’s priceless.

Can't upgrade right now? Share something you enjoyed here with someone who might like it too. Drop a screenshot and a link in your group chat! 💬

🏦 💰 Liberty Capital 💳 💴

🤖🧠 🛒😎💰Zuck is trying to buy everything in A.I. that isn’t nailed down 🦙🛍️ 💳

The saga continues!

I wrote about what is likely the most expensive acqui-hire of all time, with Meta paying $14.8bn basically just for Alexandr Wang.

Sure, they also get 49% of Scale AI, but since this move will make Scale lose a ton of customers, the *effective price* of getting Wang is even higher than it would be if the deal didn’t actively damage Scale’s value 💸

Then Sam Altman said on a podcast that Zuck had tried to recruit a lot of OpenAI’s top talent, luring them with $100m signing bonuses and huge pay packages (I don’t know if the number is true, but I don’t doubt big bucks are being offered)…

And then came the reports that Zuck was about to acqui-hire Nat Friedman and Daniel Gross, no doubt offering them huge pay packages and buying out their VC fund. This was after he reportedly approached Ilya Sutskever to acquire his company, Safe Superintelligence (SSI), where Gross serves as CEO.

Now we learn that Zuck has also been talking to Runway, the generative AI video startup, testing the waters for an acquisition or talent grab.

If that was all there was, it would already be a lot!

But Zuck has been a busy guy:

Mark Zuckerberg is spending his days firing off emails and WhatsApp messages to the sharpest minds in artificial intelligence in a frenzied effort to play catch-up. He has personally reached out to hundreds of researchers, scientists, infrastructure engineers, product stars and entrepreneurs to try to get them to join a new Superintelligence lab he’s putting together.

Some of the people who have received the messages were so surprised they didn’t believe it was really Zuckerberg. One person assumed it was a hoax and didn’t respond for several days.

A clear sign of a hard pivot… or panic mode 🚨⚠️🚨 (depending on how generous you want to be)

This was probably precipitated by Llama 4 failing to live up to expectations. I mean, Llama 4 Behemoth is still unreleased (this is the massive model with 288B active parameters and ~2T total parameters). At this rate, by the time it comes out, it may already be ‘old’. Every day that passes makes it fall further behind the state of the art. 🦙

Zuckerberg is in a WhatsApp chat called “Recruiting Party 🎉” with Ruta Singh, a Meta executive in charge of recruiting, and Janelle Gale, the company’s head of people.

Zuckerberg is also in the weeds of wonky AI research papers, digging into the tech and trying to find out about who is actually building it. He also believes there is a flywheel effect to recruiting: By talking to the smartest person he can find, they will introduce him to the smartest people in their networks.

When the Recruiting Party 🎉 chat finds people worth targeting, Zuckerberg wants to know their preferred method of communication, and he gets their attention by sending the first messages himself.

Zuckerberg has taken recruiting into his own hands, according to a person familiar with his approach, because he recognizes that it is where he can personally have the most leverage inside the company he founded—that an email from him is a more powerful weapon than outreach from a faceless headhunter.

That seems like a pretty good way to go about it.

It’s probably how I’d do it if I were him: Move fast, no bureaucracy, go to primary sources, figure out who’s the real deal, doing the best work, and go to them directly. 🏴‍☠️

I guess if you’re going to reboot things, you may as well go big. And the amounts of $ involved with staying at the frontier of AI are so ginormous that in context, the numbers for acqui-hires and talent salaries start to seem… almost reasonable?

I mean, it’s kind of why CEOs are so well paid.

If you’re going to make decisions that will steer thousands of people and large quantities of physical capital, with an impact on billions of dollars of outcomes, it really matters who is in charge, and you want the most capable person at the helm.

The very best ones are *always* scarce. 👨🏻✈️☸︎

At least, that’s a nice theory. Many CEOs are vastly overpaid and even destroy value…

But when it comes to the *truly generational* top talent, it’s almost impossible to overpay because they can both generate so much value AND avoid so many massively painful mistakes that they pay for themselves many times over.

I mean, what if Microsoft had decided in 2014 that Satya Nadella was asking for too much and gone with their second pick instead, saving a few million bucks?

There’s no way to be sure what would’ve happened, but the odds are that most other CEOs wouldn’t have been anywhere near as successful as Nadella. This failure could have cost shareholders hundreds of billions, maybe even a trillion dollars or more.

Back to Zuck:

And Meta’s chief executive isn’t just sending them cold emails. Zuckerberg is also offering hundreds of millions of dollars, sums of money that would make them some of the most expensive hires the tech industry has ever seen. In at least one case, he discussed buying a startup outright.

While the financial incentives have been mouthwatering, some potential candidates have been hesitant to join Meta Platforms’ efforts because of the challenges that its AI efforts have faced this year, as well as a series of restructures that have left prospects uncertain about who is in charge of what, people familiar with their views said. (Source)

The fact that not everyone is immediately jumping on board with these incentives tells me that:

Other labs are also paying really well, and are probably increasing comp to fight off these offers from Meta. There’s a chance that *everyone* will soon pay a lot more for top talent, but the balance of power between the labs won’t have changed too much.

A big win for labor over capital, I guess, though with that level of comp, labor is ALSO capital 🤔

It’s also a sign that Meta’s AI lab has gotten kind of a bad reputation in the industry. There aren’t that many people at the top of the industry, and most of them seem to prefer OpenAI and Anthropic (which has the lowest employee turnover, afaict) or smaller startups that potentially have higher upside (Mira Murati’s Thinking Machines Lab and Ilya Sutskever’s Safe Superintelligence come to mind).

When it comes to those who prefer open source development, which was one of Meta’s big selling points, a lot of that talent has gone to Mistral, the French startup that is now home to a lot of the key architects of the successful Llama 3 model.

Another question I’ve been wondering:

What’s going on with Yann LeCun?

He used to be one of the public faces of Meta’s AI efforts… but not so much lately.

He’s long been a skeptic of the LLM architecture and of those who predict that rapid progress will keep going for a while with that technology. He doesn’t seem to think we can get to AGI and ASI that way.

Trying to acquire Sutskever’s company, or even just him, feels completely at odds with LeCun’s philosophy.

How much has LeCun’s public skepticism hurt Meta’s ability to hire AI researchers who disagree with him?

What’s his status at Meta now? Is he on the way out? Is he quietly being sidelined while a new crew — Wang, Friedman, Gross — takes over the main trunk of the effort?

👨⚖️⚖️ California Court: Anthropic’s AI Training Is ‘Fair Use’ (but this debate isn’t over) 🤖🔎📚🗜️💾

It’s still early in the case — this isn’t a final judgment, and it doesn’t have the legal weight of a Supreme Court ruling — but it’s an interesting development:

The Northern District of California has granted a summary judgment for Anthropic that the training use of the copyrighted books and the print-to-digital format change were both “fair use”.

My own belief is that AI training should be treated legally like human learning.

I can learn from whatever I want:

Books I bought, or books I borrowed from the library. Webpages. Reddit threads. YouTube videos. Newspapers, the radio, and carrier pigeons 🐦

Even someone mumbling on a podcast.

After I’ve learned from something, I don’t retain a perfect copy in my mind. I extract patterns and have better recall for certain parts than others.

AI training similarly transforms and heavily compresses what is learned.

There’s no way these models could exist if they had to literally hold the whole corpus they are trained on (which now no doubt includes billions of pages of synthetic data, on top of images, audio, and increasingly video data).

It’s not like copying it all bit-for-bit, verbatim in an archive to be accessed later (even Google Search, which does something closer to that, has been protected by fair use). Of course, AI can have better recall than our meat brains, but that’s a positive thing 🧠

This transformation through pattern-matching is learning, and if we want to legally block it so that “fair use” doesn’t apply, we’ll just entrench the incumbents, and it’ll make it so that AI is even more restricted to only the biggest companies in the world. It’ll be impossible for any small player or startup to get involved because they can’t afford the rights, and lawsuits will kill them.

This doesn't mean creators shouldn't benefit when their work is used for training. But treating the learning process itself as copyright infringement is the wrong path.

The way we did things in the past, in a different context, doesn’t necessarily apply forever. When new technologies emerge, new laws and social norms emerge. It’s not a binary between “ban it” or “let it rip.”

However, the court also found that the pirated library copies that Anthropic collected could not be deemed training copies, and therefore, the use of this material was not “fair”.

The court also announced that it will have a trial on the pirated copies and any resulting damages, adding: “That Anthropic later bought a copy of a book it earlier stole off the internet will not absolve it of liability for the theft but it may affect the extent of statutory damages.” (Source)

Maybe publishers and rights holders can create one-time license bundles for AI labs so that they can purchase a legal digital copy of every book. This could be a lot of money that falls straight to the bottom line 🤔

🏡 The Great U.S. Housing Divide: ZIRP Mortgages vs. Everyone Else 🏚️

You hear a lot about how expensive and unaffordable housing is these days. And by some metrics, that’s clearly true. And yet for some people, shelter is very affordable.

Yes, if you’re in the market right now, looking for a place to buy or rent, then it costs a lot of money. But if you locked in your monthly housing payment a few years ago (by getting a 30-year fixed rate mortgage during the ZIRP era) then you’re doing fine.

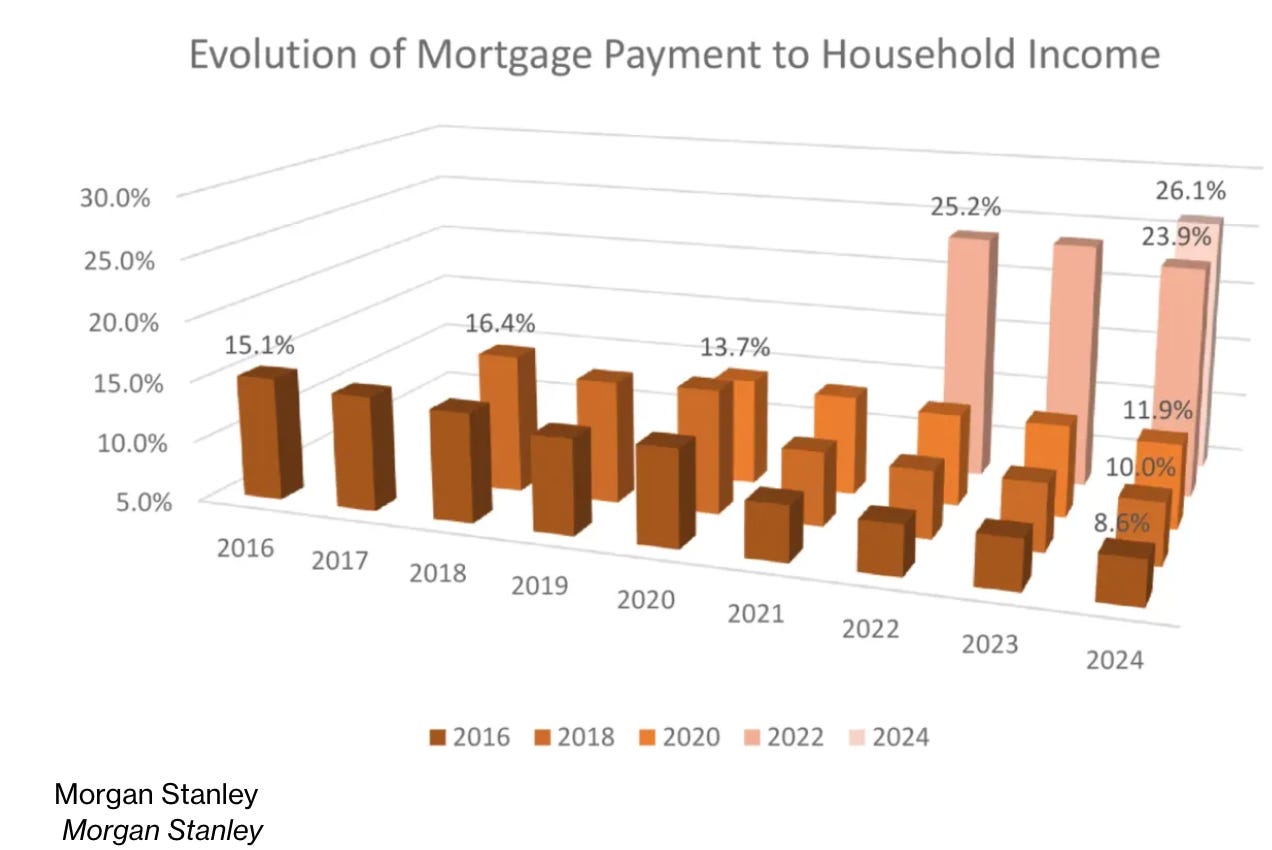

Back in April, Egan published this chart (which Tracy posted here) showing the huge difference between the housing payments burden incurred by people who got a mortgage prior to 2021 and those who got a mortgage post-2021.

What a difference a single year makes.

Squeaked in during 2021? On average, you may be paying around 10% of your income for housing. If you got in the next year, you’re likely to be paying around 25% of your income, on average.
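To get a feel for why the rate you locked in matters so much, here’s a quick back-of-the-envelope sketch using the standard fixed-rate amortization formula. The $400,000 loan and the 3% and 7% rates are my own illustrative assumptions, not numbers from the chart:

```python
def monthly_payment(principal: float, annual_rate: float, years: int = 30) -> float:
    """Monthly payment on a fixed-rate, fully amortizing mortgage."""
    r = annual_rate / 12          # monthly interest rate
    n = years * 12                # total number of payments
    if r == 0:
        return principal / n      # zero-interest edge case
    return principal * r / (1 - (1 + r) ** -n)


# Hypothetical loan: same house, same $400k borrowed, different eras.
loan = 400_000
zirp_era = monthly_payment(loan, 0.03)   # assumed ZIRP-era rate, roughly $1,686/month
today = monthly_payment(loan, 0.07)      # assumed post-hike rate, roughly $2,661/month

print(f"3%: ${zirp_era:,.0f}/month")
print(f"7%: ${today:,.0f}/month")
print(f"Difference: {today / zirp_era - 1:.0%} higher")
```

Same loan, same 30 years, and the payment is more than half again as large. That’s before accounting for higher home prices, which is why the gap in the chart is even wider.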

I envy these 30-year fixed mortgages in the U.S. 👀

Here in Canada, most people get a 5-year fixed. 🍁

I got a 7-year fixed at 2.89% in 2020, which is considered long here, so that’s nice. But I kind of regret not getting a 10-year fixed, which is relatively rare here.

According to the latest data, as rates have risen, more than *80%* of Canadian borrowers are now on terms shorter than 5 years.

In the U.S. it’s flipped: Over 80% of mortgage applications in 2023 were in the 20–30-year fixed range, according to the FHFA’s 2024 Annual Housing Report.

Another difference: Mortgage interest is tax-deductible (within certain generous limits) in the U.S., but it ISN’T in Canada 🇺🇸 👀 🇨🇦

🧪🔬 Liberty Labs 🧬 🔭

🗣️🇨🇳🏭⚡ Interview: Arthur Kroeber on China’s Manufacturing Power & Why China’s Grid Might Be Its Secret Weapon for AI

Another great interview in friend-of-the-show Dwarkesh Patel’s China Series:

Here are a couple of highlights, but I encourage you to listen to the whole thing:

Arthur Kroeber: if the US is serious about revitalizing its industrial base—and I think there's a good case for trying to do that selectively—it is not going to happen unless you invite in the world's leading players and have them compete.

That is how China industrialized. 45 years ago, they were an industrial basket case. They said, “We want to get industrially strong. How do you do that? Get all the leading companies in the world to invest here and we'll learn from them.” That's how you do it. If we're serious about that, we should be serious about figuring out ways that we can bring Chinese industrial investment into the United States.

That to me would be a win-win, because Chinese companies at an individual level would love that. They see the US is a huge market. They can't get into it right now. They would love the opportunity to tap into it more. We could learn from them.

But there’s a new risk:

Arthur Kroeber: back 30 or 40 years ago, you used to be able to divide the world into technologies which were essentially for civilian use, a few technologies that were military, and then a very small proportion of dual-use technologies that could go either way. It was those dual-use technologies that had to be controlled very carefully.

Now basically everything is dual use. Any technology that you can imagine can be put to some kind of military use.

On China’s big bet on electrification, which is now turning out to give them a big advantage on AI too:

Arthur Kroeber: The comparable move that they are making today, which I think is severely underappreciated, is that they believe in the power of electrification. Electricity has been around for a long time. Still in most countries it only accounts for about 15-20% of the total final consumption of energy. [...]

The result is today, China has generating capacity that is more than double that of the United States. […] Electricity as a whole is now about 30% of China's total energy consumption. It's going up like this, it's rising very rapidly. Everyone else is increasing their electricity consumption very, very marginally.

What is one of the big constraints on AI development? At scale, it is essentially the power that you need to power these data centers.

Who is best placed in the world to do this at home? China.

They are now determined, I think, on a strategic basis to have an electricity system that just produces as much power as humanly possible from whatever fuel source you can find.

🔌📈

🏗️ Amazon’s AI Megastructure: 30 Data Centers in One Spot, 2.2 Gigawatts, and a Bet on Trainium 🔨👷

This thing is already huuuuge, a Dune-style megastructure, but only a small part of it has been built:

A year ago, a 1,200-acre stretch of farmland outside New Carlisle, Ind., was an empty cornfield. Now, seven Amazon data centers rise up from the rich soil, each larger than a football stadium.

Over the next several years, Amazon plans to build around 30 data centers at the site, packed with hundreds of thousands of specialized computer chips. With hundreds of thousands of miles of fiber connecting every chip and computer together, the entire complex will form one giant machine intended just for artificial intelligence.

It’s expected to use 2.2 gigawatts, making it by far the biggest electricity user in the state of Indiana.
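For a sense of scale, here’s a rough conversion from power to yearly energy. It assumes the full 2.2 GW is drawn around the clock, which is an upper bound of mine (real utilization will be lower), so treat it as a sketch:

```python
HOURS_PER_YEAR = 24 * 365  # ignoring leap years


def annual_energy_twh(power_gw: float, utilization: float = 1.0) -> float:
    """Energy used in a year (TWh) for a given average draw in GW."""
    return power_gw * HOURS_PER_YEAR * utilization / 1000  # GWh -> TWh


# 2.2 GW is the figure reported for the site; utilization values are assumptions.
print(f"{annual_energy_twh(2.2):.1f} TWh/year at full load")          # about 19.3
print(f"{annual_energy_twh(2.2, 0.7):.1f} TWh/year at 70% utilization")
```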

Amazon calls it Project Rainier, and it will be used by Anthropic to train next-generation models using AWS’ homegrown Trainium chips.

These Trainium chips aren’t individually as powerful as Nvidia’s GPUs, but they use less power and aren’t as hot, so they intend to pack more of them per datacenter and hope to offset raw performance with density and efficiency.

It also helps that Amazon doesn’t have to pay Nvidia’s margins on these, but there’s more risk involved with this approach, and if things go wrong and this training campus isn’t competitive with a capex-equivalent Nvidia-supplied one, it could turn out to have been penny-wise, pound-foolish.

The exact cost of developing the data center complex is not clear. In the tax deal, Amazon promised $11 billion to build 16 buildings, but now it plans to build almost twice that. The total number of buildings is not determined yet and will depend in part on whether the company gets permission. (Source)

Interestingly, Amazon still relies on air cooling while most others are moving to water-cooling because the densest configurations of Nvidia’s Grace Blackwell GPUs used for AI training require it.

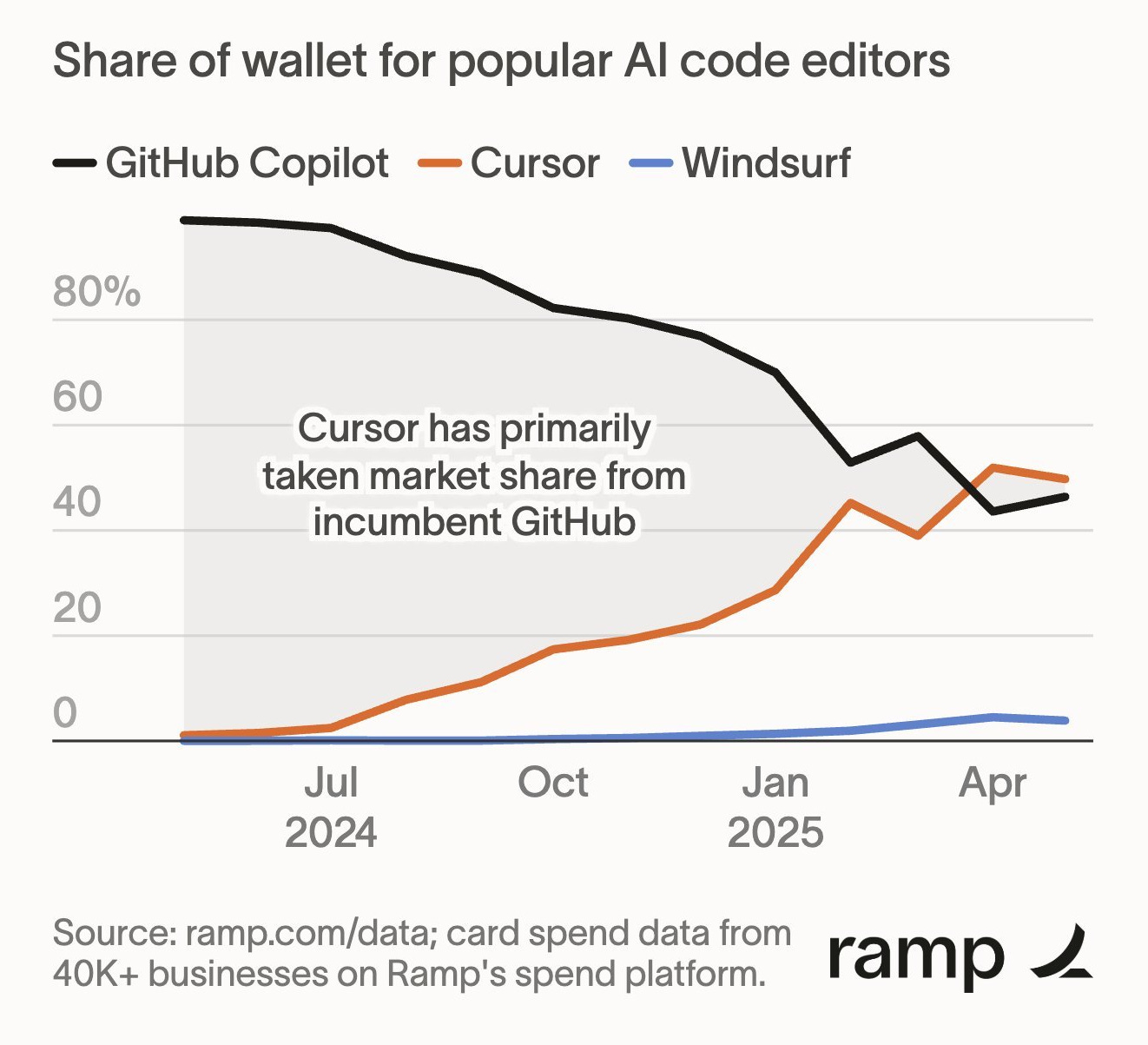

🐇💾 Cursor is Crushing It, Eating GitHub Copilot’s Lunch

After taking an early lead by pioneering a lot of AI coding with Copilot, Microsoft’s GitHub has been rapidly losing share to more nimble upstarts (if this data is correct).

I wonder if regulators would even allow any of the Big Tech companies to buy Cursor 🤔

They kind of all play in that space somehow…

Maybe Cursor will have to stay independent. Maybe they’ll even go public.

What a concept these days!

You hear that, Stripe? SpaceX? Anduril? Databricks?

🎨 🎭 Liberty Studio 👩🎨 🎥

Have you ever seen Stanley Jordan play Eleanor Rigby at the 1986 Newport Jazz Festival? 🎸

You really should.

I bet it’s *not* what you expect.

Give it a try!

Even if you think you know the song… You don’t know this version.

"My own belief is that AI training should be treated legally like human learning."

I understand that argument but it makes me think of surveillance. Back before technology it was a lot more cumbersome to actually analyze the data so the surveillance would be more focused. Now there's zero marginal cost to surveil an individual and I wouldn't be surprised if every government/agency/etc is doing as much as they can here.

I don't have an answer or proposal here and don't know which side I'm on but I do think that lack of friction does make it different.

But to your point, we're in a new world and society/laws need to adapt.

I love your opening post!!

As tech gets more and more embedded in our lives, it seems many aspects of physical embodiment can get ignored and diminished.

Bravo for including this piece!!