586: Schrödinger’s Company, Public Market Ghost Town, Meta + Google & OpenAI, John Malone, DeepSeek, Nvidia vs Huawei, Casey Handmer, Nano Banana, F-35 Crash, and Jurassic Park

"First comes the verdict, then the trial"

Meeting complexity with complexity can create more confusion than it resolves.

–Donald Sull

🤔💭🏆 How much time have you invested in deliberately figuring out what is truly meaningful to you?

It's important enough to deserve some real thinking time, no?

🛀💭 The accessibility of modern AI is one of its most surprising features.

We get used to things very quickly, so the current state of things seems obvious and banal.

But it would’ve been easy 15 or 20 years ago to paint a very different picture of the future. One where cutting-edge AI, requiring billions and billions of dollars of physical infrastructure and large teams of extremely well-paid, cracked researchers, was so expensive and supply-limited that it would be used mostly by large corporations with a clear ROI and by the wealthy. A future where usage was metered and costly, making you think twice before 'wasting' a query on something trivial.

Instead, hundreds of millions of people use it every day without a second thought about how much they use it. And if the multiple free options aren’t quite enough, they can get access to the best models for less than a monthly phone bill.

📲☎️💻📽️🎬🍿 Computing devices are so important that when I watch a film, I always think about when it’s set relative to the technology available to the characters.

My reference point is 2007, the iPhone year. 🗓️

It’s like my personal B.C. and A.D., but Before iPhone and After iPhone.

🦖🦕📕🎧 Perfect timing!

I watched Jurassic Park with my 11-year-old boy a couple of weeks ago. He loved it. I hadn’t seen it in years, but now it’s fresh in my memory, so this episode, which goes deep into the book, is the perfect companion.

Jurassic Park was one of the first ‘adult’ novels I read as a young teen, and I remember it fondly.

I’m halfway through the podcast, and it’s a great way to revisit the book AND contrast it with the film. There’s also a ton of interesting stuff about humanity’s ambivalent views on science and innovation (genetic engineering, in this case).


🏦 💰 Liberty Capital 💳 💴

📈📉 Every Company is Schrödinger’s Company 🐈📦

Humans are storytelling creatures.

Price action drives narrative.

There’s not a single company where market participants couldn’t *instantly* come up with a perfectly plausible-sounding explanation as to why the stock moved +20% or -20%.

Narratives are quantum ⚛︎

First comes the verdict, then the trial 👨‍⚖️⚖️

Take your pick: Berkshire Hathaway, Nvidia, Meta, Google, Microsoft, whatever.

What’s most interesting is that the same attributes will often be cited in both the positive and negative cases, just with a different spin on top of them.

Jensen is incredibly aggressive, that’s why he wins!

Jensen is incredibly aggressive, that’s why he drove over the edge!

Zuckerberg is a founder with unchecked power, that’s why he can invest for the long-term and do painful course corrections!

Zuckerberg is a founder with unchecked power, that’s why he wastes tens of billions of dollars on things with dubious ROI.

Google is an entrenched incumbent deeply embedded in the digital world, that’s why they win.

Google has been an entrenched incumbent for so long that they’ve grown fat and lazy.

This isn't just a thought experiment. Just within the past few years, we’ve seen the narrative pendulum swing back and forth. 2021 on one side, 2022 on the other, and now we’re back closer to 2021.

🏗️💰🚀 The Public Market Ghost Town: We Need More Companies to go Public, and Earlier 👻

BuccoCapital had a good tweet recently that captured something I’ve been thinking about a lot for many years:

There’s something sad about the fact that great companies go public with all the gains captured by private investors

Amazon IPOed at $500m. Nvidia? $50M!

Hard not to think we’ve lost something great about the equalizing force of capitalism over the last 30 years

This trend is corrosive, not just for public market investors who may be missing out on returns, but because it changes the incentives for entrepreneurs and founders.

It’s one fewer path available… well, that path is still there, but going public is clearly a lot more expensive and complex than it used to be.

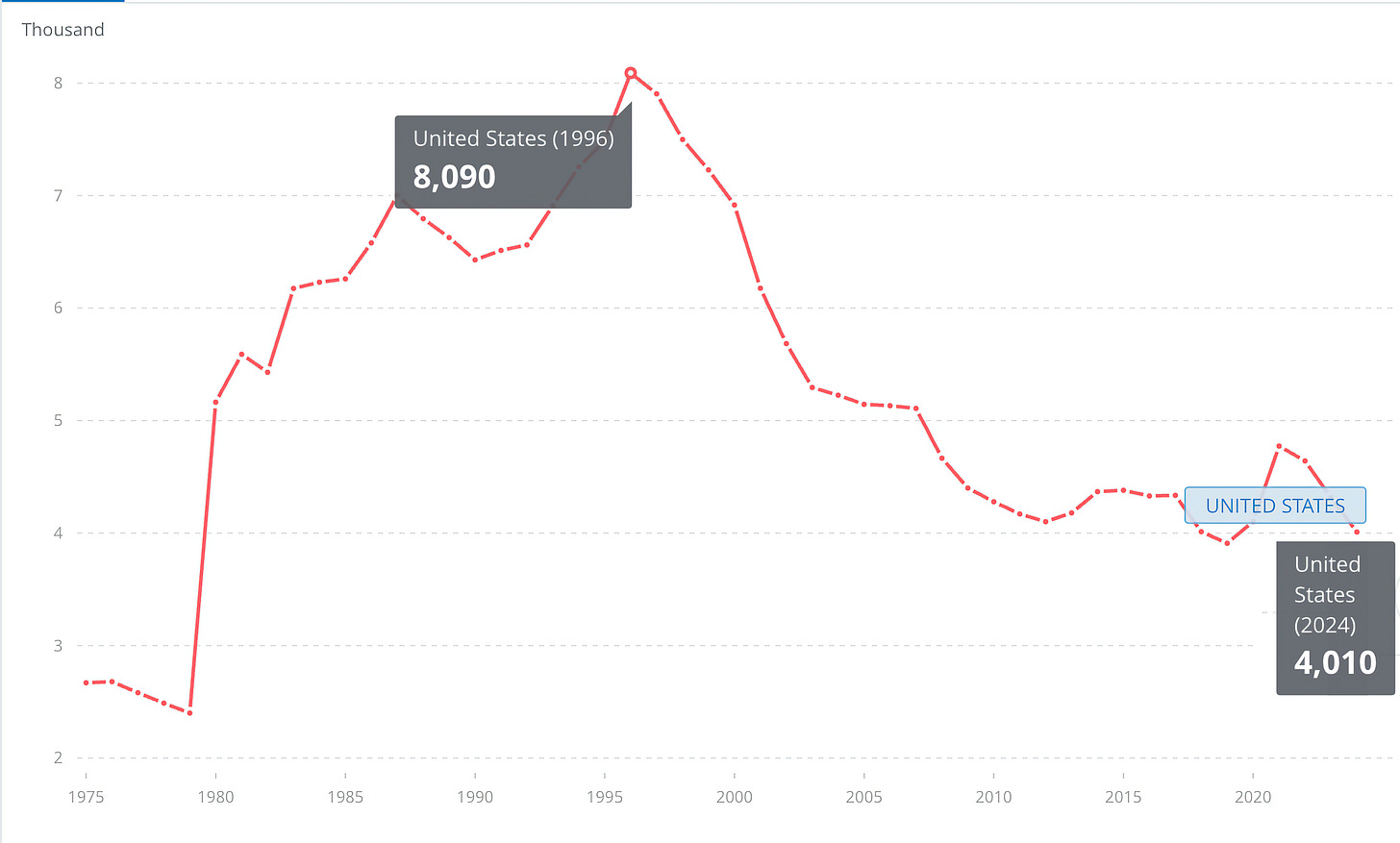

According to World Bank data, the peak for listed companies was in 1996, and despite the brief IPO window in 2021, the trend has been pretty clear:

There are about 5,500 companies that are traded in the U.S., but about 1,500 of them are foreign companies.

There’s a data artifact on the chart that makes the current situation even worse than it may seem: In the late 1970s, the World Bank added NASDAQ companies to its data set when NASDAQ was included in the U.S. national market system. This means that there were more public companies in the late 1970s than today, despite the fact that the U.S. economy is *much* larger than it was in 1980 (adjusted for inflation: about $10 trillion in 1980 and almost $30 trillion today).

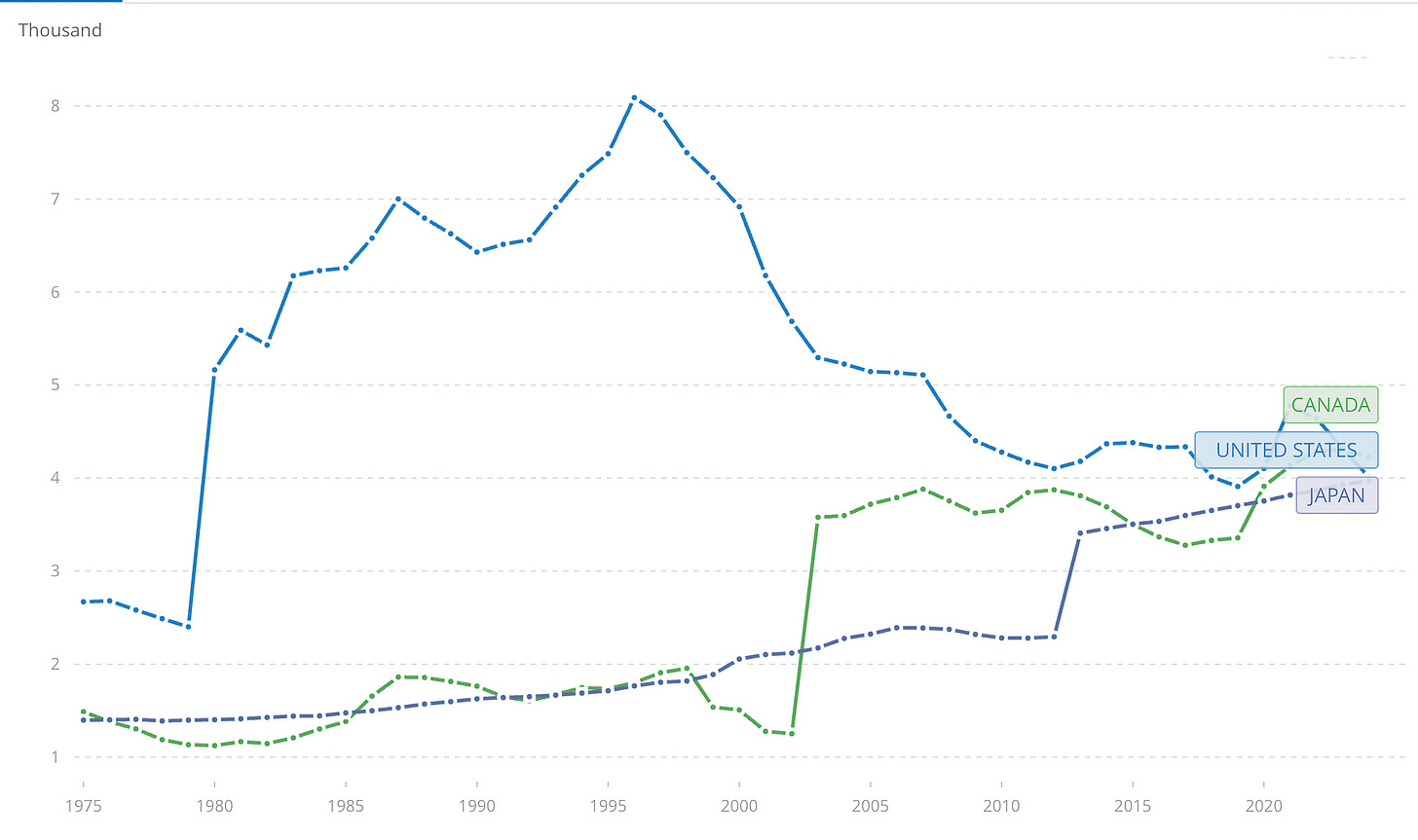

Digging around, I was also a bit surprised to see that Canada and Japan each have almost as many listed companies as the U.S.:

Though of course, median quality is a different story… And I won’t get into how many “companies” trade in China 😬

So what happened? Red tape 📋

Whatever fraud and shenanigans regulations like the Sarbanes-Oxley Act and Dodd-Frank prevented, did they cause more damage by also preventing a bunch of good things for public investors?

Shouldn’t we care about the net impact? ⚖️

Nature abhors a vacuum. Over this period, all kinds of ways to raise capital and get liquidity in private markets flourished, and the most successful companies now wait as long as possible before going public, if they ever do.

In a previous era, SpaceX, Stripe, Databricks, even OpenAI and Anthropic would have long been public. And others that did eventually go public may have gone public earlier (Figma, Uber, Airbnb, Snowflake, etc).

🤖👨‍🔬 Meta’s New AI Leadership is Considering Partnering with Google & OpenAI (for now) 🤝

Keep in mind that there’s a gulf between considering something and doing it, but if this information is correct, it does show how different Meta’s AI posture is now vs what it was just a few months ago:

Leaders in Meta’s new AI organization, Meta Superintelligence Labs, have discussed using Google’s Gemini model to provide conversational, text-based answers to questions that users enter into Meta AI, the social media giant’s main chatbot, a person familiar with the conversations said. Those leaders have also discussed using models by OpenAI to power Meta AI and other AI features in Meta’s social media apps [...]

While a big priority of the lab is making sure its next generation AI model, known as Llama 5, can compete with other top-notch models, that work will take time. Any deals it strikes with outside model makers like OpenAI and Google in the interim are likely to be stopgap measures meant to improve its AI-powered products, until Meta’s own models are significantly improved (Source)

I’m guessing that the way they’re thinking about it is that it doesn’t matter too much if they use someone else’s model as long as they keep users on their platform, forming the habit of going to Meta’s AI.

It’s a lot easier to do that and hot-swap Llama 5 into the product when it’s ready than to try to lure back users who tried the product, found it lacklustre, and went elsewhere, forming a new habit around ChatGPT or Gemini or whatever.

Personally, I don’t know anyone who even mentions Meta’s AI assistants, let alone uses them as their primary chatbot. I’m sure they get more usage in more normie circles.

But I’m skeptical of metrics like weekly or monthly users for these, because I see how hard Meta is working to get me to click through to Threads from Instagram, and I sometimes do. That makes me count as an “active user of Threads” but I don’t really use it much anymore.

I suspect that a lot of Meta AI users are people who click through once in a while, and that the median intensity of usage, and users’ relationship with the product, are nothing like what ChatGPT is seeing.

🚪 Meta AI Lab Sees Multiple Departures and Almost Loses ChatGPT Co-Creator Shengjia Zhao 🤔

Speaking of Meta’s AI lab, it’s impossible to know how things are *really* going, and when you make big changes, you should expect turbulence, and not every new transplant will take and thrive.

But after a few weeks of super-positive headlines about Terminator Zuck and how Meta was poaching a ton of talent from the competition and making it much more expensive for them to retain the rest, the news flow has turned more negative.

For example:

Within days of joining Meta, Shengjia Zhao, co-creator of OpenAI’s ChatGPT, had threatened to quit and return to his former employer, in a blow to Mark Zuckerberg’s multibillion-dollar push to build “personal superintelligence”.

Zhao went as far as to sign employment paperwork to go back to OpenAI. Shortly afterwards, according to four people familiar with the matter, he was given the title of Meta’s new “chief AI scientist”.

There’s more:

Adding to the tumult, a handful of new AI staff have already decided to leave after brief tenures, according to people familiar with the matter.

This includes Ethan Knight, a machine-learning scientist who joined the company weeks ago. Another, Avi Verma, a former OpenAI researcher, went through Meta’s onboarding process but never showed up for his first day, according to a person familiar with the matter.

In a tweet on X on Wednesday, Rishabh Agarwal, a research scientist who started at Meta in April, announced his departure. [...]

Meanwhile, Chaya Nayak and Loredana Crisan, generative AI staffers who had worked at Meta for nine and 10 years respectively, are among the more than half a dozen veteran employees to announce they are leaving in recent days. (Source)

There have been a few more (Afroz Mohiuddin, Rohan Varma, Bert Maher, etc).

It’s not a hugely significant number for what is still a big organization, but seeing this does raise my prior a little that something may be wrong with the culture inside Meta’s AI lab, and that it may take some time to fix.

Last week, Meta “temporarily [paused] hiring across all [Meta Superintelligence Labs] teams, with the exception of business critical roles”. This may be a sign that they need to digest the changes and have some time to integrate new hires and form a new culture.

One theory I have, and I’ve shared it with you before, is the SuperGroup effect. Just because people did great work elsewhere doesn’t mean that, once you bring them together, they’ll gel and keep doing great work. Talented people are necessary, but you need them to be able to work productively together, and many things can impede that.

For all its own faults, Anthropic appears to have cracked this part of the code. It reportedly has the lowest employee churn in the industry, and more of the people who leave eventually come back, suggesting a strong culture.

🤠 John Malone’s Memoir 📖

I have to admit, I would’ve been a lot more excited about this book if it had come out 10-15 years ago.

Malone’s star arguably peaked around the time William Thorndike’s ‘The Outsiders’ came out (2012), and since then his playbook of gaining scale through M&A and doing levered buybacks on media and cable assets hasn’t really worked the way it did historically.

But hey, I don’t just want to learn from what worked. I also want to learn from what didn’t.

Mark Robichaux’s ‘Cable Cowboy’ is a good book, but it came out in 2002 and didn’t cover Malone’s early days much. I’ll be curious to see what Malone has to say about his training at Bell Labs and the big deals post-2002.

If you’re curious about the new memoir, there’s a 1-chapter excerpt here.

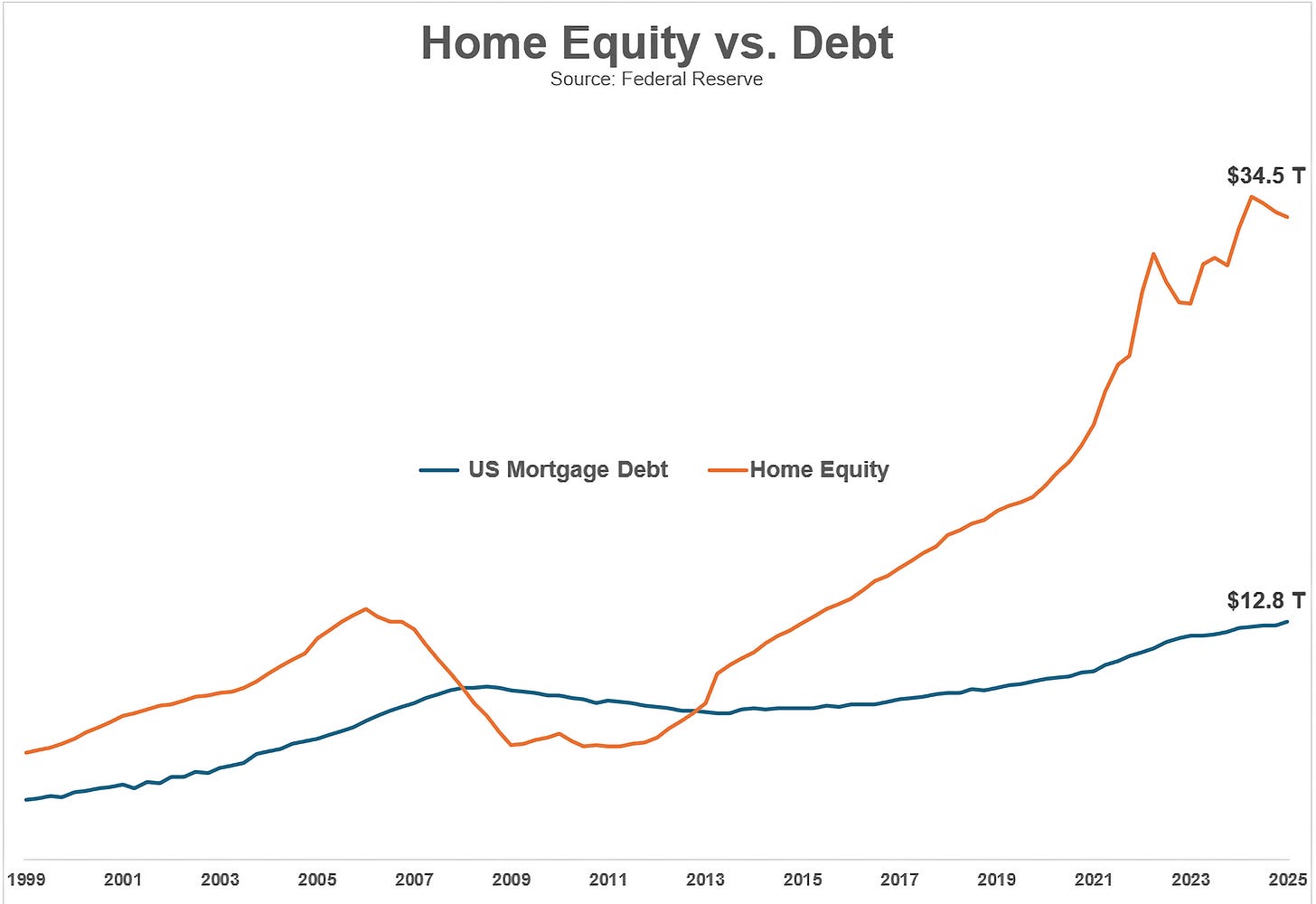

🏡 U.S. Home Equity vs Debt since 1999 🏚️

Great graph by Ben Carlson. What a rebound since the GFC.

🇨🇳 Replacing Nvidia Isn’t Easy, DeepSeek Edition 🤖🔨

While China’s homegrown semi ecosystem is making progress, things are not going entirely smoothly:

Chinese artificial intelligence company DeepSeek delayed the release of its new model after failing to train it using Huawei’s chips

After the planetary success of its model, they were “encouraged” by the authorities to use Chinese chips…

But the Chinese start-up encountered persistent technical issues during its R2 training process using Ascend chips, prompting it to use Nvidia chips for training and Huawei’s for inference, said the people. [...]

Huawei sent a team of engineers to DeepSeek’s office to help the company use its AI chip to develop the R2 model, according to two people. Yet despite having the team on site, DeepSeek could not conduct a successful training run on the Ascend chip, said the people. (Source)

Other reports say that DeepSeek will go with a hybrid approach, using Ascend chips to train smaller models and for inference, while using Nvidia GPUs for the bigger training runs.

Considering how fast things are moving, this delay for R2 is a pretty big setback.

When R1 first made a big splash, I wrote about the potential danger to DeepSeek of getting all this attention, because it meant they were now on the radar of politicians and may not be as nimble as they were before.

The mandate to use Huawei chips may be an example of exactly this effect.

Now, it doesn’t mean that these issues won’t be solved, but for now, DeepSeek is likely behind where it would be if it could’ve just stayed fully on the Nvidia stack.

🧪🔬 Liberty Labs 🧬 🔭

🗣️ Interview: Casey Handmer on Building the Future 🏗️🏗️🚀

If ambition is the world's scarcest asset, consider this interview a necessary supplement 💊

I don’t know how much of what he’s working on will ultimately work out, but he’s one of the few people thinking about how to improve our civilization at the right scale.

🍌 Google’s Nano Banana Image Model is Incredible 🎨

Google has been *cooking* lately.

Last week I wrote about Genie 3. Another very impressive release is an image generation-and-editing model that excels at following instructions and keeping characters and scenes consistent.

The example above shows its power: the model was given separate images and asked to combine them into a single, coherent scene. The result is something no other model could pull off this well!

Nano Banana debuted at #1 on the LMArena leaderboard 🥇 for Image Edit with the largest score jump in history over the previous leader (1362 vs 1191 for Flux-1-Kontext-Max).
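To get a feel for what a 171-point lead means, here’s a back-of-the-envelope sketch. It assumes the arena scores follow the standard Elo convention (base 10, 400-point scale), under which a score gap maps to an expected head-to-head win rate. The function name is hypothetical, and the real leaderboard fits its own statistical model with confidence intervals, so treat this as a rough approximation, not the leaderboard’s method.

```python
def expected_win_rate(score_a: float, score_b: float) -> float:
    """Expected share of blind head-to-head votes won by model A over model B.

    Assumption: standard Elo scaling (base 10, 400-point divisor).
    """
    return 1.0 / (1.0 + 10 ** ((score_b - score_a) / 400))


if __name__ == "__main__":
    nano_banana, previous_leader = 1362, 1191  # Image Edit scores cited above
    gap = nano_banana - previous_leader
    rate = expected_win_rate(nano_banana, previous_leader)
    print(f"Gap: {gap} points -> expected win rate of about {rate:.0%}")
```

Under that assumption, the gap works out to voters preferring Nano Banana’s edit roughly 73% of the time in a blind matchup against the previous leader.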

I’ve been playing with it a bit myself, and so far I’m very impressed.

I even like that they leaned into the codename. It shows playfulness. It’s the name they used when anonymously testing the model on LMArena, but I think they kept it because people were so impressed by the model that the codename became a brand of its own.

This kind of leap forward should make Adobe shareholders incrementally more nervous. I’m not saying cashflows will dry up overnight or anything, but it’s a step change in the utility of genAI for images.

I’m also hearing rumors that Gemini 3 may come out later this week 🤞

☎️ F-35 pilot held 50-minute airborne conference call with Lockheed engineers before $200m fighter jet crashed💥

What a headline!

Talk about having a hard time fully concentrating on a conference call…

A US Air Force F-35 pilot spent 50 minutes on an airborne conference call with Lockheed Martin engineers trying to solve a problem with his fighter jet before he ejected and the plane plunged to the ground in Alaska earlier this year, an accident report released this week says. [...]

The pilot ejected safely, suffering only minor injuries, but the $200 million fighter jet was destroyed.

The trouble began when the F-35’s landing gear wouldn't retract properly.

After trying to lower it again, the nose wheel got stuck at an angle. In an attempt to fix it, the pilot tried two "touch and go" landings.

Then things went from bad to catastrophic…

At that point, the F-35’s sensors indicated it was on the ground and the jet’s computer systems transitioned to “automated ground-operation mode,” the report said.

This caused the fighter jet to become “uncontrollable” because it was “operat(ing) as though it was on the ground when flying,” forcing the pilot to eject.

Yikes!

So what caused this cascade of failures?

An inspection of the aircraft’s wreckage found that about one-third of the fluid in the hydraulic systems in both the nose and right main landing gears was water, when there should have been none.

The investigation found a similar hydraulic icing problem in another F-35 at the same base during a flight nine days after the crash, but that aircraft was able to land without incident.

❄️

🎨 🎭 Liberty Studio 👩‍🎨 🎥

🏦 ‘Inside Man’ (2006, Spike Lee, Denzel Washington) 😷

>NO< spoilers below

I had somehow missed this one when it came out.

I don’t remember the context, but Jim O’Shaughnessy (💚 🥃🎩) recommended it to me, so I knew I had to check it out (we have pretty similar tastes).

It did not disappoint!

Instantly one of my fave heist films. In fact, I had been meaning to rewatch Michael Mann’s ‘Heat’, and this made me bump it up the list.

If you’ve never seen it and like the genre, or if you haven’t seen it in a long time, I recommend it 👍 👍

Trivia: At first, Ron Howard was going to direct it. I wish I could see what that version would’ve been like!
