588: Google's AI Money Machine, OpenAI + Broadcom, AWS + Anthropic XPUs, Nvidia H20, Europe's A/C Deaths, El Segundo Roundtable, Veo 3, Dan Wang, and Construction Roomba

"the survivorship filter of thousands of years"

If you scratch a cynic, you'll find a disappointed idealist.

—George Carlin

😎⛱️👙🍹🍇🍝🏰vs🏚️🏭 🛒🚗🛣️🍔 Here’s one I see often, and many people are blind to it.

Comparing peak vs average.

Or maybe call it the Instagram highlight-reel fallacy 😎🤳

Say someone lives in a basic, average neighborhood in America. They look around and see big box stores with vast parking lots, cookie-cutter suburban homes, billboards with ads for plumbers, and traffic on the highway.

Then they go to Europe on vacation… but they don’t pick their destination at random. They go to the most beautiful parts of the region, take the scenic routes, eat in good restaurants, and generally have a curated experience that may cost their family $10-20k, far more per day than they spend in their normal life at home.

Later, they see some statistic about how Europeans are poorer than Americans and that the continent has all kinds of troubles, and they say something like:

“It doesn’t matter, Europe is so much nicer! Quality of life is so much better! Who needs money when you live in such a paradise? I wish I could retire over there, every time I go it’s so nice…” or whatever.

What comes to their mind when they think “Europe” is cherry-picked, not the typical daily reality of someone living there who doesn’t have as much money to spend per day as a tourist.

Don’t get me wrong: This doesn’t mean that Europe doesn’t have plenty of charms, or that the survivorship filter of thousands of years of building things and history doesn’t produce more beauty than in a country where most structures were built in the past 50-75 years, or that some people wouldn’t prefer the trade-offs that have been made there.

But it’s still not a fair comparison, just like the reverse wouldn’t be if we compared the median French banlieue to the most beautiful districts of the U.S.

The same bias seems to happen a lot with China too.

People visit Shanghai and come back with a certain impression. But they often forget that *hundreds of millions* of Chinese citizens live in parts of the country that are very different.

Between Beijing and the rural parts of Gansu province (GDP/capita similar to Jamaica and Botswana), there’s a world of difference, just like there is between rural Mississippi and Martha’s Vineyard.

🔎📫💚 🥃 Exploration-as-a-service: your next favorite thing is out there, you just haven’t found it yet 👇

🏦 💰 Liberty Capital 💳 💴

☁️→💰 Thomas Kurian: Google’s AI Money Machine

First with a little shade:

if you compare us to other hyperscalers, we are the only hyperscaler that offers our own systems and our own models, and we're not just reselling other people's stuff.

Is AI making money yet? 💵

we've made billions using AI already. We're growing revenue while bringing operating discipline and efficiency.

So our remaining performance obligation or backlog is sometimes referred to, is now at $106 billion. It is growing faster than our revenue. More than 50% of it will convert to revenue over the next 2 years. So not only are we growing revenue, but we're also growing our remaining performance obligation.
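Here is a quick back-of-envelope on Kurian's numbers. It treats "more than 50%" as a floor. The per-year figure also assumes the conversion is spread evenly across the two years, which is my simplification and not Google's guidance:

```python
# Back-of-envelope on the backlog figures quoted above.
rpo_billion = 106.0          # remaining performance obligation (backlog), $B
min_share_2y = 0.50          # "more than 50%" converts within ~2 years, so treat as a floor

min_revenue_2y = rpo_billion * min_share_2y      # floor on backlog converting within 2 years
per_year_if_even = min_revenue_2y / 2            # assumption: conversion spread evenly

print(f"> ${min_revenue_2y:.0f}B of backlog converts to revenue within ~2 years")
print(f"≈ ${per_year_if_even:.1f}B+ per year if spread evenly")
```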

That sounds like a lot until you look at Oracle 😅

But I can’t help but feel like there’s something strange going on with Oracle. I need to dig into it and think some more about it 🤔

Back to Google: how do they monetize?

The 5 ways we monetize AI.

Some people pay us for some of our products by consumption. So if you use our AI infrastructure, whether it's a GPU, TPU or use our model, you pay by token, meaning you pay by what you use. Some of our products, people pay for by subscription. You pay a per user per month fee, for example, Agentspace or workspace.

Some monetization comes by increased product usage. So if you use our cybersecurity agent and you run threat analysis using AI, we've seen huge growth in that. Example, we're over 1.5 billion threat hunts, we call it, using Gemini, and that drives more usage of our security platform. Similarly, we see growth in our data cloud.

We also monetize some of our products through value-based pricing. For example, some people use our customer service system, say, "I want to pay for it by deflection rates that you deliver." Some people use our creative tools to create content, say, "I want to pay based on what conversion I'm seeing in my advertising system." And then finally, we also upsell people as they use more of it from one version to another because we have higher quality models, more quota and other things in higher-priced tiers.

To be honest, his “5 ways” kind of boil down to three (consumption, subscription, and outcome-based), but it’s interesting to see how many different business models they deploy at the same time. It’s a good way to experiment and find out what works for each product/service.

On how fast Gemini is ramping up:

compared to 1.5, which we launched in January of this year, 2.5, our latest model, reached 1 trillion tokens 20x as fast. So we're seeing large-scale adoption of Gemini by developer community.

To be fair, Gemini 2.5 generates a lot more tokens per query on average because of reasoning, so part of that 20x has to be from this algorithmic change rather than purely from higher end-user usage. But it’s still a lot of tokens! 🚀
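One way to think about that 20x: token volume is roughly queries × tokens per query, so a growth multiple in tokens splits into those two factors. The sketch below makes two assumptions:

- "Reached 1 trillion tokens 20x as fast" is treated as roughly a 20x higher token rate, which is a simplification.
- The tokens-per-query multipliers are hypothetical, since Google didn't say how much longer 2.5's reasoning responses are.

```python
def usage_growth(total_token_growth: float, tokens_per_query_growth: float) -> float:
    """Implied growth in query volume, since tokens = queries * tokens_per_query."""
    return total_token_growth / tokens_per_query_growth

# Hypothetical multipliers for how much longer (in tokens) the average query got with reasoning.
for k in (1, 2, 4, 8):
    print(f"tokens/query x{k}: implied query growth ≈ x{usage_growth(20, k):.1f}")
```

If reasoning made the average query 4x longer in tokens, for example, the same 20x ramp would imply about 5x growth in actual queries.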

On the impact of AI on usage:

Those customers that use our AI tools typically end up using more of our products.

For example, they use our data platform or our security tools. And on average, those that use our AI products use 1.5x as many products than those that are not yet using our AI tools. And that leads then customers who sign a commitment or a contract to over-attain it, meaning they spend more than they contracted for, which drives more revenue growth.

The big question here is causality vs. correlation. Are the AI tools causing users to explore more products and use them more, or are the most engaged "power users" simply the first to adopt AI features, self-selecting into it?

But I’m sure they can tease out what happens to user cohorts after they start using the AI-enhanced products 🤔
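A standard way to tease this out is a cohort comparison such as difference-in-differences. You compare how much adopters' product usage changed after they started using AI tools with how much a similar non-adopter group changed over the same window.

The numbers below are made up purely to show the mechanics; they are not Google data. The method also assumes both groups would have trended in parallel without the AI tools:

```python
# Hypothetical cohort data: products used per customer, before/after the adopters turned on AI tools.
adopters     = {"before": 3.0, "after": 4.2}   # customers who started using AI tools
non_adopters = {"before": 2.4, "after": 2.7}   # comparable customers who did not, same period

def diff_in_diff(treated: dict, control: dict) -> float:
    """Change for the treated group minus change for the control group over the same window."""
    return (treated["after"] - treated["before"]) - (control["after"] - control["before"])

# The raw after-period ratio mixes self-selection with any real effect...
print("after-period ratio:", round(adopters["after"] / non_adopters["after"], 2))
# ...while diff-in-diff nets out the pre-existing gap and the common trend.
print("estimated effect:", round(diff_in_diff(adopters, non_adopters), 2), "products")
```

In this toy data, adopters use about 1.56x as many products as non-adopters after adoption. They were already ahead beforehand (3.0 vs 2.4), so the diff-in-diff credits the AI tools with about 0.9 extra products rather than the full 1.5-product gap.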

🤖🤝🐜 OpenAI's Silicon Gambit: TPU-like ASICs with Broadcom

Here’s Hock Tan, the CEO of Broadcom, during the last quarterly call:

Now let me give you more color on our XPU business, which accelerated to 65% of our AI revenue this quarter. Demand for custom AI accelerators from our 3 customers continue to grow as each of them journeys at their own pace towards compute self-sufficiency. And progressively, we continue to gain share with these customers.

Now further to these 3 customers, as we had previously mentioned, we have been working with other prospects on their own AI accelerators. Last quarter, one of these prospects released production orders to Broadcom, and we have accordingly characterized them as a qualified customer for XPUs and, in fact, have secured over $10 billion of orders of AI racks based on our XPUs. And reflecting this, we now expect the outlook for our fiscal 2026 AI revenue to improve significantly from what we had indicated last quarter.

This is reported to be OpenAI, joining Google, Amazon, and Meta as Broadcom XPU customers.

From OpenAI’s point of view, there are multiple ways to win with this.

They are compute constrained, and Nvidia is supply constrained, so having their own chips would be additive. But it also gives them better leverage when negotiating with Nvidia and hyperscalers for compute.

BUT

These ASIC projects don’t always work out. Any delays or issues can be the difference between a useful chip and a useless piece of silicon that has a higher total cost of ownership than Nvidia’s latest and greatest. In fact, the equation must account for not just TCO but also datacenter square footage constraints and energy bottlenecks.

Even if the TCO is lower than Nvidia’s on paper, if the compute density is also lower, it may make sense to go with Nvidia’s denser compute to fit within the datacenter space ‘budget’. Same for energy: if you only have 1 gigawatt of power available, you’ll want the most compute per watt, even if the upfront cost is a bit higher.
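Here's a minimal sketch of the power-budget point, using hypothetical chip specs (not real Nvidia or ASIC numbers). The ASIC is cheaper per unit of compute, but the GPU delivers more compute per watt. Inside a fixed 1 GW envelope, the GPU fleet therefore ends up with more total compute:

```python
from dataclasses import dataclass

@dataclass
class Accelerator:
    name: str
    pflops: float      # delivered compute per chip (hypothetical)
    watts: float       # all-in power per chip, incl. a share of cooling/networking (hypothetical)
    cost_usd: float    # all-in cost per chip, a stand-in for TCO (hypothetical)

def fleet_under_power_cap(chip: Accelerator, power_budget_w: float):
    """Return (chip count, total PFLOPS, total cost) for as many chips as fit in the power budget."""
    n = int(power_budget_w // chip.watts)
    return n, n * chip.pflops, n * chip.cost_usd

# Made-up specs chosen only to illustrate the trade-off.
asic = Accelerator("custom ASIC", pflops=1.0, watts=1_000, cost_usd=15_000)
gpu = Accelerator("merchant GPU", pflops=2.5, watts=1_800, cost_usd=45_000)

budget_w = 1e9  # 1 GW site
for chip in (asic, gpu):
    n, compute, cost = fleet_under_power_cap(chip, budget_w)
    print(f"{chip.name}: {n:,} chips, {compute:,.0f} PFLOPS, "
          f"${cost / 1e9:.1f}B, ${cost / compute:,.0f} per PFLOPS")
```

With these made-up numbers, the ASIC costs about $15k per PFLOPS vs $18k for the GPU. Yet the 1 GW site gets about 1.39M PFLOPS from GPUs vs 1.0M from ASICs. A floor-space cap could be modeled the same way by swapping watts for rack units.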

What favors ASICs is that they’re more specialized than Nvidia’s GPUs, so for a company’s specific workloads they can be particularly efficient. This is a double-edged sword if a big architectural breakthrough in AI models requires a different balance than the one the ASIC was originally designed for (e.g., more memory, more networking bandwidth, etc.).

This is where OpenAI has a key advantage: being vertically integrated on both the models and the ASIC, they have a better idea of what is in the pipeline and can tune the chip design for it.

When a hyperscaler that *doesn’t* own a frontier lab makes an ASIC, it is more likely to design something that isn’t quite right for what has come out by the time the chip is taped out and fabbed. (This is what the next story is also about 👇)

Maybe at some other time I’ll get into Broadcom's Tomahawk Ethernet switches vs Nvidia's NVLink interconnect. That’s another battlefield.

AWS and Anthropic’s Trainium XPUs Symbiosis 🔬🐜👨🔬

SemiAnalysis has a good piece about Amazon’s evolving positioning for AI and what they expect will be their coming resurgence.

A key part of it is working closely with Anthropic to design custom silicon:

Trainium2 lags Nvidia’s systems in many ways, but it was pivotal to the multi-gigawatt AWS/Anthropic deal. Its memory bandwidth per TCO advantage perfectly fits into Anthropic’s aggressive Reinforcement Learning roadmap. Dario Amodei’s startup was heavily involved in the design process, and its influence on the Trainium roadmap only grows from here.

Put plainly: Trainium2 is converging toward an Anthropic custom-silicon program. This will enable Anthropic to be, alongside Google DeepMind, the only AI labs benefiting from tight hardware–software co-design in the near horizon. [...]

While Nvidia’s chips and systems are better on most fronts, Trainium2 fits perfectly into Anthropic’s roadmap. They’re the most aggressive AI Lab on scaling post-training techniques like Reinforcement Learning. Their roadmap is more memory-bandwidth-bound than FLOPs bound.
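The "memory-bandwidth-bound vs FLOPs-bound" distinction in that excerpt is usually reasoned about with a roofline model. Attainable throughput is the lesser of two ceilings: peak compute, and memory bandwidth × arithmetic intensity (FLOPs per byte moved).

Here's a sketch with two hypothetical chips, one FLOPs-heavy and one with more bandwidth per dollar. Neither is meant to represent Trainium2 or any Nvidia part:

```python
def attainable_tflops(peak_tflops: float, mem_bw_tbps: float, intensity: float) -> float:
    """Roofline: throughput is capped by peak compute or by bandwidth * FLOPs-per-byte."""
    return min(peak_tflops, mem_bw_tbps * intensity)  # TB/s * FLOP/B = TFLOP/s

# Hypothetical chips (specs and prices are made up for illustration).
chips = {
    "FLOPs-heavy":     {"peak_tflops": 2500.0, "mem_bw_tbps": 8.0, "price": 40_000},
    "bandwidth-heavy": {"peak_tflops": 1000.0, "mem_bw_tbps": 6.0, "price": 25_000},
}

# Low intensity ~ token-by-token generation; high intensity ~ large-batch matmuls.
for intensity in (50.0, 500.0):
    for name, c in chips.items():
        perf = attainable_tflops(c["peak_tflops"], c["mem_bw_tbps"], intensity)
        print(f"I={intensity:>5.0f} FLOP/B  {name:<15} {perf:7.0f} TFLOP/s  "
              f"{1000 * perf / c['price']:.1f} TFLOP/s per $1k")
```

At low intensity, both chips are bandwidth-limited and the bandwidth-heavy one wins per dollar (12 vs 10 TFLOP/s per $1k). At high intensity, the FLOPs-heavy chip pulls ahead (62.5 vs 40). A roadmap dominated by generation-heavy work, such as RL rollouts, roughly lives on the left side of that curve.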

The SemiAnalysis folks are very bullish on the Anthropic and AWS partnership, largely thanks to the surging demand for AI for coding and Anthropic’s leadership there (either directly through Claude Code or via Cursor and other AI IDEs).

We think the outlook is bright for Anthropic. Their models are state of the art. Claude Opus 4.1 leads on coding-related benchmarks like SWE-Bench, and we expect more updates before the end of 2025 to further improve the model through RL. The launch of Amazon’s large-scale training clusters towards the end of 2025 bodes well for Anthropic’s progress in 2026.

On the win-win symbiosis with Amazon:

More important to AWS’ XPU business growth is the ability to secure anchor customers – the market-makers in this first wave of GenAI demand. Scale, time-to-market, deep partnerships, and pricing are key to winning these accounts, more so than advanced software layers.

No firm better illustrates this than Microsoft. Azure’s AI outperformance over peers is entirely driven by its OpenAI partnership. As of Q2 2025 (June 2025), all of OpenAI’s >$10B cloud spending is booked by Azure.

Amazon understood early on the need for an anchor customer and invested $1.25B, expandable to $4B in Anthropic in September 2023. The partnership expanded in March 2024 with Anthropic committing to use Trainium and Inferentia chips. In November 2024, Amazon invested an additional $4B into Anthropic, with the latter naming AWS as its primary LLM training partner.

More here (🔐)

🇨🇳✍️ Nvidia’s China Licenses Limbo

Here’s Nvidia’s CFO at the Goldman conf:

Colette Kress: Yes, we did receive a license approval and have received licenses for several of our key customers in China. And we do want that opportunity to complete that and actually ship the H20 architecture to them.

Right now, there is still a little geopolitical situation that we need to work through between the two governments. Our customers in China do want to make sure that China government is also very well received in terms of receiving the H20 to them. But we do believe there is a strong possibility that this will occur.

And so it could add additional revenue. It's still hard to determine how much within the quarter. We talked about it being about a $2 billion to $5 billion potential opportunity if we can get through that geopolitical statement.

What a mess. They also need to figure out the legality of sending 15% to the U.S. government, where to send it, and how to do the paperwork on that one. Just that may hold things up… ¯\_(ツ)_/¯
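For scale, here's what the reported 15% arrangement would mean on Kress's $2-5B range. This assumes (my assumption) that the 15% applies to the full gross amount:

```python
def split_h20_revenue(gross_usd: float, gov_share: float = 0.15) -> tuple[float, float]:
    """Return (payment to the U.S. government, amount Nvidia keeps), assuming a flat share of gross."""
    cut = gross_usd * gov_share
    return cut, gross_usd - cut

for gross in (2e9, 5e9):  # Kress's "$2 billion to $5 billion" range
    cut, keep = split_h20_revenue(gross)
    print(f"gross ${gross / 1e9:.0f}B -> U.S. gov ${cut / 1e9:.2f}B, Nvidia keeps ${keep / 1e9:.2f}B")
```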

🇪🇺🥵 Europe’s Crusade against Air Conditioning has a Real Human Cost 💀💀💀💀💀

Data sources differ, but nobody puts AC usage in Europe (or the UK) at more than around 20%. [...]

With this rise in temperature — and the aging of the European population — has come a rise in preventable death. Estimates of heat-related mortality vary, but the most commonly cited number is 175,000 annually across the entire region. Given that Europe has a population of about 745 million, this is a death rate of about 23.5 per 100,000 people per year. For comparison, the U.S. death rate from firearms is about 13.7 per 100,000.

So the death rate from heat in Europe is almost twice the death rate from guns in America. If you think guns are an emergency in the U.S., you should think that heat in Europe is an even bigger emergency.

Those that suffer most are, of course, the most vulnerable, the poorest, the elderly, the sick…

Those who craft the laws and regulations that make it difficult or impossible to get A/C probably do so from beautiful villas by the sea or in the mountains…

Note: The 175k number cited by Noah is contested. Other sources put it closer to 50,000-70,000/year in recent times. Even with those lower numbers, that’s still a lot of people dying from easily preventable causes.
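The per-capita math is easy to check. It also shows how much the comparison hinges on which estimate you use, because the rate scales linearly with the death count. Population and firearm figures are as quoted above:

```python
def rate_per_100k(deaths: float, population: float) -> float:
    """Annual deaths per 100,000 people."""
    return deaths / population * 100_000

EUROPE_POP = 745e6        # population figure used in the excerpt
US_FIREARM_RATE = 13.7    # U.S. firearm deaths per 100k, as cited in the excerpt

for label, deaths in [("commonly cited", 175_000), ("lower estimate", 50_000), ("upper lower-range", 70_000)]:
    r = rate_per_100k(deaths, EUROPE_POP)
    print(f"{label:>18}: {r:5.1f} per 100k ({r / US_FIREARM_RATE:.2f}x the U.S. firearm rate)")
```

At 175k, the heat rate is about 23.5 per 100k, roughly 1.7x the U.S. firearm rate. At 50-70k, it's roughly 6.7-9.4 per 100k.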

🗣️🇨🇳 Interview: Dan Wang on China’s Development Model

h/t friend-of-the-show ‘Jesse Livermore’

🧪🔬 Liberty Labs 🧬 🔭

🏭🏗️🏭🏗️ El Segundo Roundtable: Bringing an Atoms Renaissance to the U.S. 🏭🏗️🏭🏗️🏭🏗️🚀

This was a really fun overview of what is going on in El Segundo in L.A., while doubling as a group therapy session for a new generation of founders chewing glass to bring more hard tech and advanced manufacturing back to the US.

Ti Morse did a great job putting this together 💚 🥃

The discussion featured founders working on drones, advanced manufacturing/casting of metal alloy parts, nuclear power, cloud seeding, mining/drilling tech, etc.

It’s very inspiring, and I hope their example encourages other entrepreneurs and founders to go do things in the physical world rather than build yet more software.

The marginal impact of a talented person creating a startup for drones or mining is much higher than it is for another B2B SaaS company.

📸 Google Puts Veo 3 in Photos app, Slashes API Cost ✂️

Get ready for a flood of AI-generated short videos:

Bringing your memories to life is at the heart of Google Photos. With Photo to video, you can already turn your images into fun, short clips, and starting today we’re enhancing that feature with our state-of-the-art video generation model, Veo 3. This means you can now turn your still images into even higher-quality clips.

This is only available in the U.S. for now, but this is still a huge rollout for a feature that *has* to be very computationally intensive. This is a flex of Google's infrastructure muscle.

Devs and third parties are also getting goodies from Google:

Veo 3: Now $0.40 / second; was $0.75 / second

Veo 3 Fast: Now $0.15 / second; was $0.40 / second

You can also generate Veo 3 and Veo 3 Fast videos in 1080p HD

Veo 3 and Veo 3 Fast are now out of beta and considered ‘stable’
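Here's what the new prices mean per clip. The clip length is fixed at 8 seconds purely for illustration; that length is my assumption, not part of the announcement:

```python
# Per-second API prices quoted above (USD): (old, new)
PRICES = {"Veo 3": (0.75, 0.40), "Veo 3 Fast": (0.40, 0.15)}
CLIP_SECONDS = 8  # assumed clip length, for illustration only

def clip_cost(price_per_second: float, seconds: float) -> float:
    """Cost of one clip at a flat per-second price."""
    return price_per_second * seconds

for model, (old, new) in PRICES.items():
    cut = 1 - new / old  # fractional price reduction
    print(f"{model}: ${clip_cost(old, CLIP_SECONDS):.2f} -> ${clip_cost(new, CLIP_SECONDS):.2f} "
          f"per {CLIP_SECONDS}s clip ({cut:.0%} cheaper)")
```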

I can’t wait to play with it some more. I’ve done maybe a couple dozen videos so far, but at some point I want to go really deep on it.

🏗️👷🚧 Roomba-like Robot Prints Layout on Concrete at Construction Sites 🤖

This is brilliant!

Here’s what one of the engineers working on it had to say on Twitter:

Layout is typically a very manual process requiring a skilled worker to snap lines on hands and knees. With this robot, they now supervise the robot while it does the drudgery of accurately putting the lines down. Other information, such as QR codes and detailed notes, can be put down as well.

It doesn't really replace a worker, but it does give them a tool to work faster, more accurately, and more efficiently. Think of it as providing a medieval monk transcribing documents, a word processor, and a laser printer.

The robot excels at printing out points on the ground for overhead hangers for wiring, lighting, plumbing, and HVAC. It's particularly useful when it does a multi-trade layout. Mistakes and conflicts pop out to the tradespeople walking the areas before anything is put up.

The accuracy and capabilities of the robot have evolved greatly over the last five years. Today, you can have the robot print a ruler 100' long and lay down a tape measure next to it and the inch marks will be spot on along the entire line. Another engineer and I worked very hard on that capability.

The robot can print in different colors, different line styles, and lines with text details such as "update" on them. The end user can select a number of different ways to indicate updates in the field if they have to be made. Also, the user can choose to use an ink that has durability similar to chalk, so it can be washed off if absolutely necessary.

Very cool.

The company is Dusty Robotics, it’s a venture-backed private company.

Anything that can help with the construction industry’s efficiency/quality/speed has *massive leverage* on making the world better.

While manufacturing productivity has exploded over the past few decades, construction has stagnated. We desperately need more founders bringing this kind of innovation to the physical world (like the Gundo crew above).

🏴☠️ Investigation: How GPUs are Smuggled to China 🇨🇳

Ok, this is crazy, but I like this kind of crazy. This video is 3 hours long, and Steve Burke’s tenacity deserves all kinds of kudos.

The smuggling goes from individual RTX 4090s smuggled via fake pregnancy bumps 🤰 or packed with live lobsters 🦞 to entire Grace Blackwell racks shipped from Taiwan.

There’s also the irony that Chinese factories assemble the devices they're prohibited from having, using components that come from dozens of Chinese suppliers.

To be clear, I can’t verify all the claims made in the video, so take it with a grain of salt, and things could be changing rapidly… but it seems highly plausible.

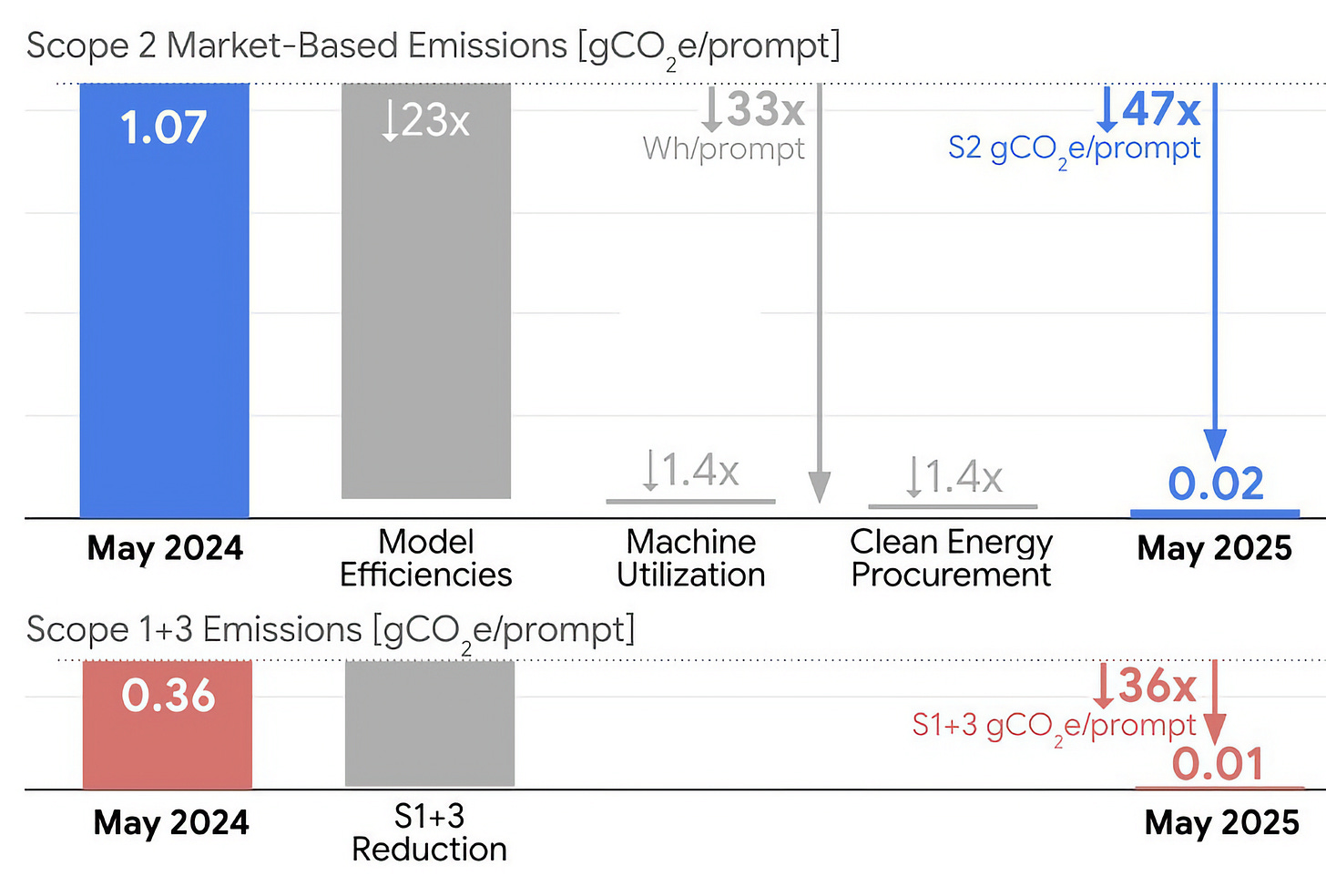

🔌 The Environmental Footprint of AI at Google 💧

Google released a technical paper detailing the energy, emissions, and water impact of Gemini.

Bottom line:

we estimate the median Gemini Apps text prompt uses 0.24 watt-hours (Wh) of energy, emits 0.03 grams of carbon dioxide equivalent (gCO2e), and consumes 0.26 milliliters (or about five drops) of water — figures that are substantially lower than many public estimates. The per-prompt energy impact is equivalent to watching TV for less than nine seconds.

Crucially, these figures aren't static.

Over the past year, the “energy and total carbon footprint of the median Gemini Apps text prompt dropped by 33x and 44x, respectively”, and that’s as the quality of the AI itself has improved.
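Here are a few back-of-envelope checks on those figures:

- what TV wattage the nine-second comparison implies;
- what the 33x drop implies for the median a year earlier;
- what a hypothetical 1 billion prompts per day would add up to. That volume is a round number I picked, not a Google disclosure.

```python
WH_PER_PROMPT = 0.24   # median Gemini Apps text prompt, per Google's paper
TV_SECONDS = 9         # "less than nine seconds" of TV, so the implied wattage is a floor
ENERGY_DROP = 33       # reduction factor over the past year, as quoted above

implied_tv_watts = WH_PER_PROMPT * 3600 / TV_SECONDS   # Wh -> joules, divided by seconds
year_ago_wh = WH_PER_PROMPT * ENERGY_DROP              # implied median a year earlier

# Hypothetical volume, NOT a Google figure.
prompts_per_day = 1e9
daily_mwh = prompts_per_day * WH_PER_PROMPT / 1e6      # Wh -> MWh
avg_mw = daily_mwh / 24                                # average continuous draw

print(f"implied TV power ≈ {implied_tv_watts:.0f} W (or more)")
print(f"implied median a year earlier ≈ {year_ago_wh:.1f} Wh")
print(f"1B prompts/day ≈ {daily_mwh:.0f} MWh/day ≈ {avg_mw:.0f} MW average")
```

That works out to roughly 96 W for the TV and about 7.9 Wh per prompt a year earlier. A billion prompts a day would average about 10 MW of draw.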

There are HUGE incentives to make AI more efficient even without environmental considerations, but I believe that if we were looking for things to cut or ration to reduce energy consumption, the delivery of intelligence to the masses would be at the bottom of the list.

Clean-energy abundance is the answer: Solar + batteries and nuclear can deliver what we need.

🎨 🎭 Liberty Studio 👩🎨 🎥

🎥 The Enduring Power of Practical Effects: I thought it was CGI but it wasn’t 🎬

Nice video essay, and it did make me wonder what else I thought was CGI was actually done mostly practically (with some digital manipulation, of course) 🤔

As cool as modern CGI is, the ability for the film director to *see* and direct things in real-time, on the set, has got to be incredibly valuable.
