59: My Charter Q3 Highlights, Andy Jassy on AWS Custom Silicon, DeepMind Computational Biology Breakthrough, Rory Sutherland, SK Fusion, HVDC, Moderna, and EV sales

"More time in the salt mine."

If I accept you as you are, I make you worse; however, if I treat you as though you are what you are capable of becoming, I help you become that.

—Johann Wolfgang von Goethe

I recently listened to this podcast:

…and liked it. But I felt like I understood only around 40% of what they were talking about (isometric scapular protraction issues? Dynamic Neuromuscular Stabilization?). Which, I have to remind myself, is fine.

My knowledge base in this area is so low that:

Adding 40% of that podcast is a massive relative improvement. It made a real difference in what I know about this topic. This is very different from what one more podcast about investing is likely to do to my investing knowledge base, even if I understand 100% of that one. (I use italics too much, don’t I?)

40% is way better than 0%, which is what I would’ve gotten if I had decided to punch “eject” a few minutes in, when I started getting confused about what they were saying.

I guess what I’m getting at is: It’s fine to not understand 100% of what you’re reading/listening to. In fact, it’s probably a sign that you’re really learning. (and bold too)

I remember listening to Peter Attia’s 5-part interview with Tom Dayspring about lipids and thinking both that I understood almost nothing of what they were saying and that I was learning more than I had in a long time.

✖︎ Salesforce is buying Slack. I don’t have anything to say about it right now, so I won’t say anything (except one word: distribution).

Investing & Business

My Charter Q3 Highlights

A bit late, but finally had a look at this (slides here). A few things I noticed:

[buybacks:] 6.1 million shares for approximately $3.6 billion [$592/share on average]

mobile revenue growth of 91.8%

increased our wage for all hourly field operations and customer service call center employees by $1.50 an hour, and we remain on path to a $20 minimum wage by 2022.

data usage for Internet-only customers remaining at an elevated 600 gigabytes per month during the third quarter.

We recently purchased 210 CBRS priority access licenses in 106 counties across all our key DMAs for just over $460 million. Over a multiyear period, we'll execute on our inside-out strategy with small cells attached to our existing network using unlicensed and now our licensed spectrum [...]

our WiFi capabilities and available spectrum for WiFi has continued to improve. The FCC has just granted significant amounts of WiFi spectrum to the public for use, and we plan to use that spectrum inside dwellings.

We grew consolidated EBITDA by over 13%, and our third quarter free cash flow grew by nearly 40% year-over-year.

Our advertising business is improving, and our core ad business, excluding political, is about 90% back to normal, in part because of the amount of sporting events that are now airing

our net debt to last 12-month adjusted EBITDA was 4.3x or 4.2x if you look at cable only

we issued $1.5 billion of 12-year high-yield notes at a yield of roughly 4%

there is an opportunity through compression going from MPEG-2, which is still widely distributed by us, to MPEG-4. [...] I think the key takeaway is that traditional video is still the largest single -- it's more than half the capacity of the infrastructure.

They’re still using the ancient MPEG-2 codec. A video codec is the way video is compressed so that it takes less bandwidth. It’s lossy compression, meaning that quality is degraded compared to the source. The algorithm tries to degrade it in a way that is less perceptible to the human visual system than if the loss were applied uniformly over the whole image…

By moving to more modern codecs (H.264, which is old too now, but less so… or the more modern HEVC/H.265), they could free up a bunch more capacity in their pipes and offer even higher speeds to customers for the same price. Or they could use the same infrastructure for longer without needing to beef it up with capex.
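Here is a back-of-the-envelope way to size that opportunity. If video uses some share of the network's capacity, and a newer codec needs only a fraction of the old bitrate for similar quality, the capacity freed is the video share times the bitrate saved. Both inputs below are my assumptions, not figures from the call. The 55% video share is my guess, since Charter only says "more than half". The ~50% bitrate ratio for H.264 versus MPEG-2 is a common rule of thumb.

```python
def freed_capacity(video_share: float, bitrate_ratio: float) -> float:
    """Fraction of total network capacity freed by a codec switch.

    video_share: fraction of capacity currently used by video (0-1).
    bitrate_ratio: new codec's bitrate / old codec's bitrate at similar quality (0-1).
    """
    if not (0.0 <= video_share <= 1.0 and 0.0 <= bitrate_ratio <= 1.0):
        raise ValueError("both inputs must be between 0 and 1")
    # Only the video portion shrinks; everything else is unchanged.
    return video_share * (1.0 - bitrate_ratio)


# Assumed inputs (not Charter's numbers): video uses 55% of capacity,
# and H.264 needs about half of MPEG-2's bitrate for similar quality.
print(f"Capacity freed: {freed_capacity(0.55, 0.5):.1%}")
```

Under those assumptions, a bit over a quarter of the pipe opens up, which is why a codec change can partly substitute for capex.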

Of course, switching codecs probably is a huge headache, but it’s nice to know there’s the option and they’re seeing it. And they did mention that they wouldn’t need to replace set-top boxes to do it, which makes a huge difference.

I guess it’s just like me to not comment on a $460m purchase of spectrum but write a bunch on a video codec… Sorry to the real financial analysts out there ¯\_(ツ)_/¯

late in Q3, you could start to see the market move to more normal transaction activity... both churn and sales. We think that's indicative of where Q4 is probably heading. And I think probably for next year as well, you'll have higher levels of mover churn and market churn

Here’s CEO Tom Rutledge’s answer to being asked to confirm that he’s now under contract until the end of 2024:

Yes. More time in the salt mine.

Andy Jassy on AWS Making its Own Chips

We bought a business, the Annapurna business, and they were a very experienced team of chip designers and builders. We put them to work on chips that we thought could really make a big difference to our customers. We started with generalized compute and we built these Graviton chips initially in the A1 instances we launched a few years ago, which really were for scale-out workloads like the web tier or microservices, things like that. It was 30% better price-performance and customers really liked them, but there were some limitations to their capabilities that made it much more appropriate for a smaller set of workloads.

a customer said, “Can you build a chip that is a version of that that can allow us to run all our workloads on it?” And that’s what we do with Graviton2, which if you look at the performance of what we’ve delivered with Graviton2 chips in those instances I mentioned, it’s 40% better price performance than the latest processors from the large x86 providers.

Q: The Annapurna team doesn’t get a lot of public attention. What else has it been doing?

A: We’ve also put that Annapurna team to work on some hard machine learning challenges. We felt like training was something that was reasonably well-covered. The reality is when you do big machine learning models at scale, 90% of your cost is on the inference or the predictions. So we built a chip to optimize the inference called Inferentia. And that already is growing incredibly quickly. Alexa, which is one of the biggest machine learning models and inference machines around, already has 80% of its predictions being made through Inferentia. That’s saving it 30% on costs and 25% on latency.

So we’re continuing to build chips. We have the scale and the number of customers and the input from customers that allow us to be able to optimize for workloads that really matter to customers.
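One subtlety is worth making explicit. "40% better price-performance" means 40% more work per dollar, which is not the same as a 40% lower bill. Here is a quick sketch with made-up numbers: the $1.00/hour price and the 100 units of work are hypothetical. It assumes the workload runs equally well on both chips.

```python
def price_performance(perf: float, price: float) -> float:
    """Units of work per dollar; higher is better."""
    return perf / price


# Hypothetical x86 instance: 100 units of work for $1.00/hour.
x86 = price_performance(100.0, 1.00)
# Hypothetical Arm (Graviton2-style) instance with 40% better price-performance.
arm = x86 * 1.40

# Cost to do the same 100 units of work on each.
cost_x86 = 100.0 / x86
cost_arm = 100.0 / arm
print(f"x86: ${cost_x86:.2f}, Arm: ${cost_arm:.2f}")
print(f"savings: {1 - cost_arm / cost_x86:.0%}")
```

So the same job costs about 29% less (1 - 1/1.4). By the same logic, the A1 instances' 30% better price-performance works out to roughly 23% savings (1 - 1/1.3).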

On serverless:

In 2020, half of the new applications that Amazon built were built on top of Lambda compute. I think the next generation of developers are going to grow up building in this serverless fashion

Interview: Rory Sutherland (June 2020)

Always an interesting man to listen to. I enjoyed this one:

The part about the value of “creating your own job” (not just being your own boss, but creating the format of it, to create the proper expectations) is insightful. And the “conversational value” of certain products, like EVs, too.

EV Sales & the Pandemic

For the first nine months of 2020, car sales cratered. Every major automaker was affected—with the notable exception of Tesla. The electric automaker sold more cars than ever before. [...]

A closer look at the data shows it wasn’t just a Tesla story. Electric vehicles in general managed to thrive even as sales of traditional cars broke down. Both Volkswagen and Daimler saw record-setting declines in total sales, even while sales at their EV divisions doubled. [...]

Batteries are a technology, not a fuel, which means the more that are produced, the cheaper they are to make. In fact, every time the global supply of batteries doubles, the cost drops by about 18%, according to data tracked by BNEF. (Source)
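That "X% cheaper every time volume doubles" relationship is usually called a learning curve, or Wright's law. Below is a minimal sketch of it. It assumes the ~18% BNEF rate stays constant, and it uses a hypothetical $100/kWh starting cost.

```python
import math

LEARNING_RATE = 0.18  # ~18% cost drop per doubling, per the BNEF figure above


def battery_cost(start_cost: float, start_volume: float, volume: float,
                 learning_rate: float = LEARNING_RATE) -> float:
    """Wright's law: cost falls by `learning_rate` each time volume doubles."""
    doublings = math.log2(volume / start_volume)
    return start_cost * (1.0 - learning_rate) ** doublings


# Hypothetical start: $100/kWh; volumes are multiples of today's supply.
for multiple in (1, 2, 4, 8, 16):
    cost = battery_cost(100.0, 1.0, multiple)
    print(f"{multiple:>2}x supply -> ${cost:.2f}/kWh")
```

Each doubling multiplies cost by 0.82. So 8x the supply (three doublings) brings cost to about 55% of where it started (0.82³ ≈ 0.55).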

Science & Technology

We’re Going to Need a Lot More Electricity Transmission

To increase the production of renewable energy, the world will need a lot more long-distance, high-voltage transmission lines, to move power from the sunniest and windiest areas to more populated areas (and also to interconnect various parts of the grid together so it’s easier to move power around from places that have a surplus to those that aren’t generating enough locally):

Since 2014, China has built 260 gigawatts of interregional transmission capacity that’s come on line or will come on line in the next few years, according to a report this month by Americans for a Clean Energy Grid. Europe is way behind at 44GW, followed by South America at 22GW and India at 12GW. Then comes North America at 7GW, with only 3GW in the U.S. [...]

Transmission lines aren’t just about bringing power from where it’s cheap to where it’s expensive, though that’s valuable. Current can flow both directions. A region that produces solar power during the day can swap with a region that produces wind power at night. Or power can flow to the eastern U.S. when demand peaks there on weekday mornings, then shift westward as the day goes on. Swaps like these satisfy demand with what grid planners call “virtual storage,” reducing the need for construction of generating plants and physical storage such as battery packs. (Source)

I posted some maps and graphs in this thread. You can see the whole report here.

DeepMind AlphaFold Breakthrough on 50-year Computational Biology Holy Grail Quest

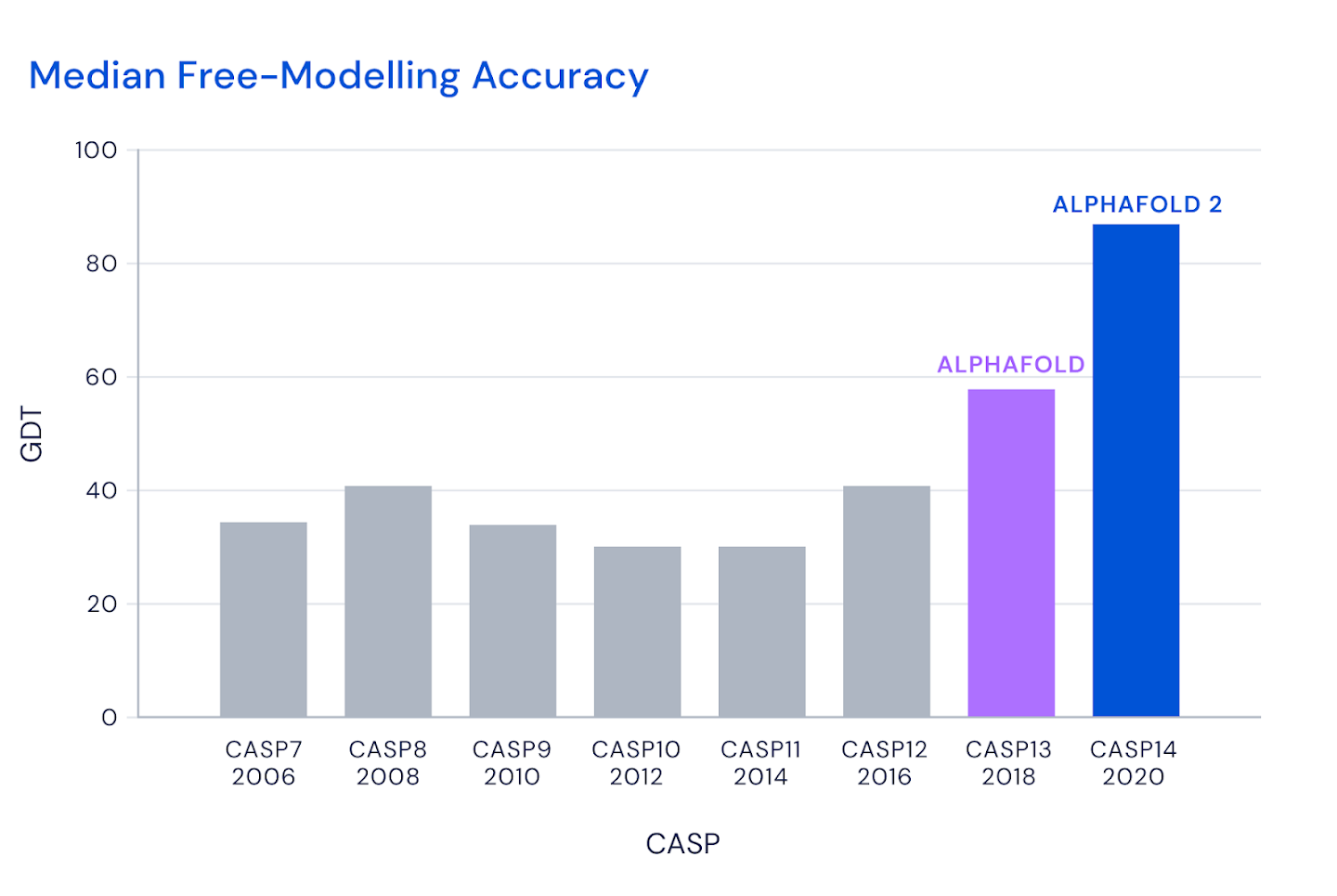

Right after I posted about computational biology in the last edition, the CASP14 results came out, and DeepMind crushed it with its AlphaFold2 software. Its predictions are close enough to ground truth that it could be said that they’ve ‘solved’ the problem.

For those who aren’t familiar with CASP:

Our goal is to help advance the methods of identifying protein structure from sequence. The Center has been organized to provide the means of objective testing of these methods via the process of blind prediction. The Critical Assessment of protein Structure Prediction (CASP) experiments aim at establishing the current state of the art in protein structure prediction, identifying what progress has been made, and highlighting where future effort may be most productively focused.

Basically, the organizers have protein structures that have been solved experimentally, with methods like X-ray crystallography (which is precise, but doesn’t work well with every protein), but the results haven’t been made public yet. They give the amino-acid sequences of these proteins to the participants in the challenge. Everybody then tries to figure out the actual 3D shape of each protein based on just that sequence of amino acids.

Whoever comes closest to the actual, experimentally verified shape wins.

The competition is held every two years, and you can see the software improve as it gets tweaked and made smarter, and as compute gets cheaper.

Two years ago, AlphaFold made a big splash. But this year, it looks like it just stands head-and-shoulders above everybody else.

And the beauty is, enough about how they did it is getting published that everybody will soon use similar techniques and benefit from this progress. This isn’t some proprietary thing.

And what makes it useful isn’t only determining the shape of existing proteins. Once you can do that, you can also design proteins that don’t yet exist in nature but that do something you want them to do (bind to a certain other protein or cell, catalyze a reaction, or whatever).

To give you an idea of the magnitude of the step forward:

Note that the blue bar is close to 90%, which is about where things are considered equivalent to the experimental method (you don’t need to get to 100% for that).
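The score on that chart is CASP’s Global Distance Test (GDT), which runs from 0 to 100. Roughly, it averages the percentage of residues whose predicted position lands within 1, 2, 4, and 8 angstroms of the experimental position. Below is a simplified sketch. It assumes the predicted and experimental structures are already superimposed and that you have per-residue C-alpha distances. The real metric also searches over superpositions to find the best fit, which this skips.

```python
from typing import Sequence

CUTOFFS_ANGSTROM = (1.0, 2.0, 4.0, 8.0)  # the four GDT_TS distance thresholds


def gdt_ts(distances: Sequence[float]) -> float:
    """Simplified GDT_TS score on a 0-100 scale.

    `distances` holds, for each residue, the distance in angstroms between the
    predicted and experimental C-alpha atoms, assuming the two structures are
    already superimposed (the real metric optimizes the superposition).
    """
    if not distances:
        raise ValueError("need at least one residue")
    # Count residues within each cutoff, summed over all four cutoffs.
    hits = sum(sum(1 for d in distances if d <= c) for c in CUTOFFS_ANGSTROM)
    # Equivalent to averaging the four per-cutoff percentages.
    return 100.0 * hits / (len(distances) * len(CUTOFFS_ANGSTROM))


# Hypothetical 5-residue example: 1, 2, 3, and 4 residues fall within the
# 1, 2, 4, and 8 angstrom cutoffs, respectively.
print(f"GDT_TS: {gdt_ts([0.5, 1.5, 3.0, 6.0, 10.0]):.1f}")
```

Scoring around 90 means nearly every residue sits within a few angstroms of where the experiment puts it. That is why the threshold for "as good as experiment" is below 100.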

Nearly 50 years ago, Christian Anfinsen was awarded a Nobel Prize for showing that it should be possible to determine the shape of proteins based on their sequence of amino acids - the individual building blocks that make up proteins. That's why our community of scientists have been working on the biennial CASP challenge. [...]

Being able to investigate the shape of proteins quickly and accurately has the potential to revolutionise life sciences. Now that the problem has been largely solved for single proteins, the way is open for development of new methods for determining the shape of protein complexes - collections of proteins that work together to form much of the machinery of life, and for other applications. [...]

determining a single protein structure often required years of experimental effort. It’s tremendous to see the triumph of human curiosity, endeavour and intelligence in solving this problem. A better understanding of protein structures and the ability to predict them using a computer means a better understanding of life, evolution and, of course, human health and disease. (Source)

If you want more on this, DeepMind has a writeup, and Nature has a good piece (h/t Rishi Gosalia for that one).

‘S. Korea's fusion device 'KSTAR' runs for 20 seconds at 100 million degrees Celsius’

According to the Korea Institute of Fusion Energy on Tuesday, the device, which is used to study aspects of fusion energy, successfully operated at a temperature of 100-million degrees Celsius for 20 seconds generating intense heat that is seven times hotter than the center of the sun.

No other fusion power plant in the world has managed to run for more than ten seconds but the institution aims to maintain this state of plasma for 300 seconds by the year 2025. (Source)
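Here is a quick sanity check on the "seven times hotter than the center of the sun" line. It assumes a solar core temperature of roughly 15 million degrees, which is the commonly cited figure. At these magnitudes, the Celsius/Kelvin difference doesn’t matter.

```python
SUN_CORE_TEMP_C = 15e6  # assumed: approximate temperature of the Sun's core
KSTAR_TEMP_C = 100e6    # from the article: 100 million degrees Celsius

# How many times hotter the KSTAR plasma is than the Sun's core.
ratio = KSTAR_TEMP_C / SUN_CORE_TEMP_C
print(f"KSTAR plasma is about {ratio:.1f}x the Sun's core temperature")
```

That comes out to about 6.7x, so "seven times" is a fair rounding.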

Fusion reactors are really interesting. The idea of using magnetic fields to confine a super-mega-hyper-hot plasma in empty space, because it’s too hot for any material to handle without damage, is just sci-fi cool. The fact that we monkeys-with-tools are even able to do it is impressive (though that applies to lots of things, including the planet-spanning communication network you’re reading this on, which runs on strands of glass made from sand).

Almost everything I know about the field comes from these two podcasts, which, to be fair, are 5.5 hours of pretty technical discussion with physicists who work at ITER. If you’re curious about the field, I highly recommend them:

“Go for it,” Dr. Fauci said he told them. “Whatever it costs, don’t worry about it.”

Moderna employed only 800 people, including a manufacturing team. Twenty vaccines and treatments were in development, but none were expected to come to market for at least two years. It had never run a Phase 3 clinical trial, the late-stage testing designed to determine whether a vaccine is safe and effective for humans.

Some Moderna executives suggested taking a stab at a vaccine for a few months, then reassessing. But Juan Andres, the company’s chief technical operations and quality officer, said he warned: “Sorry, guys, there is no exit on this highway. If we are in, we are in.” [...]

N.I.H. got in with them. Dr. John R. Mascola, the head of the Vaccine Research Center, and Dr. Barney Graham, the center’s deputy director, proposed the partnership to Dr. Anthony S. Fauci, the director of the National Institute of Allergy and Infectious Diseases.

“Go for it,” Dr. Fauci said he told them. “Whatever it costs, don’t worry about it.”

Moderna’s goal was to get from a vaccine design to a human trial in three months. The design came quickly. “This is not a complicated virus,” Mr. Bancel said.

Dr. Graham said that after China released the genetic sequence of the new virus, the vaccine research center zeroed in on the gene for the virus’s spike protein and sent the data to Moderna in a Microsoft Word file. Moderna’s scientists had independently identified the same gene. Mr. Bancel said Moderna then plugged that data into its computers and came up with the design for an mRNA vaccine. The entire process took two days. (Source)

h/t Gavin Baker for highlighting

The Arts & History

Queen’s Gambit (episodes 5-6-7 — SPOILERS)

Finished it. Really enjoyed it. Quality show on multiple dimensions: Acting, writing, cinematography, music, themes, etc.

One of the details that I liked is when an older Beth (I said: spoilers) returns to the orphanage. I love how they shot it from a different perspective. When she’s a kid, they mostly shoot interiors from closer to the ground and with wider lenses, and the place looks pretty big. When she comes back as an adult, they shoot it from higher up and with tighter lenses, and it looks rather small and unimposing.

Reminded me of when I went back to my childhood house and it felt like it had shrunk by 40%.

I’m always afraid of good shows that don’t really know how to end satisfactorily. Scott Frank’s previous mini-series, ‘Godless’ (2017, Netflix), was really good, but it suffered a bit from that, IMO. But this one finished strong, with decent payoffs for multiple threads (her mother, friends, Townes, whether she can access her genius without too much self-destruction, etc).

That moment when she sees the pieces on the ceiling in Russia, while sober: I gotta admit, with the music and that look, that was badass.

Follow-Up: Scary Pockets Keyboard Player is also the CEO of Patreon

Friend of the newsletter TMTprof (locked account — they may not let you in!) pointed out that the keyboard player of the band Scary Pockets, which was featured at the bottom of edition #57, is the CEO of Patreon.

Very cool. He always looks like he’s having such a good time playing, I hope he finds running a business enjoyable too.

Dave Chappelle 18-Minute Unforgiven Video

I had seen part of the transcript, which is what I posted near the end of edition #57, but I think it’s worth watching the video:

‘Mercy is the mark of a great man…’

Randomly thought of this Firefly joke, and felt compelled to share it with you (context is the end of a traditional duel with swords).

Wait… was Firefly really in 2002? That’s 18 years ago!

Live shot of how that makes me feel: