591: The Circular AI Economy, Nvidia's $100bn Deal with OpenAI, Constellation Software's AI Call, Hyperscalers & ASICs, Oracle Buybacks, North-Korean Infiltrators, and Alien Guitar

"From picks & shovels salesman to kingmaker?"

Quis Custodiet ipsos custodes?

[Latin: Who will watch the watchmen?]

🫧📈 Bubble talk is everywhere lately.

Historically, bubbles have thrived on complacency and denial, not broad recognition.

I can’t help but wonder whether the fact that everyone is talking about a bubble means that we’ve still got some way to go 🤔

There’s certainly a lot of crazy stuff going on. Meme stocks and SPACs are back, and the market is bifurcated in a way that I’ve never seen before:

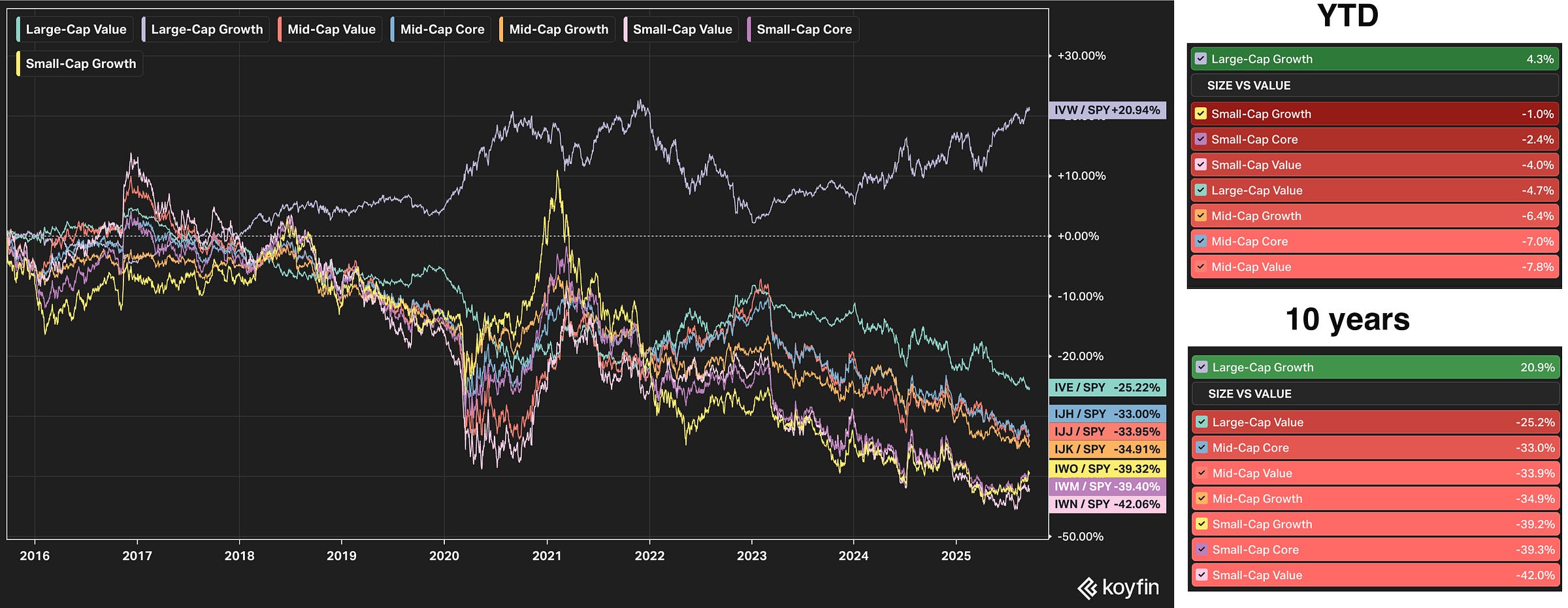

This graph shows a bunch of factors (Large-Value, Large-Growth, Mid-Growth, etc) divided by the S&P 500 index to show relative performance. Year-to-date and over the past 10 years (!), Large-Cap Growth has trounced everything else. And because it’s so large as a percentage of total market cap, it’s moving the index along with it, masking the underperformance of the other factors.

That explains why the index is moving a certain way, but if you look at the long-term chart of most other stocks that aren’t Large-Growth, it’s a different story.

Can we expect mean reversion? Is this just reality in the post-internet era of increasing returns-to-scale? ¯\_(ツ)_/¯

🗽✍️🔓🖋️📝🗣️💬⚖️🤐 On Free Speech, I often hear arguments framed around: “what happens when the other side/people you don’t like get power?”

While this appeal to self-interest can be persuasive, it’s a weak foundation. I wish we would also try to convince people to support Free Speech because it’s a good idea in itself, on principle, and not just with an argument that implicitly boils down to “censorship would be fine if you could be sure that ‘your side’ would always be in charge.”

🔎📫💚 🥃 Exploration-as-a-service: your next favorite thing is out there, you just haven’t found it yet 👇

🏦 💰 Liberty Capital 💳 💴

💰🤝🤖 Nvidia’s $100bn Deal with OpenAI for 10GW of Vera Rubin GPUs + The Circular AI Economy 🔄 🤔

Jensen announced a partnership and large investment in OpenAI, calling it “the biggest AI infrastructure project in history” (it feels like we’re getting one of these every couple weeks these days):

OpenAI and NVIDIA today announced a letter of intent for a landmark strategic partnership to deploy at least 10 gigawatts of NVIDIA systems for OpenAI’s next-generation AI infrastructure to train and run its next generation of models on the path to deploying superintelligence. To support this deployment including data center and power capacity, NVIDIA intends to invest up to $100 billion in OpenAI as the new NVIDIA systems are deployed.

From picks & shovels salesman to kingmaker? 👑

The first phase aims for operations in the second half of 2026 using Vera Rubin (the generation after Blackwell, but before Feynman).

It’s reported that Microsoft got one day’s notice before the deal became public 🤔

It’ll happen in tranches:

The initial $10 billion tranche is locked in at a $500 billion valuation and expected to close within a month or so once the transaction has been finalized

Nine successive $10 billion rounds are planned, each to be priced at the company’s then-current valuation as new capacity comes online
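A rough back-of-the-envelope sketch of the tranche math above. The function names are mine, and treating the valuation as post-money while ignoring dilution between rounds are simplifying assumptions, not terms from the deal:

```python
# Back-of-the-envelope tranche math. Assumptions (not from the deal terms):
# valuations are treated as post-money, and dilution between rounds is ignored.

TRANCHE_BN = 10          # each tranche, in $ billions (from the reported terms)
NUM_TRANCHES = 1 + 9     # initial tranche plus nine successive rounds


def total_commitment_bn(tranche_bn: float = TRANCHE_BN, n: int = NUM_TRANCHES) -> float:
    """Total investment if every tranche is funded."""
    return tranche_bn * n


def stake_from_tranche(tranche_bn: float, valuation_bn: float) -> float:
    """Fraction of the company bought by one tranche at a given valuation."""
    return tranche_bn / valuation_bn


if __name__ == "__main__":
    print(total_commitment_bn())                 # 100
    print(stake_from_tranche(TRANCHE_BN, 500))   # 0.02, i.e. 2% for the first $10bn
```

Because later tranches are priced at the then-current valuation, each successive $10 billion buys a smaller slice if the valuation keeps rising.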

This isn’t Nvidia’s first investment in OpenAI. It participated in last October’s round, back when OpenAI was valued at ‘just’ $157 billion.

This not only includes the compute itself, but also networking:

OpenAI will work with NVIDIA as a preferred strategic compute and networking partner for its AI factory growth plans.

They’re apparently talking about leasing the chips:

OpenAI and Nvidia are discussing an unusual way to structure their new artificial intelligence data center partnership, under which OpenAI would lease Nvidia’s AI chips rather than buying them [...]

The leasing deal could be structured to minimize risks for Nvidia. Nvidia could set up an entity that borrows money to buy the servers, using the chips as collateral. OpenAI’s lease payments could go towards paying back the loan.

If you’re successful enough, you eventually become a bank 🏦

And interestingly, a two-way partial integration of roadmaps:

OpenAI and NVIDIA will work together to co-optimize their roadmaps for OpenAI’s model and infrastructure software and NVIDIA’s hardware and software.

To better understand what this means and why it happened, I think we must first look at the strategic positioning of the players involved, and what it means for Nvidia to join the knife fight with its biggest customers 🗡️

At the frontier, OpenAI competes with a few players that are generating *a lot* of free cash flow from other businesses (Google, Meta, Amazon… 💰), and that have giant balance sheets allowing them to raise debt, or even issue tens of billions in equity if necessary (looking in your direction, Oracle).

OpenAI’s revenue has grown fast, but nowhere near enough to self-fund this scale. So far, they could rely on venture capital, but they’re rapidly scaling to a size that makes them too big for VC. There are large pools of money like sovereign wealth funds and Masa Son, who's in a category of his own. But these are not bottomless either.

To better compete against Big Tech and the hyperscalers, OpenAI originally picked Microsoft as its partner. But Nadella seems to have reached his limit a little while ago, and won’t press the pedal all the way to the metal. He seems to have concluded that models will likely commoditize, and that distribution and customer relationships may matter most. Right now, this may seem overly cautious, but we’ll see. It’s always hard to see the wisdom of being careful during the good times.

What about Nvidia? They’re the belle of the ball! Everybody wants what they’re selling, and they’re ready to pay a lot for it. The investments they made 10-15 years ago are paying off fabulously, and they basically have 4 aces. But even if you have a great hand, you should still maximize how you play it. That’s what they’ve been doing, spreading GPU allocations around in such a way as to divide and conquer. That’s why they’ve been giving such large allocations to neoclouds like Coreweave or Nvidia pure-plays like Oracle Cloud, helping create more competition for hyperscalers.

There are benefits to staying neutral and selling picks & shovels to everyone ⛏️ But if pushed to pick someone, OpenAI is the clear choice. Google doesn’t need their money and has TPUs, Anthropic is entangled with Amazon and Google and an increasing amount of their compute will come from Trainium and TPUs, xAI buys a lot of GPUs but they don’t have much traction with users and are reliant on Elon Musk’s fundraising prowess, Meta is in the middle of a big re-org and doesn’t need the money, etc.

Nvidia would prefer OpenAI to win AND one of the biggest obstacles in the way of that happening is not having the deep pockets of a Big Tech benefactor. By backing them with cash that they can’t deploy organically fast enough, they boost OpenAI’s odds AND keep GPU demand elevated. Two birds, one stone. 🐦⬛🐦⬛

What are the problems with this?

Circular money flow: Nvidia wires money over to OpenAI, and OpenAI wires it right back to order a bunch of Vera Rubin racks. 🔄

Those who were around during the dot-com bubble will get a shiver down their spine. Vendor financing doesn’t exactly have the best track record.

But at least, Nvidia is supporting a customer that has a product that is actually useful, has true product-market fit, and fast growing revenue. Not Pets-dot-com and Webvan 🤔

Quality of revenue: The revenue will be of lower quality than true organic demand, which is a negative, but they’re also making it more likely that OpenAI continues to scale and keeps winning the AI race, which potentially has immense strategic upside for Nvidia.

And they’re getting both the round-tripping revenue AND equity in OpenAI, which, if they do keep winning thanks to Nvidia’s help, could prove to be a good investment.

The goal is to be a self-catalyst.

Mr. Market will have to decide if, on the net, this is more good than bad ⚖️

Who loses?

Probably everyone who isn’t Nvidia or OpenAI.

You could say that hyperscalers will double down on ASICs and maybe even AMD GPUs, but Jensen appears to be making the bet that they were already doing everything they can on that front. They already have billions and billions in incentives to reduce their dependence on Nvidia, so this won’t materially change that.

Many ASIC projects will fail and won’t be competitive. For a silicon design team, going head-to-head with Nvidia isn’t exactly easy, even when you take into account not having to pay their margin, and any frontier lab that can’t get a full allocation of cutting-edge GPUs — especially for training — risks falling behind. 🏁

The hyperscalers now find themselves in more direct competition with one of their most important suppliers, and the AI labs are now in competition with a much better-funded OpenAI (which may help Nvidia tune its GPUs to work even better with its models).

This deal isn’t finalized. As Chanos pointed out, the last line of the press release is:

NVIDIA and OpenAI look forward to finalizing the details of this new phase of strategic partnership in the coming weeks.

You could interpret this in all kinds of negative ways.

Or you could be more charitable and say that planning 10 GIGAWATTS of data centers that will be deployed over many years is very complex and takes a long time, and that in the fast-moving AI industry, spending months and months hammering out the details before making an announcement probably isn’t optimal if you can agree on the big things and then make an announcement that will help OpenAI through reflexivity.

After this announcement, they will no doubt be able to raise money at even higher valuations, and leave Anthropic further behind in the fundraising race.

Question 1: What does this mean for OpenAI’s internal ASIC efforts? Does the Nvidia partnership mean that this is going on the back burner? Was it part of the deal that OpenAI had to stop or slow down this project and use Nvidia chips for training and inference?

I can’t imagine that Jensen would be pleased if Altman raised billions and used it to finance custom silicon that competes directly with Nvidia’s chips 😅

They mentioned that Nvidia would be a “preferred” supplier for compute and networking, not the “exclusive” one…

Question 2: How about electricity? Where will these 10 GW of data centers be built? Is Nvidia about to finance some new nuclear power plants somewhere?

📞🤖💾 ✨ Constellation Software on AI: Risks & Upside ✨🔮

First, some meta-context:

As I said last week, Mark Leonard had every incentive to present things in a bad light. He’s been trying to talk down the stock price for a decade because CSI doesn’t do SBC, so employees and executives have to buy on the open market. He feels that a stock price that is too high is bad for talent retention.

And as a deep value investor who prefers to pay 4-6x EBITDA, he may be the right founder for Constellation, but if he had been an outsider, he probably would never have bought the stock, so it’s no surprise that he’s been finding it too expensive for a long time.

Mark also *hates* sharing valuable info with competitors, so if they have found promising use cases, he’d be the last person to mention it and get into specifics.

Parsed through that lens, I thought the call was ultimately positive on the net.

They highlighted many risks, but also many potential benefits from AI tools, gave a good explanation of why they were well-positioned to benefit, and how they are already experimenting in a decentralized way across their thousand+ business units. When something succeeds, they can share best practices with the rest of the company.

First, here’s Mark Leonard on how he thinks about vertical market software (VMS):

Mark Leonard: I believe that vertical market software is the distillation of a conversation between the vendor and the customer that has gone on frequently for a couple of decades.

And you distill those work practices down into algorithms and software and data and reports and it captures so much about the business. And being able to examine that in a new way because of AI, creates new opportunity to modify and change and suggest new approaches. So yes, I'm hopeful that, that unique and proprietary information will be of value.

That’s well put.

The “vertical” in the name is important.

It’s very focused on one specific industry niche, and to do a good job at it, it requires a level of knowledge about that industry, and a level of intimacy with customers, that is very different from horizontal software, which targets more general use cases, and which can typically be designed by people who have no idea about many of the use cases of end-users.

Mark Leonard told a story to illustrate how hard it is to predict the impact of new technologies, even directionally, and to frame the message of the whole call: 🩻

Mark Leonard: I'd like to tell a story. It's a true story. And it illustrates a useful but unsatisfying lesson, which I think we should all understand. In 2016, Geoff Hinton made a long-term forecast. For those of you who don't know, Geoff is known as the godfather of AI and is a Nobel Prize winner for his work in the field.

And long-term forecasting is very difficult. I talked about this before and happy to send you some source information if you'd like to delve into that further.

Geoff's forecast in 2016 was that radiologists were going to be rapidly replaced by AI. And specifically, he said people should stop training radiologists now. And in the intervening 9 years since he made that forecast, the number of radiologists has increased from 26,000 in the U.S., these are U.S. Board certified radiologists, to 30,500 or a 17% increase. Now that's outpaced the population growth in that period.

So the number of radiologists per capita is up from 7.9% to 8.5% [sic: these figures are per 100,000 people, not percentages].

Geoff wasn't wrong about the applicability of AI to radiology. Where he was wrong was that the technology would replace people, instead it's augmented people. The quality of care delivered by radiologists has improved and the number of practicing radiologists has increased. So I told you the story to make 2 points.

Firstly, you and I will never know a tiny fraction as much about AI as Geoff did. And secondly, despite his deep knowledge of AI, he was unable to predict how it would change the structure of the radiology profession. So I think we're at a similar point today with the programming profession. It's difficult to say whether programming is facing a renaissance or a recession.
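A quick sanity check of the numbers in that story. This is only a sketch, and the round 330 million U.S. population is my assumption rather than a figure from the call. The 17% growth checks out, and the per-capita figures only make sense as radiologists per 100,000 people:

```python
# Sanity check on the radiologist figures quoted above.
# The U.S. population value is a rough round-number assumption, not from the call.

def pct_increase(before: float, after: float) -> float:
    """Percentage change from `before` to `after`."""
    return (after - before) / before * 100


def per_100k(count: float, population: float) -> float:
    """Rate per 100,000 people."""
    return count / population * 100_000


if __name__ == "__main__":
    print(round(pct_increase(26_000, 30_500), 1))     # 17.3
    print(round(per_100k(26_000, 330_000_000), 1))    # 7.9
```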

“Nobody can really know” is not a message that Mr. Market likes hearing, even when it’s the truth. People prefer confidence and certainty, even when it’s not based on anything.

Programming renaissance or recession! That’s quite the framing, but it goes to the heart of what happens when something becomes more efficient and less expensive.

This is the classic Jevons’ Paradox. The naive view is that if programming becomes more efficient, you need fewer programmers. But the inverse is often true. Lower costs create new use cases, and demand goes up.

Is this what is going to happen to software?

Programmers could experience massive demand for their services if their efficiency improves tenfold. You can imagine not having to put up with software that does 80% of what you want, you'll be able to get software that does 100% of what you want, that's customized to your needs and the cost of programming will drive that increased customization. What a wonderful outcome that would be.

Equally, you can imagine a tenfold increase in programmer productivity, driving massive oversupply of programmers and demand -- and particularly if demand for the services remains static. Similarly, if the 10x efficiency doesn't happen, if it's a 10% efficiency gain, you can imagine that there would be very modest changes to the current status quo. So, we don't know which way this is going to go. We're monitoring the situation closely.

It’s certainly possible to imagine all these scenarios playing out.
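One way to make these scenarios concrete is a toy constant-elasticity model. This is my own illustration, not something from the call: it assumes software demand follows Q = k * P^(-e) and that the price of software falls one-for-one with programmer productivity. Both are strong simplifications.

```python
# Toy constant-elasticity model of the "renaissance or recession" question.
# Assumptions (mine, not from the call): software demand follows Q = k * P**(-e),
# and the price of software falls one-for-one with programmer productivity.

def programmer_demand_multiplier(productivity_gain: float, elasticity: float) -> float:
    """Change in programmer-hours demanded after a productivity gain.

    Price falls by `productivity_gain`, so the quantity of software demanded
    rises by productivity_gain ** elasticity. Each unit needs
    1 / productivity_gain as much labor, so labor demand scales by
    productivity_gain ** (elasticity - 1).
    """
    return productivity_gain ** (elasticity - 1)


if __name__ == "__main__":
    # Static demand (e = 0), unit elasticity (e = 1), elastic demand (e = 1.5).
    for e in (0.0, 1.0, 1.5):
        print(e, programmer_demand_multiplier(10, e))
```

With a tenfold gain, static demand (elasticity 0) cuts the need for programmers to a tenth, unit elasticity leaves it unchanged, and anything above 1 is the Jevons outcome where demand for programmers rises. With only a 10% gain, the multiplier stays close to 1 across plausible elasticities.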

The question is: Is demand for software limited or unbounded?

So the advantage of using a high discount rate already is that it minimizes the value of the terminal value and your overall assessment of the attractiveness of the investment. So we've got that going for us inherently. We're already discounting the future a lot.

They’ve always been very disciplined and conservative, using high hurdle rates and requiring short payback periods. This may have made them too timid at times, but it pays off when things get more uncertain.
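To see why a high discount rate shrinks the weight of the terminal value, here is a minimal discounted-cash-flow sketch. Every input (3% growth, a 10-year explicit period, the discount rates) is hypothetical, and this is a textbook Gordon-growth setup, not Constellation's actual model:

```python
# Share of a DCF's value that comes from the terminal value, at different
# discount rates. All inputs are hypothetical; this is not Constellation's model.

def terminal_value_share(discount_rate: float, growth: float = 0.03,
                         years: int = 10, cash_flow: float = 1.0) -> float:
    """Fraction of present value attributable to the Gordon-growth terminal value.

    Cash flows grow at `growth` for `years` explicit years, then continue
    growing at the same rate forever (requires discount_rate > growth).
    """
    if discount_rate <= growth:
        raise ValueError("discount_rate must exceed growth")
    pv_explicit = sum(
        cash_flow * (1 + growth) ** t / (1 + discount_rate) ** t
        for t in range(1, years + 1)
    )
    # Terminal value at the end of the explicit period, discounted back to today.
    next_cf = cash_flow * (1 + growth) ** (years + 1)
    terminal = next_cf / (discount_rate - growth)
    pv_terminal = terminal / (1 + discount_rate) ** years
    return pv_terminal / (pv_explicit + pv_terminal)


if __name__ == "__main__":
    for r in (0.08, 0.15, 0.25):
        print(f"{r:.0%}: {terminal_value_share(r):.0%} of value from terminal value")
```

The higher the hurdle rate, the smaller the fraction of the valuation that depends on what happens after year 10, which is the part AI makes hardest to forecast.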

ML: the main point there was the fact that across customers, you can extract insight that you might not be able to extract within a particular client's data. And I think that, for sure, is powerful with most of our businesses because, of course, we have multiple customers in a particular vertical.

While each customer owns their own data, Constellation is in a position to analyze anonymized data across its entire customer base within a specific industry, or to share insights across verticals.

This may allow them to uncover patterns, benchmarks, and insights that no single company could see on its own. This aggregated data is a proprietary asset that can be used to build unique, high-value AI features that are very difficult for a new entrant or a single customer to replicate.

Another message from the call is to be skeptical of ‘AI washing’, adding the AI label to things as a marketing ploy without creating true value for customers. 🏷️

I suspect that someone listening to this in the context of recent OpenAI, Oracle, and Nvidia fireworks may think that this was 🥱 but to be honest, everything else in the market seems pretty stodgy compared to the frontier AI labs and their compute suppliers ¯\_(ツ)_/¯

I also suspect that there’s a bifurcation among tech/software investors:

Those who think AGI/superintelligence is around the corner and software of any complexity will become trivial to create and maintain, humans will basically be put to pasture, etc. These won’t be interested in companies like Constellation, too stodgy. They’re chasing triple-digit growth in “AI stocks”.

Those who think that tech is a bubble probably won’t be interested either, because they feel the whole sector is too expensive and has been “exciting” for too long.

🔮 Oracle Buybacks: Buy Low, Sell High (?) 📊

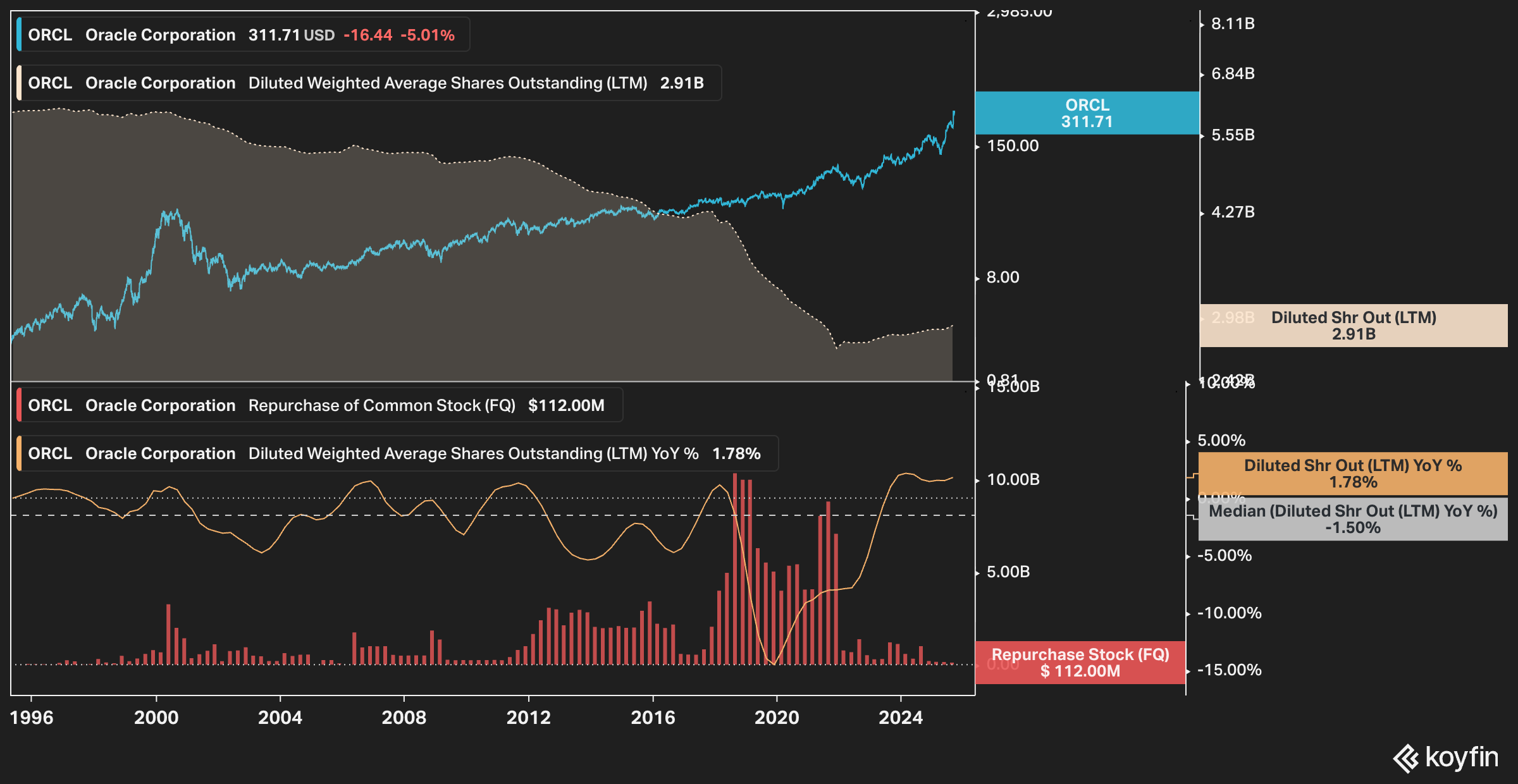

I was looking at Oracle’s share buybacks.

Around 2000, the share count peaked at around 6 billion (adjusted for splits since). They steadily repurchased shares over the next ~15 years, and then between 2018 and 2021 they aggressively bought back almost 40% of the shares outstanding.

This was done between the low $40s and about $90.

After that, they went back to doing more minimal buybacks — not enough to offset SBC — as capex ballooned from 4% of revenue to 46% now.

But if they’re now going to issue a bunch of equity to finance their cloud building for OpenAI, it could be quite a coup of financial engineering!

Buy back a crapload of shares at $40-90 and reissue at $300+ 🏆
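A rough sketch of that arithmetic. The $65 average repurchase price is just the midpoint of the $40-90 range and the $10bn raise is a made-up figure, so both are assumptions rather than Oracle's actual numbers:

```python
# Rough buyback-then-reissue arithmetic. The average repurchase price is a
# hypothetical midpoint of the $40-90 range, not Oracle's actual average cost.

def reissue_multiple(avg_buyback_price: float, reissue_price: float) -> float:
    """Dollars raised per dollar originally spent, per share bought back and reissued."""
    return reissue_price / avg_buyback_price


def shares_needed(raise_amount: float, price: float) -> float:
    """Shares that must be issued to raise `raise_amount` at `price`."""
    return raise_amount / price


if __name__ == "__main__":
    midpoint = (40 + 90) / 2                            # 65.0, assumed average cost
    print(round(reissue_multiple(midpoint, 300), 2))    # 4.62
    # Raising a hypothetical $10bn takes far fewer shares at $300 than at $65.
    print(shares_needed(10e9, 300) / shares_needed(10e9, midpoint))
```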

Update: It looks like they will go to the debt market first. It was just reported that they want to borrow $15bn:

The software maker is selling debt in as many as seven parts, including a rare 40-year bond, the people said. Initial price discussions for that portion of the deal are in the area of 1.65 percentage point above similarly dated Treasuries.

But they will reach a balance sheet limit at some point. They already have $100bn in net debt.

🧪🔬 Liberty Labs 🧬 🔭

🌾🚜👨🌾 The Long Bull Market in Harvests 🍽️

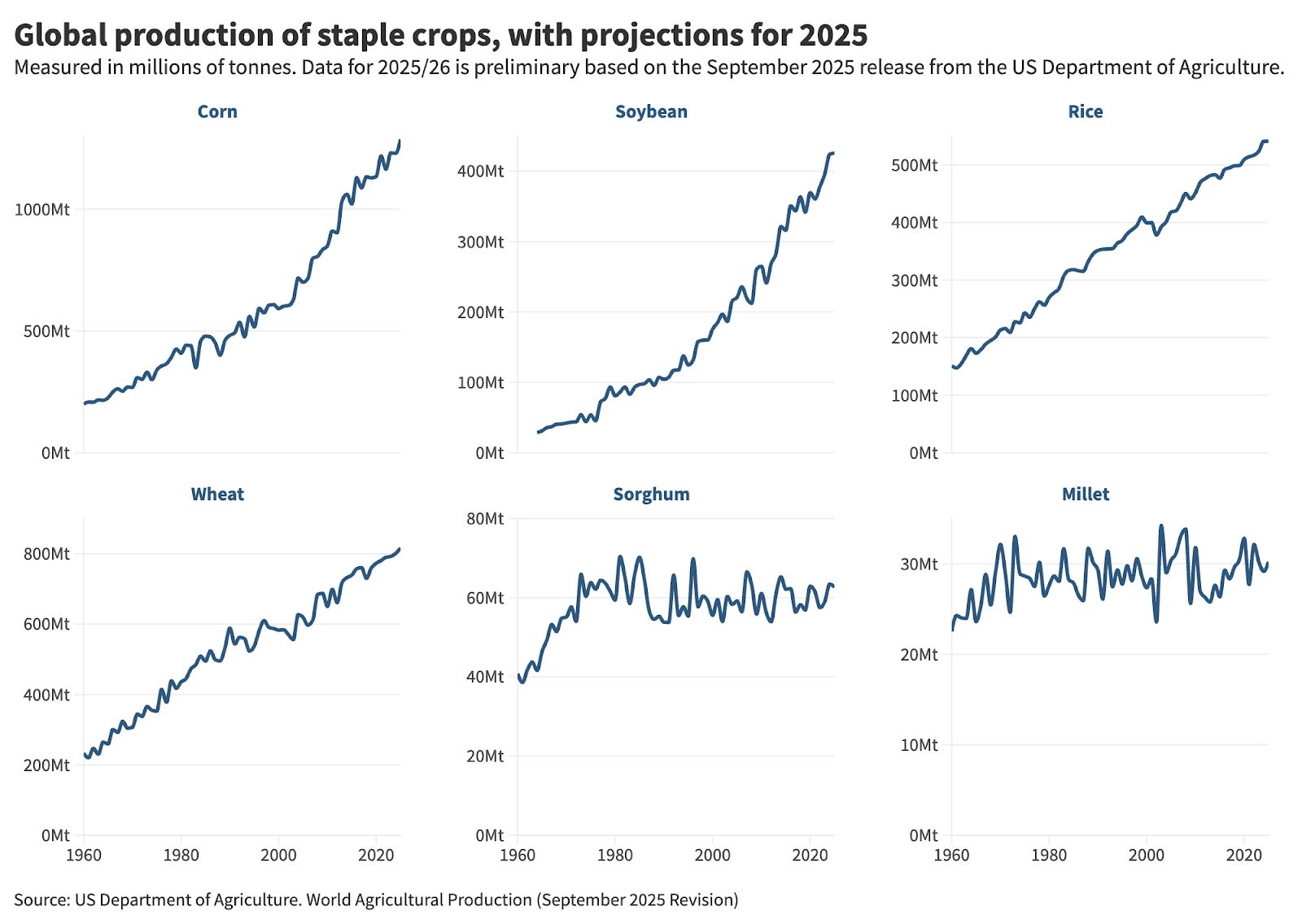

“Most crops, with the exception of sorghum and millet, have seen steady increases over the past 60 years, and that trend is expected to continue this year.”

Above are absolute numbers, but if you look at yields, they have also been steadily rising and those graphs look similar. In other words, we’ve been growing more food through improving yields, not just by planting more acreage.

How many people, back in the early days of the Green Revolution, would have predicted that it would keep going this steadily?

🇰🇵⌨️🔓 North Korean Infiltrators are Getting Remote Jobs at Tech Companies

Do you *really* know your coworkers?

CrowdStrike’s threat-hunting team investigated more than 320 incidents involving North Korean operatives gaining remote employment as IT workers during the one-year period ending June 30.

“It’s not just in the United States anymore,” Meyers said. The threat group escalated its operations throughout the past year, landing jobs at companies based in Europe, Latin America and elsewhere to earn salaries that are sent back to Pyongyang.

North Korea really knows how to follow trends. They stole huge sums in crypto, and now they’re using AI to get remote work:

“They use generative AI across all stages of their operation,” Meyers said. The insider threat group used generative AI to draft resumes, create false identities, build tools for job research, mask their identity during video interviews and answer questions or complete technical coding assignments, the report found.

You almost gotta admire the grind culture:

CrowdStrike said North Korean tech workers also used generative AI on the job to help with daily tasks and manage various communications across multiple jobs — sometimes three to four — they worked simultaneously.

🗣️🗣️ Podcast: ‘Data Centers: The Hidden Backbone of Our Modern World’ 🏗️🌐🕸️🛜💻

A nice new podcast discovery, hat tip to my friend MBI (🇧🇩🇺🇸):

This episode is 4 hours long, and I haven’t finished it yet, but I’m enjoying it enough so far to be able to recommend it.

To get the vibe, think narrative storytelling like what Acquired does, but about an innovation or infrastructure rather than about a company (the hosts credit Acquired explicitly for the format on their about page, so I’m not making this up).

If you want more, I also recommend the book ‘Tubes’ by Andrew Blum. It covers data-centers and internet infrastructure, though it was written pre-ChatGPT, so it doesn’t cover the recent buildout.

🎨 🎭 Liberty Studio 👩🎨 🎥

👽🎸 Alien Instrument: Spinning Guitar/Bass 🤯

I’ve seen a lot of weird and clever instruments, but this is the first time I’ve seen this one, and it’s very cool. I wish I could try it.

Thanks - very detailed & balanced explanations.

My only question - which will sound curmudgeonly - is in reference to 'But at least, Nvidia is supporting a customer that has a product that is actually useful'.

How confident are we that the product is useful relative to the cost?

But I suppose you are either a believer or non-believer on this topic!

The Oracle buyback analysis here is fascinating - buying back 40% of shares between $40-90 and potentially reissuing at $300+ would be impressive financial engineering. But the update about borrowing $15B with $100B in net debt already on the books is concerning. At some point Oracle hits a balance sheet limit, and then they'll need to issue equity anyway, probably at less favorable terms. The capex jump from 4% to 46% of revenue shows how capital intensive this AI infrastructure bet really is. If OpenAI doesn't deliver on those revenue projections, Oracle's financial engineering will look a lot less clever in hindsight.