596: Nvidia’s $500B Pipeline, Anthropic's Million TPUs, OpenAI & Microsoft's New Partnership, $80bn for Westinghouse Nuclear, UK Shipbuilding Lessons, and Mr. Scorsese

"Anthropic is quite the polyamorous player"

Fall in love with some activity, and do it!

Nobody ever figures out what life is all about, and it doesn’t matter.

Explore the world. Nearly everything is really interesting if you go into it deeply enough.

Work as hard and as much as you want to on the things you like to do the best.

Don’t think about what you want to be, but what you want to do.

—Richard P. Feynman

✈️ 🗽📚🎻🚉🏙️ I’m back!

Did you miss me?

(you don’t have to answer that if you didn’t 😅)

Well, I missed you.

I had a wonderful time at the OSV team gathering and at the subsequent writers’ room, diving deep into a not-yet-public project (sorry, can’t say more 🤐), spending time with many of my favorite brilliant people, and making new friends among collaborators and the 2025 cohort of OSV Fellows.

I’ve never been part of a group so full of curiosity, so passionate about what they’re creating, so alive with possibilities!

Fascinating conversations about my favorite topics and rabbit holes — from books to films to tech to business to biology to history and more — kept me up until 3 a.m. on most nights. Always among the last to bed, I refused to tap out for fear of missing anything, which made me glad I don’t really drink anymore, because mornings would’ve been quite rough.

There’s so much to share with you, I can’t fit it all here, but I’ll give you just a few highlights and save the rest for later:

I went to Lincoln Center for the first time with a few friends (thank you, Jim, for the tickets! 🎟️🙏). It was a chamber music concert honoring violin virtuoso Joseph Joachim, with pieces by the composers he inspired: Brahms and the Schumanns. Typically, I listen to concert programs repeatedly beforehand to familiarize myself with the music, but this time I went in cold.

It was still a great experience: beautiful venue, excellent acoustics, world-class players 🎻🎶

Another highlight was meeting Jameson Olsen in person. I’ve long been a fan of his podcast Becoming the Main Character (I’ve linked to it multiple times, I hope you checked it out!) and we had talked via Zoom and DMs, but nothing beats hanging out in person and unstructured convos that go all over the place and help you really get to know someone.

He’s the real deal! His approach to storytelling and the philosophy behind it is more thoughtful than anything I’ve seen in years, and he takes his craft very seriously.

He’s making me want to read much more fiction AND think about it more deeply.

Mark my words: He’ll someday help millions discover and rediscover the greatest stories ever told 📚 as a friendly & knowledgeable tour guide for imaginary worlds and a sensei for protagonists in training 🥋

I had a great nerdy conversation about biology, aging, and the potential second- and third-order societal effects of longevity breakthroughs with OSV Fellow Benjamin Arya.

He’s exploring ways to bolster cellular DNA repair, learning from long-lived species, to ultimately tackle cancer. I’ll likely have him on the podcast for a deep dive into all this. Stay tuned!

I’m running out of space in this Edition, so more later! 👋

🔎📫💚 🥃 Exploration-as-a-service: your next favorite thing is out there, you just haven’t found it yet 👇

🏦 💰 Business & Investing 💳 💴

Google 🤝 Anthropic: A Million TPUs for 10s of Billions 😮

The Big G keeps winning lately, even when it comes to other people’s AI (well, to be fair, they have a big investment in Anthropic, even though they also compete with them).

They’ve signed a deal with Anthropic valued at ‘tens of billions’, giving Anthropic access to up to a million Google TPUs and over 1 gigawatt of capacity coming online in 2026.

Anthropic is quite the polyamorous player, with massive compute deployments coming on Nvidia GPUs, Amazon Trainium chips (Project Rainier, which has been estimated at “over 400,000 Trainium2 chips” and in the 2.2 gigawatt range, based on estimates for the Indiana DC), and Google TPUs.

But it makes sense. Anthropic apparently has a lot of design input into AWS’s next-generation in-house chips, so those chips will largely be tuned to Anthropic’s roadmap and needs.

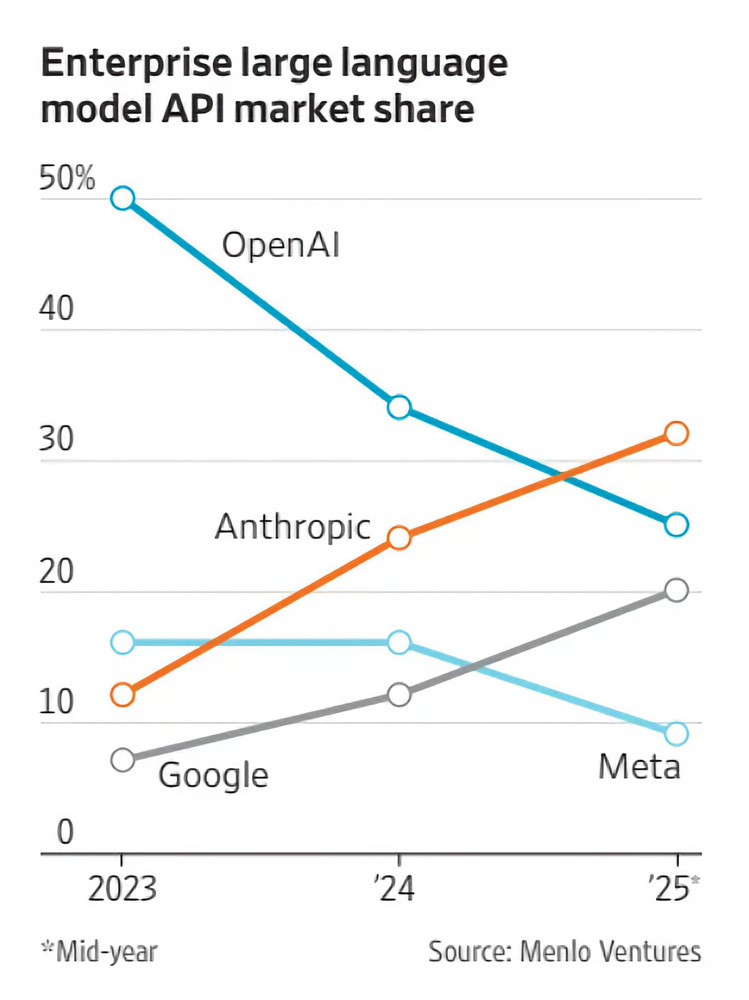

And if they want to keep up with OpenAI — the other poly player, making large deals with *everyone* and using all kinds of chips as well as designing its own with Broadcom — they have to take all the compute and funding they can get.

I'm sure the Cursor Bros will be happy when some of that capacity comes online, because Anthropic has been starved for inference. They have fewer total users than OpenAI, but they have a ton of power users who go through a gazillion tokens per day for complex agentic coding workflows.

✂️🥧 OpenAI Finally Restructures: Microsoft Gets 27% + $250bn in Azure Commitments 🧩

After months of what must have been tense negotiations, OpenAI and Microsoft have finally agreed on a new structure and how to slice the new pie:

First, Microsoft supports the OpenAI board moving forward with formation of a public benefit corporation (PBC) and recapitalization. Following the recapitalization, Microsoft holds an investment in OpenAI Group PBC valued at approximately $135 billion, representing roughly 27 percent on an as-converted diluted basis, inclusive of all owners—employees, investors, and the OpenAI Foundation. Excluding the impact of OpenAI’s recent funding rounds, Microsoft held a 32.5 percent stake on an as-converted basis in the OpenAI for-profit.

Microsoft started backing OpenAI in July 2019 and has invested over $13bn.

At first glance, a 10x in about 5.5 years is pretty, pretty good. That’s a CAGR of over 50%.
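For anyone who wants to check the math, here is a minimal sketch of that CAGR calculation. It uses only the figures above ($13bn invested, a stake valued at roughly $135bn, about 5.5 years) and treats the whole investment as if it had been made on day one, which is a simplifying assumption since the money actually went in over several rounds. The function and variable names are just illustrative. The second call shows how sensitive the result is to the holding period: counting the full span from July 2019 to late October 2025 (roughly 6.25 years) gives a lower rate.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate as a fraction (0.5 means 50% per year)."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Figures from the text, in billions of dollars.
invested_bn = 13.0   # "has invested over $13bn"
stake_bn = 135.0     # "valued at approximately $135 billion"

multiple = stake_bn / invested_bn

# Holding period used in the text (an approximation).
rate_5_5 = cagr(invested_bn, stake_bn, 5.5)

# Assumption for comparison: full span from July 2019 to late October 2025.
rate_6_25 = cagr(invested_bn, stake_bn, 6.25)

print(f"Multiple: {multiple:.1f}x")
print(f"CAGR over 5.5 years:  {rate_5_5:.1%}")
print(f"CAGR over 6.25 years: {rate_6_25:.1%}")
```

Because most of the capital was committed well after 2019, a money-weighted return would likely come out higher than either number, so treat this as a rough lower-bound sketch rather than a precise figure.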

But, if I understand things correctly, that’s just part of the value that accrued to Microsoft. Most of what they provided to OpenAI has been compute, which was likely marked roughly at market prices in the deal, but Microsoft’s actual costs are lower. I don’t know the exact cash outlay to provide that compute, but it was certainly below the nominal amount in the deal.

Also, this partnership helped Microsoft build AI scale and expertise in Azure, and attract a bunch of API customers who want the OpenAI models but, for some reason, prefer to get them from Azure. It certainly helped Azure momentum and created more equity value inside Microsoft from that revenue.

Then there’s the revenue share, which is reported to be around 20% of OpenAI’s revenues until AGI is declared (more on that below), and the inclusion of OpenAI models inside of Microsoft Office products (Copilot). Copilot hasn’t exactly set the world on fire, but I’m sure that’s another nice source of high-margin revenue.

All in all, for what may have been a sub-$10bn cash investment, Microsoft has likely received hundreds of billions in equity value. Quite the home run! ⚾

The crucial question for the NEXT five years is whether it will turn out to have been a good move for Satya to take his foot off the accelerator and allow others to become OpenAI partners, or if he should’ve gone for broke and used MS’ balance sheet more aggressively to own a bigger slice of the Stargate pie 🏗️🍰🤔

One of the changes in the new agreement has OpenAI purchasing an additional $250B of Azure services BUT Microsoft will no longer have a right of first refusal to be OpenAI’s compute provider. It looks like that clause was expensive to remove, but OpenAI is throwing multi-$100bn commitments left and right these days, so what’s an additional quarter trillion dollars? ¯\_(ツ)_/¯

Some of the interesting changes to the agreement include:

Once AGI is declared by OpenAI, that declaration will now be verified by an independent expert panel.

Microsoft’s IP rights for both models and products are extended through 2032 and now includes models post-AGI, with appropriate safety guardrails.

Microsoft’s IP rights to research, defined as the confidential methods used in the development of models and systems, will remain until either the expert panel verifies AGI or through 2030, whichever is first. Research IP includes, for example, models intended for internal deployment or research only. Beyond that, research IP does not include model architecture, model weights, inference code, finetuning code, and any IP related to data center hardware and software; and Microsoft retains these non-Research IP rights.

Microsoft’s IP rights now exclude OpenAI’s consumer hardware. (This is the Jony Ive thing)

OpenAI can now jointly develop some products with third parties. API products developed with third parties will be exclusive to Azure. Non-API products may be served on any cloud provider.

Microsoft can now independently pursue AGI alone or in partnership with third parties. (good luck)

As for the non-profit, it’s pretty massive: “Now called the OpenAI Foundation, [it] holds equity in the for-profit currently valued at approximately $130 billion, making it one of the best resourced philanthropic organizations ever.”

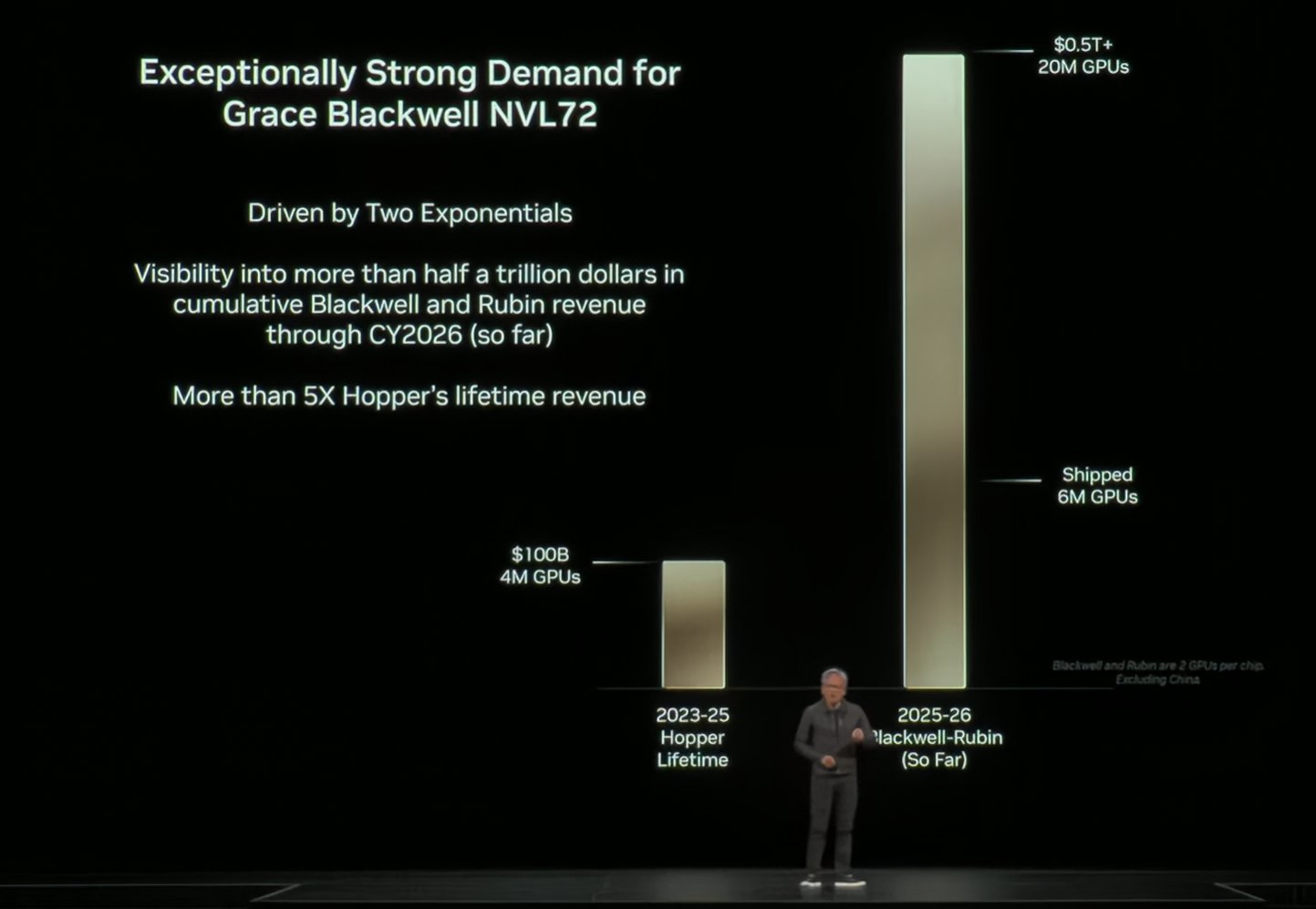

😎 GTC Preview: Nvidia’s $500B Pipeline, 3x Their Entire Annual Revenue 🚀

As I’m writing this, Jensen is on stage at GTC in D.C., announcing a bunch of stuff.

In the next Edition, I’ll dive deep into that and give my highlights. But for now, here’s something that jumped out:

Jensen says they have visibility into HALF-A-TRILLION dollars of Blackwell+Rubin business through the end of 2026. That’s a lot of hardware!

For scale, Nvidia’s entire trailing 12-month revenue up to the latest quarter was $165bn.

Dwarkesh Patel and Romeo Dean wrote a good piece about capex with a compelling way to put Nvidia’s scale in perspective:

With a single year of earnings in 2025, Nvidia could cover the last 3 years of TSMC’s ENTIRE CapEx. [...]

With only 20% of TSMC capacity, Nvidia has generated $100B in earnings.

Suppose TSMC nodes depreciate over 5 years - this is enormously conservative (newly built leading edge fabs are profitable for more than 5 years). That would mean that in 2025, NVIDIA will turn around $6B in depreciated TSMC Capex value into $200B in revenue.

Further up the supply chain, a single year of NVIDIA’s revenue almost matched the past 25 years of total R&D and capex from the five largest semiconductor equipment companies combined, including ASML, Applied Materials, Tokyo Electron...

🤯

They make an interesting point:

if you were to naively speculate about what would be the first upstream component to constrain long term AI CapEx growth, you wouldn’t talk about copper wires or transformers - you’d start with the most complicated things that humans have ever made - which are the fabs that make semiconductors. We were stunned to learn that the cost to build these fabs pales in comparison to how much people are already willing to pay for AI hardware!

Nvidia could literally subsidize entire new fab nodes if they wanted to.

🤯

🧪🔬 Science & Technology 🧬 🔭

🇺🇸⚛️ U.S. Govt Partners with Westinghouse Owners for $80B Nuclear Buildout

Details are a bit fuzzy at this point, but I like where this is going.

The U.S. Government has entered into a “strategic partnership” with Brookfield and Cameco (the joint owners of Westinghouse) to build “at least $80 billion of new reactors across the United States using Westinghouse nuclear reactor technology.”

They’re looking at the AP1000, the type built in Georgia (Westinghouse says 14 are under construction globally), and the smaller AP300.

It’s not yet clear how many GW of capacity, or which sites (expansion of existing ones?), but this is a start. And it’s urgently needed as demand for reliable electricity is going up faster than it has in decades, thanks to the massive AI data-center buildout.

🏗️🚢 From 57% to Zero: How the UK Lost Its Entire Shipbuilding Industry ⚓📉 (1947→2023)

Brian Potter has a great piece on how the UK went from building OVER HALF of the world’s ship tonnage in 1947 to ZERO commercial ships in 2023.

Here are my highlights:

Following the end of WWII, UK shipbuilding appeared ascendant. The shipbuilding industries of most other countries had been devastated by the war (or were, like Japan, prevented from building new ships), and in the immediate years after the war the UK built more ship tonnage than the rest of the world combined.

Even if we assume that the UK couldn’t have maintained that post-WWII market share, it still did MUCH WORSE than expected over the coming decades. Why?

But this success was short-lived. The UK ultimately proved unable to respond to competitors who entered the market with new, large shipyards which employed novel methods of shipbuilding developed by the US during WWII. The UK fell from producing 57% of world tonnage in 1947 to just 17% a decade later. By the 1970s their output was below 5% of world total, and by the 1990s it was less than 1%. In 2023, the UK produced no commercial ships at all.

The lesson here is about flexibility and changing your process when new, more effective methods appear. Europe as a whole seems to need to hear this lesson again these days.

Ultimately, UK shipbuilding was undone by the very thing that had made it successful: it developed a production system that heavily leveraged skilled labor, and minimized the need for expensive infrastructure or management overheads. For a time, this system had allowed UK shipbuilders to produce ships more cheaply and efficiently than almost anywhere else. But as the nature of the shipping market, and of ships themselves, changed, the UK proved unable to change its industry in response, and it steadily lost ground to international competitors.

There’s probably a lesson for the U.S. here too. If it wants to rebuild capacity to manufacture certain things on its own soil, it needs the flexibility to adopt best practices and move fast. Spending 10-20 years in paperwork and lawsuits isn’t a viable option.

Having ports that are way less automated than global competitors is another example of this inability to change and adapt.

💻 Claude’s New Desktop App: First Impressions 🤔

Bottom line: It’s not as good as the ChatGPT app, but it’s very welcome and a step in the right direction. If they can make it a bit snappier and add a few missing features, it could be a contender.

I noticed in my recent usage patterns that Claude Sonnet 4.5 is so good that it has bumped off most of my Gemini 2.5 Pro usage. I’ve made it the default model in the internal OSV AI tool that I’m using for a lot of things.

The other model I use most is GPT-5 in Extended Thinking mode. It has a good balance of attributes and a good “flavor” for my own use cases (your mileage may vary, of course).

My most anticipated model is Gemini 3.0 Pro. I don’t know if my expectations are too high, but I suspect it will be the next “wow” moment for AI. We’ll find out soon: the latest rumblings are that Google will release it before the end of the year.

🎨 🎭 The Arts & History 👩🎨 🎥

🎥🔪🩸 ‘Mr. Scorsese’: How He Stuck to His Guns and Won

This 2025 Apple TV 5-part series fills an interesting hole in my Scorsese mental library.

I know the films, and I’ve seen a few interviews with the man (mostly from the past 10 years — this half-hour convo between him and Timothée Chalamet was good), but I feel like I don’t *really* know that much about him and his journey making all those great films, especially in the early days when they were breaking new ground and facing all kinds of controversies.

I discovered him when he was already becoming a household name, but it was a long, hard road to get there.

Episode 1 gives great background on Scorsese, his family, where he grew up, and his early interest in film. It’s not the most exciting episode in some ways, because the films you know don’t show up yet. But when you learn about his childhood and family, a lot of recurring themes in his films start to make a lot more sense.

But then, we’re off to the races.

He meets Robert De Niro, and they have a pretty incredible collaboration, career-defining for both. The ‘Taxi Driver’ section is great, and the parallels and contrasts to his later collaboration with Leonardo DiCaprio are fascinating.

I was just six when ‘The Last Temptation of Christ’ came out, so I was too young to know about the controversy. It’s pretty incredible to see all these people who haven’t seen the film being so angry about it. It was banned in multiple countries, a theater showing the film was firebombed, and Scorsese had to have FBI protection… Yet theaters carried horror films showing people getting murdered with chainsaws.

¯\_(ツ)_/¯

The backstory on ‘Goodfellas’ is also great. Incredible how much of the film he had in his head, down to tiny details of cuts and music cues. I also didn’t know that Joe Pesci brought in the idea for the famous “funny how?” scene, and that it wasn’t in the script 🤡 Nor did I know that test audiences hated ‘Goodfellas’, with many walking out…

The Leo era is also fascinating, because their partnership brought Scorsese the bigger budgets and higher profile that he couldn’t quite get on his own. There was also, late in his career, a complete change of perception for his early films, now considered classics and highly influential, while at the time they were often ignored or controversial, and it took decades for him to win his first directing Oscar.

His ability to stick to his guns in the face of pressure from producers and financiers is admirable (they usually wanted less violence and happy endings). They eventually came around to his point of view, rather than the other way around 👍 👍

westinghouse, calm in the eye of nuclear meme, is even looking at possible revival of SC.

i dont know if it has better odds than some trump influenced deployment; my reflex being what is the MAGA (wright, trump) cut?

i wonder if some of this is brookfield's seller's regret (~50% stake) too cheaply.

I am still struggling to understand how a company that has a revenue of 12 billion can make purchases of 250 billions. It is like all you own would be 50,000 euros and I would go and say that I am going to buy a house for 1,000,000 and everyone would be like - yep, sounds legit, lets celebrate the deal.