598: Thinking About Bubbles, Neo's Wizard-of-Oz Problem, Zuck's $60bn Models, Europe, OpenAI + AWS, Cursor 2.0 vs Anthropic, Why Humanoids, and Cel Animation

"what differentiates a “good bubble” from a bad one?"

There are no solutions. There are only trade-offs.

—Thomas Sowell

🎥🎬🤖🤔 I was a kid who loved robots, and the ‘Short Circuit’ films loomed large in my imagination growing up.

The basic premise: an experimental military robot accidentally gains sentience. It begins to question orders, grows deeply curious about the world, and develops a sense of humor and morality as it makes friends with humans. Hilarity and drama ensue.

I haven’t seen the films in a loooooong time. They’ve probably aged very badly. But it’s still a cool concept. The classic “stranger in a strange land” as a way to explore what makes us human through an outsider’s eyes 👀

If we remade it today, or made something similar, we could do much better because, for the first time, we’re very close to having the technology to use an actual robot as an “actor” (maybe not sentient, but also not fully on rails).

By this I mean: Instead of building a robot with pre-scripted behavior for every scene, or one controlled remotely like a puppet, what if we powered it with an LLM + Vision-Language-Action (VLA) model so that it can actually ‘act’ in real-time alongside human actors and deliver a unique performance on each take?

The AI would have access to the script and even do rehearsals to make sure it has extensive context on what it has to do, but like a human, it could vary delivery and physical performance to have more ‘presence’ during a scene and better react to what’s going on around it. It could also receive real-time feedback from the director, because so much of the magic of films happens spontaneously on set, something you lose with fully pre-programmed bots.

It doesn’t have to be a ‘Short Circuit’ remake, though in this age of reboots, it would make sense.

A higher level of difficulty would be to create something like Alex Garland’s ‘Ex Machina’ but with actual robots rather than humans disguised with VFX. Or on the cuter end of the spectrum, a physical embodiment of Wall-E in a live-action film, maybe 🤔

In any case, I can’t wait for the first movie crediting a robot running live AI as an ‘actor’.

Tell me that wouldn’t be cool!

🔎📫💚 🥃 Exploration-as-a-service: your next favorite thing is out there, you just haven’t found it yet 👇

🏦 💰 Business & Investing 💳 💴

🇪🇺 Europe’s Problems Are Everyone’s Problems 🕸️🌐

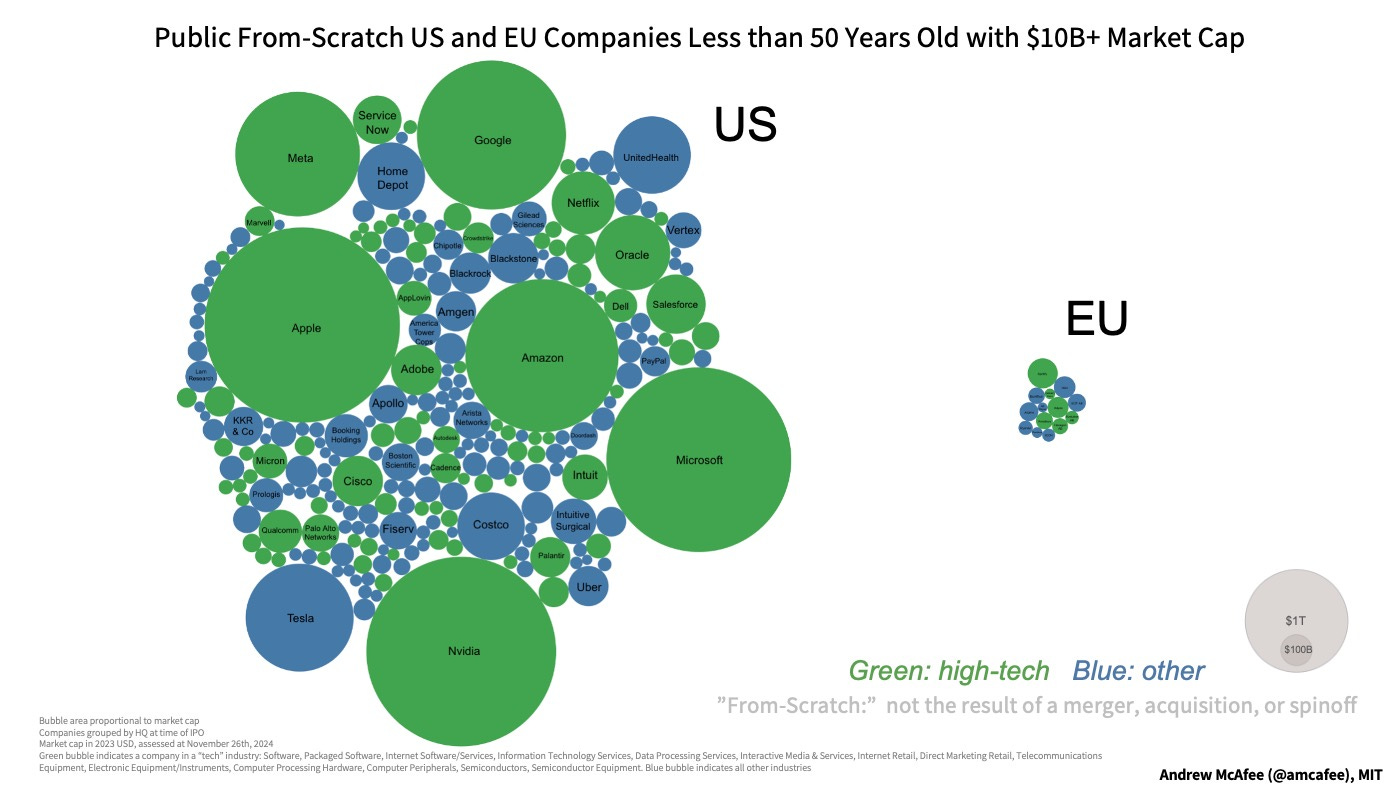

I was thinking about this graph again (created by Andrew McAfee).

It shows public companies in the US and EU that are less than 50 years old, have at least a $10bn market cap, and aren’t just the result of a merger/spinoff/etc. It’s an imperfect but useful proxy for dynamism, and the difference is so striking that it’s hard to explain away, even if we want to steelman Europe’s approach.

There may be a temptation to look at this as a European problem, but everything is connected.

Imagine how much better the world would be if the EU were as dynamic as the US: Half a billion people (if we want to just count the EU, or around 750m if we include all of wider Europe) creating companies, technologies, medicines, etc. A better trading partner and ally, all kinds of win-win exchange, helping to better counter-balance autocrats and adversaries around the world.

That would be an interesting alternate history!

If voluntary trade is largely win-win (we can all come up with exceptions, but it’s largely how the US became so wealthy and powerful), having a bigger and better trading partner would make both the US AND Europe better off, and if China is the most worrisome adversary, a bloc (also including Japan, South Korea, etc) that is much larger in both population and economic output would strengthen everyone’s position.

This is why I try not to feel too much schadenfreude about Europe’s self-inflicted problems, but rather root for them to change course.

(to be clear, I’m not saying everything is great in the US, it has plenty of its own problems, but when it comes to creating world-beating innovative companies that generate a lot of technological breakthroughs and wealth, I think it’s clear which system is working better)

🫧 Thinking about Bubbles 🤔

If most people could sit out bubbles, they wouldn’t become bubbles in the first place.

Reflexivity strikes again 🔄

Most market participants have heard about past bubbles, and they’re smart enough to want to avoid them, or at least, avoid the bursting part 💥 So if a bubble wasn’t extremely convincing, if it didn’t create a dynamic where it feels worse to sit it out than to participate, it wouldn’t inflate in the first place.

If it didn’t feel like “yes, sure sure, but there’s no other choice, this time it’s different because XYZ”, then it wouldn’t get traction.

Just listen to Big Tech CEOs talk about their decision to invest hundreds of billions of dollars in capex. They clearly see the bigger downside in underinvesting rather than in overinvesting.

Yet if everyone thinks like that, doesn’t that greatly increase the odds of overinvesting in the aggregate?

To be clear, I’m not saying bubbles are necessarily a bad thing. I’m using the term in a fairly neutral way, as a description of a phenomenon where a certain industry is inflating super quickly and massive investments are being made based on future expectations of cashflows that haven’t materialized yet.

How else could OpenAI make deals for $1.4 trillion in compute infra?

Bubbles have complex effects. Some are positive things that would never happen outside of bubble dynamics: where would anyone get the capital to compete with TSMC, Nvidia, Google, ASML, hyperscalers, etc, during normal times? Does anyone think that OpenAI and Anthropic would have been able to raise the tens of billions they need in a more sleepy time, like 2011? Other effects are, of course, negative: investors, especially the less sophisticated ones, tend to pile on at the very top, right before vast amounts of wealth evaporate.

But what differentiates a “good bubble” from a bad one?

The ideal bubble leads to breakthroughs that have long-lasting positive effects, long after everyone has calmed down. It unlocks whole new branches in the tech tree by helping coordinate everyone’s attention, talent, and capital on a few very promising areas for a while. And it helps finance long-lived assets that can be used for things beyond the bubble.

So stockpiles of tulip bulbs or Beanie Babies, not so great after the bubble. 🌷🌷🌷

But rail or dark fiber crisscrossing the world: Amazing!

The ideal outcome is a platform on which to build for decades to come! 🛤️

For this era, I think the most likely benefits will come from vast R&D investments in AI, robotics, semiconductors, etc, which would be a fraction of their current size in a more normal environment, and from lots of new power generation.

Hopefully, building all these power plants and data-centers also strengthens our infrastructure-building muscle 💪🏗️👷

Whatever happens to the stocks of AI companies, society would benefit from more energy abundance and from broad access to very good AI models and robots.

💰 OpenAI’s $38 Billion Compute Deal with Amazon 🤝

Would you ever have thought a couple of years ago that a $38 billion compute deal would be considered on the smaller side? That’s the power of bubble dynamics! After a while, you anchor to huge numbers and they stop being impressive…

Anyway:

Under this new $38 billion agreement, which will have continued growth over the next seven years, OpenAI is accessing AWS compute comprising hundreds of thousands of state-of-the-art NVIDIA GPUs, with the ability to expand to tens of millions of CPUs to rapidly scale agentic workloads. [...]

OpenAI will immediately start utilizing AWS compute as part of this partnership, with all capacity targeted to be deployed before the end of 2026, and the ability to expand further into 2027 and beyond.

As soon as the ink was dry on the new agreement with Microsoft, OpenAI turned around and made this deal with Azure’s biggest competitor to “immediately” start running workloads (which raises the question: Why was all this capacity available? Is it just a testament to AWS’ ability to build fast at scale and source lots of power?)

They also mentioned that AWS is building Nvidia GB200 and GB300 capacity for OpenAI, to be used for both inference AND training.

It’ll be interesting to see if Amazon can get OpenAI to also use Trainium chips, building further scale after getting Anthropic on that train (Amazon says Anthropic will scale to ~1M Trainium chips by end-2025). I’m guessing OpenAI would probably prefer to focus on its own internal ASIC projects, but for the right price… they are so polyamorous that I wouldn’t be surprised if they add one more chip to the list 🤔

By making deals with everyone, OpenAI is putting itself in an enviable position. They can play various partners against one another and extract better deal terms than if they were dependent on just one compute provider, as they first were with Microsoft for a few years.

Microsoft had all the leverage in that relationship and extracted pretty lucrative conditions. But that’s over now. It’s OpenAI that got equity in AMD when they partnered (up to 10% of equity via warrants), and I suspect it won’t be the last time that we see a deal like this.

📲⚙️💰 Zuck’s $60bn Transformers

As everyone is wondering about monetizing AI investments, Zuckerberg dropped this line in the most recent quarterly call:

This quarter, we saw meaningful advances from unifying different models into simpler, more general models, which drive both better performance and efficiency. And now the annual run rate going through our completely end-to-end AI-powered ad tools has passed $60 billion. And one way that I think about our company overall is that there are 3 giant transformers that run Facebook, Instagram and ads recommendations. We have a very strong pipeline of lots of ways to improve these models by incorporating new AI advances and capabilities.

And at the same time, we were also working on combining these 3 major AI systems into a single unified AI system that will effectively run our family of apps and business using increasing intelligence to improve the trillions of recommendations that we’ll make for people every day.

I’m sure something very similar could be said by Google about how a certain number of large transformer models are powering businesses that generate tens of billions of dollars, if not $100+ billion 🤯

That’s the most direct monetization of modern transformer-based AI, generating much more than ChatGPT or Anthropic’s APIs (though growing more slowly).

And having these large first-party workloads is a big advantage for Meta and Google, because if demand for chatbots, coding agents, and such, slows down, they can probably use a lot of the excess compute internally to nudge up ad targeting and content recommendation effectiveness.

In other words, a lot of these processes can absorb more compute to deliver better results, and even if returns don’t scale up linearly with additional compute, it’s still much better to get some return on it than having idle GPUs/ASICs in a shell somewhere.

The fact that they’re attempting to merge everything into one system is interesting. On one hand, I would expect that specialized models would outperform a more general model, but because these models are architected as a complex mixture of experts (MoE), they can probably have expert sub-models that are better at ad targeting, while others are better at feed content recommendation, etc.

But by combining them, any improvements to the shared infrastructure get more leverage. Develop once and deploy once, benefit everywhere, rather than having to develop & deploy three times into models that may grow apart over time and become harder and harder to reconcile.

That’s my guess anyway, I don’t have visibility into their plans ¯\_(ツ)_/¯
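For readers who haven’t run into mixture-of-experts before, here is a toy Python sketch of the general idea: a router scores the experts, and the input goes to the highest-scoring one(s). This is NOT Meta’s system. The expert functions, the names, and the hand-picked router scores are all made up for illustration. In a real MoE, the router scores come from a learned gating network rather than being typed in by hand.

```python
import math

def softmax(scores):
    """Turn raw router scores into weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route(router_scores, experts, x, top_k=1):
    """Send input x to the top_k highest-scoring experts and blend their outputs."""
    weights = softmax(router_scores)
    chosen = sorted(range(len(experts)), key=lambda i: weights[i], reverse=True)[:top_k]
    norm = sum(weights[i] for i in chosen)  # renormalize over the chosen experts only
    blended = sum(weights[i] / norm * experts[i](x) for i in chosen)
    return blended, chosen

# Hypothetical stand-ins for specialized sub-models (real experts are neural network layers).
experts = [lambda x: x * 2.0, lambda x: x + 10.0, lambda x: -x]
names = ["ads", "feed", "other"]

# Hand-picked scores that favor the first expert; a real router would compute these from x.
output, chosen = route([3.0, 0.5, -1.0], experts, x=4.0, top_k=1)
print([names[i] for i in chosen], output)
```

The point of the sketch: only the chosen experts run for a given input, so one big shared model can still contain specialists, which is roughly the trade-off I’m guessing at above.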

🇰🇷 🏭🔍🤖 Samsung + Nvidia: Creating the Semiconductor ‘AI Megafactory’ of the Future

One more week, one more Nvidia partnership. This time with Samsung:

[Samsung] said it will deploy more than 50,000 of Nvidia’s most advanced graphics processing units in the new facility to embed artificial intelligence throughout its entire chip manufacturing flow.

It’s also planning to leverage AI to help with chip development and design to facilitate a new generation of semiconductors, mobile devices and robotics with enhanced AI capabilities of their own.

The goal is to build a semiconductor fab that is AI-powered at almost every stage of chip manufacturing, automating operations AND design even more than they already are. Modern fabs are already *very* automated, but with more traditional techniques.

It remains to be seen if AI can provide a step-change in gains or if it’ll turn out to be more evolutionary.

To do this, it’s going to rely heavily on AI-powered “digital twins,” or virtual replicas of its chip products. Using the Nvidia Omniverse platform, it’ll create digital twins of every component that goes into its semiconductors, including memory, logic, advanced packaging and more. It’s also going to create twins of its actual fabrication plants and the expensive machinery within them.

👩❤️💋👨 The Digital Marketplace for Dating: Aggregation Theory for Relationships? 🤳

Something a bit different!

Erik Torenberg has an interesting piece about some of the challenges of the modern dating world, and how app-dating has changed the dynamics at play and created a mismatch:

Before online dating, the available dating pool was just the people in your town: the people at your local bar, at your church, at your office, etc. Online dating expanded that pool by orders of magnitude, which changed how we think about dating in general.

There’s a parallel there with how most industries were shielded from competition by geography and other barriers, and the internet and globalization removed those barriers and made competition much more brutal, with a few power law winners gobbling up a lot of the market.

What online dating does is enable hypergamy at a massive scale. Hypergamy is the tendency for women to want to date the best men, no matter where the woman is in the hierarchy. Men also want top women of course, but they’re on average willing to settle for any woman, at least for casual sex, whereas women are much more discerning, which makes sense given women have a much bigger risk than men when it comes to sex, since women can get pregnant. It’s basic biology: Sperm is cheap, eggs are expensive.

This is a population-level observation. Of course, any single individual’s mileage may vary.

What we see with algorithmic online dating isn’t a mechanism to assign the perfect match to each person of the opposite sex. Instead, we’ve created a machine where the top 20% of men mate with many different partners and the top 80% women try to get the top 20% of men to date and ultimately marry them (and not just have sex with them).

Aggregation theory for dating? Where’s the Ben Thompson of dating 😅

Algorithmic dating conflates two markets, the market for relationships and the market for sex under the ambiguous banner of “dating.” What happens then is men on apps try to match with as many women as possible and women try to match with a small selection of higher status men. That leads to the situation where a dating app’s natural equilibrium is that a narrow set of men have “dating” access to almost all the women if they choose to, and they typically do. Even with the best intentions, these men aren’t interested in long-term relationships with all these women. The more options a man has, the less inclined he is to want one single relationship.

In numbers:

Men swipe right on 60% of women, women swipe right on 4.5% of men.

The bottom 80% of men are competing for the bottom 22% of women and the top 78% of women are competing for the top 20% of men.

A guy with average attractiveness can only expect to be liked by slightly less than 1% of females. This means one “like” for every 115 women that see his profile.
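A quick sanity check on how those figures fit together, as a minimal Python sketch. The numbers are the ones quoted above; the variable names are mine and purely illustrative.

```python
# Figures quoted above; variable names are illustrative, not from the original piece.
women_per_like = 115            # "one like for every 115 women that see his profile"
men_right_swipe_rate = 0.60     # men swipe right on 60% of women
women_right_swipe_rate = 0.045  # women swipe right on 4.5% of men

# One like per 115 profile views, expressed as a rate.
average_guy_like_rate = 1 / women_per_like
print(f"Average guy like rate: {average_guy_like_rate:.2%}")

# How much more often men swipe right than women, on these numbers.
selectivity_ratio = men_right_swipe_rate / women_right_swipe_rate
print(f"Men swipe right about {selectivity_ratio:.1f}x as often as women")
```

So “slightly less than 1%” and “one in 115” are the same claim. The gap between that figure and the 4.5% overall average is what you would expect if likes are heavily concentrated on a small group of men, which is the power-law point of the piece.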

🧪🔬 Science & Technology 🧬 🔭

💾 Cursor 2.0 + Composer: Faster, Cheaper, In-House, and Reducing Reliance on Anthropic

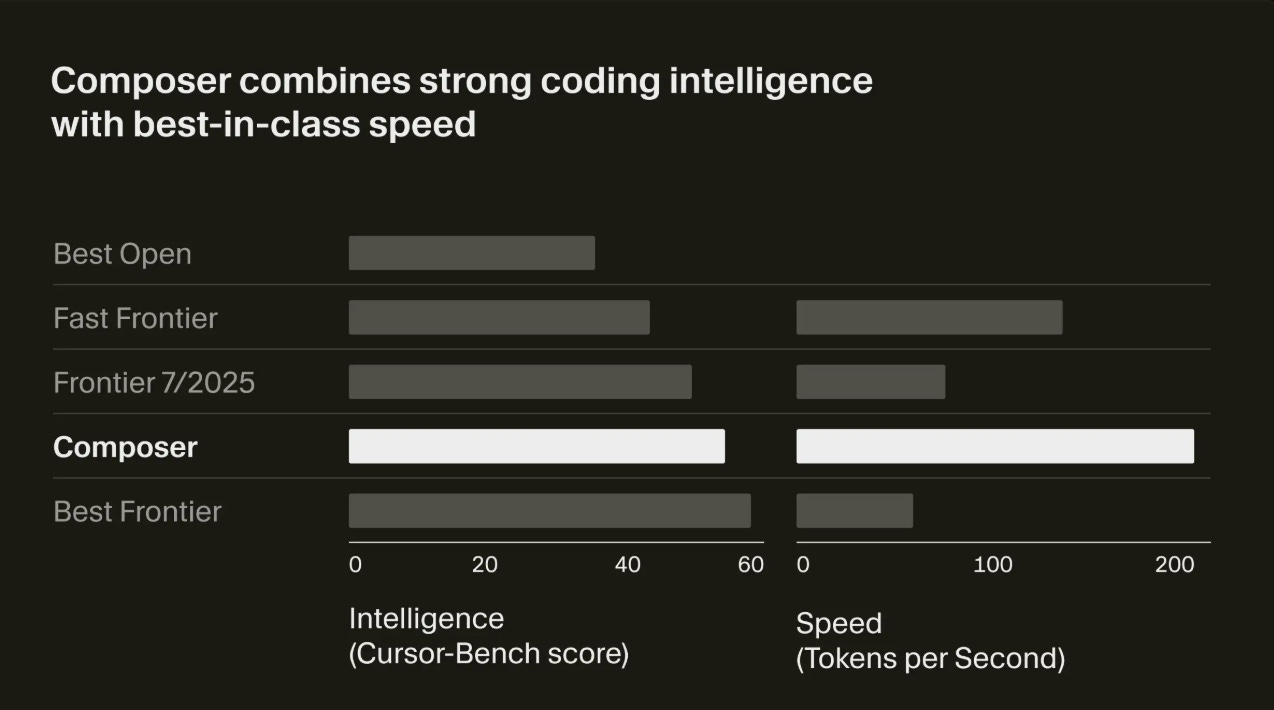

The biggest announcement with Cursor 2.0 was Composer, a ‘frontier model’ created specifically by Cursor to excel at coding tasks while being freaking fast (and of course, less expensive/more profitable for Cursor than running Anthropic APIs…).

Cursor didn’t give us a ton of info about Composer, but it’s very likely to be based on an open-weights model like Qwen with a lot of reinforcement learning post-training on top to make it particularly good at the specific coding tasks that are common in Cursor.

In some ways, it’s a bit like how OpenAI created Codex on top of their more general models.

Composer is a mixture-of-experts (MoE) language model supporting long-context generation and understanding. It is specialized for software engineering through reinforcement learning (RL) in a diverse range of development environments. [...]

Reinforcement learning allows us to actively specialize the model for effective software engineering. Since response speed is a critical component for interactive development, we incentivize the model to make efficient choices in tool use and to maximize parallelism whenever possible.

The post-training RL paradigm seems to be holding for now. Extra compute dollars seem better invested in that slice of the training pipeline than anywhere else right now. Of course, that may change at any time.

In addition, we train the model to be a helpful assistant by minimizing unnecessary responses and claims made without evidence. We also find that during RL, the model learns useful behaviors on its own like performing complex searches, fixing linter errors, and writing and executing unit tests.

Based on commentary from Cursor users, this token efficiency has long been one of the big advantages of Anthropic vs most others, and clearly, Composer is targeting it.

If you are paying by token volume on the API, having a less verbose model can make a big difference.
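To make that concrete, here is a back-of-the-envelope sketch in Python. Every number in it is made up for illustration (the price, the task count, the tokens per task); none are real Cursor or Anthropic figures. The `api_cost` helper is hypothetical too.

```python
def api_cost(output_tokens_per_task: int, tasks: int, usd_per_million_tokens: float) -> float:
    """Cost in USD for output tokens billed at a flat per-million-token rate."""
    return output_tokens_per_task * tasks * usd_per_million_tokens / 1_000_000

# All numbers below are hypothetical; they are not real prices or measurements.
PRICE = 10.0   # assumed USD per million output tokens
TASKS = 1_000  # assumed number of coding tasks per month

verbose = api_cost(4_000, TASKS, PRICE)  # a model that emits 4,000 tokens per task
concise = api_cost(3_000, TASKS, PRICE)  # a model that emits 3,000 tokens per task

savings = 1 - concise / verbose
print(f"verbose: ${verbose:.2f}, concise: ${concise:.2f}, saved: {savings:.0%}")
```

With per-token billing, the bill scales linearly with verbosity, so under these assumptions a model that says the same thing in 25% fewer tokens costs 25% less to run for the same work.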

During RL, we want our model to be able to call any tool in the Cursor Agent harness. These tools allow editing code, using semantic search, grepping strings, and running terminal commands. At our scale, teaching the model to effectively call these tools requires running hundreds of thousands of concurrent sandboxed coding environments in the cloud.

More details in the engineering blog post by Cursor.

You can also check out this video review/first impressions of Cursor 2.0 and Composer by Theo.

🤖 Neo Robot’s Wizard-of-Oz Problem 👀🎮

MKBHD explains it well: This robot is being sold as an AI-powered helper around the house, but almost all of the demo videos are human-teleoperated, and the few things it can do autonomously are not very impressive.

The privacy implications of having strangers walking around your house are real. People do hire housekeeping help, but there’s a personal connection, and face-to-face norms carry weight. 🗣️🗣️

How does it work when you don’t know who is in your house? When you can’t size them up by having a conversation with them, looking at their body language and micro-expressions (the stuff we’ve evolved to do)?

In fact, how does it work when it’s a different remote worker every day? A rotating cast of contractors?

If a robot is walking around your house, how quickly do you forget there’s a human on the other end of those cameras? 👀 You wouldn’t forget that a human is in the house, but a robot can mask the presence of the operator.

Even if you trust the company as an entity, there can be rogue employees exfiltrating video and audio data captured by the robots (if only by filming their screens with their phones).

🦾🤖 Robots 101: Why Humanoids? 🤔

If you’ve been following robotics, this will be obvious to you. But maybe you haven’t been paying too much attention until your Twitter timeline became flooded with videos and images of creepy humanoid robots.

One question I often see is: Why are they humanoid?

Wouldn’t it be less uncanny if they just didn’t look like humans?

Couldn’t we design more effective body architectures? Why have a head at all? Why not more than two arms? Etc.

Here’s the answer:

Because the human world is built to human specs. Stairs, door handles, ladders, shelves, tools, vehicles, and workspaces all assume two arms, two hands, human reach envelopes, and a certain eye-height. 🧍

Humanoid robots slot in without redesigning the environment or the workflow, similarly to how self-driving cars have to work within the existing road system. 🚙

A body plan with hands means instant compatibility with the trillion-dollar catalog of human tools. A head with “eyes” makes intent legible to people and eases teleoperation and safety.

For companies, the near-term job-to-be-done is labor substitution in existing facilities, not re-architecting factories or cities around strange new robot shapes, so matching human kinematics minimizes integration difficulty.

On the software side, perception and manipulation models trained on human motions (and human-made objects) transfer more directly to humanlike form factors.

Yes, wheels beat legs on flat floors, and specialized bots are more efficient at single tasks, which is why industrial robots are usually non-humanoid, but if you need one machine to traverse stairs, open doors, carry totes, and use a drill, a humanoid wins on versatility and time-to-deployment. 🦾

🗣️ Interview: Sally Greenwald on Women’s Sexual Health 👩🤰

Back in Edition #435, I highlighted a couple of interviews with experts on sexual health (men’s and women’s).

As a follow-up, here’s one more on women’s sexual health:

The interview contains a lot of information relevant to both men and women, so don’t feel like it’s not for you if you’re a dude.

🎨 🎭 The Arts & History 👩🎨 🎥

👨🎨 The Lost Art of Cel Animation 🎞️

My kids were watching KPop Demon Hunters for the Nth time when I got to thinking about traditional, hand-drawn animation ✍️

When looking at very old, early animation, you can see all kinds of flickering and uneven colors. But fairly rapidly, the process was perfected, and the very best Disney or Miyazaki films have flawless animation and colors.

But without computers, how did they get colors to be so even? How were they able to make what are basically photos of hand-drawn frames look so clean?

Nothing that I could color by hand would EVER look anywhere near that neat. When I look at Aladdin or The Lion King, it’s almost too good to be human!

So I started looking into it a bit, and the clever solutions that they found are fascinating. Here’s an overview, but if you want more I encourage you to check out the video above.

Before digital tools, Disney, Miyazaki’s Studio Ghibli, and others used a process called cel animation (“cel” is short for “celluloid”, the material the sheets were originally made of; later ones were acetate).

Here’s how they achieved those perfectly even, line-free colors:

🎨 1. Ink-and-Paint on Transparent Cels

Each frame began as a drawing on paper, which was then traced onto a transparent sheet of celluloid (a “cel”) using black ink for outlines. Once the outlines dried, the color was painted on the back side of the cel, not the front.

Painting on the back did two key things:

It prevented visible brushstrokes, since viewers saw the color through the smooth, glossy front surface.

It kept the black outline crisp, because the paint never interfered with the ink lines.

Starting in the 1960s, Disney adopted xerography to transfer pencil lines directly to cels, eliminating some hand-inking and producing consistent, hair-fine outlines.

🧪 2. Flat, Opaque Paints

Studios used special opaque paints developed specifically for cel animation. These paints were extremely pigmented and consistent, designed to dry without streaks or uneven gloss.

Disney even had a “Paint Lab” where chemists mixed custom colors for each production 👩🔬 (like the precise yellows in Bambi or the pastels in Sleeping Beauty). Each jar was labeled and documented in “color model sheets” to ensure perfect consistency across thousands of frames.

🧑🎨 3. Highly Skilled Painters (craftsmanship!)

Cel painters were professionals who specialized in creating flat, uniform color fields by hand. They used tiny, soft brushes and developed a steady, even technique.

Even small variations were caught during quality control under magnification 🔬 because when photographed in sequence, even one streak could flicker on screen.

📷 4. Multiplane Cameras and Lighting

When each cel was finished, it was layered over painted backgrounds and filmed with a multiplane camera under carefully controlled lighting.

The even illumination ensured that no reflections or shadows betrayed brushstrokes or dust. This gave that pristine, “perfectly colored” look that I associate with the best Disney and Ghibli films.

💻 (Later) Digital Ink and Paint

By the 1990s, studios began moving to digital ink-and-paint systems (like Disney’s CAPS or Toonz, which Ghibli adopted), which simulated the cel look on computers. The goal was still to match the clean, even colors of the classic hand-painted era.

Only later, with Pixar, did things start shifting to 3D computer animation. Toy Story (1995) was the first feature-length film made entirely with computer-generated images.

"Europe has become a museum". I have been thinking this a bit about London, but with two caveats:

1: London always changes (not always for the better). So maybe it's just having a little pause.

2: Once you get older, you don't have the opportunities to see all the different things that you did before, so maybe there is a load of new stuff going on, and I'm just not seeing it?

But certainly, those comparisons of the US vs European indices from 2000 to 2025 are jaw-dropping, i.e. how the US went to mostly tech & new stuff, while Europe is still the same banks, industrials etc. (+ ASML and Adyen as token successes!)

The frame about 'good bubbles vs bad bubbles' is crucial. The best bubbles are those that create durable infrastructure - they fund innovation that persists even after euphoria fades. This applies across domains: internet infrastructure, AI computing, and hard tech. The key insight is that societal progress often requires overshooting before markets find equilibrium. Excellent synthesis of complex topics as always, Liberty.