599: Anthropic & OpenAI Strategic Divergence, Nvidia's China Ban, Buffett’s Last Letter, Pareto Dating, Gigawatt Datacenters & Nuclear Plants, and Sarah Paine

"I needed role models, and could only find them in books"

What is the first business of one who practises philosophy?

To get rid of thinking that one knows; for it is impossible to get a man to begin to learn that which he thinks he knows.

—Epictetus, Discourses, 2.17.1

🤰👶 👦🏼🧍🚌🧍‍♂️🎓🧑‍🧑‍🧒‍🧒⏳ Lately, I’ve been thinking *a lot* about this:

“About 90% of the time a parent will ever spend with their child happens by the time the child is 18.”

Looking at how fast my kids are growing up, it’s hard not to feel like it’s all going so much faster than I expected. It’s the thing wise older people warn you about when you’re 22, but you don’t REALLY get it until it happens to you 🕰️

My 11-year-old is now as tall as my wife, and he’s about to do his secondary school entrance exams. My 7-year-old now leaves the house by himself to go play at his friend’s place down the street 🏡🏠🏡

It’s great to see them grow up and gain autonomy, but sometimes I wish I could travel back in time like Billy Pilgrim in Slaughterhouse-Five (minus the firebombing of Dresden and the aliens) and spend time with my kids when they’re still one or three, with tiny feet and big belly laughs 👣

It seems like just yesterday that they were tiny infants I was rocking to sleep while singing (badly) lullabies and Beatles songs. 🎶👶🌙🧸

Back to the “18 summers” stat: I looked up the data and used AI to run the math on a few scenarios.

Best I can tell, the typical ballpark is somewhere between ~75% and ~90% by age 18.

Of course, it varies from one family to the next. Some are very close while others aren’t (geographically AND relationships-wise). But even if you’re very close, it makes intuitive sense that there’s a step change when your children become teens, when the healthy process of figuring out their own identity and relying more on peers than parents takes place, and then there’s a time-spent cliff when they move out 📉
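A minimal sketch of the kind of back-of-envelope math that produces a range like this. It is not the actual calculation behind the numbers above: every hours-per-day figure is a hypothetical assumption picked for illustration, and the only thing that differs between the two scenarios is how much time parent and child spend together after the child turns 18.

```python
def share_by_age(phases, cutoff_age=18):
    """Fraction of total parent-child hours that fall before cutoff_age.

    phases: list of (start_age, end_age, hours_per_day) tuples, where ages
    are the child's age and hours_per_day is an assumed average.
    """
    total_hours = 0.0
    hours_before_cutoff = 0.0
    for start_age, end_age, hours_per_day in phases:
        total_hours += (end_age - start_age) * 365 * hours_per_day
        # Only the part of this phase that happens before the cutoff counts.
        years_before = max(0, min(end_age, cutoff_age) - start_age)
        hours_before_cutoff += years_before * 365 * hours_per_day
    return hours_before_cutoff / total_hours


# Hypothetical childhood, shared by both scenarios (illustrative numbers only).
childhood = [
    (0, 5, 8.0),    # early years: lots of hands-on time
    (5, 12, 5.0),   # school years
    (12, 18, 3.0),  # teens: the step change
]

# After the move-out "cliff": child aged 18 to 60, averaged over visits and calls.
close_family = childhood + [(18, 60, 0.75)]
distant_family = childhood + [(18, 60, 0.25)]

print(f"close:   {share_by_age(close_family):.0%}")    # close:   75%
print(f"distant: {share_by_age(distant_family):.0%}")  # distant: 90%
```

The result is most sensitive to the post-18 assumption: tripling the adult-years time together moves the share from about 90% down to about 75%.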

It’s not something I thought about until recently.

It always seemed like a TV show trope, getting emotional as you help your kid move into a dorm room, so far off in the future it felt more like fiction than real life.

But now it’s starting to feel real…

🥋🏋️‍♂️💪🧠🚲📘🎥🎬 Speaking of kids, great news!

There’s going to be a film based on Jocko Willink’s ‘Way of the Warrior Kid’ book.

As I told you before, these books have had a HUGE impact on my kids’ lives.

I read them out loud, and they really enjoyed them AND made multiple life changes based on the stories and lessons. It’s hard to ask more from a children’s book!

What we know about the film so far:

Chris Pratt will be Uncle Jake, and Linda Cardellini will be Marc’s mom (she was good in ‘Freaks & Geeks’ and later seasons of ‘Mad Men’)

Filming began in August 2024 and wrapped in October 2024, so it’s in late post-production right now.

It will be on Apple TV 🍎📺

Release date: December 25, 2025 🎄🎁

I hope they do the whole series. Books #2 and #3 are just as good as #1, if not better.


🏦 💰 Business & Investing 💳 💴

🤖🤖 Anthropic & OpenAI: Strategic Divergence and Projections (I know, I know…) 📈🚀🤔

Projections are bullshit, especially long-term ones in fast-changing industries with a lot of contingent events… made by companies that are seeking to raise billions.

But as long as we understand that, it’s still worth peeking at what they’re projecting. If they make decisions based on these forecasts, understanding the projections helps us understand their strategy.

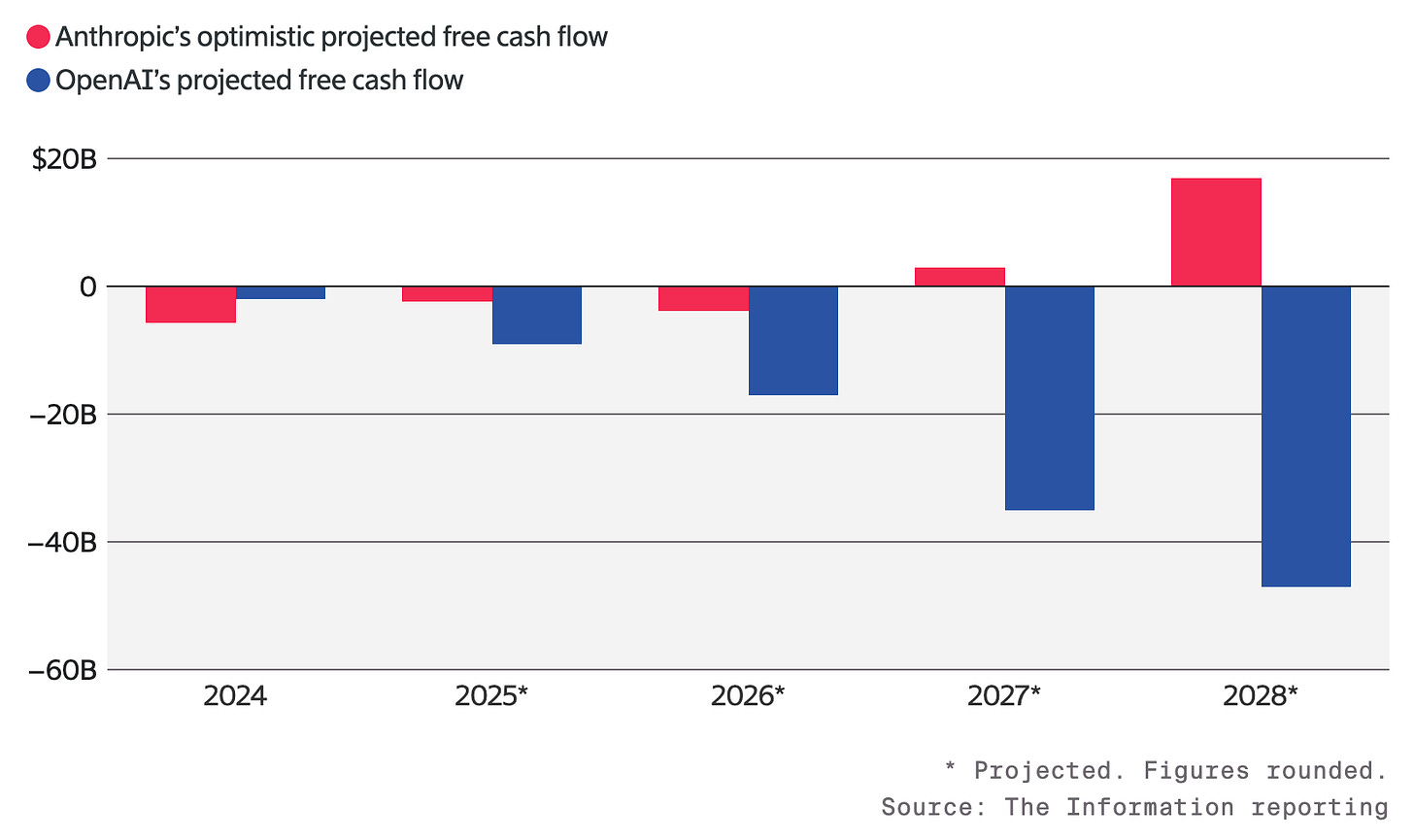

The first thing to note is how Anthropic and OpenAI are diverging on how much they expect to invest over the next few years.

I’ve written about this before: They have pretty different customer bases. OpenAI’s large free user base is a great opportunity/funnel but is also expensive to serve, while Anthropic’s smaller total user base has a much higher mix of paying customers, especially Cursor bros who are using millions of MONETIZED tokens on the API.

Anthropic’s latest known estimates for how much it spends on its free users are around $160m (take it with a grain of salt, we don’t really know). We don’t know how much OpenAI is spending on free, unmonetized users, but I expect it’s in the billions and growing fast.

As a more ‘consumer’ AI company, OpenAI is also spending a lot more on various product lines, like a browser, consumer hardware (the Jony Ive thing), robots, music generation, and Sora’s TikTok/Reels-like app.

Anthropic is much more narrowly focused on coding and agentic/tool-use workflows.

Is this because the two companies have diverged in how ‘AGI-pilled’ they are? 🤔

Is Anthropic focused on just what can generate the most revenue to get them to AGI (which they think will change the game), while OpenAI increasingly sees itself as more of a Big Tech consumer company with all kinds of product lines used by billions of people..?

💌 Buffett’s Last Letter 🥲

I’m sure you’ve seen Buffett’s Thanksgiving message, where he announced “going quiet”.

This feels like the end of an important era.

I credit Buffett and Munger with many of the best decisions in my life, even outside of anything related to finance. Their way of thinking was very influential to me during a formative time when I needed role models, and could only find them in books 📚

I truly wish they could live forever.

I hope my kids can find role models and teachers who are as solid. It’s one thing to talk about the long-term, about doing what you love, about marching to the beat of your own drum, etc, but words are just words. Most people who utter the “right words” either don’t live them out or bow out of the arena after three or four decades, not seven or eight.

In theory, all of the writings and recordings are still there for future generations to find. But I’m afraid that their influence will fade with time, as with all things, and I don’t see anyone of their stature and integrity on the scene.

That leaves me a little sad.

Safal Niveshak has a nice reflection on Buffett & Munger. I encourage you to read it.

🇺🇸🤖🚫🇨🇳 U.S. Blocks Nvidia’s China Sales: First, Second, and Third-Order Effects 🤔🔀

After what I assume was a lot of lobbying and cajoling behind the scenes, it looks like the White House has landed on NOT allowing the sale of Nvidia’s cut-down Blackwell GPUs to China.

1️⃣ First-order effects:

Financial hit to US chipmakers (mostly Nvidia, also AMD, and others) from losing access to one of the largest markets in the world. Broadcom’s ASIC business would no doubt also be stronger in China without the limits on performance and the entity list restricting who they can work with.

Impact on China’s AI industry: It should slow down the rate at which they can train new and larger models and increase their operating costs. Even if they have lots of power and can use a larger number of less efficient chips, if given the choice, they’d rather spend the same amount of power on much faster chips. Of course, they can still smuggle some chips and access some remotely in other countries, but not as many as if they could buy them directly.

More Nvidia supply for the rest of the world. TSMC and the complex supply chain can only make so many racks per week, so if nothing is made for China, it should mean more supply elsewhere.

2️⃣ Second-order effects:

Accelerated Chinese chip development: They were already pushing very hard on this, but scale matters. If Chinese Big Tech were spending billions on Nvidia chips, it would reduce scale for Huawei and SMIC and slow down progress on both the hardware AND software ecosystem fronts. Having more developers and AI researchers use the Chinese stack will help it mature faster and get people trained away from CUDA.

Bigger focus on algorithmic & systems efficiency in China: To be clear, all the big labs around the world invest a lot in this. We’re not hearing about most of it because it’s proprietary (this is why DeepSeek R1 made so many waves: it is open source, so we could see exactly what they did 👀).

But incentives rule the world, and if it’s easier to just buy more Nvidia GPUs than do esoteric optimizations, that’s what you’ll do. If China focuses more on algo efficiency, it could find itself in an enviable position if Huawei ever catches up with Nvidia AND the Chinese software stack is more optimized than the Western AI stack. That’s speculative, though.

Global supply chain fragmentation: Instead of one dominant ecosystem, we’re more likely to have two.

A US/Western-aligned stack (built around Nvidia/AMD/Broadcom/etc) and a China-aligned stack (built around Huawei/SMIC/domestic alternatives).

More shadow supply chains and grey markets. We’ve seen this ever since export controls went into effect. 🏴‍☠️

3️⃣ Third-order effects (more speculative):

Maybe Jensen is right, and banning sales to China leads to a short-term win but sets up a long-term failure by increasing China’s motivation to fully build its own stack, and giving it the scale to pull it off, rather than leaving it dependent on Western hardware and software.

The most effective controls are those on semi equipment (EUV machines from ASML, etc), but GPUs certainly are also an important piece of the puzzle 🧩

Slowing down Western innovation (?): If Nvidia loses scale from fewer sales, and loses some CUDA network effects from having fewer AI researchers using it, and the US gets fewer AI researchers from China wanting to emigrate because they are deeply embedded in the Chinese stack, it could slow down progress in the West.

In fact, it could slow down global progress because instead of having one big semi-integrated hardware/software ecosystem, you have two smaller, bifurcated ecosystems that don’t share as much and need to duplicate more efforts.

It’s interesting to think through. What have I missed? What do you think is likely to happen?

📲👩‍❤️‍💋‍👨 Follow-Up on the Pareto-Distribution Digital Dating Market 📊

This is a follow-up to the digital dating marketplace piece from Edition #598.

🧪🔬 Science & Technology 🧬 🔭

🗣️ Interview: Sam Altman on Where OpenAI is Going

Here are a few of my highlights:

🔌 On the power constraint:

Cowen: The stupidest question possible: Why don’t we just make more GPUs?

Altman: Because we need to make more electrons.

Cowen: What’s stopping that? What’s the ultimate binding constraint?

Altman: We’re working on it really hard.

Cowen: If you could have more of one thing to have more compute, what would the one thing be?

Altman: Electrons.

Cowen: Electrons. Just energy. What’s the most likely short-term solution for that?

Altman: Short-term, natural gas. ... Long-term, it will be dominated, I believe, by fusion and by solar. I don’t know what ratio, but I would say those are the two winners.

👨‍🔬 On AI that can do scientific research:

Altman: If GPT-3 was the first moment where you saw a glimmer of something that felt like the spiritual Turing test getting passed, GPT-5 is the first moment where you see a glimmer of AI doing new science.

It’s very tiny things, but here and there someone’s posting like, ‘Oh, it figured this thing out,’ or ‘Oh, it came up with this new idea,’ or ‘Oh, it was a useful collaborator on this paper.’

There is a chance that GPT-6 will be a GPT-3 to 4-like leap that happened for Turing test-like stuff for science, where 5 has these tiny glimmers and 6 can really do it.

💻 Creating a new kind of computing device, built for AI:

Altman: We are going to try to make you a new kind of computer with a completely new kind of interface that is meant for AI, which I think wants something completely different than the computing paradigm we’ve been using for the last 50 years that we’re currently stuck in.

AI is a crazy change to the possibility space. A lot of the basic assumptions of how you use a computer and the fact that you should even be having an operating system or opening a window, or sending a query at all, are now called into question.

I realize that the track record of people saying they’re going to invent a new kind of computer is very bad. If there’s one person that you should bet on to do it, I think Jony Ive is a credible, maybe the best bet you could take.

Odds of success on the new computing platform are low, given the base rate for everyone who has attempted it, but I’m very intrigued. If anyone can pull it off, it’s probably OpenAI. 🪄🎩🐇

They have good product instincts and are more of a consumer company than any of the other frontier AI labs, except maybe Google. But Google’s hardware product instincts can be very hit & miss, and they’re better at iterating on form factors created by others than at coming up with successful new ones themselves.

🇺🇸🏗️👷🧰⚛️ The Workers Who Could Build Nuclear Plants are Building AI Datacenters 🤔

If you don’t work out for a while, muscles atrophy 🏋️‍♂️

Not building nuclear capacity for a few decades means the specialized workforce has shrunk, and the more general construction workforce is currently very busy building and wiring up data centers and natural-gas power plants.

That poses a challenge if the U.S. is to meet its goal of having 10 new large reactors under construction by 2030:

The U.S. civilian nuclear industry directly employs about 70,000 people, supporting another 180,000–200,000 indirectly. [...]

At Vogtle, the last big project, construction peaked above 9,000 craft workers—for just two reactors. The same electricians, pipefitters, and welders who gained that experience are now wiring data centers and EV factories. Electricians alone are projected to outpace nearly every other trade in demand growth through the decade. In other words: we can finance nuclear, permit nuclear, even cheerlead nuclear—but we don’t currently have the people to build nuclear. (Source)

This bottleneck needs to be addressed, likely through both stronger incentives for training/apprenticeships + targeted skilled immigration.

🏗️ ⏱️ How Fast Can Gigawatt-Scale AI Datacenters Be Built? 🔌🤖

Speaking of building large-scale complex projects, now that everyone is talking about gigawatt-scale datacenters, how long does it take to build such a thing?

Epoch AI looked into it:

AI data centers require massive amounts of power and permitting, yet timelines are short. Across the data center builds we’ve tracked, the time from starting construction to reaching 1 GW of facility power capacity typically ranges from 1 to 4 years.

Based on planned timelines, we expect five AI datacenters at a scale of 1GW or more to come online in 2026. Each is operated by a different hyperscaler.

Looking at the graph, xAI’s Colossus 2 stands out:

xAI’s Colossus 2 in Memphis projects the fastest build-out planned, targeting just 12 months to reach gigawatt scale. How? By reusing existing industrial shells and generating its own power early using gas turbines and batteries, before full grid connection.

For context, 1 gigawatt is about enough to power 1 million homes in the U.S.

It’s hard to even picture what a million homes looks like, but fair to say, it’s A LOT. 🏠🏠🏠🏠🏠🏠🏠🏠🏠🏠🏠🏠🏠🏠🏠🏠🏠
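A rough sanity check on the homes figure, as a sketch rather than a precise calculation. It assumes an average U.S. household uses roughly 10,500 kWh of electricity per year (an approximate figure, treat it as an assumption) and that the full gigawatt is delivered around the clock.

```python
HOURS_PER_YEAR = 8760  # 365 days * 24 hours


def homes_powered(capacity_gw, annual_kwh_per_home=10_500):
    """Estimate how many average homes a given capacity could supply.

    annual_kwh_per_home is an assumed average household consumption,
    not a measured value for any specific region.
    """
    # Average continuous draw of one home, in kW.
    avg_kw_per_home = annual_kwh_per_home / HOURS_PER_YEAR
    # 1 GW = 1,000,000 kW.
    return capacity_gw * 1_000_000 / avg_kw_per_home


print(f"{homes_powered(1):,.0f} homes")
```

Under those assumptions the estimate lands somewhat under a million, in the same ballpark as the figure above. A lower per-home consumption number pushes it higher.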

🍎 Apple to White-Label Gemini for Siri 🗣️📱

This seems like a win-win for Apple and Google, at least in the near-to-mid-term:

Apple Inc. is planning to pay about $1 billion a year for an ultrapowerful 1.2 trillion parameter artificial intelligence model developed by Google [...]

Apple is paying Google to create a custom Gemini-based model that can run on its private cloud servers and help power Siri. Apple held a bake-off this year between Anthropic and Google, ultimately determining that the former offered a better model but that Google made more sense financially (partly due to the tech giants’ preexisting search relationship)

That last part is interesting: it shows that having a “better” model doesn’t automatically mean you will win.

Google has the scale to serve Apple’s billion users, plus the compute, infra, and enterprise muscle via GCP.

Anthropic isn’t set up to do this, and would likely need to be paid a lot more than $1bn and require more time to set up the inference capacity… which they probably don’t want to take away from training new models and serving high-paying API customers.

So I can see how it wasn’t as good a fit as Google.

Apple isn’t abandoning its own efforts:

Apple still doesn’t want to use Gemini as a long-term solution. Despite the company bleeding AI talent — including the head of its models team — management intends to keep developing new AI technology and hopes to eventually replace Gemini with an in-house solution [...]

Apple executives believe it can reach a similar quality level as the custom Gemini offering. But Google continues to enhance Gemini, and catching up won’t be easy.

But they’re also not doing the kind of investment they would need to do to be competitive, so it’s probably futile… unless AI commoditizes fully and there’s less difference in everyday life between frontier models and the next tier of models.

Maybe Siri could run on the equivalent of a souped-up open source model and be just fine for pretty much everything that a user is likely to ask of it, and the frontier models will be doing advanced physics and controlling fleets of robots elsewhere 🤔

🎨 🎭 The Arts & History 👩‍🎨 🎥

🇷🇺🔪🇨🇳 Sarah Paine: How Russia Derailed China

I love listening to Sarah Paine explain history and grand strategy. Dwarkesh Patel often jokes that his podcast is basically the Sarah Paine show because her episodes get so much more attention than even his AI episodes, which he’s best known for.

She doesn’t say it in this one, but her unofficial catchphrase (to me) is “and if this happens, you’re in deep, dark trouble”.

I even considered getting some print-on-demand t-shirt place to make myself this:

Wouldn’t it be cool? 😎

Here are a few of my highlights from this lecture:

🗺️📏🐻 The staggering scale of Russian land grabs

If you add up all the territory that the Russians took from the Chinese sphere of influence from the 1858 Treaty of Aigun and 1860 Treaty of Peking, fast-forward to detaching Outer Mongolia from the Chinese sphere of influence. Here’s what it really is. It’s greater than all US territory east of the Mississippi. This is not your normal land grab. So talk about derailing somebody, that would do it.

🐻📜🛡️⚔️ The ruthless logic of continental empires

Both empires followed the rules for continental empire. If you want to survive in a continental world — that’s what both of them historically have been — you don’t want to have two-front wars because you have multiple neighbors. Any one of them can come in at any time. If they gang up on you, that’s trouble. So you take on one at a time. Also, you don’t want any great powers on the borders.

This is the fundamental problem with their relationship. Today’s friend can be tomorrow’s foe. That is truly problematic. So what do you do to solve that problem? Well, you take on your neighbors sequentially. You set them up to fail. You destabilize the rising, ingest the failing, and you set up buffer zones in between. You wait for the opportune moment to pounce and absorb. That is Vladimir Putin’s game.

But if you play this game, you’re surrounding yourself with failing states, because you’re either busy destabilizing them or ingesting them. So the curious might ask, are Russia and China unlucky with all the very dysfunctional places that surround them, or are they complicit?

🔴⭐️🕊️📣 The fiction that the USSR is anti-imperialist

Remember communism? What are the really bad people in the communist story of how we all live? It’s the imperialists, right? Imperialism is really bad. Well, I’ve just shown you. Russians were the greatest practitioners of imperialism of the 20th century. They’re doing this right as Western imperialists are giving up their colonies.

Yet there’s this big lie, the big fiction.

There’s so much more good stuff. I encourage you to listen to the whole thing!
