601: Gemini 3.0!, AI Love Triangle of Anthropic, Microsoft & Nvidia, Satya Nadella Interview, Google TPU v7, Malaria, WWI, and Woodstock '99

"I think that’s a worthy goal"

There is nothing more precious than trust.

Low trust environments are full of friction and inefficiency. Every interaction is a fierce negotiation.

Trust makes a marriage warm and a nation wealthy.

—Stoic Emperor

🛀💭🥐😋 There’s an expression in French:

Bon vivant

In common usage, it describes someone sociable and convivial, with Epicurean tastes, one who appreciates the good things in life, maybe even to excess. 🍷🍇🍪🥩🍨

There’s another French expression:

Savoir-vivre

It refers to having good manners, being polite, courteous, respectful, and possessing social grace.

But I like the more literal translation better:

Bon vivant = Good at living

Savoir-vivre = Knowing how to live

In fact, I think that’s a worthy goal: To be better at living. 🏆

You’re probably doing something right if people who know you think: They’re pretty good at life!

If that’s the goal, how do we reverse-engineer the path?

What specific choices actually get us there? What specific inputs produce that output? What are the essential ingredients of being good at living?

🔎📫💚 🥃 Exploration-as-a-service: your next favorite thing is out there, you just haven’t found it yet 👇

🏦 💰 Business & Investing 💳 💴

🔍🤖3️⃣ Gemini 3.0: Google Strikes Back 💥🥊

It’s probably not a coincidence that OpenAI released GPT-5.1 and xAI released Grok-4.1 a few days ago. Everyone knew that Gemini 3.0 was imminent.

Before launch, Google’s CEO even posted a screenshot of the Polymarket odds for a launch yesterday, while another Googler reminded his colleagues about their ethics training on insider trading 😬

Gemini 3.0 is out. While I’ll need to spend more time with it to give you my full review, benchmarks and early user reports already give us a good idea.

Sundar Pichai gave a little context on the success of Gemini so far:

The Gemini app surpasses 650 million users per month, more than 70% of our Cloud customers use our AI, 13 million developers have built with our generative models, and that is just a snippet of the impact we’re seeing.

Getting closer to ChatGPT’s 800 million users, though ChatGPT likely still wins on engagement depth.

A little while ago, I predicted that Google making AI Mode more prominent in the default Google Search UX would be an important moment in the evolution of AI, reaching mainstream users who haven’t adopted AI yet.

Since then, they’ve taken steps to make AI Mode easier to find and trigger, adding a more prominent button and linking to it in the nav from more places. But it isn’t yet replacing the default legacy search.

However, one thing to note is that they made Gemini 3 available in AI Mode in Search from day one of release, the first time they have done this. Clearly, they’re slowly giving it more prominence and getting it ready to someday take a bigger role.

This is the first time we are shipping Gemini in Search on day one. Gemini 3 is also coming today to the Gemini app, to developers in AI Studio and Vertex AI, and in our new agentic development platform, Google Antigravity.

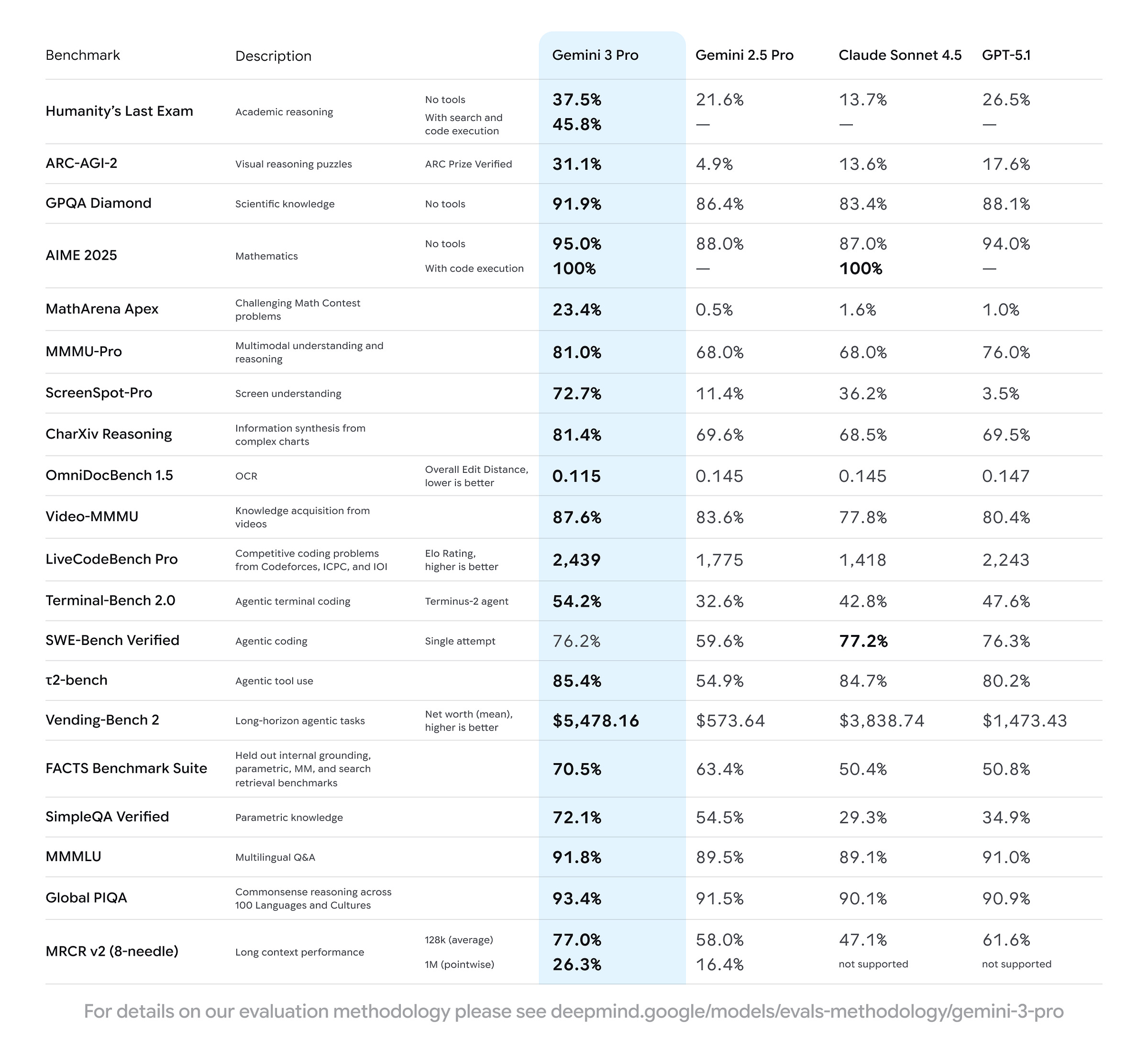

So how does it stack up against other models on the various reference benchmarks?

As you can see above, it leads on everything except SWE-Bench Verified, where it is 1 percentage point behind Sonnet 4.5. Basically, a clean sweep against Sonnet 4.5 and GPT-5.1.

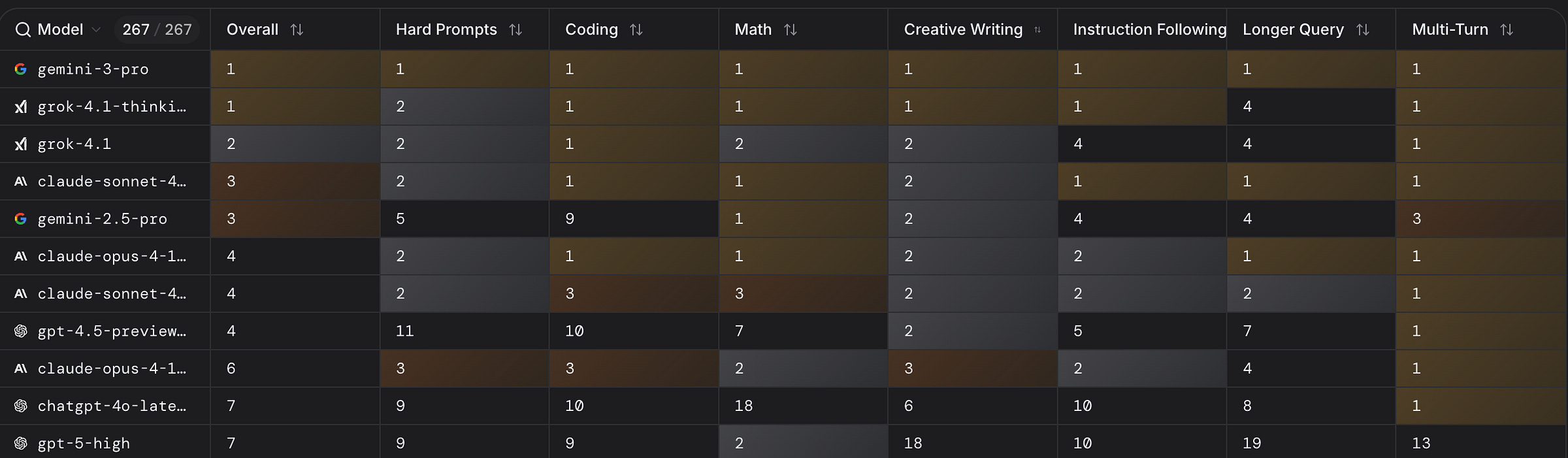

Grok 4.1 does well on the EQ-Bench and on the LLM Arena, where it briefly ranked #1 until Gemini 3.0 came out. Since xAI hasn’t released scores for the other benchmarks, direct comparisons are harder, but I suspect Gemini 3.0 wins.

I’m particularly impressed by Gemini 3’s results in some of the most difficult benchmarks, where other models struggle to score even in the single digits. On MathArena Apex, the previous leader was Sonnet 4.5 with 1.6%. The new Gemini crushes it with 23.4% right out of the gate!

The improvements on Humanity’s Last Exam and the ARC-AGI-2 suite are also impressive, jumping 10+ percentage points over the previous leader.

Gemini 3 also seems particularly good at visual reasoning and understanding what is going on on a computer screen. Sonnet 4.5 dominated ScreenSpot-Pro with 36.2%, far ahead of GPT-5.1 and Gemini 2.5 Pro. But Gemini 3 scores an impressive 72.7%, about double!
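Benchmark gaps like the ones above can be read two ways: as a difference in percentage points, or as a multiple of the previous score. Here is a quick sketch in Python that does both for the two results quoted in the text. The helper names are mine and purely illustrative; the scores are the ones mentioned above.

```python
def point_gap(new_score: float, old_score: float) -> float:
    """Absolute gap between two benchmark scores, in percentage points."""
    return new_score - old_score


def multiple(new_score: float, old_score: float) -> float:
    """How many times larger the new score is than the old one."""
    return new_score / old_score


# (Gemini 3 score, previous leader's score), both in percent, as quoted above.
scores = {
    "MathArena Apex": (23.4, 1.6),
    "ScreenSpot-Pro": (72.7, 36.2),
}

for name, (new, old) in scores.items():
    print(f"{name}: +{point_gap(new, old):.1f} points, {multiple(new, old):.1f}x")
```

The two views can tell very different stories: a jump from a tiny base looks enormous as a multiple, while the point gap shows how much of the benchmark is actually being solved.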

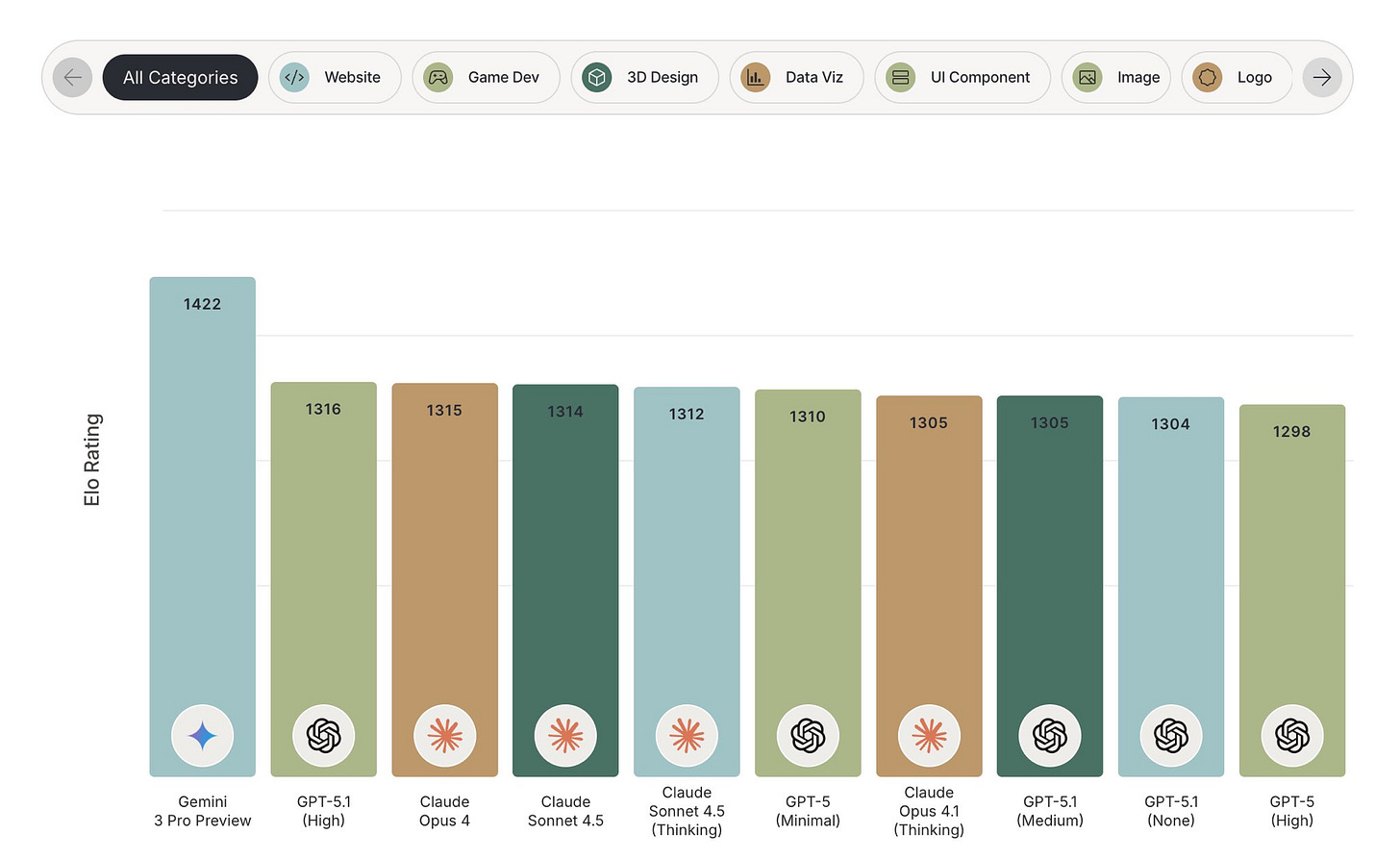

Gemini 3’s dominance when it comes to real-user preference for the things it builds gave it a huge lead in Design Arena, marking the largest delta vs other models since Design Arena launched:

As I always say, benchmarks only tell us so much, so take them with a grain of salt 🧂 Andrej Karpathy explains the incentives to cheat:

First I usually urge caution with public benchmarks because imo they can be quite possible to game. It comes down to discipline and self-restraint of the team (who is meanwhile strongly incentivized otherwise) to not overfit test sets via elaborate gymnastics over test-set adjacent data in the document embedding space. Realistically, because everyone else is doing it, the pressure to do so is high.

We’ll have to wait for real-world usage to be sure just how good the model actually is, and if its “flavor” is subjectively pleasant for our own use cases and preferences.

But so far, I’m very impressed! You can see early examples of things that people have vibe-coded with it, and some of them are wild.

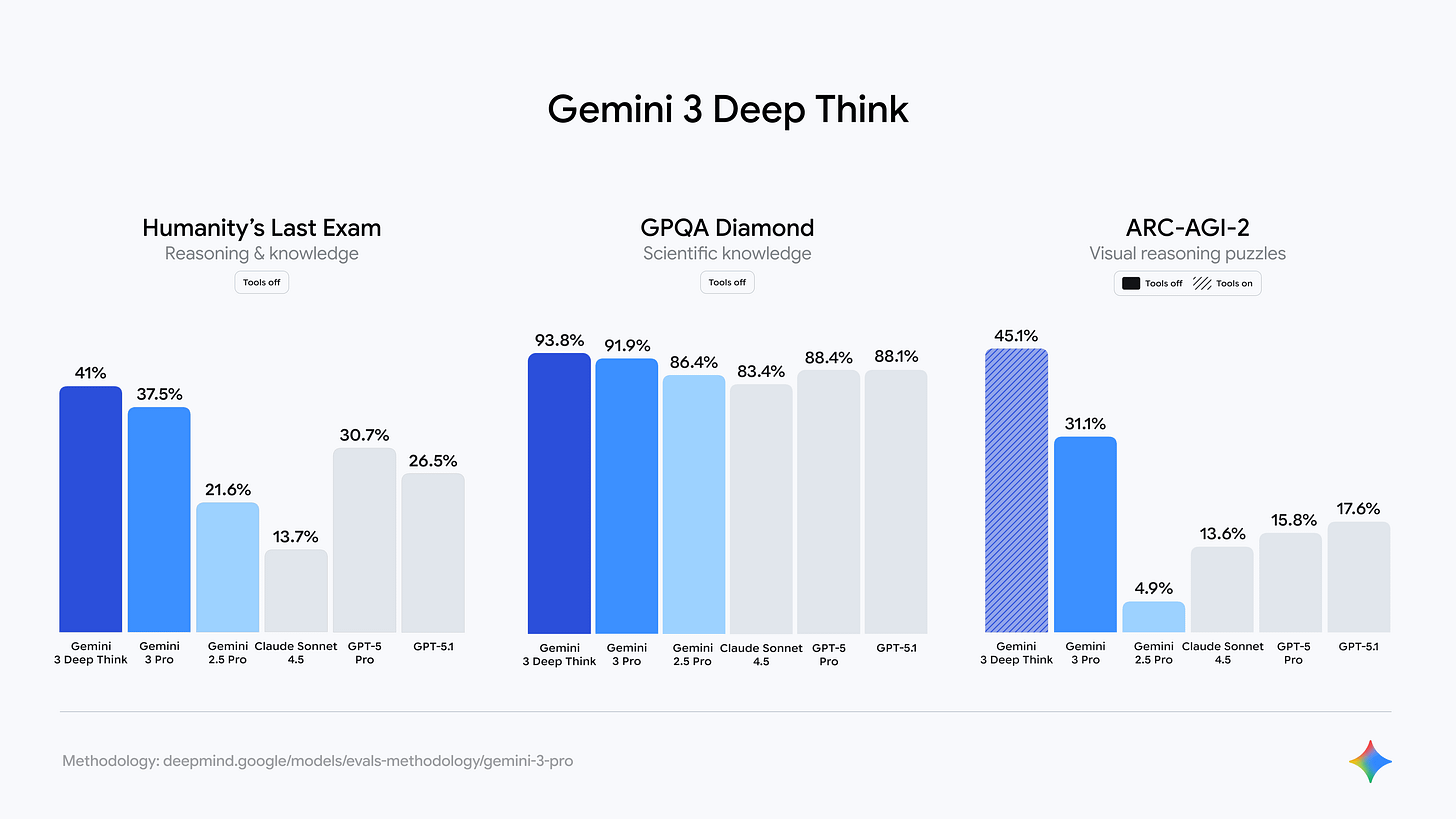

‘Deep Think’ mode also seems very powerful. It’s more like GPT-5 Pro than Deep Research, despite the similar name. It was first released as an experimental model for Gemini 2.5 Pro, and is now also part of Gemini 3:

In testing, Gemini 3 Deep Think outperforms Gemini 3 Pro’s already impressive performance on Humanity’s Last Exam (41.0% without the use of tools) and GPQA Diamond (93.8%). It also achieves an unprecedented 45.1% on ARC-AGI-2 (with code execution, ARC Prize Verified), demonstrating its ability to solve novel challenges.

Gemini 3 has a 1 million token context window, which is still category-leading. Only OpenAI matched it with GPT-4.1, but GPT-5 went back to 400k tokens. Anthropic offers 500k tokens to enterprise users on Sonnet 4.5.

On pricing, Google is not doing what I expected:

So far, Gemini has been priced very aggressively compared to Claude and ChatGPT. Gemini 3 increases prices vs Gemini 2.5 Pro: Input tokens are 60% more expensive and output 20% more, which makes it about as expensive as Sonnet 4.5 and more expensive than GPT-5.1.

This may just be temporary while they further optimize the new, larger model. Or maybe they are so confident in the quality of it that they think it will be de-commoditized 🤔

Another notable launch by Google is Antigravity, their AI IDE/agentic development platform, which competes with Cursor. They’re pretty aggressive about it, offering a bunch of free inference for now.

If you’re curious about it, there’s an in-depth review here:

Sounds very promising, though still early. With a few rounds of polish, this could become a fierce competitor.

▲🤖🤝☁️ The AI Love Triangle: Anthropic, Microsoft & Nvidia

Because it’s 2025 and EVERYBODY needs to be partnered with EVERYBODY ELSE (🕸️), a new deal has been announced between the last three players that weren’t already dating each other:

Anthropic has committed to purchase $30 billion of Azure compute capacity and to contract additional compute capacity up to one gigawatt.

Anthropic and Nvidia will collaborate on design and engineering, with the goal of optimizing Anthropic models for the best possible performance, efficiency, and TCO, and optimizing future Nvidia architectures for Anthropic workloads.

Anthropic’s compute commitment will initially be up to one gigawatt of compute capacity with Nvidia Grace Blackwell and Vera Rubin systems.

Customers of Microsoft Foundry will be able to access Anthropic’s frontier Claude models including Claude Sonnet 4.5, Claude Opus 4.1, and Claude Haiku 4.5. This partnership will make Claude the only frontier model available on all three of the world’s most prominent cloud services.

Microsoft has also committed to continuing access for Claude across Microsoft’s Copilot family, including GitHub Copilot, Microsoft 365 Copilot, and Copilot Studio.

As part of the partnership, Nvidia and Microsoft are committing to invest up to $10 billion and up to $5 billion respectively in Anthropic.

What does this all mean?

First, the investment values Anthropic at around $350bn, almost double what it was in September when they raised $13bn at a $183bn valuation. It almost makes OpenAI’s $500bn valuation from October feel low 😅

It makes you wonder what valuation VCs would put on Google’s Gemini product + DeepMind AI lab if it were somehow independent 🤔 (I understand that it’s very hard to imagine since independence would remove access to Google’s massive distribution, but it’s still interesting to think about)

Back to the deal: Nvidia is bringing Anthropic closer, reducing the odds that they ever go all-in on some non-Nvidia chips (they already have big commitments for TPUs and Trainium chips).

Microsoft is showing that they are becoming less dependent on OpenAI over time and want to be a platform on top of which all kinds of models and products can be built and accessed.

And Anthropic is getting more funding, which they will immediately turn around and give back to Azure and Nvidia for compute. But if they want to keep up with OpenAI and Google, this is what they need to do.

It’ll be an interesting dynamic to watch unfold over time, though, as companies like Nvidia and Microsoft have large investments in rivals that fiercely compete with each other (OpenAI vs Anthropic).

Google is in a similar boat, investing in Anthropic and hosting them on GCP… all while fiercely competing with them via Gemini ¯\_(ツ)_/¯

🗣️🗣️ Interview: What the Dot-Com Era Teaches Us About the AI Boom & The Myth of the Open Web 💾

Speaking of Microsoft, Satya Nadella seems to be thriving in this environment.

Microsoft is making moves to reposition itself, aiming to benefit from a wide range of outcomes. While success isn’t guaranteed, his vision is coherent and plays to some of Microsoft’s historical strengths as a platform.

He’s been doing the podcast rounds and had another interesting conversation, this time with John Collison:

Here are a few of my highlights.

First, a great riff on open web vs closed web, how the narrative that most people talk about isn’t fully accurate, and what we can learn from that for the AI future:

Nadella: So from ‘93 to ‘95, there was that two-year period where it was unclear whether this was going to be the protocol and the full stack. And the stack emerged, and by ‘95 it was clear and then we pivoted.

John Collison: So just at that time, it wasn’t actually clear that the open internet would win.

Nadella: The interesting thing that I’ve always watched because I think we can parlay this into AI. One is to get the paradigm right… Even if you get the paradigm right, that you may not get what is the killer app or even the business model.

That’s always been the case, with the internet, who would’ve thought that for the open web, an organizing layer would be one network effect search engine, right? Because the organizing layer of the web, I always say there’s no such thing as the open web. There’s the Google web, and just because they dominated it. [...]

So AOL and MSN kind of lost out, let’s call it, to the open web. Except they were replaced by new forms of AOL and MSN. They’re called search engines. They’re called app stores. The mobile web, in fact, is fascinating. [He could have mentioned the Meta properties here. -Lib]

John Collison: The open web was a moment in history.

IDEs won’t just be for coders anymore; they are coming to other jobs:

Nadella: So the IDE… one of the most exciting things is new classes of highly refined IDEs that have even sort of a telemetry loop with the intelligence layer, but also they kind of act more like heads-up displays. I have thousands of agents going off. How am I going to make sense of the micro steering of thousands of agents? And that is what IDE slash inboxes and messaging tools will be, which is I’m not messaging or dealing with triage the way I deal with it today, but it’s going to be different.

John Collison: Okay, interesting. So you think right now programmers spend all their time in IDE, but they’re one of the few professions that does that. And your vision is the accountant IDE, the lawyer IDE, and—

Nadella: What is the metaphor of how I will work with agents? So it’s kind of like massive macro delegation. So there’s lots of agents I go give a bunch of instructions to and they go off and work sometimes for hours, days, let’s say, as the models get better. But they are checking in and so it’s macro delegation, micro steering. So if you take that, how does one do micro steering with context? It can’t be in the next notification hell, which is it sort of notifies me, it has five words, [and] I don’t know exactly what the real context is, or what have you.

[It] has to be multi app-like. So that’s where I feel like all software finally [ends up when] it grows up, it looks like an inbox and a messaging tool and a canvas with a blinking screen, except this time around a lot of work happened.

On Bill Gates’ vision for information management:

Nadella: Yeah, and for the longest time, Bill was always obsessed […] I remember him distinctly saying this in the nineties…: ‘There’s only one category in software. It’s called information management. You got to schematize people, places and things and that’s it. You don’t have to do anything more, because all software…’

[…] The problem is people are messy, and even if data is structured, it sort of is not truly available in one index or one SQL query that I can run against all of that.

In fact, one of the longest time, we used to always obsess about, ‘Oh, how complex do the relationships have to be or the data model needs to capture the essence of an enterprise?’ And it turns out it’s lots of parameters in a neural network with a lot of compute power.

They also have the bubble talk, and Nadella makes the distinction that in the dot-com era, capacity was built ahead of demand, and a lot of fiber remained dark for many years, while today, they are supply-constrained (on power, data centers, etc.) and can’t keep up with demand.

Of course, that doesn’t mean that they can’t over-invest and over-shoot demand, or that the ROIC will be high on every investment just because the assets will be utilized, but it’s a pretty different dynamic… at least for now (things may change if a lot of debt gets involved in funding all this capex).

I also like how fair-minded Nadella is with the competition. He will often bring up, unprompted, how others are doing a good job at this or that. Few CEOs do this.

🧳🌐 Before World War I, You Could Cross Borders Without Documentation

Jim O’Shaughnessy (💚 🥃🎩) posted this quote from Peter Drucker’s ‘Adventures of a Bystander’:

“it is hard today to realize that before World War I nobody needed or had a passport, an identity card, a work permit, a driver’s license, and often enough not even a birth certificate.”

It really was a different world.

It’s so intriguing, I had to look it up:

📜🖋️ The Pre-WWI world of minimal documentation

Before 1914, the Western world operated with remarkably little bureaucratic documentation:

Passports: Largely obsolete in Europe and the Americas. Most countries had abolished passport requirements in the mid-19th century, allowing people to cross borders freely. The concept of ‘illegal immigration’ barely existed.

Identity Cards: Non-existent in most countries. There was no systematic way for states to track or identify their citizens in daily life.

Work Permits: Unnecessary. Labor mobility was largely unrestricted, and millions migrated for work without government permission.

Driver’s Licenses: Not required in most places until the 1900s-1920s, as automobiles were still new.

Birth Certificates: Often informal or non-existent, especially in rural areas. Many people didn’t have official proof of birth.

🪖✋🗺️ Why it changed after WWI

The Great War fundamentally transformed the relationship between individuals and states:

Security Concerns: Governments needed to control movement, identify spies, and track enemy aliens.

Military Conscription: States needed comprehensive records to draft soldiers.

Economic Controls: Rationing and war economies required tracking populations.

Refugee Crisis: Mass displacement created a need for documentation systems.

👮‍♂️🛂 The temporary situation became permanent

What began as “temporary wartime measures” became permanent:

Passport systems were reinstated and never removed

National ID systems expanded across Europe

Bureaucratic surveillance became normalized

The modern administrative state was born

What a different world that was! And this is the reason why history buffs are obsessed with studying WWI and WWII (in many ways, they’re the same event, just part 1 and part 2) → We’re living in the world that was created by this global conflict.

🧪🔬 Science & Technology 🧬 🔭

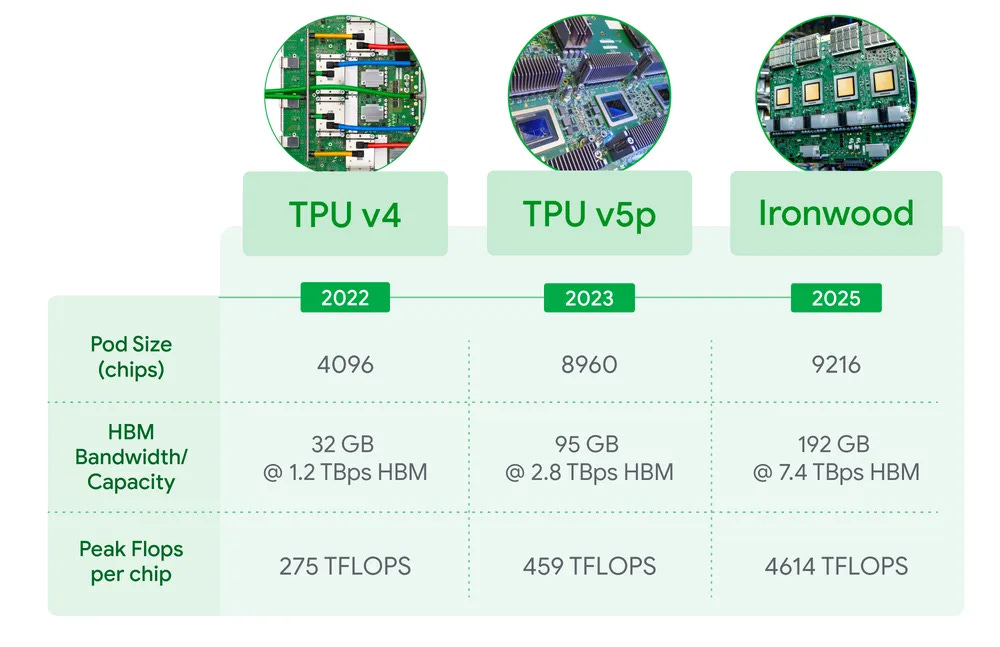

🐜 Google’s New Silicon Monster: The Ironwood TPU (v.7) 🏎️

It’s Google Day here on the steamboat, so let’s have a closer look at the new TPU:

With Ironwood, we can scale up to 9,216 chips in a superpod linked with breakthrough Inter-Chip Interconnect (ICI) networking at 9.6 Tb/s.

This massive connectivity allows thousands of chips to quickly communicate with each other and access a staggering 1.77 Petabytes of shared High Bandwidth Memory (HBM), overcoming data bottlenecks for even the most demanding models.

Each chip has a peak performance of 4,614 TFLOPS at FP8. A Superpod with 9,216 chips should produce about 42.5 Exaflops (🤯). The manufacturing process is unconfirmed, but I’d guess TSMC 5nm or 4nm.

HBM bandwidth is 7.37 TB/s per chip, 4.5x that of the sixth generation of TPU. Google also claims that performance/watt is 2x compared to TPU v6 (29.3 TFLOPS/watt vs 14.6).
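For anyone who wants to sanity-check the superpod math, here is a back-of-the-envelope sketch in Python. The inputs are the figures quoted above. The function names and the decimal unit conversions (1 exaflop = 1,000,000 teraflops, 1 PB = 1,000,000 GB) are my own assumptions, not anything from Google.

```python
# Figures quoted in the text above.
CHIPS_PER_SUPERPOD = 9_216
PEAK_TFLOPS_PER_CHIP = 4_614   # FP8
SHARED_HBM_PB = 1.77           # across the whole superpod
TFLOPS_PER_WATT_V7 = 29.3
TFLOPS_PER_WATT_V6 = 14.6


def superpod_exaflops(chips: int, tflops_per_chip: float) -> float:
    """Aggregate peak compute, assuming 1 EFLOPS = 1,000,000 TFLOPS."""
    return chips * tflops_per_chip / 1_000_000


def hbm_per_chip_gb(total_pb: float, chips: int) -> float:
    """Implied HBM per chip, assuming decimal units (1 PB = 1,000,000 GB)."""
    return total_pb * 1_000_000 / chips


def perf_per_watt_gain(new: float, old: float) -> float:
    """Ratio of new to old performance per watt."""
    return new / old


print(f"Superpod peak: {superpod_exaflops(CHIPS_PER_SUPERPOD, PEAK_TFLOPS_PER_CHIP):.1f} EFLOPS")
print(f"HBM per chip:  {hbm_per_chip_gb(SHARED_HBM_PB, CHIPS_PER_SUPERPOD):.0f} GB")
print(f"Perf/watt:     {perf_per_watt_gain(TFLOPS_PER_WATT_V7, TFLOPS_PER_WATT_V6):.2f}x vs v6")
```

The numbers hang together: the per-chip peak times the chip count lands on the 42.5 exaflops figure, and the perf/watt ratio comes out at almost exactly 2x. The per-chip HBM (roughly 192 GB) isn’t stated above; it’s just what falls out of dividing the shared pool by the chip count.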

🦟 A New Weapon Against Malaria: ‘97.4% PCR-corrected cure rate’ 💊

Great news in the fight against one of the diseases that causes the most death and suffering on Earth:

Novartis today announced positive results from KALUMA, a Phase III study for new malaria treatment KLU156 (ganaplacide/lumefantrine, or GanLum). The novel non-artemisinin antimalarial, which was developed with Medicines for Malaria Venture (MMV), met the study’s primary endpoint of non-inferiority to the current standard of care. The treatment achieved a 97.4% PCR-corrected cure rate using an estimand framework, compared to 94.0% with standard of care. This equates to cure rates of 99.2% and 96.7% respectively based on conventional per protocol analysis.

GanLum combines two compounds to attack the malaria parasite: ganaplacide, a novel compound with an entirely new mechanism of action, and a new once-daily formulation of existing antimalarial lumefantrine, a longer-acting treatment.

The most exciting part is that it targets drug-resistant parasites:

“GanLum could represent the biggest advance in malaria treatment for decades, with high efficacy against multiple forms of the parasite as well as the ability to kill mutant strains that are showing signs of resistance to current medicines,” said Dr Abdoulaye Djimdé, Professor of Parasitology and Mycology at the University of Science, Techniques and Technologies of Bamako, Mali. “Drug resistance is a growing threat to Africa, so new treatment options can’t come a moment too soon.” [...]

“Drug-resistant parasites threaten the efficacy of medicines that have helped to control malaria for decades,” said Shreeram Aradhye, M.D., President, Development and Chief Medical Officer, Novartis. “Together with our partners, we’ve gone further to develop a new class of antimalarial with an entirely new mechanism of action, which has the potential to both treat the disease and block transmission. We look forward to working with health authorities to bring this innovation to patients as soon as possible, helping close a critical gap in malaria care for those who need it most.”

Of course, efficacy is only half the battle; distribution is the other. Making sure that those who need access actually get it is crucial, even for existing treatments.

But in the evolutionary arms race against the parasite, this is a victory for our side.

📋🤖💬 Forget Benchmarks, Give Your AI a Job Interview 👔

I love this idea by Ethan Mollick. It’s closer to how I evaluate models, using them for a while on my personal use case to get a gestalt of their flavor and usefulness for my own needs.

It doesn’t matter if a model ranks 4% higher than another one in some benchmark if, in everyday use, it feels worse to me.

Benchmarks are getting saturated anyway, and it’s an open question how much deliberate or accidental contamination happens, when models train on benchmark questions and answers.

If benchmarks can fail us, sometimes “vibes” can succeed. If you work with enough AI models, you can start to see the difference between them in ways that are hard to describe, but are easily recognizable. As a result, some people who use AI a lot develop idiosyncratic benchmarks to test AI ability.

Part of this is stylistic:

With a little practice, it becomes easy to find the vibes of a new model. As one example, let’s try a writing exercise: […]

If you have used these AIs a lot, you will not be surprised by the results.

You can see why Claude 4.5 Sonnet is often regarded as a strong writing model. You will notice how Gemini 2.5 Pro, currently the weakest of these four models, doesn’t even accurately keep track of the number of words used. You will note that GPT-5 Thinking tends to be a fairly wild stylist when writing fiction, prone to complex metaphor, but sometimes at the expense of coherence and story (I am not sure someone would use all 47 words, but at least the count was right). And you will recognize that the new Chinese open weights model Kimi K2 Thinking has a bit of a similar problem, with some interesting phrases and a story that doesn’t quite make sense.

But part of the model’s ‘flavor’ is also deeper, more akin to someone’s personality. For example, risk-aversion or agreeableness/sycophancy varies by model. Ask them about a crazy, risky idea, and they’ll give you different ratings that are somewhat consistent over time.

Of course, this isn’t scientific and there’s probably a pretty big placebo effect.

If you are predisposed to like a certain model better, you’ll probably be more generous with it. But at the end of the day, subjectivity matters: It’s how we pick our friends, our partners, and many of the other things in our lives.

So if a model feels better to you, especially when you look at the whole package and all the features wrapped around the base model, then it’s probably the one for you, regardless of benchmarks.

🎨 🎭 The Arts & History 👩‍🎨 🎥

🔥☮️🔥 ‘Woodstock ‘99: Peace, Love, and Rage’ 🕊️

Growing up, I was very familiar with the music of Woodstock ‘94 because a friend of mine had the double-album and listened to it a bunch of times. It was probably where I first heard Primus and Nine Inch Nails.

I paid a lot less attention to Woodstock ‘99, but I knew it was a kind of proto-‘Fyre Festival’ that devolved into riots and general dystopia. Beyond that, I didn’t know much.

This documentary does a good job of putting you on the ground. The overflowing toilets, the lack of food and water, the sexual assaults and riots. But also, the pre-smartphone world where if you lost someone in the crowd, you might not find them for the rest of the day.

It’s not the best doc, and I sometimes wish it editorialized less and just let the viewer make up their mind about what is going on, but I’m still glad I saw it. It’s a decent ‘B-’.

If you’re looking for entertainment, watch Pluribus first. But after that, if you’re looking for something to bring you back to a very specific era in the pre-9/11 USA, this may do the trick.