604: Data Centers in Space, Hock Tan’s Coffee, Xiaomi vs Tesla, Nvidia vs China, Guy Gottfried & TerraVest, James Dyson, Boom Turbines, Base Power, and Comfort Films

"It increases the magnitude of your advantage."

The creation of the world did not take place once and for all time, but takes place every day.

—Samuel Beckett

🎨🧑‍🎨👨‍🔧💡 The world’s latent creativity is vastly greater than we think.

But so are the barriers to creativity: education systems designed for obedience, employers who mistake conformity for competence, social pressure to “be realistic” and fit in, and debt that chains talent to boring jobs.

Let’s tear those down. We all win.

🗓️ 🤯 🕰️🎶 Speaking of creativity, I heard this recently, and it blew my mind a little:

“If the Beatles broke up today, their first album would have been in 2018.”

The duration between the release of Please Please Me and the band’s breakup is 7 years and 19 days.

📚📖📚 If most people are reading less, that makes it even more important for you to read more. It increases the magnitude of your advantage.

🔪 🧅 🍅 🥩 One of life’s little pleasures is slicing through food with a freshly sharpened knife.

Time to give those dull boys a proper edge.

🔎📫💚 🥃 Exploration-as-a-service: your next favorite thing is out there, you just haven’t found it yet!


🏦 💰 Business & Investing 💳 💴

🗣️ Gavin Baker: AI Economics, Data Centers in Space, Nvidia vs ASICs, Edge Bear Case, and the SaaS Opportunity 🚀🤖🐜🛰️🛰️🛰️

Very interesting interview with Baker about the AI race, scaling laws, Nvidia vs Google TPUs, Blackwell-trained models, AWS Trainiums, SaaS + agents, and ROIC on AI so far.

I think the whole thing’s worth listening to, but I want to highlight one part about datacenters in space, which are the “new thing” that everyone is suddenly talking about (and we’ll no doubt hear more as SpaceX approaches IPO…).

Gavin says:

I think the most important thing that’s going to happen in the world, in this world, in the next three to four years is data centers in space. And this has really profound implications for everyone building a power plant or a data center on planet earth. And there is a giant gold rush into this. [...]

What are the fundamental inputs to running a data center? They’re power and they’re cooling, and then there are the chips.

In space, you can keep a satellite in the sun 24 hours a day, and the sun is 30% more intense. You can have the satellite always kind of catching the light. The sun is 30% more intense, and this results in six times more irradiance in outer space than on planet earth. So you’re getting a lot of solar energy. Point number two, because you’re in the sun 24 hours a day, you don’t need a battery. And this is a giant percentage of the cost.

So the lowest cost energy available in our solar system is solar energy and space. Second, for cooling. In one of these racks, a majority of the mass and the weight is cooling. The cooling in these data centers is incredibly complicated. The HVAC, the CDUs, the liquid cooling... It’s amazing to see. In space, cooling is free. You just put a radiator on the dark side of the satellite. And it’s as close to absolute zero as you can get. So all that goes away, and that is a vast amount of cost.

That sounds extremely cool and sci-fi, but I suspect it’s going to take longer than 3-4 years because some really tough challenges need to be solved.

Let’s skip over radiation-hardening of everything and look at what is claimed to be a huge selling point of being in space: Cooling.

Space is, well, empty.

In a vacuum, you don’t get conduction or convection in air or water to move heat away from your very hot chips. You’re left with thermal radiation, and to do that, you need *a lot* of surface area.

A very good “black” radiator sitting around room temperature (~300 K) can radiate on the order of 400–500 watts per square meter.

A modest, say, 5 megawatt data center would need on the order of 10,000 square meters of radiator surface at that temperature. Multiple football fields of panels.

If you start talking about tens or hundreds of megawatts of AI compute, or even gigawatts if space compute is to move the needle vs Earth compute, the radiator farm becomes enormous and heavy.

The good news is that there’s plenty of space in space (ha!), so gigantic radiators are fine, but the extra cost and complexity of getting them up there may erode the advantage of space data centers vs ground ones, especially if the efficiency of AI DCs keeps improving.

You can run those radiators hot 🌡️ to improve the ratio, eg. at ~600 K (~327°C) you get roughly 7,000+ W/m², but silicon chips can’t operate anywhere near that temperature (they typically start throttling around 100°C), so you probably need some kind of massive heat pump to raise temps, which adds even more mass, power draw, and complexity (and thus cost).

But if we’re talking about very large amounts of compute in space, which is the whole point, to get around the difficulty of building gigawatts of power generation on Earth, you need gigawatts of solar panels on one side and to radiate gigawatts of thermal energy on the other. That’s a thermodynamic challenge, to say the least. ☀️
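For the curious, here’s a back-of-the-envelope sketch of the radiator numbers above using the Stefan-Boltzmann law (radiated power per unit area = emissivity × σ × T⁴). The assumptions are mine and generous: a perfect blackbody (emissivity of 1), a single radiating face, and no sunlight or Earth infrared being absorbed. The function names are made up for illustration.

```python
# Stefan-Boltzmann constant in W / (m^2 * K^4)
SIGMA = 5.670374419e-8


def radiated_flux(temp_k: float, emissivity: float = 1.0) -> float:
    """Watts radiated per square meter by a surface at temp_k.

    Assumption: ignores any heat the panel absorbs from the Sun or Earth.
    """
    return emissivity * SIGMA * temp_k ** 4


def radiator_area(heat_w: float, temp_k: float, emissivity: float = 1.0) -> float:
    """Square meters of one-sided radiator needed to reject heat_w watts."""
    return heat_w / radiated_flux(temp_k, emissivity)


# Compare a small data center with a gigawatt-scale one,
# at room temperature and at a much hotter radiator.
for label, heat_w in [("5 MW", 5e6), ("1 GW", 1e9)]:
    for temp_k in (300, 600):
        area = radiator_area(heat_w, temp_k)
        print(f"{label} at {temp_k} K: {area:,.0f} m^2")
```

Because the flux scales with T⁴, doubling the radiator temperature cuts the required area by 16x, which is why running hot is so tempting despite the heat pump problem. A real design would radiate from both faces and have an emissivity below 1, so treat these as order-of-magnitude figures only.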

(To be clear, I’m no space data center engineer (there’s a new job title!), so it’s very possible that I’m missing something and that dealing with the heat from hundreds of thousands of GPUs in space is easier than I think. If you know a clever solution to this, please let me know! I want to learn!)

🔌🚙🚘🇨🇳 MKBHD Reviews Xiaomi’s EV: Is Tesla Cooked? + The Catch-22 of Chinese Cars

Marques Brownlee lived with a Chinese EV for a few weeks and posted his review.

Note, Xiaomi isn’t a legacy carmaker. They announced their car division in March 2021. They also make smartphones, tablets, fitness wearables, refrigerators, air purifiers, wifi routers, headphones, robotic vacuum cleaners…

They incubated a carmaker in a matter of years thanks to the platform reboot caused by electrification. I don’t think they could have done this with internal combustion.

Back to MKBHD:

The car costs $42K, and turns out it feels like $75K+ EASILY

A+ software and features. Feels like what would happen if Apple made a car

Build quality is excellent all the way around. And materials (leathers, metals, etc) are all premium

It crushes all the fundamentals to make it livable: 320 miles range, super comfortable seats, excellent air suspension, active noise cancellation, great displays and cameras, bright clear HUD, Self driving

It has a MODULAR interior design (detailed in the video)

Performance is sneaky great. This is just the “SU7 Max” spec, but 660 horsepower 0-60 in 2.8 seconds? Sheesh

Some have pointed out that he’s comparing Chinese pricing to U.S. pricing. eg. Teslas are cheaper in China, so the diff between the two isn’t as high as if you compare across countries, but still, considering everything else…

I think it’s pretty obvious that Elon got bored with cars a few years ago and Tesla took its foot off the accelerator when it comes to products, right as China’s EV industry became hyper-competitive, which drove very fast iteration and innovation.

Don’t get me wrong, Tesla has done all kinds of impressive things in recent years, but few of those have been on the physical vehicles-as-products front. That side has stagnated.

They’ve been slow to refresh models, slow to launch new ones, and slow to fill obvious lineup gaps that are ripe for the picking, eg. make an electric pickup truck that is more conventional than the Cybertruck for the millions of people who want something other than a low-polygon stainless steel box, or make a crossover SUV that sits in between the Model Y and the Model X, without polarizing falcon-wing doors.

There’s a bit of a Catch-22 going on now:

If Chinese EVs stay blocked by tariffs and regulatory barriers, Western carmakers may feel protected in the short term, but by avoiding that competition, they’ll become even less competitive over time.

If Chinese EVs are allowed to be sold in Western markets (once they create models that meet safety standards, etc), they may crush Western carmakers, like how the Japanese carmakers did in the 1980s and South Koreans more recently.

The main difference is that Japan and South Korea were allies, and their cars weren’t computers on wheels with cameras, microphones, and internet connections. The national security angle is certainly different today.

🇺🇸🤖🚫 🇨🇳 U.S. Lets Nvidia Sell H200s to China… and China Might Say No 🤷‍♀️

It’s Groundhog Day, again. The White House has changed its mind on whether Nvidia can sell GPUs to China, again.

Rather than a cut-down version of Blackwell, it’s the vanilla H200 (as opposed to the China-specific cut-down H20) that would now be allowed. Trump posted:

I have informed President Xi, of China, that the United States will allow NVIDIA to ship its H200 products to approved customers in China, and other Countries, under conditions that allow for continued strong National Security. President Xi responded positively! $25% will be paid to the United States of America.

Note that the reverse-tariff on sales to China went from 15%, when this was first discussed for the H20/MI308, to 25% ¯\_(ツ)_/¯

I used to be more ambivalent about whether it was a good idea or not to sell GPUs to China.

I could see both sides of the argument:

More scale for the Nvidia ecosystem, more billions going into the Western stack’s R&D and supply chain, more AI researchers growing up on CUDA, etc.

But also, these tools are extremely powerful. Do we want an authoritarian, non-democratic government to boost its industrial/military/mass surveillance capabilities using hardware and software provided by American companies 🤔

I think that over time, I’ve moved my position more in the direction of *not* selling GPUs to China, even if they are a generation or two behind. H200s are still better than what China can make, and Nvidia+TSMC can make them in quantities larger than anyone else.

Even if the U.S. sells H200s, I don’t think at this point it’ll slow down Huawei & local firms too much. Maybe it would have a few years ago, but that ship has sailed ⛵

Pretty much all current frontier models were trained on Hopper GPUs and most of the world's largest clusters are still Hopper. Blackwell is getting the headlines, but it’s still ramping up, and the next generation of models is still being pre-trained.

The good news is that China is so focused on becoming self-sufficient when it comes to compute that it appears it will keep the supply of H200s low through a strict system of rules and approvals:

The Financial Times reports that Chinese regulators have been discussing ways to allow only limited access to H200 — including an approval process where buyers must explain why domestic chips cannot meet their needs — and could bar the public sector from purchasing Nvidia hardware altogether. At the time of writing, no official confirmation of this has been released by the Chinese government.

We’ll see who wins on the Chinese side: those who want to decouple from the U.S. AI ecosystem ASAP, or those who are training models while compute-starved, watching the gap vs the U.S. widen as Blackwell fully comes online.

🗣️🇨🇦 Guy Gottfried Follow-Up on TerraVest

I won’t spend a lot of space on this one because as a Canadian small-cap, it’s not that well known. But I’ve posted a few times about Gottfried and TVK in the past, so consider this a follow-up:

Three things interest me in this one:

The history of TerraVest and its transformation from a distressed company into a serial acquirer with skills in capital allocation and operations

Some color on the current management and the runway in front of the company

How Gottfried thinks about this decade+ investment that is still the largest position in his portfolio despite a run of multiple thousands of percent in the stock.

h/t friend-of-the-show C.J.

☕ Hock Tan’s Coffee Chat at VMware ✂️

Tan is well-known in the industry, but this anecdote about how he operates is worth sharing:

Early last year, Broadcom CEO Hock Tan decided to host what he likes to call a “coffee chat” with the employees of VMware, the software giant that the Broadcom CEO had recently purchased for $84 billion. The companywide meeting was Tan’s effort to introduce himself and answer questions from those employees about life at the semiconductor firm.

When Tan acquires a company, the article says, “he puts himself on a mission to identify which parts of it qualify as ‘diamonds’ and which are ‘turds’.”

At that time, VMware’s Palo Alto, Calif., campus sprawled over 18 buildings and 100 acres of delicately pruned gardens, an outdoor amphitheater and a turtle pond. Employees enjoyed nice HR perks, too, including child care, marital counseling and an annual $1,000 “wellbeing” allowance for anything from dumbbells to Xboxes.

Contrast this with Broadcom, where there is no companywide holiday party and “those who do travel fly economy and stay in no-frills hotels, including Tan himself.”

When Tan opened the discussion up to questions, a VMware employee asked if Broadcom provided such benefits. Tan seemed surprised. “Why would I do any of that? I’m not your dad,” he replied, according to three people in attendance.

Over the next few months, Tan fired about half of VMware’s 38,000 employees. He also stripped down the campus, selling all but five of the buildings. At the remaining offices, Tan had the espresso machines removed. Somewhat against the odds, the turtles were allowed to stay.

There’s also the Line of Doom!

Broadcom is known for conducting regular layoffs and reductions in force. At quarterly companywide meetings, Tan always includes a PowerPoint slide that lists each department by revenue growth—with a red horizontal line drawn across the chart that shows the third of the company with the weakest increases, three former employees recalled. Internally, Tan calls this “the line of doom”—any department below the line for multiple quarters is considered to be underperforming, sparking concerns among those employees that they may be next on the chopping block.

🔥 David Senra Finally Talks to his Hero James Dyson 🔥

The road that led here was incredible to follow over the past 9 years since friend-of-the-show David Senra (💚 🥃 📚🎙️) launched Founders Podcast.

Every 100 episodes, he re-reads James Dyson’s first memoir because it’s the ultimate book about how much focus, perseverance, pain tolerance, and dedication to the craft it takes to build something special.

I highly recommend listening to this interview. I really enjoyed it.

Speaking of time flying, it’s already been 3.5 years since this podcast we did:

🧪🔬 Science & Technology 🧬 🔭

🛩️ Supersonic Plane Maker Boom Unveils… a 42 Megawatt Gas Turbine for Data Centers 🔌⚡

This is cool. Talk about dual-use technology.

Boom has been designing its own turbines for use in its supersonic passenger planes, but it so happens that this technology can also be useful for power generation.

Blake Scholl writes:

The problem? The “blade servers” of the energy world are old tech and they’re sold out.

Because the most popular “aeroderivative” turbines are based on subsonic jet engines, they’re happiest when the outside air temperature is -50°F—like it is when going Mach 0.8 at 30,000 feet. As outside temperatures rise, there is no option but to throttle back the engines—or else the turbine blades literally melt down.

These turbines begin losing power at about 50°F and by the time it’s 110°—as often happens in popular datacenter locations like Texas—30% of generation capacity is lost.

Because they are not optimized for these higher ambient temps, they are often water-cooled on particularly hot days to keep them closer to their sweet spot of operations.

The Boom turbines, on the other hand, allow for waterless operation 💧
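To make the derating numbers quoted above concrete, here’s a tiny sketch of what they imply for a legacy aeroderivative turbine at different outside temperatures. The big assumption (mine, not Boom’s) is that the loss grows in a straight line from 0% at 50°F to 30% at 110°F; real derating curves depend on the specific machine. The function name and the 40 MW nameplate are hypothetical.

```python
def derated_fraction(
    ambient_f: float,
    knee_f: float = 50.0,       # temperature where losses start (from the quote)
    hot_f: float = 110.0,       # hot-day reference temperature (from the quote)
    loss_at_hot: float = 0.30,  # share of capacity lost at hot_f (from the quote)
) -> float:
    """Fraction of nameplate output still available at ambient_f.

    Assumption: losses grow linearly between knee_f and hot_f and keep
    the same slope above hot_f. This is an illustration, not a real curve.
    """
    if ambient_f <= knee_f:
        return 1.0
    slope = loss_at_hot / (hot_f - knee_f)
    return max(0.0, 1.0 - slope * (ambient_f - knee_f))


NAMEPLATE_MW = 40.0  # hypothetical legacy turbine rating

for temp_f in (40, 50, 80, 110):
    available = NAMEPLATE_MW * derated_fraction(temp_f)
    print(f"{temp_f}°F: {available:.1f} MW available")
```

Under that linear assumption, the hypothetical 40 MW unit delivers about 28 MW on a 110°F Texas afternoon, which is exactly when air-conditioning load makes grid power scarcest.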

Nonetheless, major manufacturers all have backlogs through the rest of the decade and none is building a new-generation advanced-technology turbine.

When we designed the Symphony engine for Overture, we built something no one else has built this century: a brand-new large engine core optimized for continuous, high‑temperature operation.

A subsonic engine is built for short bursts of power at takeoff. A supersonic engine is built to run hard, continuously, at extreme thermal loads.

Symphony was designed for Mach 1.7 at 60,000 feet, where effective temperatures reach 160°F—not the frigid -50°F conditions where legacy subsonic engines operate.

The beauty of this is that there’s such demand that they ALREADY have a launch order for 1.21GW and well over $1.25B in backlog, and this “side business” helps them fund the continued development of their supersonic plane!

To be clear, GE/Siemens/Mitsubishi have way more experience making gas turbines for power, vastly more operating hours on fleets of aeroderivatives, and they are ramping up capacity right now. They’ll be hard to beat:

Boom advertises 39% efficiency (simple-cycle), 42 MW.

GE’s latest LM2500XPRESS+G5 DLE packages are in the ~38 MW class in simple cycle, with roughly 39–41% efficiency, depending on configuration and site conditions, with optional combined-cycle configuration up to ~54% overall.

GE’s LM6000 family (also an aeroderivative) can hit ~41% simple-cycle efficiency and >56% in combined-cycle configurations while generating between 46 and 53 MW.

But as long as there is a strong demand for behind-the-meter small turbines for AI DCs and the traditional turbine makers can’t fill it all, Boom may have a viable side-business. They won’t compete with larger combined-cycle turbines for power plants, but they may be able to pull this off if they execute well.

And because both turbines are largely identical, all this ground testing and operations on the power side give them reliability data and analytics that will be useful with airplane development and reliability testing.

CEO Blake Scholl wrote:

To overcome supply chain bottlenecks, we’re vertically integrating manufacturing. We’ve already started building the first Superpower turbine, and we’re building a new Superfactory in Denver which will make 2GW/year of turbines from raw materials

I wish more companies thought outside the box like this!

🗣️🗣️ Interview: Base Power, The Home Battery/Distributed Virtual Power Plant Startup 🔌🔋🔋🔋🔋🏡

Speaking of electricity, friend-of-the-show Ti Morse has a very enjoyable interview with the co-founders of Base Power (Trivia: Zach is the son of Michael Dell).

The interview doesn’t go into much detail about what they do, so here’s context before you jump in, because it’s a very cool concept:

They install large home batteries (25 kWh or 50 kWh packs) that can keep a typical house powered for 24-48 hours during an outage

It’s not just hardware. Base is a retail electricity provider (in Texas/ERCOT) that:

Sells you your electricity plan

Installs and owns/leases the battery

Handles permits, installation, and ongoing maintenance

Instead of you buying a $10k–$20k battery outright, you:

Pay a comparatively small upfront fee, often in the hundreds of dollars, e.g. ~$695–$995 depending on configuration

Commit to buy your power from Base for a multi-year term

Pay a membership/energy rate that is pitched as “always below market average” and ~10–15% cheaper than typical Texas retail power plans.

In exchange, you let Base control the battery most of the time: when the grid is fine, they use the aggregated battery fleet as a virtual power plant and discharge some of the stored energy back into the grid during peak demand to earn money.

When the grid fails, the battery flips to backup mode for your house.

So they’re essentially building a distributed fleet of batteries that doubles as (1) customer backup and (2) a virtual power plant/grid asset.

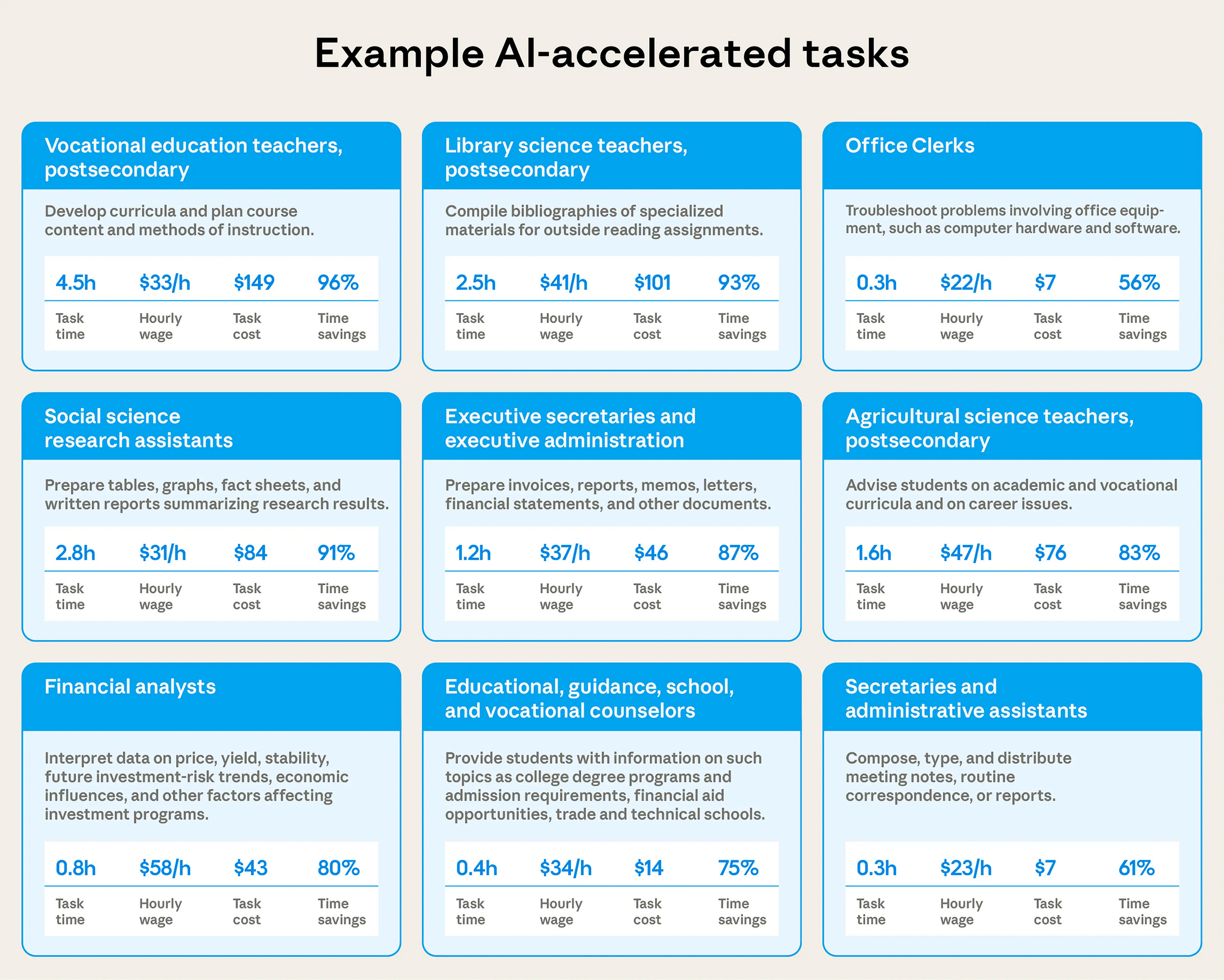

📋🖇️ Anthropic Looks at Real-World Usage of Claude and Estimates AI Productivity Gains 🤖

Using a privacy-preserving analysis method, they looked at 100k real conversations with Claude and estimated how long the tasks in these conversations would take with and without AI assistance.

From this, they try to extrapolate about what the productivity impact of AI could be across the wider economy:

Across one hundred thousand real world conversations, Claude estimates that AI reduces task completion time by 80%. We use Claude to evaluate anonymized Claude.ai transcripts to estimate the productivity impact of AI. According to Claude’s estimates, people typically use AI for complex tasks that would, on average, take people 1.4 hours to complete. By matching tasks to O*NET occupations and BLS wage data, we estimate these tasks would otherwise cost $55 in human labor.

They tracked legal and management tasks that would have taken nearly two hours without Claude, and healthcare assistance tasks were completed 90% more quickly, while hardware issues saw time savings of 56%.

Extrapolating these estimates out suggests current-generation AI models could increase US labor productivity growth by 1.8% annually over the next decade

This would double the annual growth the US has seen since 2019! 😮
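To get a feel for what that would compound to, here’s a quick sketch. Two assumptions of mine: I read the 1.8% as percentage points added to annual growth, and I use a 1.8% baseline because that’s what the “double” comparison implies (it isn’t a figure I’ve checked against official statistics). The function name is made up.

```python
def cumulative_gain(annual_growth: float, years: int) -> float:
    """Total productivity gain after compounding annual_growth for `years` years."""
    return (1 + annual_growth) ** years - 1


BASELINE = 0.018  # assumption: baseline growth implied by the "double" comparison
AI_BOOST = 0.018  # the quoted estimate, read as added percentage points per year
YEARS = 10

without_ai = cumulative_gain(BASELINE, YEARS)
with_ai = cumulative_gain(BASELINE + AI_BOOST, YEARS)

print(f"Baseline only: {without_ai:.1%} over {YEARS} years")
print(f"Baseline + AI: {with_ai:.1%} over {YEARS} years")
```

Under those assumptions, the decade-long cumulative gain goes from roughly 20% to roughly 42%, so the gap is a bit more than double thanks to compounding.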

But this isn’t a prediction of the future, since we don’t take into account the rate of adoption or the larger productivity effects that would come from much more capable AI systems.

Our analysis has limits. Most notably, we can’t account for additional time humans spend on tasks outside of their conversations with Claude, including validating the quality or accuracy of Claude’s work. But as AI models get better at time estimation, we think our methods in this research note could become increasingly useful for understanding how AI is shaping real work.

While the limitations of the methodology are real, it’s a good start at trying to track the *real-world* impact of these tools.

All the details are here, if you want to go deeper.

🎨 🎭 The Arts & History 👩‍🎨 🎥

🍕🍫🍪What are Your Favorite Comfort Films? 🛋️📺😌

This video essay helped me better understand some of the things I was feeling unconsciously.

I don’t know if you ever feel like that, but I often find myself wanting to watch something, looking at the films on my to-watch list, and most of them seem stressful: they’re about people getting killed or abused, characters who are feeling anguish or depression.

Sometimes that’s great, but not all the time. If I don’t want that vibe in my life at the moment, I go back to comfort films. They feel like a cozy old sweater that you can just slip into.

What are your favorite comfort films?

Very interesting. With the Gavin Baker interview, MKBHD on Xiaomi, and the Dyson interview, you hit my favorite pieces of info last week. How Gavin structures his models of the world is very interesting and provides great things to dive deeper into. The Boom side business looks really interesting, and I hope that Blake becomes one of the next generation of Elons who can solve hard engineering problems. The Opus in the real world update was also quite striking.

That VMware story is brutal but kinda fascinating. Tan's "why would I do any of that, I'm not your dad" line is oddly refreshing in a world where companies pretend to be families. The line of doom system actually has some logic to it: it forces teams to stay sharp instead of cruising on legacy momentum.