116: Nvidia's New Data-Center CPU, Cloudflare Partners with Nvidia, Visa's Trillions, Founders vs Investors, Burden of Proof, Asch Negatives, AMA Update, and Six Feet Under

"if no one speaks up for them... they will cease to exist"

“To come up with whole new structures, whole new ways of doing things… requires freedom and experimentation, and a certain amount of disorder.”

(yet so many organizations fight that necessary touch of disorder with all they've got)

“You can’t navigate well in an interconnected, feedback-dominated world unless you take your eyes off short-term events and look for long term behavior and structure; unless you are aware of false boundaries and bounded rationality; unless you take into account limiting factors, nonlinearities and delays.”

“The behavior of a system cannot be known just by knowing the elements of which the system is made.”

“A change in purpose changes a system profoundly, even if every element and interconnection remains the same.”

“Nonlinearities are important not only because they confound our expectations about the relationship between action and response. They are even more important because they change the relative strengths of feedback loops. They can flip a system from one mode of behavior to another.”

“Don't be stopped by the ‘if you can't define it and measure it, I don't have to pay attention to it’ ploy. No one can define or measure justice, democracy, security, freedom, truth, or love. But if no one speaks up for them, if systems aren't designed to produce them, and point toward their presence or absence, they will cease to exist.”

― Donella H. Meadows, Thinking in Systems: A Primer (big thanks to my friend Rishi Gosalia for recommending this great book a few years ago; tell your wife expectations are now high for next year’s birthday cake! 🎂)

🥁 George Mack posted something I really like in his March 30th newsletter. The short version:

You’ve probably heard of the Asch conformity experiments, in which actors posing as fellow participants pressure a subject into saying that two lines of obviously different lengths are the same length. People will often go along with the group and override their own conclusions rather than stand out and contradict others.

Those who bend under the pressure are “Asch positive”. Just as interesting are the people who are consistently “Asch negative”. George writes:

If someone is Asch negative, they follow what they believe to be true regardless of the crowd’s opinion.

Below is what Asch negative looks like:

(I don’t know if the photo really shows that or if the guy had his arm in the air one second before or after the shot was snapped, but the idea remains powerful)

It made me think about all the times in my life when I resisted this pressure, and the times when I didn’t… and about how it got easier as I got older (is this age? Is this deliberate practice? The beginnings of wisdom?).

It’s just a very interesting topic. I’ll definitely be talking about this with my young kids, because it’s something important to know about others, and about yourself.

🎤 Thank you if you have submitted a question to my Q&A/AMA podcast thing (the form is still open, so it’s not too late if you think of something you want to ask… and thanks to Benjamin Dover for submitting a whole bunch of interesting questions; there was no rule that it had to be just one, so tip of the hat for the insatiable curiosity).

My original plan was to reply last week, or maybe early this week, but because we’re under a third-wave lockdown where I live and my oldest son is doing remote school, it’s very hard to find enough time & quiet to concentrate and record, so this project is being pushed back a bit.

Patience is a virtue, I guess ¯\_(ツ)_/¯

Update: After writing the above I learned that the lockdown has been extended to April 25th… Ugh

🖥 Apple has announced an event on April 20th.

Please release new, fully redesigned M1x iMacs with Face ID (or at least Touch ID) and a better, larger screen (30-32" would be nice, and the footprint would be similar with modern slim bezels. Maybe bump it up to 6K?).

And while they’re at it, better integrated speakers that also use HomePod-style computational audio.

Please please.

🤔 I'm surprised not all smartphone web browsers have the option to move the URL/search bar to the bottom instead of the top (like this).

It’s much more thumb-reachable. The touch-convenience heat map is clearly strongest in a roughly 90-degree arc that starts at the bottom right of the screen.

Top placement came from the desktop paradigm, which has different tradeoffs, and it made sense for the screen sizes of the time; but on today’s big phones it’s problematic UX, especially if you swipe-type one-handed rather than thumb-type with both hands.

💚 🥃 Thanks to the 4,030 (as of this morning) of you who endure my stupid dad jokes and random thoughts.

You make this project really fun (it’s not nearly as fun to write if nobody reads).

4k is both a milestone and just another step along the way.

I guess I just wanted to use this round number as an excuse to say 𝕥𝕙𝕒𝕟𝕜𝕤!

Investing & Business

‘Always know where the burden of proof lies.’

Good thread by Paul Enright:

Investing in controversial stocks is a bit like being a lawyer. Knowing whether you are the prosecutor and have to prove something or the defense who can sit back and see if the prosecution fails to make its case.

Intel just flipped its narrative. If you are bearish you now have to prove Gelsinger’s plan won’t work. Will be a long while before you’d have to prove that it actually will work.

These moments usually cause a major sentiment shift in a stock.

Twitter had a similar recent narrative shift.

Always know where the burden of proof lies.

Founder / Investor Balance of Power

The decreasing cost of starting a startup has in turn changed the balance of power between founders and investors. Back when starting a startup meant building a factory, you needed investors' permission to do it at all. But now investors need founders more than founders need investors, and that, combined with the increasing amount of venture capital available, has driven up valuations. (Source)

🏭 → 💻

New Job Openings at Cloud Divisions of Amooglesoft

Also from Weng:

The % of companies looking for AWS, Azure or Google Cloud expertise continues to rise. It has increased from 13.7% a year ago to 16.8% now, which is impressive when you consider the size of all of them.
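There are two ways to read that jump, and they're easy to mix up. Here is a tiny Python sketch using only the two percentages from the quote (the function names are just illustrative): the share rose by about 3 percentage points, which is roughly a 23% increase relative to where it started.

```python
def percentage_point_change(before_pct: float, after_pct: float) -> float:
    """Absolute difference between two shares, in percentage points."""
    return after_pct - before_pct


def relative_change_pct(before_pct: float, after_pct: float) -> float:
    """Change relative to the starting share, expressed as a percent."""
    return (after_pct - before_pct) / before_pct * 100


# Share of companies hiring for AWS/Azure/Google Cloud skills (figures from the quote)
year_ago, now = 13.7, 16.8
print(f"{percentage_point_change(year_ago, now):.1f} pp")     # 3.1 pp
print(f"{relative_change_pct(year_ago, now):.1f}% relative")  # 22.6% relative
```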

Cloud migration skills are still in demand. The number of companies hiring for “cloud migration” expertise is up 44% YoY, and the chart below shows that demand started shortly after Covid/WFH started. It shows no sign of subsiding.

Big companies in typically conservative industries like financial services are still beginning their cloud migration projects.

Visa 2020 Investor Day Highlights

Good thread from last year by The Dentist1 about Visa (I missed it at the time, but better late than never — Visa does these every 3 years, and long-term vision and plans don’t change that quickly):

When reading about these huge global C2B and B2B payment markets, I’m always impressed by how casually phrases like “back to the $185T opportunity” get thrown around… That right there is a lot of moolah.

Science & Technology

Nvidia Announces Data-Center CPU (C, not G)

This is big (I’ve set the video above to start at the section where they discuss Grace, 58 minutes in):

NVIDIA today announced its first data center CPU, an Arm-based processor [...] named for Grace Hopper, the U.S. computer-programming pioneer

They call it their first “data center” CPU because they’ve been making Tegra SoCs, which include Arm CPU cores, for over a decade. A custom Tegra X1 variant is what’s inside the Nintendo Switch.

Here’s more on Grace Hopper, who was both a computer scientist and United States Navy rear admiral.

Hopper was the first to devise the theory of machine-independent programming languages, and the FLOW-MATIC programming language she created using this theory was later extended to create COBOL, an early high-level programming language still in use today.

Back to Nvidia, with some shots fired at Intel:

Grace is a highly specialized processor targeting workloads such as training next-generation NLP models that have more than 1 trillion parameters. When tightly coupled with NVIDIA GPUs, a Grace CPU-based system will deliver 10x faster performance than today’s state-of-the-art NVIDIA DGX-based systems, which run on x86 CPUs.

Jensen added that they’ll keep supporting both x86 and Arm CPUs going forward, but this is still a beachhead (or will be in 2023, when the chip becomes available).

For now, Grace is specialized for extremely memory-intensive ML tasks, but I expect Nvidia to enter new verticals over time, and I wouldn’t be entirely surprised if it eventually offered a more general-purpose CPU that isn’t only bundled into its own products (though that will probably depend on the competitive landscape; they won’t make one if it can’t be competitive).

There aren’t a ton of details about what’s under the hood, but here’s what we know so far:

Underlying Grace’s performance is fourth-generation NVIDIA NVLink® interconnect technology, which provides a record 900 GB/s connection between Grace and NVIDIA GPUs to enable 30x higher aggregate bandwidth compared to today’s leading servers.

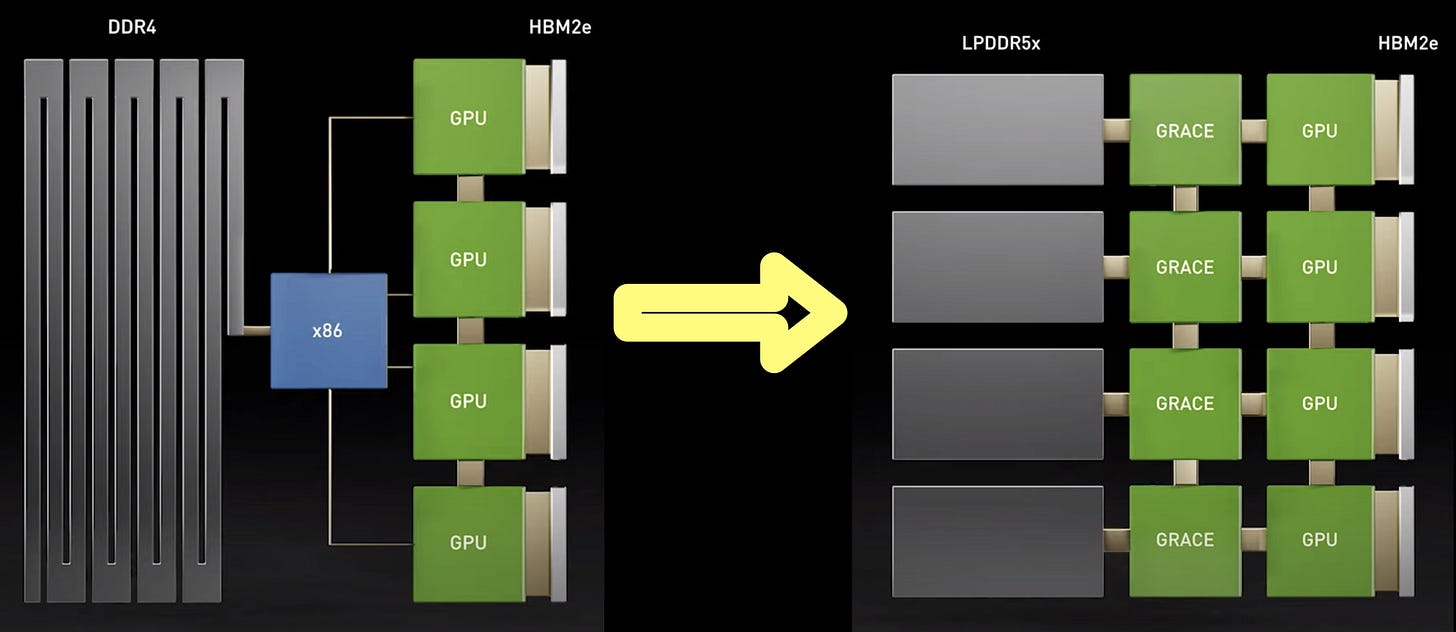

Grace will also utilize an innovative LPDDR5x memory subsystem that will deliver twice the bandwidth and 10x better energy efficiency compared with DDR4 memory. In addition, the new architecture provides unified cache coherence with a single memory address space, combining system and HBM GPU memory to simplify programmability.

This is largely about removing the memory bottleneck of the more traditional configuration, where an x86 CPU sits between the GPUs and main system RAM. In other words, the GPUs could exchange data extremely quickly among themselves over NVLink, but were limited whenever they had to go through the CPU to main RAM. The Grace system connects everything via NVLink to faster memory, with more and wider lanes.
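A toy way to see why the hop through the CPU matters: when data crosses several links in series, the slowest link caps the whole path. The Python sketch below models only that idea. Apart from the 900 GB/s NVLink figure Nvidia quotes, every link speed and the data size are hypothetical numbers picked to show the shape of the problem, not published specs for DGX or Grace.

```python
from typing import Sequence


def path_bandwidth(links_gb_s: Sequence[float]) -> float:
    """Links traversed in series: the slowest one caps the whole path."""
    if not links_gb_s:
        raise ValueError("a path needs at least one link")
    return min(links_gb_s)


def transfer_seconds(size_gb: float, links_gb_s: Sequence[float]) -> float:
    """Idealized transfer time; ignores latency, contention and protocol overhead."""
    return size_gb / path_bandwidth(links_gb_s)


# HYPOTHETICAL link speeds in GB/s, for illustration only.
# Old layout: GPU -> slow hop through the x86 host -> system RAM
old_path = [600.0, 50.0, 200.0]
# New layout: GPU -> 900 GB/s NVLink (Nvidia's figure) -> Grace -> LPDDR5x (hypothetical)
new_path = [900.0, 500.0]

data_gb = 100.0  # hypothetical amount of data pulled from system memory
print(transfer_seconds(data_gb, old_path))  # 2.0 (seconds, capped by the 50 GB/s hop)
print(transfer_seconds(data_gb, new_path))  # 0.2 (seconds, capped by the memory side)
```

The design point is that speeding up the fast links does nothing for the old path; only widening the narrowest hop, which is what Grace's layout targets, changes the result.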

The CPU cores themselves don’t seem to have much secret sauce. I think they’re based on the upcoming Arm Neoverse designs; this doesn’t sound like a radical custom design like Apple’s M1 (unless they are keeping some important details hidden), but the way the whole system is architected seems to provide huge benefits.

Here’s a collage I made of their slides to show the architectural difference between the old DGX and the new one with Grace:

The longer DDR4 bar shows the higher latency of that pool of memory, and how it was bottlenecked through the x86 CPU. The new design has faster memory with wider pipes that flow through more Arm CPUs, each of which is linked to a GPU with wider pipes. That’s how they get to that insane 900 GB/s.

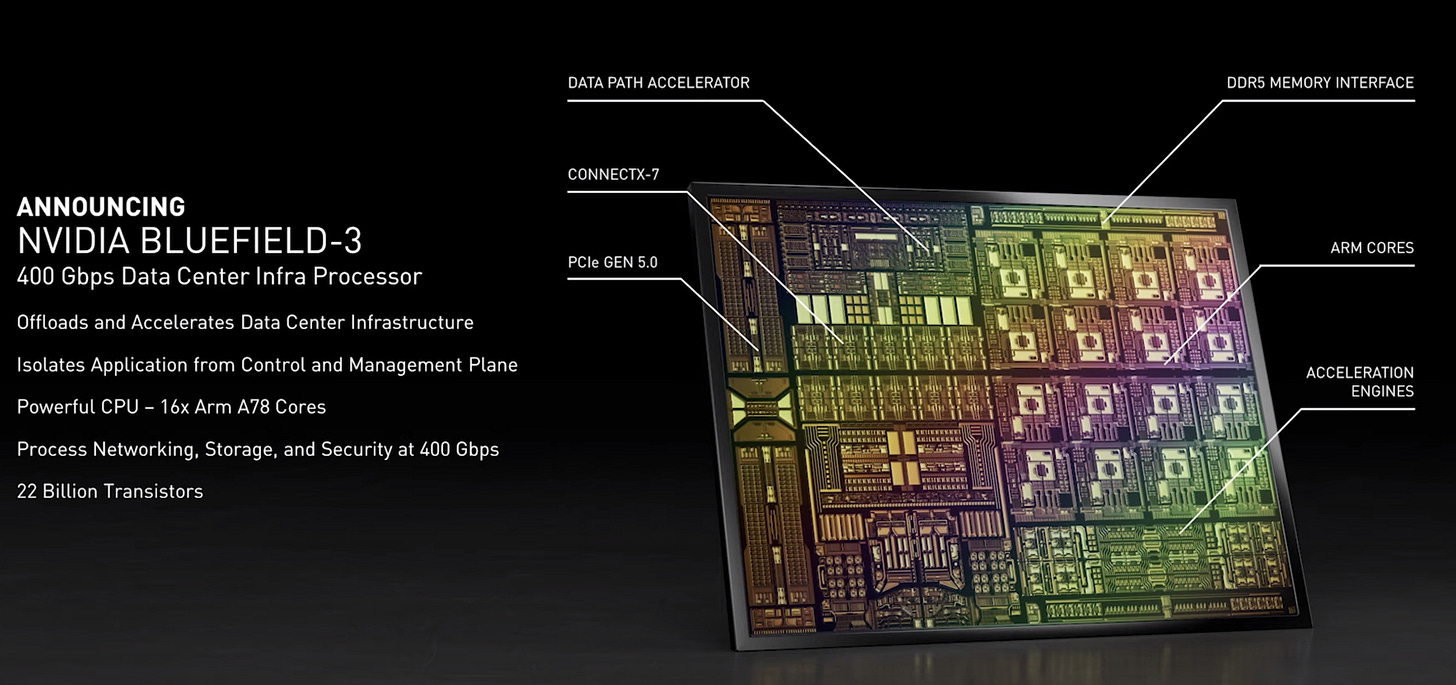

The BlueField-3 announcement was also pretty cool:

One BlueField-3 DPU delivers the equivalent data center services of up to 300 CPU cores, freeing up valuable CPU cycles to run business-critical applications. [...]

BlueField-3 DPUs transform traditional infrastructure into “zero-trust” environments — in which every data center user is authenticated — by offloading and isolating data center infrastructure from business applications. This secures enterprises from cloud to core to edge while increasing efficiency and performance.

The industry’s first 400GbE/NDR DPU, BlueField-3 delivers unmatched networking performance. It features 10x the accelerated compute power of the previous generation, with 16x Arm A78 cores and 4x the acceleration for cryptography. BlueField-3 is also the first DPU to support fifth-generation PCIe and offer time-synchronized data center acceleration.

They even teased BlueField-4 for 2024, with 64 billion transistors (!), 1,000 TOPS (!!), and 800 Gb/s of throughput (!!!).

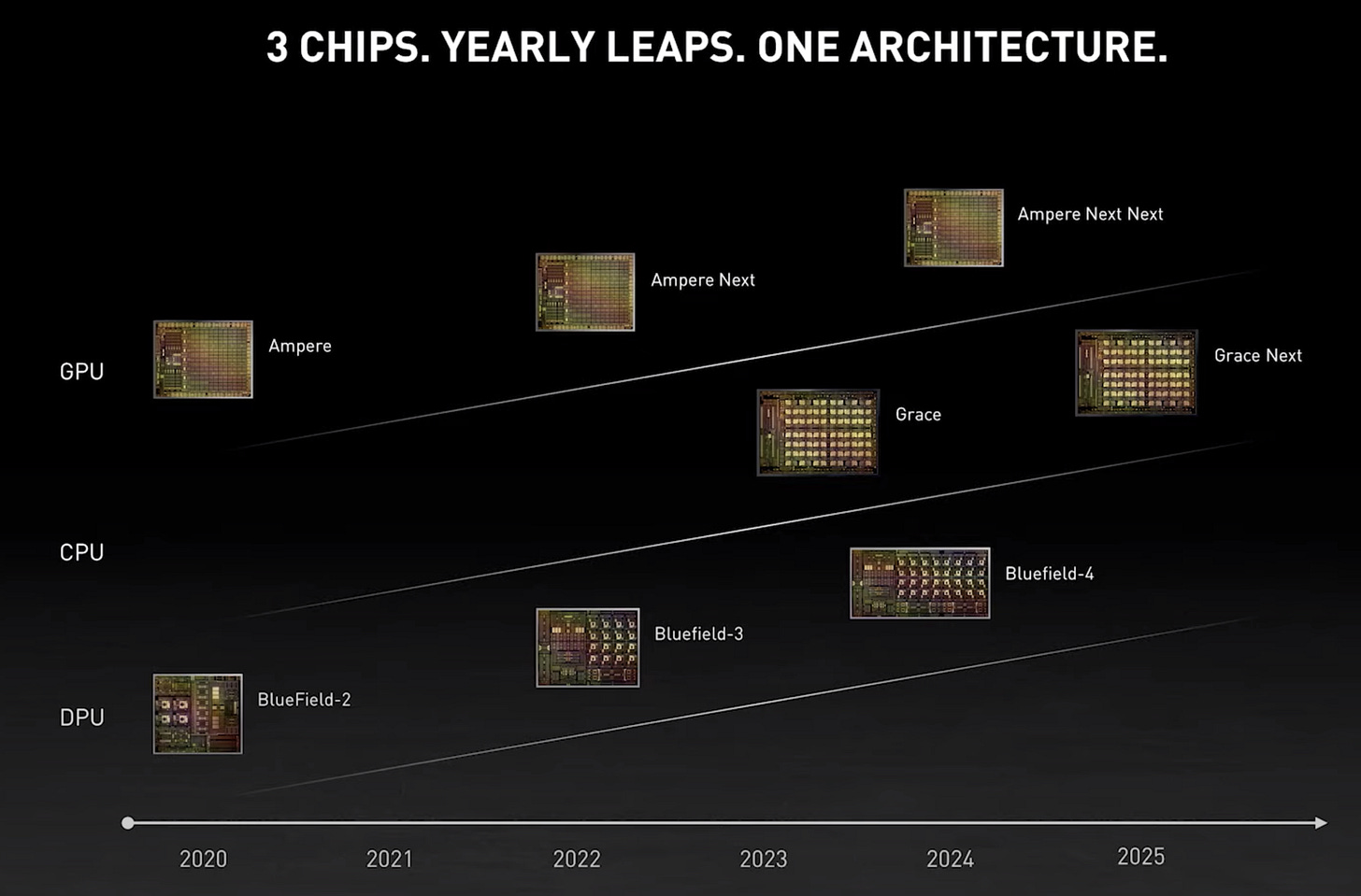
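One thing that makes these numbers hard to compare at a glance: networking specs like BlueField's are quoted in gigabits per second (lowercase b), while the NVLink figure is in gigabytes per second (uppercase B). A minimal Python sketch of the conversion, assuming 8 bits per byte and ignoring encoding and protocol overhead; it's unit arithmetic only, not a performance comparison, since these links do different jobs.

```python
BITS_PER_BYTE = 8


def gbits_to_gbytes(gbit_per_s: float) -> float:
    """Convert gigabits per second to gigabytes per second."""
    return gbit_per_s / BITS_PER_BYTE


def gbytes_to_gbits(gbyte_per_s: float) -> float:
    """Convert gigabytes per second to gigabits per second."""
    return gbyte_per_s * BITS_PER_BYTE


print(gbits_to_gbytes(400))  # 50.0: BlueField-3's 400 Gb/s networking
print(gbits_to_gbytes(800))  # 100.0: the teased BlueField-4's 800 Gb/s
print(gbytes_to_gbits(900))  # 7200: the 900 GB/s Grace-to-GPU NVLink, in Gb/s
```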

So they now have three main chip lines (GPU, CPU, and DPU) and will upgrade them on a cadence that looks like this:

Integration, Pros & Cons

Ambitious, but the approach also brings a lot of benefits, because the pieces of the puzzle can be designed to work better together (including with the all-important software stack).

Internal roadmaps can be cross-pollinated in ways that roadmaps of separate companies can’t.

What do I mean? Well, let’s say that Nvidia announces a new BlueField DPU. If that DPU could only be integrated with third-party equipment, the makers of that equipment could only start integrating and optimizing for it once it was unveiled. But internally, Nvidia can spend a long time making sure the rest of its line is optimized for the new DPU and integrates well with it as soon as it comes out.

Of course, there are other benefits to modular ecosystems, with a large number of companies specializing in various parts of the stack and all competing with each other, but sometimes it’s hard to beat an integrated, scaled player that is firing on all cylinders (e.g., Apple).

Historical Trivia: Nvidia tried to go into the data-center CPU business about a decade ago with Project Denver, but it didn’t really go anywhere. It was probably just too early (in part because at the time the “Armv7 architecture was stuck at 32-bit processing” and the 40-bit memory addressing extension wasn’t yet released).
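Why 32 bits was such a constraint for a server chip comes down to simple arithmetic: with byte-addressable memory (an assumption of this sketch), an n-bit address can reach 2^n bytes. A quick Python check of the two widths mentioned above:

```python
GIB = 2 ** 30  # bytes in one gibibyte


def addressable_bytes(address_bits: int) -> int:
    """Distinct byte addresses reachable with an address of the given width."""
    return 2 ** address_bits


print(addressable_bytes(32) // GIB)  # 4: a 4 GiB ceiling
print(addressable_bytes(40) // GIB)  # 1024: 1 TiB
```

Four gigabytes is tiny for a data-center machine, which is why the jump to 40-bit (and later 64-bit) addressing mattered so much.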

Cloudflare Partners with Nvidia to Bring GPUs + TensorFlow to Edge Network

May as well tie in last edition’s featured company with this edition’s featured company…

To help anyone build AI-based applications Cloudflare is extending the Workers platform to include support for NVIDIA GPUs and TensorFlow. [...]

We’ve examined a wide variety of dedicated AI accelerator chips, as well as approaches that use clever encoding of the models to make them run efficiently on CPUs.

Ultimately, we decided that with our large footprint of servers in more than 200 cities worldwide and the strong support for NVIDIA GPUs in AI toolkits that the best solution was a deployment of NVIDIA GPUs to enable AI-based applications. Today we announced that we are partnering with NVIDIA to bring AI to our global edge network. [...]

TensorFlow has become one of the de facto standard libraries and toolsets for building and running AI models. Cloudflare’s edge AI will use TensorFlow, allowing developers to train and optimize models using familiar tools and tests before deploying them to our edge network.

In addition to building your own models using TensorFlow, we plan to offer pre-trained models for tasks such as image labeling/object recognition, text detection, and more. (Source)

What are the benefits of this? Glad you asked!

Previously machine learning models were deployed on expensive centralized servers or using cloud services that limited them to “regions” around the world. Together, Cloudflare and NVIDIA, will put machine learning within milliseconds of the global online population enabling high performance, low latency AI to be deployed by anyone. And, because the machine learning models themselves will remain in Cloudflare’s data centers, developers can deploy custom models without the risk of putting them on end user devices where they might risk being stolen.
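Why does distance matter so much for “within milliseconds”? Even before routing, queuing, or the inference itself, signals in optical fiber travel at only about two-thirds the speed of light, roughly 200 km per millisecond. A back-of-the-envelope Python sketch; the two distances are hypothetical examples, and the result is only a lower bound on round-trip time.

```python
FIBER_KM_PER_MS = 200.0  # approximation: light in optical fiber covers ~200 km per ms


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time from fiber propagation alone."""
    return 2 * distance_km / FIBER_KM_PER_MS


# Hypothetical distances from a user to where the model runs
print(min_round_trip_ms(50))    # 0.5: an edge location in the same metro area
print(min_round_trip_ms(5000))  # 50.0: a distant centralized cloud region
```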

The biggest benefit, though, may be what I mentioned in my previous editions about Cloudflare: Regulatory compliance, data sovereignty, etc. You can keep the ML models and the inference close to the users and make sure the data doesn’t leave the jurisdiction.

‘Are We Much Too Timid in the Way We Fight Covid-19?’

This column is a good example of the kind of dilemma I was writing about in edition #114, about not forgetting the value of preventing negatives:

John Gruber puts it well on the J&J halt:

This terrible decision is going to kill tens of thousands of Americans. Six blood clots after 7 million administered Johnson & Johnson vaccines, versus a disease that has a mortality rate of 18,000 per million cases in the U.S., and has killed over 1,700 of every million people.

One death after 7 million J&J vaccinations for these blood clots (which they don’t even know are attributable to the vaccine), versus over 50,000 dead per 7 million cases of COVID in Americans. That’s a ratio of 1 : 50,000. You can fairly argue those mortality numbers are skewed by the fact that COVID has already ripped through our nursing homes, killing a lot of our most vulnerable people, but still, the risk numbers aren’t even in the same ballpark. And mortality numbers don’t include the millions of Americans who suffered or are suffering from severe cases that require hospitalization.

This is criminal innumeracy.
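To make the per-million framing concrete, here is the arithmetic in Python using only the numbers in Gruber’s quote (the variable names are mine). It’s a simple rate comparison, not an epidemiological model: it doesn’t account for how many vaccinated people would otherwise have caught COVID. On those figures, 7 million U.S. cases imply about 126,000 deaths, so “over 50,000” is, if anything, a conservative way to put it.

```python
def per_million(events: float, population: float) -> float:
    """Rate of events per one million people (or doses, or cases)."""
    return events / population * 1_000_000


DOSES = 7_000_000                         # J&J doses administered (from the quote)
CLOTS = 6                                 # reported clotting cases (from the quote)
COVID_DEATHS_PER_MILLION_CASES = 18_000   # U.S. figure from the quote

clots_per_million_doses = per_million(CLOTS, DOSES)
deaths_per_7m_cases = COVID_DEATHS_PER_MILLION_CASES * DOSES / 1_000_000

print(round(clots_per_million_doses, 2))  # 0.86
print(int(deaths_per_7m_cases))           # 126000
```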

Zeynep Tufekci makes a good complementary point: what the effects of something should be and what they actually are aren’t always the same.

FDA says the pause is due to “abundance of caution.” I am very much for abundance of caution against tail risk, and a full investigation into rare events. I respect these are difficult decisions. But “caution” isn’t the term for dramatic, forward-leaning and irreversible acts.

I appreciate the people saying “we should feel more confident because they’re investigating”, which is true — it works on me! — but the word “should” is doing a lot of work there. Meanwhile, let’s check in on how this affects dynamics of human cognition, media and social media.

If a “small pause out of abundance of caution about a very rare potential issue” causes thousands of unnecessary deaths because of the delay, and likely makes millions of people more hesitant to take the vaccine, with all of this causing untold economic damage by prolonging the pandemic, did we balance the costs and benefits properly?

The Arts & History

Lisa in Six Feet Under? (*Spoilers*)

If you still haven’t seen this excellent 2001-2005 HBO series, please put it on your list.

To me it belongs with the heavy-hitters of that golden era, but somehow it now rarely gets mentioned alongside other shows like The Sopranos, The Wire, Deadwood…

Rome is another one I don’t hear that much about anymore.

I’m not necessarily saying it belongs on the very top of the list for the period — it’s been too long since I’ve seen it, I’d need a rewatch to know how I’d rank it, and to make sure nostalgia isn’t making me remember it as better than it was — but it definitely belongs on the list.

Anyway, enough context.

What I want to mention is how, even 10+ years after seeing this show, I still remember Lisa once every few years and wonder what happened to her (in the show, I mean, not Lili Taylor, who played her).

Those who have seen the show know what I mean.

I think it was a strong decision to leave it partly a mystery (some answers are suggested, but like in real-life, you don’t get a flashback showing you what happened and providing closure).

It’s a testament to the strong character writing that I still care about this fictional person all this time later.

I think if social media had existed at the time, that storyline would’ve made big waves online…

Great super-villain name.