471: Relative Valuation, Perplexity AI, RIP Artifact, Hertz Sells Teslas, Marc Andreessen, DARPA Plane, Uranium, LEDs, and Jake Gyllenhaal

"Opportunity cost is often the biggest cost, yet invisible."

You should understand something at a level or two deeper than the level at which you’re teaching it.

—CGP Grey

📬📩📩📩📩📩📩😡 I have to vent:

Once in a while, I buy a product and think: “I like this company.”

I figure, what the heck, I'll subscribe to their email list so they can email me once in a while and I won't miss the next cool thing they do.

However, instead, THEY EMAIL ME EVERY SINGLE DAY WITH POINTLESS VAPID BULLSHlT AND THEN I UNSUBSCRIBE!!1 🤬

I'm sure they do it because it leads to a measurable number of sales, but I wonder if the negative impact on customer goodwill is a blind spot that they are not capturing in their metrics...

The obvious solution: When subscribing there should be a choice, “email me at most daily | weekly | monthly | quarterly”.

They’d probably get lower churn and not turn off fans and potential customers who are sick of being spammed.

🧑🏼🏫🚌👦🏻👧🏻👦🏻👧🏻👦🏻👧🏻👦🏻👧🏻👦🏻👧🏻👦🏻🎂 Segregating kids by age in school is not on the radar for most people, but we may someday look back on it and go “what were we thinking?”

Over evolutionary times, children always grew up surrounded by older and younger peers, as well as by adults.

It’s easy to imagine how the younger ones learned from the older ones and modeled a lot of their behavior on them. And the older kids learned to teach and take care of others simply by being around younger kids.

Those who were more advanced could hang out with older kids and learn at their own pace, while those who were a bit slower could also learn at their own pace and spend more time with kids of the same level, regardless of their chronological age.

I’m sure it wasn’t perfect, and I’m not saying it would automatically fix every problem, but I think it’s how our brains naturally try to learn as we grow up. The mix of ages was one of the striking things in the video about the “unschool” school that I posted at the top of Edition #448.

However, in most schools today, we try our best to keep kids from interacting too much with anyone more than a couple of years older or younger.

The belief that a child's age should override almost every other aspect of their identity is overly simplistic and fails to appreciate the complex, individual variation in each child's growth and learning journey.

💚 🥃 🐇🕳️ If you feel like you’re getting value from this newsletter, it means the world to me that you become a supporter to help me to keep writing it.

If you think that you’re not making a difference, that’s incorrect. Only 2% of readers are supporters (so far — you can help change that), so each one of you makes a big difference.

You’ll get access to paid editions and the private Discord, and if you only get one good idea here per year, it’ll more than pay for itself!

🏦 💰 Liberty Capital 💳 💴

⚖️ "Overvalued compared to peers" 🤔

Relative valuation works a lot of the time, but like reasoning by analogy, it has limits and will break down in extreme and exceptional cases.

Lazy investors will often look no further than the multiples of similar companies before deciding that something is fairly, over, or under-valued.

The problem with this is that some companies *are* way better than their peers. What looks overvalued at first glance can turn out to be undervalued after digging deeper.

Or vice versa — some companies deserve to trade below their peers because they suck.

This is all very obvious, but all the important things in investing are simple and obvious. They’re just hard to consistently remember and apply.

This is your reminder to look deeper, and apply this to other areas of your life too.

🔍🤖 Perplexity AI 📱

Once every few years, I get that feeling about a new product.

I’m having it for Perplexity.

After just a few tries, its utility was obvious.

But then I started noticing all the niceties. It’s fast, the UX is well-designed and clean, and they don’t try to do too many things at once.

To me, the company sits at the intersection of Wikipedia, ChatGPT, and Google.

It’s not necessarily better than any of those at doing the single thing that it does, but the combination is powerful and creates a new tool that fills a need I didn’t know I had.

GPT-4 had already been eating many of my Google searches, and now Perplexity has been eating even more searches.

Part of me hopes that they stay independent and keep developing the product. I’m really curious where they can take it over time. But part of me kind of hopes they get acquired by Apple and become the seed from which Siri becomes useful and a standalone Apple Search product is launched.

As a user, I’d love to see more competition in the search space, and I wouldn’t mind a swing of the pendulum back in the direction of minimalism and clean UX (if you’re old, you may remember when Google was the clean and minimalist choice vs AltaVista and Yahoo).

How does Perplexity do what it does?

They combine open-source LLMs (Mistral’s 7-billion parameters model and LLAMA’s 70b model) with their own web crawling and fine-tuning.

They wrote about their models a couple months ago:

At a high level, this is how our online LLM works:

Leverage open sourced models: our PPLX models build on top of

mistral-7bandllama2-70bbase models.In-house search technology: our in-house search, indexing, and crawling infrastructure allows us to augment LLMs with the most relevant, up to date, and valuable information. Our search index is large, updated on a regular cadence, and uses sophisticated ranking algorithms to ensure high quality, non-SEOed sites are prioritized. Website excerpts, which we call “snippets”, are provided to our

pplx-onlinemodels to enable responses with the most up-to-date information.Fine-tuning: our PPLX models have been fine-tuned to effectively use snippets to inform their responses. Using our in-house data contractors, we carefully curate high quality, diverse, and large training sets in order to achieve high performance on various axes like helpfulness, factuality, and freshness. Our models are regularly fine-tuned to continually improve performance.

You can do regular queries, or you can enable their Copilot, which will use an LLM to better understand your question, split it into steps if necessary, ask for precisions, do a bunch of searches, and then pull it all together to (hopefully) create a better answer for your question.

I like that Perplexity cites sources — a search I did cited 21 different sources! — and allows you to retry the same query with a different model. You can also ask it to focus on certain sources that may better match the question (f.ex. WolframAlpha, Reddit, Academic papers, or even just the model itself without going out to the web).

So far, I’ve found the Copilot very useful. If I try the same query with and without it, I usually get better results with it, especially for complex ones. On the free tier, you get 4 Copilot credits every 4 hours.

You can try most of their models for yourself on the Perplexity Labs page (there’s a dropdown menu to switch between models). It’s a good way to see for yourself the trade-off between speed and quality of the small and large models.

The primary way to use Perplexity is with the mobile app or the website, but there’s also a browser extension that I find useful. It creates a small dropdown where you can ask your question and quickly get an answer, without too much context-switching.

So while I’m doing other things, I sometimes wonder about something, and rather than go to a different tab to Google or ask GPT-4, I’ll just quickly ask Perplexity.

I’ve also set the “action button” on my iPhone 15 Pro to open the Perplexity app (previously, I had it set to open ChatGPT), so whenever I have a question about anything I can quickly ask (their voice-to-text is solid — I’m not sure if they use OpenAI’s Whisper or something else).

All this is free.

If you pay for a Pro subscription ($190/year if you use my referral coupon below), you get to pick which model you want Perplexity to use (including the big ones, GPT-4, Claude 2.1, Google Gemini, and Perplexity’s own models), you can upload images and documents, and you get “unlimited” Copilot Queries (not fully unlimited, but I currently have 600/day — I think they may adjust it depending on GPU resources available..?).

The biggest downside of using the big models is that they’re slower than Perplexity’s own 7b model.

It doesn’t sound like their goal is to scale up into an ad-based search engine like Google, if their about page is to be believed:

Perplexity was founded on the belief that searching for information should be a straightforward, efficient experience, free from the influence of advertising-driven models. We exist because there’s a clear demand for a platform that cuts through the noise of information overload, delivering precise, user-focused answers in an era where time is a premium.That makes sense as counter-positioning since AI-enhanced search is less conducive to the auction-based search ads model.

If you want to sign up for the Pro tier, you can use my Perplexity AI referral coupon code to get $10 off (and I get $10 too — win-win).

If you think I wrote all this just to make $10 of whatever, know that I didn’t know about the referral link until after I had written the above. I had been using Perplexity on the free tier for a couple of weeks and decided to sign up for Pro to test that part of it to better be able to tell you about it. Then I saw there was a referral link…

🗣️🗣️🎙️ Interview: Brett Johnson, ex-fraudster and cyber-criminal, now a white hat 🔓💸

Constellation Software has a podcast! I enjoyed every episode so far, but this one stands out:

Brett Johnson, also known as "The Original Internet Godfather," is a former cybercriminal who was instrumental in developing many areas of online fraud. He was the leader of ShadowCrew, a precursor to today's darknet markets, and helped design, implement, and refine modern identity theft, account takeover fraud, card-not-present fraud, and IRS tax fraud, among other social engineering attacks, breaches, and hacking operationsThe part where he talks about his mother gets a little bone-chilling 👿

And he has some choice words for Frank Abagnale for making up most of his backstory (the one turned into the film ‘Catch Me If You Can’).

Even after he got arrested and became an informant to the Secret Service, he kept doing IRS scams. He was eventually placed on the ‘US Most Wanted List’, captured, sent to prison… escaped prison! Was recaptured, and after doing his time inside, turned his life around and became a security consultant.

Note: I wasn’t familiar with Brett before this podcast. I know he has a YouTube channel, but I have no idea what he does over there. I’m recommending this one interview, not endorsing him generally, because I just don’t know.

🪦 Artifact is shutting down (AI media startup by Instagram co-founders) 📰🗞️📲

I’ll admit that I didn’t see this one coming.

Maybe I should have since I had stopped using the app… I guess my experience turned out to have been typical.

Although I didn’t necessarily expect it to be super successful, I did expect them to keep trying and iterating for longer, and to maybe hit a rich vein in this fairly stagnant space.

Maybe I was still under the spell of the excitement conveyed by the co-founders in that interview with Ben Thompson. Of course, startup founders have to be cheerleaders and salespeople, so maybe that’s what this was. Or maybe they were still in the honeymoon phase back then and things changed subsequently…

But after one year after launch, Kevin Systrom writes:

We’ve made the decision to wind down operations of the Artifact app. We launched a year ago and since then we’ve been working tirelessly to build a great product. We have built something that a core group of users love, but we have concluded that the market opportunity isn’t big enough to warrant continued investment in this way. It’s easy for startups to ignore this reality, but often making the tough call earlier is better for everyone involved. The biggest opportunity cost is time working on newer, bigger and better things that have the ability to reach many millions of people. I am personally excited to continue building new things, though only time will tell what that might be. We live in an exciting time where artificial intelligence is changing just about everything we touch, and the opportunities for new ideas seem limitless.

Respect for not dragging things out and facing reality early 👍

Opportunity cost is often the biggest cost, yet invisible.

Will anything inject new blood and energy into the media landscape?

Why did Artifact fail?

Is it that the TAM was too small, as Systrom claims, or that the product couldn’t be made good because most of the good news content is behind paywalls (either at big legacy names or at tiny new Substacks)? Was it hard to get good signals to train the AI because clickbait is too powerful?

🔌🚘🔋 Hertz to sell about 1/3 of its EV fleet, around 20,000 vehicles, mostly Teslas 🤔

Hertz announced last week that it would be selling roughly a third of its fleet of electric vehicles, or roughly 20,000 cars that are predominately Teslas. [...]

It would then use some of those proceeds to buy internal combustion engine cars. The company would also be taking a $245 million incremental net depreciation expense as a result.

Management claims it’s an error of degrees. The thesis was correct, they say, but they went too far, too fast with it.

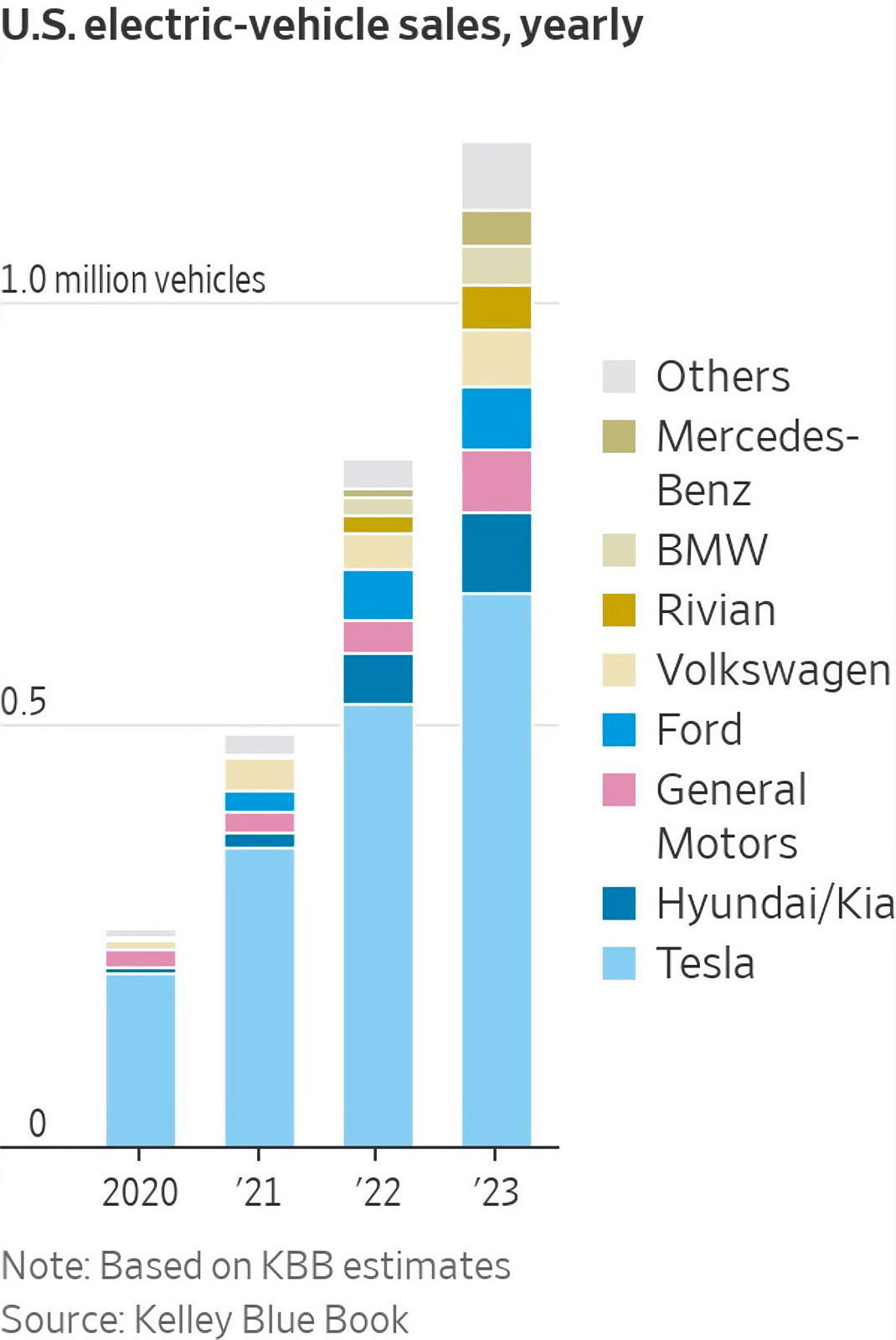

Speaking of electric cars, despite not meeting extremely high expectations from the 2021 bubble era, sales are increasing rapidly.

It’s still Tesla and the 7 dwarves, but it used to be Tesla and 🦗:

I lost the source — sorry to whoever shared it!

🗣️🗣️🎙️ Interview: Marc Andreessen talks to Rick Rubin

I enjoyed this one. It covers a lot of ground:

I don’t always agree with Marc, and sometimes I think he over-uses reasoning by analogy, but Rubin gets good stuff out of him because of his genuine curiosity and openness.

🧪🔬 Liberty Labs 🧬 🔭

🛩️ DARPA experimental plane has no moving control surfaces

In December 1903, the Wright brothers flew the world’s first fully controllable aircraft, which used wing warping to successfully achieve flight. Virtually every aircraft since then has used a system of movable, external control surfaces for flight control.

The X-65 breaks this century-old design paradigm for flight control by using jets of air from a pressurized source to shape the flow of air over the aircraft surface, with AFC effectors on several surfaces to control the plane’s roll, pitch, and yaw. Eliminating external moving parts is expected to reduce weight and complexity and to improve performance.

The X-65 will be an unmanned aerial vehicle of around 7,000lbs with a wingspan of 30 feet and reach speeds of up to Mach 0.7.

Its specifications, including weight, dimensions, and velocity, are comparable to those of a military trainer aircraft, so learnings will be directly applicable.

🛀💭💡 Electricity saved by LEDs and Fluorescents ⚡️

Here’s a random shower thought I had:

How many more power plants would we need if we had never invented fluorescent and LED lights, and had to light everything with incandescent bulbs? (let’s not worry about halogen bulbs, which are even more power-hungry)

I figured I may be able to get a ballpark figure using AI, so I asked GPT-4 Turbo to think it through step-by-step and show me its assumptions and calculations:

Step 1: Average Power Consumption Differences

Assumption: Incandescent bulbs use about 60 watts, while a comparable LED might use 8-12 watts, and a fluorescent bulb around 14-18 watts.

Chosen Average for LEDs/Fluorescents: 15 watts (a middle ground).

Step 2: Global Lighting Usage

Assumption: There are approximately 10 billion light bulbs in use worldwide.

This is a rough estimation and could vary significantly.

Assumption: Each bulb is used, on average, 3 hours a day.

This is a generalized average. Actual usage could vary based on region, season, and other factors.

10 billion seems a bit low to me, but let’s go with it.

Step 3: Increased Energy Demand Calculation

Current Total Power Consumption for LED/Fluorescent Bulbs

Calculated as: average power (LED/Fluorescent)×number of bulbs×average usage hours per dayaverage power (LED/Fluorescent)×number of bulbs×average usage hours per day

Hypothetical Total Power Consumption for Incandescent Bulbs

Calculated as: average power (Incandescent)×number of bulbs×average usage hours per dayaverage power (Incandescent)×number of bulbs×average usage hours per day

Difference in Power Consumption

The additional power needed is the difference between the hypothetical incandescent consumption and the current LED/Fluorescent consumption.

Step 4: Power Plant Capacity

Assumption: Average power plant capacity is 500 megawatts.

This is a generalized figure; actual capacities vary widely among power plants.

Based on these rough estimations and assumptions, the world would need approximately 112.5 additional power plants if LED and fluorescent lights had never been invented and everything was lit using incandescent bulbs. This calculation is highly simplified and should be taken as a ballpark figure, as real-world factors could significantly alter the actual number.

Then I asked it to estimate how many more power plants would be required for air conditioning because of all the extra heat generated by the much hotter incandescent bulbs. I’m running out of space so I won’t cut & paste it here, but that math is a lot more involved and detailed.

The resulting estimate is 42 extra 500mw power plants, for a combined total of 154 extra 500mw power plants.

77 gigawatts just for lights! And if there are 20 billion lightbulbs instead of 10, that’s 154 gigawatts!

🌊☢️ How much Uranium is in the Ocean? ☢️

Scientists estimate that the amount of uranium dissolved in seawater is 1,000 times larger than what is on land, and as uranium is removed from seawater, more uranium enters the water, leached from sea bottom rocks.

It’s like that trick glass that replenishes itself!

The Nuclear Energy Agency estimates that 4.5 billion tons of uranium are floating around in our oceans as dissolved uranyl ions.

So why don’t we extract uranium from seawater?

Chemical engineers have demonstrated various means of extracting uranium from seawater, but none have been commercialized because, so far, it’s less expensive to dig the metal out of the earth.

And there is a lot to be had in the dirt; an MIT study, The Future of Nuclear Power, found in 2003 that the worldwide supply was sufficient to fuel 1,000 reactors over the first half of this century.

Naturally occurring uranium is hardly the only reactor fuel available; reactors make plutonium, a very good fuel, and uranium can be produced in a reactor from thorium. In addition, many advanced reactor designs get more work out of a ton of uranium than current models do.

Of course, incentives matter:

Uranium mining in the United States has mostly withered away because cheaper supplies are available from Kazakhstan and other places [Canada, Australia]. But Energy Fuels, based in Lakewood, Colo., announced two weeks ago that it had started up three mines in Arizona and Utah. And it plans to start operations at two more, in Colorado and Wyoming, within a year. (Source)

And this is not even counting on the fact that at some point we’ll have fusion, and that advanced breeder reactors can take the spent fuel from other reactors and run on that (since 95%+ of the energy remains in Uranium even after it runs through a conventional reactor).

🎨 🎭 Liberty Studio 👩🎨 🎥

🎥 Spotlight on: Jake Gyllenhaal 🍿

I really enjoy his work as an actor, and this video provides a great overview of his career. So many highlights that made me think: “Oh yeah, I should watch that film again.”

The top two on my rewatch list as “Prisoners” and “Nocturnal Animals”, but they’re both very heavy and I need to be in the right mood…

Hmm 🤔 I had never heard of Perplexity before - thanks for sharing!

Definitely want to dig into perplexity more now