485: Jensenmania and Nvidia GTC 2024, Roper Technologies Deep Dive, Nuclear Ramp Up, Humanity's Archives, We Are Legion Review

"Time is devouring the past."

In the long run, prioritization beats efficiency.

–James Clear

⏳🕰️💭 Time is devouring the past. We’re losing *a lot* to it.

Anything outside of living memory is only accessible indirectly.

But even something that someone remembers is still mostly gone.

Memory is subjective, transformative, and fallible.

The past slips through our fingers.

Every year, fewer people remain who have direct experience of WWII.

The oldest humans were children during the Great Depression.

We don’t have anyone left who fought in WWI (the last survivor died in 2012).

Books, photos, films, stone tablets, and whatever else we have only cover a small fraction of the past, from a few perspectives and with varying degrees of reliability. And even that doesn’t always survive (see the Vesuvius Scrolls Challenge).

With each passing year, more personal diaries are lost, books go out of print and are forgotten, people with valuable stories die without having told them to anyone, etc.

It’s great that we’re still reading Plutarch, but how many amazing lives weren’t documented at all?

What was the most amazing thing that happened on Earth in 4076 B.C.E.? We’ll never know.

Blessed are the historians, documentarians, and archive-dot-org servers (and blessed are those who read and share the lessons from history, making it alive and useful).

I wish we were a bit more organized and took the task of remembering more seriously. Preserving large quantities of information used to be almost impossible, but digital technology has changed that.

There is no perfect system, and there is no objective historian, but having something to learn from is certainly better than nothing.

🗣️🗣️🗣️🗣️🗓️📚🎟️ Next month, on April 13th, I’ll be in NYC to help host an event about the Future of Publishing. It’s an inaugural festival of thought *and* action.

Traditional publishers, online platforms, internet-native writers, hybrid magazines, and new publishing houses will come together for the first time to rethink how ideas are shaped and spread online and offline, now and in the future.

It’s a co-production of O’Shaughnessy Ventures and Interintellect (the event will be live-streamed to members). I’m looking forward to meeting everyone who attends! (join us if you can)

Follow this link for all the details on who, what, when, and where. There’s an early bird special discount on the tickets until the end of this week (March 25).

😄👶👨🏻🍼Good news corner: friend-of-the-show Alex Morris welcomed to the world a brand new human named Jack. I’m very happy for him and his family! 💚 🥃

🏦 💰 Liberty Capital 💳 💴

🔥 😎 BeatleJensenmania & Nvidia GTC 2024 🤖🤖🤖🤖

As a long-time Nvidia watcher, this keynote triggered a small hipster twinge. It must be how it feels to like a band when they’re a little obscure and niche, and then suddenly see them play stadiums and hear everybody talk about them.

The meme on Twitter is of Jensen as the new Taylor Swift 🦢

Of course, it’s just as stupid to not like something because others like it as to like it because they do — either way, you’re outsourcing your opinion to the crowd.

I’m happy for Jensen and Nvidia. They did it the right way, by grinding for decades, creating good technology, iterating, maximizing optionality by increasing the surface area of potential markets that could take off, and generally making good strategic decisions and positioning themselves in ways that they can defend their castle and benefit from others’ success by selling them (expensive) picks & shovels.

Let’s have a look at what was just announced. I’ll do my best to not get lost in the details. Let’s analyze what this all means together:

Jensen: 2023 generative AI emerged and a new industry begins. Why a new industry? We are now producing software using computers to write software.

Producing software that never existed before. It took share from nothing. It's a brand new category.

Jensen goes on to compare AI to the Industrial Revolution when the first power plants were built. You run the plant and this valuable — but invisible — thing comes out: Electricity!

He’s analogizing the tokens generated by AI models + large scale compute to electrons.

As in past years, one of the central ideas behind Nvidia is that by simulating things (products, robots, even the whole planet — the idea of digital twins) we can make better decisions. But all this is extremely data and compute-intensive, which is where accelerated computing comes in — nothing else can handle it.

Transformer models allow us to do this with very very very very complex datasets because they apply a kind of lossy compression to the patterns and relationships in that data.

It’s a bit like the difference between an uncompressed image or video and the JPEG or H.265 MP4 version of it.

Most of the useful patterns are preserved so that someone looking at the compressed version gets most of the same information, but the file size is massively smaller. To get there, you need a lot of compute to figure out what is important and should be kept and what can be discarded.

Now carry on that simple analogy to much more complex relationships and patterns that are extracted from high-dimensional space (tens of thousands of dimensions and more) rather than something relatively simple like a 2D image or video.

Here are the headline specs for Blackwell, Nvidia’s new GPU generation:

208 billion transistors

Manufactured using a custom-built 4NP TSMC process

Two-reticle limit GPU dies connected by 10 TB/second chip-to-chip link into a single unified GPU

Second-Generation Transformer Engine, double the compute and model sizes with new 4-bit floating point AI inference capabilities.

Fifth-Generation NVLink: 1.8TB/s bidirectional throughput per GPU 🤯

The GB200 Grace Blackwell packages two B200s with a Grace ARM CPU for a combined 40 petaFLOPS and 3.6 TB/s of bandwidth. These can be stacked to create compute nodes with 72 Blackwells for 1.4 exaFLOPS.

And then you can further stack these like Lego blocks until you have whatever size cluster you need to train GPT-6 or whatever (Nvidia showed the rendering of a liquid-cooled data center with 32,000 B200 GPUs producing 645 exaFLOPS of compute).
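If you want to sanity-check how these numbers stack up, here is a quick back-of-the-envelope sketch. It assumes the 40 petaFLOPS quoted for a GB200 splits evenly between its two B200s and that performance scales perfectly linearly with GPU count. Both are my simplifications, not Nvidia's claims.

```python
# Back-of-the-envelope check of the Blackwell figures quoted above.
# Assumption: the 40 petaFLOPS GB200 figure splits evenly across its two B200s,
# and peak throughput scales linearly with the number of GPUs.

GB200_PFLOPS = 40            # quoted for one GB200 (two B200s plus a Grace CPU)
B200_PER_GB200 = 2
PFLOPS_PER_EXAFLOPS = 1_000

b200_pflops = GB200_PFLOPS / B200_PER_GB200


def cluster_exaflops(num_gpus: int) -> float:
    """Peak exaFLOPS for a cluster of B200s under perfect linear scaling."""
    return num_gpus * b200_pflops / PFLOPS_PER_EXAFLOPS


rack = cluster_exaflops(72)             # the 72-Blackwell compute node
datacenter = cluster_exaflops(32_000)   # the rendered 32,000-GPU data center

print(f"Per B200:    {b200_pflops:.0f} petaFLOPS")
print(f"72 GPUs:     {rack:.2f} exaFLOPS")
print(f"32,000 GPUs: {datacenter:.0f} exaFLOPS")
```

The 72-GPU result lines up with the quoted 1.4 exaFLOPS. The 32,000-GPU result comes out a little under the 645 exaFLOPS Nvidia showed, which suggests the per-GPU figure I derived is slightly rounded.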

Crazy stuff.

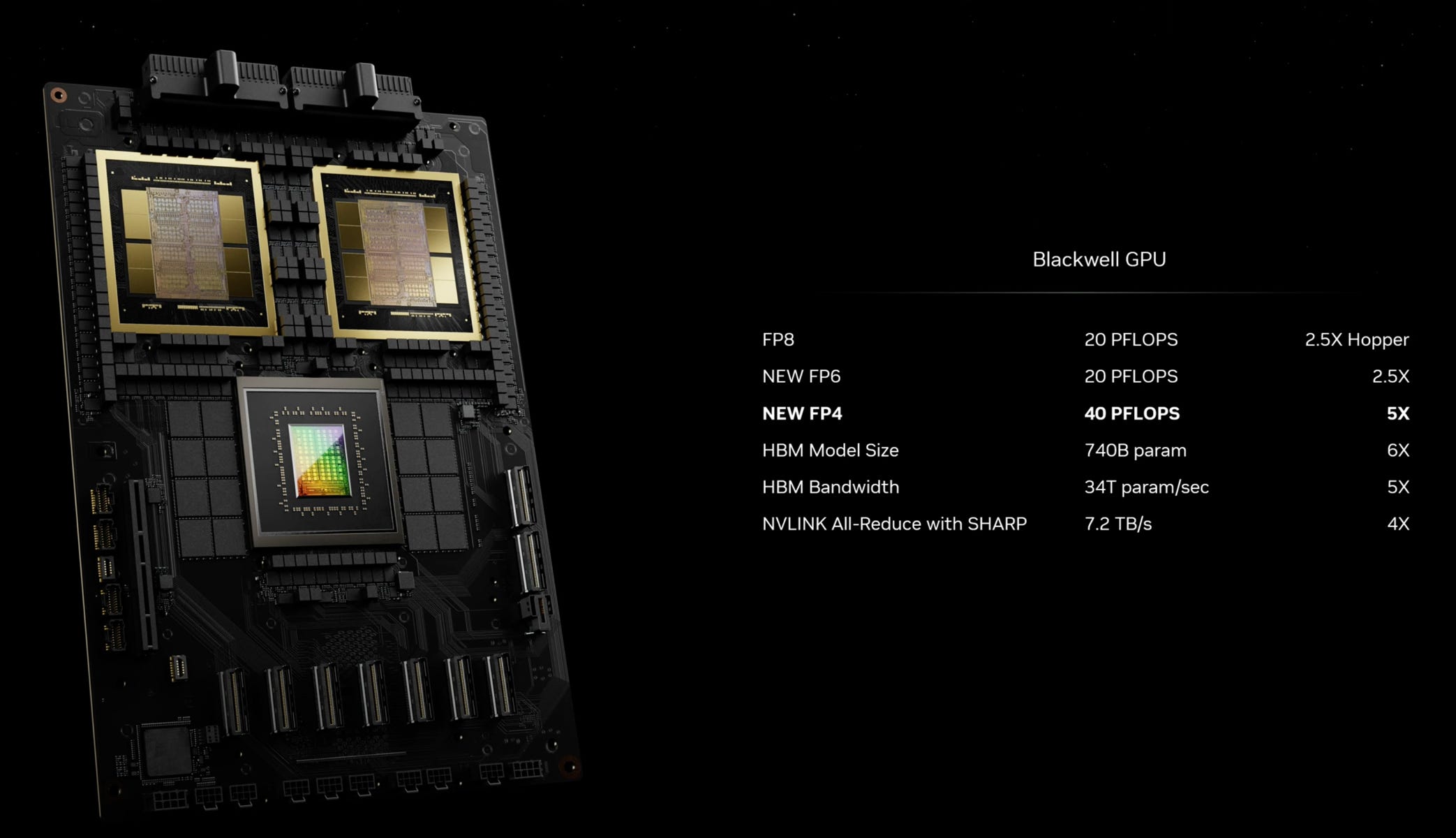

Here’s a slide comparing the performance of Blackwell to Hopper, the previous generation (and the chip that many are still trying to get their hands on in large enough quantities):

Not shown here is the energy-efficiency improvement.

Training the same LLM with B200s rather than H100/H200s would use about 25% of the energy, a significant difference. But of course, we’ll just train larger models and do more inference, so it’s not like energy use is about to go down in absolute terms (which is a story for another day).

Jensen: over the course of the last eight years, we've increased computation by 1,000 times.

That’s from the Pascal chip in 2016 doing 19 teraFLOPS at FP16 to Blackwell doing 20,000 teraFLOPS at FP4 (not apples to apples on the floating point precision; I guess they just wanted the biggest number they could get).
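For a sense of what that 1,000x means per year, here is a small sketch using only the two data points above. It takes the figures at face value and ignores the FP16 vs. FP4 mismatch, so treat the result as an upper bound on the like-for-like improvement.

```python
# Implied growth rate from the two data points quoted above.
# Caveat: the precisions differ (FP16 vs. FP4), so this is not like-for-like.

pascal_tflops = 19          # Pascal, FP16, 2016
blackwell_tflops = 20_000   # Blackwell, FP4, 2024
years = 8

total_gain = blackwell_tflops / pascal_tflops
annual_gain = total_gain ** (1 / years)   # geometric average per year

print(f"Total gain:   {total_gain:,.0f}x")
print(f"Average gain: {annual_gain:.2f}x per year")
```

That works out to a bit more than doubling every year, sustained for eight years.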

Another thing to note is how many partners Nvidia now has. Everybody (read in Gary Oldman’s voice) is on board. All the hyperscalers, AI labs, and pretty much every large company you can think of.

This reinforces the dominance of the Nvidia/CUDA ecosystem (software+hardware).

Jensen: The reason why we started with text and images is because we digitized those. But what else have we digitized? Well it turns out we digitized a lot of things, proteins and genes and brain waves.

Anything you can digitize so long as there's structure we can probably learn some patterns from it and if we can learn the patterns from it we can understand its meaning we might be able to generate it as well and so therefore the generative AI revolution is here.

There’s also cool stuff on chip design and accelerated computational lithography, robotics, new chips to help run AI on-device, and virtual environments in Omniverse to train robots, but I’ll go deeper into that another time.

The bottom line for now is that Nvidia seems to be executing very well. The velocity of progress across their primary vectors of attack is impressive and makes it hard for anyone to challenge them on the core of what they do.

It may be possible to peel off verticals by specializing or going to extremes, or if you have the scale (hyperscalers) to offload a lot of compute to your own cheaper custom chips. However, most of the mass is likely to stick with Nvidia for the foreseeable future.

🏭→💾 Roper Deep Dive 🩻🔍🦊

As part of his thematic series on compoundery (that’s the technical term) acquisitive conglomerates, my friend David Kim (🕵️♂️) wrote about Teledyne, then Ametek, and now he dove deep into Roper:

📄 [ROP] Roper Technologies (sub required)

Here are a few highlights, but the whole thing is worth reading:

[In 2001] Brian Jellison, a graduate of GE’s management program who had spent 26 years at Ingersoll-Rand, where he oversaw $4bn of revenue across a range of businesses, succeeded Derrick Key as CEO of Roper.

While Derrick initiated Roper’s transition from a heavy industrial to a capital light compounder, Brian Jellison accelerated the shift and steered the company to its end point in vertical software. For that reason, he is widely regarded as the architect of the Roper we know today.

Back when I first started following Roper 10+ years ago, it was harder to get good transcripts online as an individual investor, so I listened to quarterly calls.

It was always a pleasure to listen to Jellison.

He was more like a good university professor than a typical CEO, and he loved to go into the “why” of what he was doing. I still have the audio recordings of all those calls… Sometimes I miss him. Someday, I should listen just to hear his voice again (context: Jellison died a few months after leaving the role of CEO because of an undisclosed medical condition).

all [acquisitions under Jellison] were geared toward improving a newly introduced metric, “cash return on investment” (CROI), formally defined as cash earnings (net income + D&A – maintenance capex) divided by gross investment (net working capital + net PP&E + accumulated depreciation). Senior executives were instructed to make that number go up every year, which naturally led to a growing emphasis on asset light, technology-driven businesses with high incremental margins.

It’s not a metric I’ve seen used much elsewhere, but using it as a North Star during the transition from industrial to asset-light certainly led to interesting results.
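To make the CROI definition concrete, here is a minimal sketch that follows the formula as David describes it. The `Business` class and both sets of numbers are made up for illustration; they are not Roper's actual figures.

```python
from dataclasses import dataclass


@dataclass
class Business:
    """Inputs to CROI, in $ millions. All values used below are hypothetical."""
    net_income: float
    d_and_a: float
    maintenance_capex: float
    net_working_capital: float
    net_ppe: float
    accumulated_depreciation: float

    def croi(self) -> float:
        # Cash earnings = net income + D&A - maintenance capex
        cash_earnings = self.net_income + self.d_and_a - self.maintenance_capex
        # Gross investment = net working capital + net PP&E + accumulated depreciation
        gross_investment = (
            self.net_working_capital + self.net_ppe + self.accumulated_depreciation
        )
        return cash_earnings / gross_investment


# Two invented businesses with the same net income but very different asset bases.
industrial = Business(
    net_income=80, d_and_a=30, maintenance_capex=25,
    net_working_capital=150, net_ppe=200, accumulated_depreciation=150,
)
software = Business(
    net_income=80, d_and_a=10, maintenance_capex=2,
    net_working_capital=25, net_ppe=15, accumulated_depreciation=10,
)

print(f"Industrial CROI: {industrial.croi():.0%}")
print(f"Software CROI:   {software.croi():.0%}")
```

With identical net income, the asset-light business scores roughly ten times higher, which is why pushing this one number up every year pulls a company toward software.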

Not that Roper was ever the outlier that Constellation Software was, but it still did very well while taking relatively low risk. The stock compounded at an 18.65% CAGR over Jellison’s 17.6-year tenure:
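To put that rate in perspective, here is a quick compounding sketch. It assumes a constant 18.65% per year for the full 17.6 years, which smooths over the actual path the stock took.

```python
import math

# Figures quoted above; a constant annual rate is a simplifying assumption.
cagr = 0.1865
years = 17.6

multiple = (1 + cagr) ** years                     # growth of $1 over the tenure
doubling_time = math.log(2) / math.log(1 + cagr)   # years needed to double

print(f"$1 grows to about ${multiple:.1f}")
print(f"Money doubles roughly every {doubling_time:.1f} years")
```

So every dollar invested at the start of Jellison's tenure became roughly twenty by the end.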

But most importantly, unlike GE after Jack Welch, Roper kept doing well after Jellison’s departure, and we didn’t discover all kinds of hidden problems or cans that couldn’t be kicked any further down the road.

Most of their acquisitions seem to have been fairly successful, but here’s one that didn’t work (learning from exceptions!):

Sunquest, a developer of diagnostic and lab software founded in 1979, was passed around several times. Five years after going public, it was acquired in 2001 by Misys, who sold it to Vista Equity in 2007. By the time Roper acquired it in 2012, its software was used by 1/3 of US hospitals (1/2 of the largest) to process specimens, verify lab results, manage blood banks, and streamline lab workflows.

With 60% margins and revenue locked under multi-year contracts and growing ~10%, Sunquest seemed promising at the time. But eventually, they began losing share to Electronic Health Record (EHR) vendors like Epic and Cerner, who bundled lab information software into their broader platforms. Sunquest experienced mid-single digit revenue declines for years leading up to 2019 and was eventually merged into Clinisys, a leading European lab information software developer (with 85% share in the UK) that Roper acquired in 2015.

The rare misstep taught Roper to avoid niche software markets where a vertical solution predates the broader enterprise layer, as the latter may eventually come to integrate the former. Still, I wonder about the applicability of this lesson as EHRs and lab information systems both emerged in the 1970s and the competitive concerns that management cites didn’t manifest until after 2015.

Here’s how they divested most of their industrial businesses:

As Roper transformed through massive software acquisitions, they ablated legacy, capital intensive businesses from the main body. They started small, divesting Abel Pumps, a $23mn purchase from 2000, for $106mn in 2015. Four years later, they sold their imaging businesses to Teledyne for $225mn and Gatan (electron microscopes), which they had acquired for $50mn in 1996, to AMETEK for $925mn (19x EBITDA). Two years after that, they closed the sale of CIVCO Radiotherapy (diagnostic ultrasound products) to Blue Wolf Capital for $120mn.

In 2022, Roper finally ripped off the band-aid, tearing off huge chunks of themselves through a series of transactions. In January, Zetec, a materials testing equipment maker acquired for $57mn in 2002, was sold for $350mn. In March, TransCore, acquired for $606mn in 2004, was sold to Singapore Technologies Engineering for $2.7bn (without DAT). Finally, in November, Roper sold a 51% stake in its industrial businesses – AMOT, Roper Pump, Cornell Pump, Dynisco, Struers, among others – to Clayton, Dubilier & Rice for $2.6bn (realizing $2bn of proceeds after-tax), retaining 49% for itself. In each divestiture, Roper realized significant gains.

Those divestitures represent around 40% of revenue for the entire company, so it’s truly a Ship of Theseus story.
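For the deals where David gives both a purchase price and a sale price, here is a rough sketch of the multiples. It compares headline prices only: it ignores the cash these businesses generated while Roper owned them and any capital added along the way, so it is not a true return calculation.

```python
# (name, purchase price, sale price) in $ millions, from the quoted passage.
deals = [
    ("Abel Pumps", 23, 106),
    ("Gatan", 50, 925),
    ("Zetec", 57, 350),
    ("TransCore", 606, 2_700),  # sold without DAT, so not a like-for-like comparison
]

# Sale price as a multiple of the original purchase price.
multiples = {name: sale / cost for name, cost, sale in deals}

for name, multiple in sorted(multiples.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: {multiple:.1f}x the purchase price")
```

Gatan stands out by a wide margin, while the other three land in a fairly tight range.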

What remains? How do they organize and manage all those business units?

Application Software (52% of total revenue) and Network Software (23%) [...] both software segments share a common “look”: mid/high-90s customer retention, 105%-110% net revenue retention, 35%-40% EBITDA margins (including stock comp!), and a Goldilocks ~6%-9% organic growth rate that is fast enough to comfortably outpace cost inflation but not so fast that it attracts too much competition. [...]

28 business units means 28 independent P&Ls, each overseen by a business unit manager who has full discretion over every strategic and operational decision and who derives more than 60% of their total compensation from cash bonuses and equity grants pegged to sustaining EBITDA growth over a 3-year period. [...]

Managers are taught to think about their business in economic, rather than GAAP, terms… properly allocating costs, identifying the fixed and variable components, calculating breakeven points and incremental margins in every product line.

Is there a moat?

Roper’s business units compete on the basis of customer intimacy – fostering relationships with clients, understanding their idiosyncratic needs, developing modules to address them, and providing access in the most convenient way possible – not whiz bang IP.

What they sell tends to be mission-critical yet a relatively small % of the customer’s expenses:

A firm that has standardized on Aderant to track billable hours, manage caseloads, and otherwise run their day-to-day operations is not going to switch to a competing system that is 10% more performant (whatever that even means) the way a semiconductor fab would switch to an instrument that is 10% better at detecting wafer defects. With users having habituated themselves to Aderant’s system and stored so much data inside it, the inconvenience and risk of adopting a new, competing solution is too high relative to the upside. It is little wonder that Roper’s software business units retain mid/high-90% of customers and are able to consistently push price.

Roper’s largest TAM rounds to about $4bn. A new venture-funded entrant would have an exceedingly tough time pulling existing relationships away from incumbents who have already staked significant claims, leaving an unoccupied white space that is too small to pursue.

There’s plenty more, including the pipeline where they source their acquisitions and how they make the math work, management incentives, and what David thinks the prospective IRRs may be, but I’ll leave it here and point you to David’s write-up if you want more details.

🧪🔬 Liberty Labs 🧬 🔭

⚛️ Nuclear Power Ramp Up 🚀

Look at that!

Back in the 1960s and 1970s, the world was much poorer than it is today, and it spent most of the 1970s dealing with stagflation.

The inflation-adjusted GDP per capita in 1960 in the US was $19k compared to $65.5k today. In France, it was significantly lower (a bit more than half). We used slide rules and paper for complex construction projects. On-site, things were mostly coordinated with clipboards and walking around.

Despite this, the world went from no nuclear reactors to 400+ in around thirty years.

The only thing stopping us from doing this again, except bigger and better, is the willingness to do it. The engineering hasn’t changed and the physics haven’t changed — but we’ve got 60+ years of iteration and improvements to designs, materials, sensors, hardware, and software — the rest is just politics and words on paper that can be changed.

How many of our problems could be ameliorated with an abundance of clean and reliable energy?

How many long-term well-paid local jobs could be created from all this manufacturing of parts, construction and multi-decade operation of plants? How about all the second-order benefits of having gigawatts and gigawatts of dependable 24/7 clean power with predictable prices (i.e., not fluctuating wildly with natural gas prices or the weather)?

🎨 🎭 Liberty Studio 👩🎨 🎥

📕 ‘We Are Legion (We Are Bob)’ by Dennis E. Taylor 📖

🚨 I keep spoilers to a minimum and only mention the kind of stuff you may read on the back cover of the book, like the premise, etc. 🚨

I recently realized that my nonfiction-to-fiction ratio was getting a bit out of whack and I wasn't reading enough fiction. In my teens, I started out with 100% fiction, but over time, the line went down and the nonfiction line went up, until they crossed on the graph, and now I’m close to 100% nonfiction.

To remedy this, I ordered ‘This Is How You Lose The Time War’ by Amal El-Mohtar and Max Gladstone, as well as ‘Tomorrow and Tomorrow and Tomorrow’ by Gabrielle Zevin, having heard good things about both.

But before I got to them, I decided to listen to the audiobooks of ‘We Are Legion (We Are Bob)’ and ‘For We Are Many’ by Dennis E. Taylor, which I have had for over a year. I meant to read them after my last fiction binge (‘Project Hail Mary’ by Andy Weir and ‘Dark Matter’ by Blake Crouch) but got side-tracked…

One big bonus point for the audiobooks: They are narrated by Ray Porter, who I just *love*. He also did ‘Project Hail Mary’ and I’ve been recommending the audio version of it to people because his narration makes it about 15-20% better than the text version.

The premise of the Bobiverse books is ambitious!

A software engineer is cryopreserved after his accidental death and gets reanimated as a software simulation a century later. His consciousness is used to oversee a Von Neumann probe sent on a mission to explore and colonize the galaxy, creating copies of itself en route, interacting with his sentient descendants/clones…

Of course, hijinks ensue.

It’s not the hardest of hard sci-fi, but I like that there’s still enough cool speculative engineering and tech to warm my geeky heart. If you enjoyed ‘Project Hail Mary’, I would be very surprised if this wasn’t a lot of fun for you. The humor is also kind of similar.

Book 2 is a continuation of the storylines of Book 1, with some new ones thrown in. It could’ve been published as one larger book and not seemed out of place.

There’s an annoying detail about the audiobook for book 2: There are a lot of small segments that were re-recorded on a mic that sounds different, and the transitions can be a bit jarring.

Overall, I give both books high marks as fun sci-fi. They’re not quite page-turners that make you hold your breath as you wonder what will happen next. They’re a lower-energy ride, but still very enjoyable and very accessible if you can handle something more plot and tech-based than character and emotion-based (there’s some of that, but it’s not the primary strength). 👍👍
