488: The Evolution of Software Bloat, OpenAI, Microsoft's $100bn AI Supercomputer, Cookware, and Vinyl

"it becomes a different book each time"

Almost no one is ever insulted into agreement.

–Arthur Brooks

🍳🥘👨🏻‍🍳🐇🕳️ As often happens, a simple task turned into a whole research project.

This specific rabbit hole began when one of our old sauté pans died…

It was a generic non-stick pseudo-granite thing I bought on sale at Canadian Tire (once in a while they have a ‘70% off’ sale on pots and pans).

However, as I’ve gotten deeper into home cooking and seen the *massive* difference made by the cast iron and carbon steel skillets I got in recent years, I figured I shouldn’t just go with any random pan. If my wife and I are going to use these tools multiple times a week for years, possibly decades, we may as well invest a bit more upfront and reap the benefits for a long time.

I ended up learning much more than I expected and ordered four different items:

This YouTube channel, which primarily reviews cookware, has been a big help. They review many models, compare brands, lay out the pros and cons of different types of cookware, suggest budget alternatives to the big names, etc. Very useful.

This video on how to avoid food sticking in stainless pans was also quite informative.

If I had been in the US, I probably would’ve made different choices because more brands are available there and prices are lower, but I got a really good deal on All-Clad (one of the best-known brands, along with Made In).

Misen seems to offer very good value and is probably what I’d recommend to anyone wanting to give higher-quality cookware a try without breaking the bank.

The Lodge cost just a third to a quarter of the price of a similar Le Creuset or Staub. From what I can tell, it’s very well made (it even has a self-basting lid!). I made a beef stew in it this weekend and it was *chef’s kiss*.

📖📚👀🤔 Via Dylan O’Sullivan (🇮🇪🇪🇸):

A book read by a thousand different people is a thousand different books.

–Andrei Tarkovsky, 1986

True, but it goes even further: when someone reads the same book at different times, it becomes a different book each time because the reader has changed.

Speaking of books! A quick reminder that if you’re anywhere near NYC on April 13th, you should come to the Future of Publishing Event organized by Interintellect and OSV.

I’ll be there along with all these interesting people.

You can buy your ticket here and get 20% off with the coupon “FOPE20”.

💚 🥃 🚢⚓️ If you’re getting value from this newsletter, it would mean the world to me if you became a supporter to help me keep writing it.

If you think that you’re not making a difference, that’s incorrect. Only 2% of readers are supporters (so far; you can help change that), so each one makes a big difference.

You’ll get access to paid editions and the private Discord. If you only get one good idea per year, it’ll more than pay for itself!

A Word from our Sponsor: 💰 Brinker Advisor 💰

Are you ready to take control of your investments and secure your financial future? Our premium subscription is designed to empower you with the tools and insights you need to make informed decisions and maximize your returns.

🔑🔓 When you become a paid subscriber, you'll gain exclusive access to:

Marketimer Model Portfolios I, II, and III, Income Portfolio, Active/Passive Portfolio 📊📈🏦

BFIA Model Portfolios Aggressive, Moderate, Conservative, Tax-Exempt, Exchange-Traded Fund Portfolio 🧭

Recommended List of No-Load Mutual Funds (updated daily) 📑🕵️‍♂️

Full Access to All Archived Posts 🗄️📜

All this along with our favorite investment ideas and our views on the economy, monetary policy, and related topics.

⭐️ Sign up here and get 20% off your first year with the coupon code “Liberty20” (offer good through June 30th, 2024). ⭐️

🏦 💰 Liberty Capital 💳 💴

🤖💻 The Paradox of Progress: How Faster Hardware Leads to Bloated Software 🏎️🐢 (Is AI the Next Step?)

There’s a phenomenon that has been going on forever in computing:

As hardware gets faster, software becomes less efficient and more bloated.

We can only hope that, on average, hardware gets faster more quickly than software gets slower…

It’s not entirely a bad thing — trade-offs are made to make software easier to write, easier to deploy and maintain, with better functionality for users, better graphics and sound, etc. However, the result of all this is that even the simplest thing, like a plain text editor, may now require more CPU cycles, RAM, and disk storage than a whole operating system from a few decades ago.

In many cases, just the app icon file is bigger than entire applications and operating systems of yore.

From Floppy Disks to Data Centers: The Exponential Growth of Computing Resources 💾

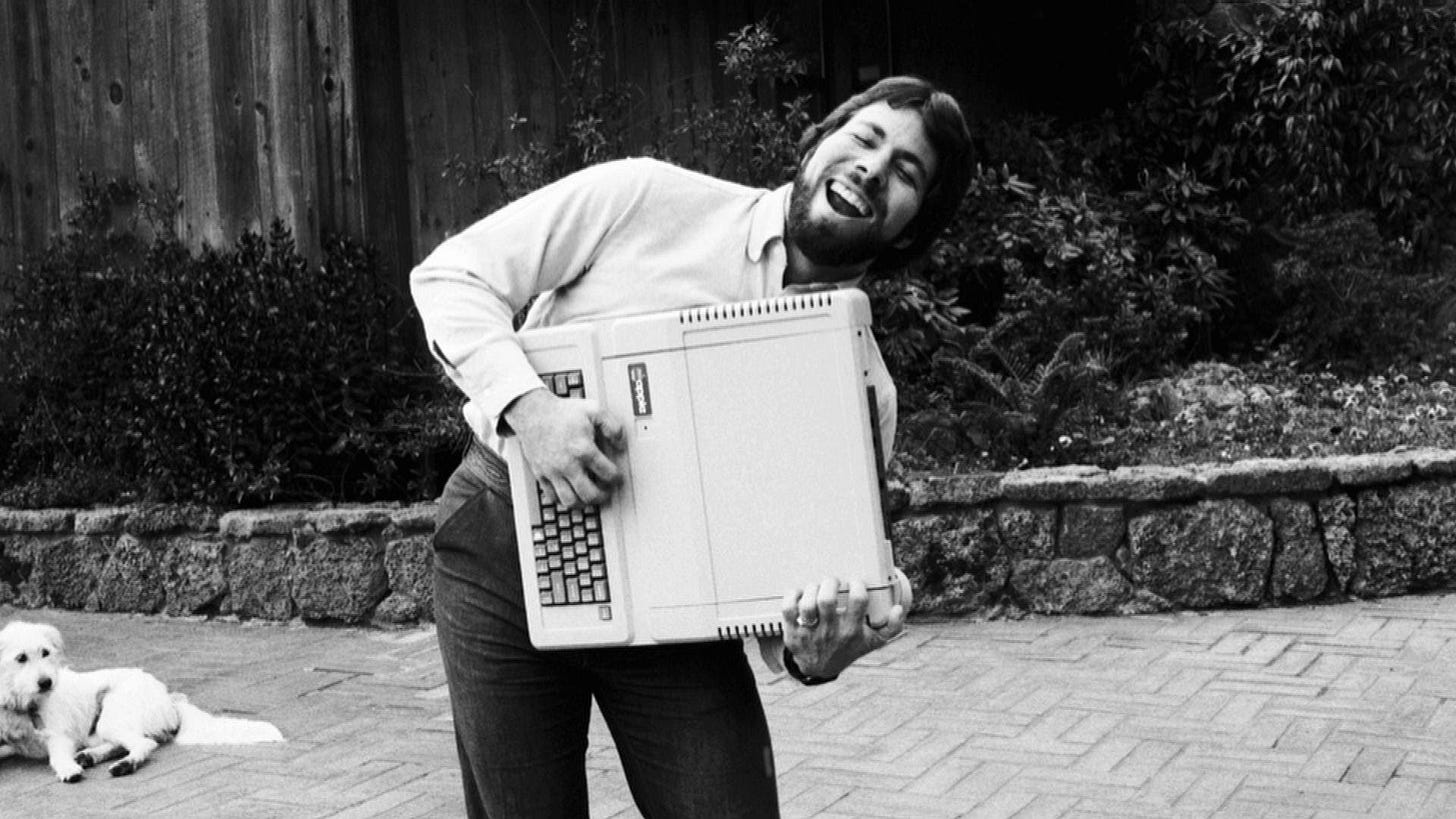

The Apple II’s CPU had a clock speed of 1.023 MHz, and the base model shipped with 4 KB of RAM.

To be able to do anything interesting with it, Steve Wozniak needed to be both a hardware *and* software genius. He knew everything about every single component of the machine (because he built it) and every 1 and 0 of the software (because he wrote it, sometimes by hand on paper). He optimized everything to the Nth degree.

To allow Doom, one of the first fast-paced 3D first-person shooters with texture mapping and dynamic lighting, to run on the Intel 386 I had as a kid (25 MHz, 4 MB of RAM), John Carmack had to be a genius. He wrote the game mostly in C but hand-tuned the performance-critical routines in assembly (a thin layer over machine code) to squeeze out every last bit of performance, and he had to devise a whole bag of clever tricks to stay within the bounds of what a computer could do at the time. The entire game fit on four 1.44 MB floppy disks.

(Trivia: I still play Doom occasionally and upload some of my games to YouTube. The code has been open-sourced and the community has been improving it and creating new maps for 30 years. My favorite is a mod that makes weapons more realistic and enemies more deadly.)

The Hidden Cost of Convenience: The Resource-Intensive Future of Software, AI Edition 🔌⚡️🪫

Today, Slack, an app that is essentially a glorified IRC chat client, takes around half a gigabyte of RAM and over 300 megabytes of disk space. It is built on Electron, a cross-platform framework that bundles web technologies, including the Chromium browser engine and Node.js.
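For a rough sense of scale, here is Slack’s memory footprint divided by the RAM of the machines mentioned above. This is a back-of-the-envelope sketch that assumes “half a gigabyte” means 512 MiB and treats the Apple II’s 4 KB and the 386’s 4 MB as binary units (KiB, MiB):

```latex
\frac{512\,\text{MiB}}{4\,\text{KiB}} = \frac{2^{29}\,\text{bytes}}{2^{12}\,\text{bytes}} = 2^{17} = 131{,}072
\qquad
\frac{512\,\text{MiB}}{4\,\text{MiB}} = \frac{2^{29}\,\text{bytes}}{2^{22}\,\text{bytes}} = 2^{7} = 128
```

In other words, one chat client holds roughly 131,000 base-model Apple IIs’ worth of memory, or 128 of the 386s that Doom ran on.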

Programmers creating an application like that use tools that abstract away the hardware to a level coders could only dream of 30 years ago. They build with high-level languages and all kinds of third-party APIs and frameworks, many of them open source. Rather than reinventing the wheel, they can rely on these building blocks for much of the work.

This has huge benefits.

Coders no longer need to be geniuses to be able to do cool things (though of course it always helps to be a genius, especially if you want to be at the cutting edge).

App stores with millions of apps would have been unthinkable back when you needed to write everything in C and assembly to get acceptable performance, or when you had to create almost everything yourself rather than build on top of existing building blocks (like the 3D engines from Epic and Unity for games).

Thinking about all this, I realized that the next level of this is probably integrating AI into software in ways that make it easier to develop, but more bloated and inefficient when it comes to resources used.

I’m not talking about using Copilot to assist with coding and debugging. That’s probably making coding more efficient.

But once it becomes common for software to embed generative AI models and make calls to generative AI APIs, we may see a real explosion in the resources needed to run things.

What if you have software that occasionally exhibits strange behavior? Rather than debugging it fully, maybe you just get it to “good enough” and call on an LLM to look at the output whenever anything weird happens so it can fix it on the fly. Or maybe instead of hardcoding a bunch of rules and filters for some input field, you have an LLM fine-tuned on those rules check the input to make sure it fits the criteria. Maybe you have genAI create visual assets as needed rather than pre-rendering them. Why should your messaging app be limited to a certain number of emojis and stickers? Let’s generate a new set based on conversation context for every thread! Maybe that photo app should suggest ways to automatically edit your photos using a diffusion model? Etc.
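To make the input-field example concrete, here is a minimal sketch contrasting the two approaches. The username rule is made up for illustration, and `ask_model` is a hypothetical stand-in for whatever LLM API an app might call, not a real library function. The point is the cost profile: the regex runs locally in microseconds, while the model-based check turns every keystroke-level validation into an inference request.

```python
import re
from typing import Callable

# Hardcoded approach: an illustrative (hypothetical) username rule of
# 3-20 lowercase letters, digits, or underscores. Runs locally, no network.
USERNAME_RULE = re.compile(r"[a-z0-9_]{3,20}")


def validate_with_rules(text: str) -> bool:
    """Cheap, deterministic check against the hardcoded rule."""
    return USERNAME_RULE.fullmatch(text) is not None


def validate_with_model(text: str, ask_model: Callable[[str], str]) -> bool:
    """Hypothetical 'let a model decide' check.

    `ask_model` is an assumed callable that sends a prompt to some LLM and
    returns its text reply; each call is a full inference request.
    """
    prompt = (
        "Answer YES or NO: does this username follow our naming rules? "
        f"{text!r}"
    )
    return ask_model(prompt).strip().upper().startswith("YES")


if __name__ == "__main__":
    print(validate_with_rules("liberty_42"))  # True
    print(validate_with_rules("x"))           # False (too short)

    # Fake model for demonstration only: approves everything, counts calls.
    calls = []

    def fake_model(prompt: str) -> str:
        calls.append(prompt)
        return "YES"

    print(validate_with_model("x", fake_model), len(calls))  # True 1
```

The model-based version can handle fuzzy rules a regex can’t express, but note that the fake model happily approves `x`: nothing forces a model’s answer to agree with the rule it was meant to enforce.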

I can’t predict all the ways in which generative AI will be integrated in various types of random software, but I can imagine that engineers will figure out all kinds of clever ways to use it to make their lives easier and expand the functionality of their apps.

This will be great in many ways but it will also further the trend of using more and more computing resources for even simple tasks.

The Power Hungry Future: How AI-Driven Software Will Strain Our Energy Grid 🏭⚡️🤖🤖🤖🤖🤖🤖🤖🤖🤖🤖

The end result will be that soon, we will have a basic plain text editor that can’t have full functionality without a data center full of GPUs somewhere in the cloud to support it, aka AI-powered Clippy 2.0 👀🖇️

It’s mind-bending to imagine a world in which every time someone writes a tweet or posts on Facebook, trillions of transistors around the world light up specifically because of those few sentences of text or that photo. Platforms will scan it to make sure it follows their content guidelines, scammers and spammers will scan it to craft custom phishing and spam just for it, people in your network may have apps that run some AI model against it to suggest potential responses and memes or whatever, advertisers will have their models scan it to better target ads to you, multiple gov’t spy agencies around the world will scan it with their SIGINT programs, etc.

All from a few keystrokes on a keyboard.

Now multiply that by billions of people online… 🤯

We’d better build a bunch more nuclear power plants pronto, we’re going to need a lot of juice for data centers!

🔓 OpenAI Makes ChatGPT Open to Anyone 🤖

Speaking of AI usage ramping up… OpenAI has announced that ChatGPT is now available to anyone without the need to have an account.

It’s not exactly the same as the logged-in experience:

We’ve also introduced additional content safeguards for this experience, such as blocking prompts and generations in a wider range of categories.

They mention that they “may use what you provide to ChatGPT to improve our models for everyone,” though there is an option to turn that off.

It’s an interesting move. I can imagine two main reasons for doing this:

The data. They learn from users, both from what they write and the thumbs up/thumbs down feedback, and that can feed back into the RLHF layer and training of new versions of the model.

Cutting oxygen to rivals. By further reducing friction (never underestimate friction!) to using a pretty good model (GPT-3.5 isn’t cutting edge, but it does a good job for many use cases), they may reduce the number of users that other models get, and make it table stakes to have an entirely open way to access an LLM.

The big players already offer free access to their models, so it doesn’t change much for them, but smaller competitors trying to come in from below are already struggling to find enough compute for training, R&D, and inference, so this new table-stakes requirement makes it incrementally harder for them to keep up.

It does seem to have both offensive and defensive benefits for OpenAI.

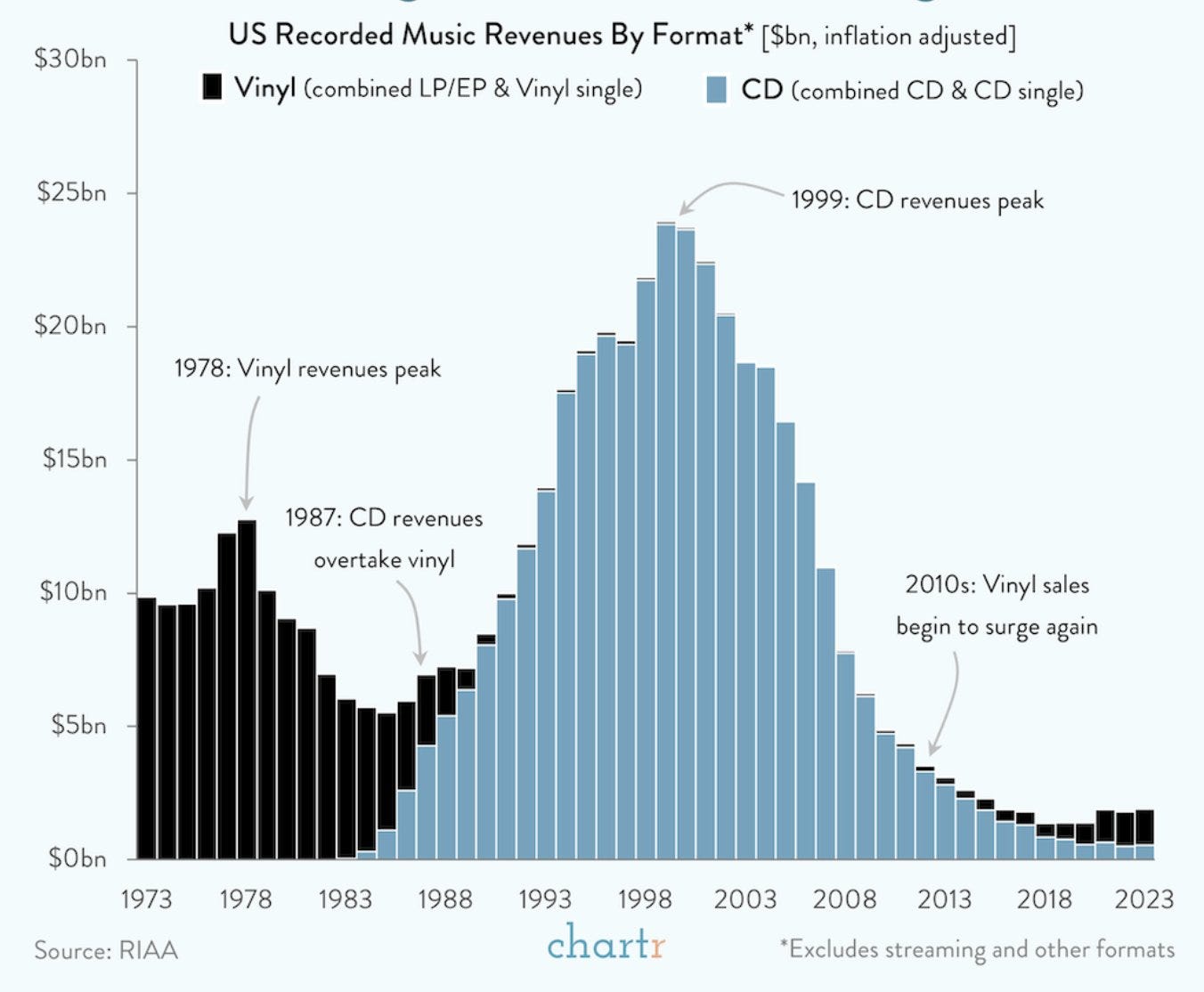

Vinyl is outselling CDs! 💿📉

I don't think many could've predicted that 30 years ago. I like Will Martin’s take on this.

I can see the appeal of vinyl. The great big artwork, the tea ceremony, watching it spin. Listening to one album at a time rather than bouncing around some algorithm. It has great physicality — you can look at your music, it’s an artifact.

I’m almost starting to convince myself to get a turntable 🤔 (but not quite)

Via Chartr

🧪🔬 Liberty Labs 🧬 🔭

🎓 Visual Introduction to Transformer AI Models 📺🤖

This is an AI-heavy Edition, so we may as well brush up on our understanding of these marvels of human ingenuity!

💰💰💰💰 Microsoft and OpenAI working on $100 Billion Stargate AI Supercomputer 💰💰💰💰

If the primary way to make AI models better is to scale them up in size, and if they grow by an order of magnitude every couple of years, you fairly rapidly run into pretty insane numbers for compute and energy.

The early models cost a few thousand dollars to train, then a few million, then hundreds of millions… Now we’re entering the era of billions, soon tens of billions, and, on the horizon, hundreds of billions.
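To see how quickly “an order of magnitude every couple of years” compounds, here is a minimal sketch. The $1 million starting cost and the clean 10x-every-two-years rate are illustrative assumptions, not actual figures for any real model.

```python
def projected_cost(start_cost: float, years: float,
                   growth_per_period: float = 10.0,
                   years_per_period: float = 2.0) -> float:
    """Training cost after `years`, assuming it multiplies by
    `growth_per_period` every `years_per_period` years.
    (Illustrative assumptions only, not real model budgets.)"""
    return start_cost * growth_per_period ** (years / years_per_period)


if __name__ == "__main__":
    # Assumed starting point: a $1M training run.
    for years in range(0, 11, 2):
        print(f"year {years:2d}: ${projected_cost(1e6, years):,.0f}")
```

Under these assumptions, a $1 million run becomes a $100 billion run in just ten years (five 10x steps), which is the same order of magnitude as the project discussed below.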

Microsoft and OpenAI seem to be planning for exactly that:

Executives at Microsoft and OpenAI have been drawing up plans for a data center project that would contain a supercomputer with millions of specialized server chips to power OpenAI’s artificial intelligence, according to three people who have been involved in the private conversations about the proposal. The project could cost as much as $100 billion, according to a person who spoke to OpenAI CEO Sam Altman about it and a person who has viewed some of Microsoft’s initial cost estimates.

The project hasn’t been green-lit yet, according to the sources, and plans could still change. But it gives an idea of the order of magnitude of what they’re thinking of.

The executives have discussed launching Stargate as soon as 2028 and expanding it through 2030, possibly needing as much as 5 gigawatts of power by the end, the people involved in the discussions said. (Source)

5 gigawatts for one project!
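For a rough sense of scale, here is a back-of-the-envelope upper bound, assuming the full 5 GW were drawn around the clock for a 365-day year (actual utilization would likely be lower):

```latex
5\ \text{GW} \times 8{,}760\ \text{h/yr} = 43{,}800\ \text{GWh/yr} \approx 43.8\ \text{TWh/yr}
```

That is roughly the output of five large 1-gigawatt nuclear reactors running at full capacity.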

Multiply that by Big Tech companies and nation-states who will want their own…

In parallel, Microsoft is working on nearer-term AI data centers that are also gigantic by today’s standards; one of them, in Wisconsin, could cost around $10bn once completed.

🎨 🎭 Liberty Studio 👩‍🎨 🎥

🎥 Video Essay on What Makes Films Look Authentic 🎞️

At the risk of beating a dead horse 🐴, this video essay shows the importance of not merely imitating past methods but understanding what they did right.

Many of the positive examples given are relatively recent films. They don’t look old, they just haven’t lost what generations of filmmakers have learned before (either through happy accidents or studying the medium).

Another interesting dynamic is that when we say we prefer the “old” look, there’s a huge survivorship bias at play. The old films *that we still watch today* aren’t the mediocre ones. It’s the great ones that have endured (Lindy). What we’re truly learning from isn’t primarily tied to the age of a film, but rather to its quality and craftsmanship.
