581: GPT-5: Early Verdict & Who Should Worry, 100% Tariffs on Chips, Dario Amodei & John Collison, Distress Investing, 6,552 Coal Plants, Opus 4.1, and Gemini Storybook

"the traffic flows in both directions"

Neither love without knowledge, nor knowledge without love can produce a good life.

—Bertrand Russell

🧠↔💭✍️ Friend-of-the-show Gurwinder Bhogal recently wrote:

Writing is not just communicating with others, but also with your own unconscious mind.

I love it. It’s very true.

I often say that writing is thinking, but writing is also a way to discover what the rest of you thinks and feels. The conscious part, which we often mistake for the entirety of ourselves, can be surprisingly out of the loop on important things.

Writing clears a path between your conscious and unconscious, and the traffic flows in both directions.

In other words, it’s not just about digging up buried ideas in the hidden part of your mind, it’s also about planting seeds there that may grow later. 🪴🌱

🗓️📋✅⏳ Here is part two of looking back at various things I added to my life and how they turned out:

📲🦉👩🏻🏫🇪🇸🇮🇹🇯🇵🇩🇪 I still use Duolingo daily, but as expected, I’ve slowed down to about one lesson per day. I mostly bounce between Italian, German, Spanish, and Japanese (I’ve put Chinese on pause for now, it’s so hard). I have no illusions I’ll be fluent just from this, but I’ve learned plenty of vocabulary that I wouldn’t otherwise know, and I think I have a better feel for the vibe of each of these languages. That’s worth something! My wife and older son still do Spanish daily.

🏃♂️ Running update: Pretty much a failure. I had gotten to the point where I ran a few times a week, but when I started strength training, running got bumped. I still take walks, but even that has been reduced in recent months (‘I’m too busy’ is always an excuse… I know I could prioritize it more 😔)

🎒🧱 Rucking is another bust, but hopefully a temporary one. I want to come back to it. I think it’s such an elegant thing: if you’re going on a hike anyway, add a bunch of weight and make it count. I haven’t bought a dedicated rucking pack yet (Goruck seems to make good ones), so I had just been walking with my regular backpack with duct-taped bricks in it.

🔌🚘⚡🛣️ We took our first long family roadtrip in our EV6 electric car. We went to Connecticut. 10 hours down, 9 hours back. Surprisingly, we didn’t go crazy. The kids were patient. The fast-charging CCS infrastructure is hit & miss, but it’s good enough that it never was a real issue. There are more 350kW chargers than I expected, and a couple of times my car hit its 240kW max. By the time we were done stretching our legs, eating a snack, and using the restrooms, we had enough charge, so it didn’t add too much time. Only once did we get to a station full of EVs waiting… we drove to the next one, and it was empty.

🔊🎶📲 I’m back to being happy about my Sonos setup. I have two Era 100s, plus an Arc and Sub Mini spread over the kitchen, dining room, and living room. I loved them at first, then Sonos released their new app and all hell broke loose. I considered shorting the stock for a few months… and probably should have. Then the CEO got booted, the company refocused on software quality and reliability, and while it took what seemed an eternity, things are back to being solid. I love being able to play music on a whole floor of the house perfectly in sync, and with small-footprint decent-sounding speakers.

🔋🔋🔋⌨️ 🖥️ 🛜 I’ve only had one power outage since I bought my giant UPS batteries (1,152Wh LiFePO₄ BLUETTI) for my desktop computer + fiber modem & wifi router, but it worked! I just kept on trucking.

More later.

🏦 💰 Liberty Capital 💳 💴

🤖5️⃣ 🤔 GPT-5 is Here! First Impressions & Who Should Worry (Anthropic? Google?)

The expectations were *incredibly* high for this one, which meant they were almost impossible to meet. Within minutes of the livestream video announcing and demo’ing GPT-5, Twitter was already full of people claiming to be disappointed or saying it sucked.

As Nathan Lambert puts it:

The people discussing this release on Twitter will be disappointed in a first reaction, but 99% of people using ChatGPT are going to be so happy about the upgrade.

Keep in mind that for the hundreds of millions of free users, the upgrade over the non-reasoning free models they were using to GPT-5 will be a *much* bigger step up than what paid users will get going from o3. A strong model just got democratized, the AI floor has been lifted.

Maybe it’ll be the iPhone 5 moment for mainstream AI? 🤔

Too early to tell.

Opinions are always volatile when a new model drops. Benchmarks only tell part of the story, and confirmation bias runs high (it didn’t help that OpenAI’s charts had some atrocious errors, an easy target 🙈📊🎯).

OpenAI claims they’ve:

Reduced hallucinations, improved instruction following, and minimized sycophancy

Leveled up GPT-5 in three of ChatGPT’s most common uses: writing, coding, and health

Cut deception rates from 4.8% for o3 to 2.1% for GPT-5 reasoning responses in real-world use cases

I hope they’re correct, because while this is hard to measure on benchmarks, it makes a big difference in real-world usage.

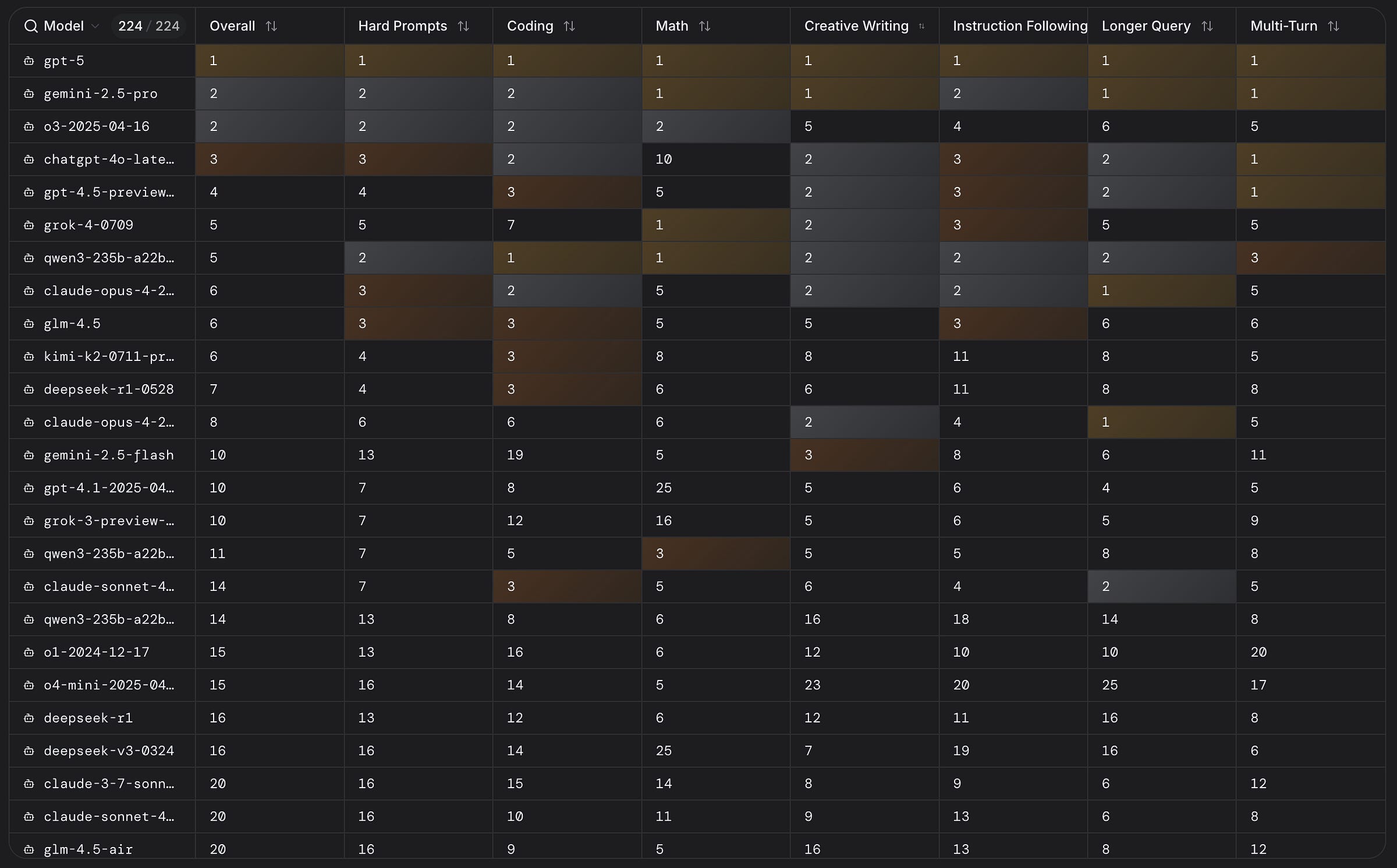

LMArena has gathered a few thousand blind votes on GPT-5 under a codename, and it currently ranks #1 in every category (tied with Google’s Gemini 2.5 Pro in a few, but still a few points ahead).

It appears impressive in coding:

In WebDev Arena, GPT-5 sets a new record:

+75 pts over Gemini 2.5 Pro

+100 pts over Claude Opus 4

The best model to date for real-world coding.

This could still change. We’ll see where it settles, and whether GPT-5 pulls ahead or falls back.
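To get a feel for what those point gaps mean, here is a rough sketch that converts a rating gap into an expected head-to-head win rate. It assumes the WebDev Arena scores behave like classic Elo ratings on a 400-point logistic scale, which is an assumption on my part and not something the leaderboard quote states; the function name is made up for illustration.

```python
def expected_win_rate(rating_gap: float) -> float:
    """Expected win probability for the higher-rated model.

    Assumes a classic Elo-style logistic curve with a 400-point scale
    (an assumption; the arena's exact scoring model may differ).
    """
    return 1.0 / (1.0 + 10 ** (-rating_gap / 400.0))


# Gaps quoted above: +75 over Gemini 2.5 Pro, +100 over Claude Opus 4.
for rival, gap in [("Gemini 2.5 Pro", 75), ("Claude Opus 4", 100)]:
    rate = expected_win_rate(gap)
    print(f"GPT-5 vs {rival}: +{gap} pts -> about {rate:.0%} expected win rate")
```

Under that assumption, +75 and +100 points work out to roughly 61% and 64% of blind matchups: a clear edge, but far from a sweep.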

Let’s also keep it in context: LMArena’s methodology limits what can be tested, so it’s not representative of a model’s full capabilities. I doubt many people do extremely complex, highly iterative real-world tasks in there. And it’s unclear whether reasoning models get to spend as many tokens as they might in, say, Cursor.

Another important thing to remember about benchmarks, from Lambert again:

the march of progress on evals has been slowed for a while because model releases are so frequent. Lots of small steps make for big change. The overall trend line is still very positive, and multiple companies are filling in the shape of it.

This makes for smaller jumps from model to model, but over, say, a year, progress is still huge. Remember, o3 came out on April 16, 2025, less than four months ago!

GPT-5 merges what used to be multiple models into one:

GPT-5 is a unified system with a smart and fast model that answers most questions, a deeper reasoning model for harder problems, and a real-time router that quickly decides which model to use based on conversation type, complexity, tool needs, and explicit intent (for example, if you say “think hard about this” in the prompt). [...] Once usage limits are reached, a mini version of each model handles remaining queries. In the near future, we plan to integrate these capabilities into a single model.

I dislike that they removed the older models and the option to manually pick a sub-model. Smart-routing is a better default for 99% of users, but why not let power users override it?
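For intuition only, here is a toy sketch of the kind of routing OpenAI describes: a fast default, a reasoning model for harder or tool-heavy requests, explicit intent in the prompt, a mini fallback once usage limits are hit, and the manual override I wish power users had. Every name, threshold, and heuristic below is hypothetical; OpenAI has not published how the real router works.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical model labels, not real API identifiers.
FAST = "fast-model"
REASONING = "reasoning-model"

# Hypothetical phrases that signal explicit intent to reason longer.
REASONING_HINTS = ("think hard", "think carefully", "step by step")


@dataclass
class Request:
    prompt: str
    needs_tools: bool = False
    over_usage_limit: bool = False
    override: Optional[str] = None  # manual pick for power users (hypothetical)


def route(req: Request) -> str:
    """Pick a model label for a request using crude, made-up heuristics."""
    if req.override is not None:
        return req.override  # a manual choice wins over smart routing

    text = req.prompt.lower()
    explicit_intent = any(hint in text for hint in REASONING_HINTS)
    looks_complex = len(text.split()) > 200  # stand-in for a real complexity signal

    if explicit_intent or req.needs_tools or looks_complex:
        model = REASONING
    else:
        model = FAST

    # Past the usage limit, a mini version of the chosen model takes over.
    if req.over_usage_limit:
        model += "-mini"
    return model


print(route(Request("What's the capital of France?")))
print(route(Request("Think hard about this: is P equal to NP?")))
print(route(Request("Quick question", over_usage_limit=True)))
```

The override check sits first on purpose: smart routing stays the default for everyone, and anyone who sets `override` skips it entirely.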

A few people have been given early access and spent a couple of weeks experimenting with GPT-5. Here are my highlights of what they have to say (and who’s smiling the most today 😎 and who’s not going to sleep well 😱).