606: Alpha School, Housing Affordability Moonshot, ChatGPT Image 1.5, Ford Kills the F-150 EV, Novo Nordisk vs Eli Lilly, Claude Code, DeepMind, Zach Dell, and Flea

"supercharged my ability to be a mentor/tutor for my kids."

I think about decisions in three ways: hats, haircuts, and tattoos.

—James Clear

🎒🏫🧑🎓🍎📲 I’ve been thinking a lot about what and how my kids learn these days.

My oldest is going to secondary school next year (where we live, it’s six years of primary and five years of secondary school).

While the school he got into is pretty forward-thinking compared to most others here — they’ve been using iPads since 2011, and he got into an outdoors program that has 30 outings a year to do things like overnight winter camping ⛺, mountain biking 🚵♂️, canoe trips 🛶, rock climbing 🧗, etc — in many ways it’s still fairly traditional, with a teacher lecturing 30 kids.

We’ve known for ages that one-on-one mentoring/tutoring + individualized pace + fast feedback + mastery focus is extremely effective and group lectures are way down the list, but we didn’t have a way to scale personalized education until recently. (Benjamin Bloom’s landmark 1984 research reported that students tutored one-on-one using mastery learning techniques performed two standard deviations better than students in conventional classroom settings, putting the average tutored student above ~98% of the control group.)
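As a quick sanity check on that "two standard deviations ≈ 98%" figure, here is a minimal sketch. It assumes test scores in the control group are roughly normally distributed (my assumption for illustration, not something taken from Bloom's paper), and `percentile_of_shift` is just a hypothetical helper name.

```python
from statistics import NormalDist


def percentile_of_shift(sd_shift: float) -> float:
    """Share of a normally distributed control group that scores below a
    student sitting `sd_shift` standard deviations above the control mean.

    Assumption: scores follow a normal distribution.
    """
    return NormalDist(mu=0.0, sigma=1.0).cdf(sd_shift)


two_sigma = percentile_of_shift(2.0)
print(f"{two_sigma:.1%}")  # roughly 97.7%, i.e. the "~98%" in the text
```

Under that assumption, a student who is merely average among the tutored group would outscore almost everyone in the conventional classroom.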

A couple of podcast interviews with Joe Liemandt, the current Principal at Alpha School and billionaire founder of Trilogy Software, got me really intrigued by their educational model, and made me wonder how I could apply some of those principles with my kids.

I *highly* recommend listening to at least one of these:

The Alpha School model compresses what takes 6 hours + homework elsewhere (core academics) into about two hours a day of self-paced, mastery-oriented, adaptive computer-based learning, with adults acting more like coaches/guides than traditional teachers.

The rest of the day is spent on projects and ‘life skills’ activities instead of conventional classroom lectures. They give the example of students running an Airbnb and a food truck to learn about entrepreneurial skills, recording videos shared with other students to learn public speaking, doing 5k races, etc.

The deal 🤝 they make with students is basically: If you do a good job on the academics in the morning, doing four 25-minute Pomodoro blocks ⏲️ on the software, you are “buying back” the rest of your day to work on fun projects.

I know that as a student, I would have loved that. Trade two focused hours for the rest of my day back? Yes please! So much better than being lectured at for 6 hours a day… 🥱

Here’s an intro video to the school that goes through what they do in each grade:

To be clear, if you haven’t yet listened to the podcasts I linked above: They’re not parking kids in front of ChatGPT and hoping that the chatbot will educate them. That’s not at all how they use software and AI.

My understanding is that they use software because it allows them to individualize the pace for each kid, and for everything to be tracked very granularly, so they can measure mastery.

It sounds more like a less static and more modern version of Khan Academy, and the “buy back the rest of your day” deal creates the motivation 🥕 (the software alone wouldn’t be enough).

If someone struggles with something, they can spend more time on it until they master it. You can’t do that when there’s one teacher for 30 kids: the class moves on and some kids get left behind. Those who don’t master concepts will later be blocked by the holes in their knowledge. If they get 60-70% on tests, the 30-40% they struggle with adds up year after year, and as academic concepts get more complex, they get lost and give up 🧀

Alpha uses AI largely to better understand how each kid is doing and to tailor lessons to them and their interests. For example, if a kid loves football or Pokémon, maybe they can learn math concepts through football- or Pokémon-themed exercises. 🏈

It all sounds very intriguing and plausible to me. While I don’t know enough about Alpha to judge it (I’m sure they have plenty of detractors), I do find their model very inspirational, and it matches what I’ve been observing. It may not be perfect, but it sounds much better than what most schools currently do.

In my own experience, AI has supercharged my ability to be a mentor/tutor for my kids.

I’ve always loved teaching them things and encouraging them to ask me questions about anything they’re curious about. I do my best to come up with analogies, metaphors, stories, etc. I don’t just explain; I also ask them questions afterwards to make sure they really understand.

But however good I am as a teacher, I have *lots* of limitations.

I’ve long augmented what I know with Wikipedia and Google, but lately, AI has become the top layer on top of everything else.

I use it to make sure that *I* truly understand things so that I’m better able to teach them, to get explanations that are clearer than what I could come up with on the spot, and to ask follow-up questions when something isn’t clear or there’s an interesting tangent.

To me, this seems like a super-powerful way to use AI.

I’m learning more, my kids are learning more, and we enjoy researching things together, so it’s quality time.

But it also models for them the behavior that I want them to internalize: When you don’t know something, take a moment to look it up, and don’t just look for “the answer”, but make sure you understand *why* things are the way they are.

For example, if my kids ask: “Why is the sun hot?” ☀️ I don’t want to just say “nuclear fusion”. While that’s correct and might earn exam points, it doesn’t help them truly understand what’s going on.

Instead, I’ll talk about how the sun is so big that its gravity is enormous, pushing together the hydrogen atoms that make up most of its mass until they are so squished together that they fuse into new, heavier atoms, a process that releases energy… ⚛️

Update: Writing the above reminded me of Khan Academy.

I asked my 11-year-old, who has been obsessed with math lately, if he wanted to try it.

We created an account for him, and he decided to start with algebra, which he’ll be learning for the first time in school next year.

On the very first try, he spent 1h45 watching videos and doing exercises. On the second day, he did it for an hour. He loved that he could go at his own pace, pause videos and go back, skip ahead when things were obvious to him, etc. I had to tell him to stop because it was bedtime. 🛏️🌙

We’ll see if he sticks with it, but I explained to him that by doing this, he’s “making investments that will pay off later.” Next year, he’ll understand things better in class, get through exercises and homework faster, have more free time to do other things, and get better grades with lower stress.

🍎📲📚 The Margins App, which I love and use to track my reading, was named App of the Day by Apple yesterday. The design is delightful, and it’s being constantly updated with new features, including the ability to follow friends and see what they’re reading.

Very cool to see, and entirely deserved! 🎉

(Full disclosure: I know the founder, Paul Warren, and OSV has invested. But again, we thought it was great first and then wanted to support it, not the other way around.)

🔎📫💚 🥃 Exploration-as-a-service: your next favorite thing is out there, you just haven’t found it yet!


🏦 💰 Liberty Capital 💳 💴

🔌🛻🪫⚡ Ford Pulls the Plug on the F-150 Lightning EV, Takes $19.5B Writedown, and Pivots to Chinese Battery Tech 🤔

After making a very ambitious bet in 2021 and openly citing Tesla as an inspiration, Ford is backing away from its EV dreams and retrenching to hybrids as it expands licensing deals for Chinese technology.

Ford CEO Jim Farley: “We evaluated the market, and we made the call. We’re following customers to where the market is, not where people thought it was going to be, but where it is today. The last couple of months have been really clear to us, the very high-end EVs — the $50,000, $70,000, $80,000 vehicles — they just weren’t selling.”

Ah, yes, following customers!

Wasn’t it Henry Ford who had a line about faster horses 🐎

(note: The line appears apocryphal, but it’s still a good one)

Ford may just not be able to do this right. Rather than making its products better and more attractive, making them cheaper through manufacturing advances, and iterating rapidly toward models that do sell, they took one big swing, then retreated before iteration had a chance to compound.

It’ll probably be good for their finances in the near term, but over time, they are ceding the game to others. Batteries are still getting better every year, and as the Chinese car ecosystem is showing, there’s a lot of innovation possible.

Are Ford and other carmakers that are similarly unable to transition to making profitable & desirable EVs going to be the Nokias and BlackBerrys of this industry? 🤔

To be fair, they’re not fully giving up on electrification.

They want to make plug-in hybrids and extended-range EVs: The difference between the two is that with PHEVs, the ICE can drive the wheels, while with EREVs, the ICE doesn’t drive the wheels, it acts as a generator that recharges the battery.

This has some benefits and some downsides.

Even with a smaller battery, you can cover a lot of short-range daily driving and save a lot of fuel. And when you need to drive longer distances, the ICE has got you covered, and you can benefit from fast refueling at gas stations.

But it also means the increased complexity and weight of having two kinds of drivetrains in the same vehicle.

While you’re driving in pure electric mode, you still have to lug around the ICE. You don’t get the full EV experience with instant torque because the battery and motor are relatively small, and when the ICE is active, you don’t get that much power because the engine isn’t full-size like in a pure ICE vehicle.

But the main downside is that the tech tree for these hybrids requires maintaining a full ICE supply chain and caps the potential cost savings and performance benefits as batteries keep getting better and cheaper. How will these hybrids compete with full EVs in 10 or 15 years? (skate where the puck is going, etc 🏒)

It’s a bit like mechanical spinning hard drives vs SSDs. There was a period when both techs overlapped, but SSDs kept improving and getting cheaper. The old tech still has niches where it’s cost-effective or more convenient, but for the mainstream experience, the new tech keeps compounding advantages as it scales.

On the battery front, Ford said it was cancelling plans to make “87 GWh of nickel-manganese-cobalt batteries in Kentucky” and will instead license technology from China’s CATL to make “at least 20 gigawatt-hours a year of stationary storage batteries in Glendale, Kentucky by 2027.”

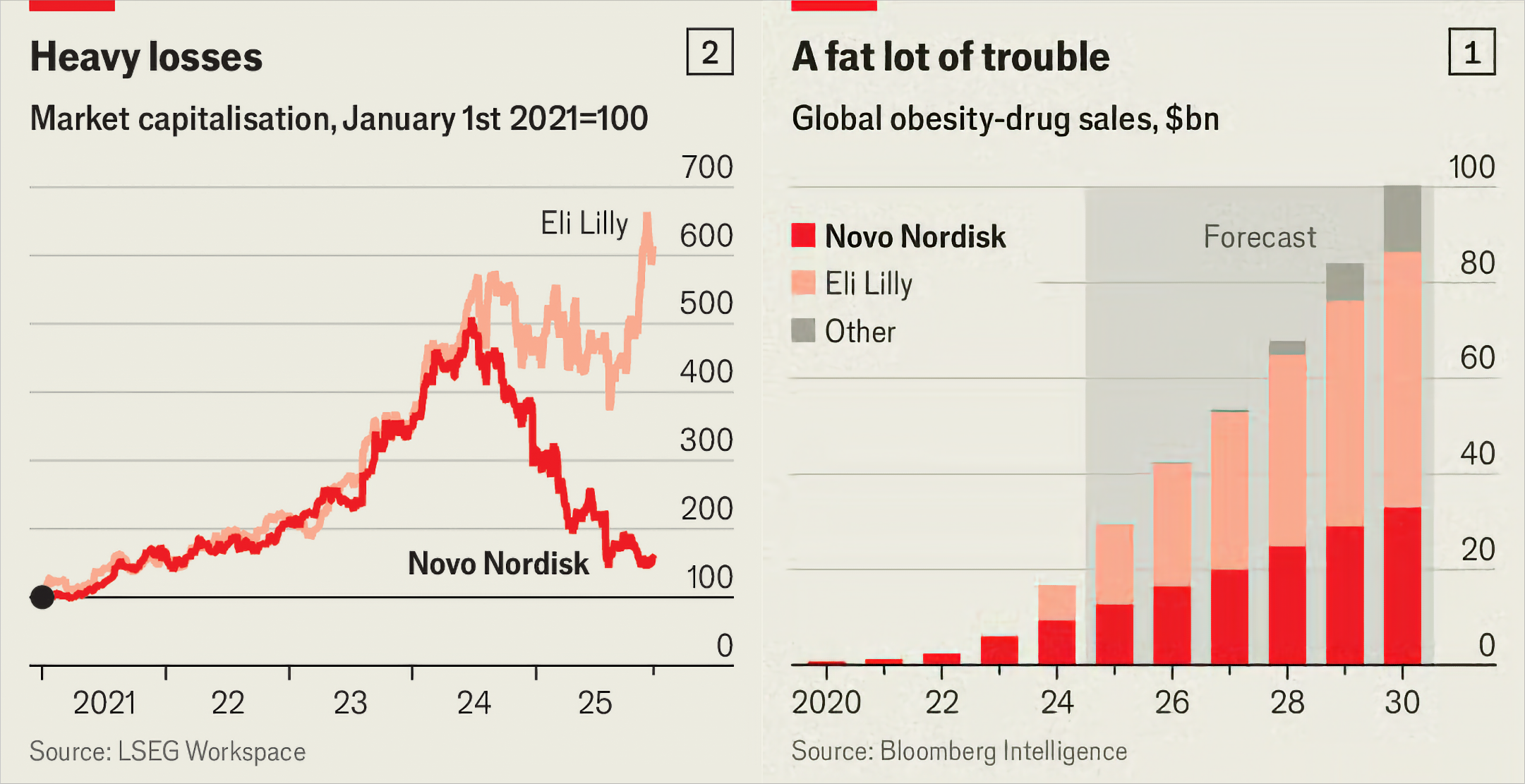

💉 Novo Had It, Novo Lost It — Eli Lilly Is Winning the GLP-1 Wars 💊

Novo had it. Novo lost it.

They had a big hit on their hands and came out of the gate strong, but Eli Lilly has not only caught up, it’s been out-executing Novo.

It’s as if Novo’s stock has been taking tirzepatide since 2024 😅

There’s a very interesting Acquired episode on the history of Novo. It’s a fascinating company.

I’m not an expert in the field, but from what I could gather, Lilly just has better drugs and a superior pipeline right now:

Head-to-head evidence: Lilly reported (and NEJM published) a trial where tirzepatide produced ~20.2% mean weight loss vs ~13.7% for semaglutide (Wegovy) over ~72 weeks.

Lilly’s retatrutide is a “triple” incretin (GLP-1, GIP, and glucagon receptor activity) and has posted very high weight-loss numbers (~28.7% at 68 weeks in one late-stage study).

Lilly also has an oral small-molecule GLP-1 (orforglipron) that has cleared a Phase 3 milestone. This is important because an effective pill could expand the market and improve adherence vs injections for some patients.

Novo has newer candidates too (e.g., amycretin, GLP-1 + amylin activity), with early data showing meaningful weight loss over short durations. Encouraging, but still early-stage compared to Lilly’s late-stage readouts.

Amycretin: In a first-in-human Phase 1 trial (n=144), 62% of participants had treatment-emergent adverse events; the most common were GI (nausea/vomiting/decreased appetite), and events were mild to moderate and dose-dependent 😬

It’s a good reminder of how tough a game investing is 🎲

If a traveler from the future — an old Biff carrying a Novo annual report rather than a Sports Almanack — told me in 2021 that the company would have a category-defining mega-blockbuster, that its revenues would go up 2.5x in 4 years, and that EBITDA margins would go up 700bps, I would probably not have predicted that the stock would be basically flat over that period (down if you adjust for inflation).

Starting point matters! Expectations matter! The market is forward-looking! Value creation vs value capture, competitive positioning, etc. It all matters!

It reminds me of Zoom: going from revenue of $622m in 2020 to $4.8 billion over the past twelve months, EBITDA margin quintupled from 4.7% to 25.7%, FCF/share up even more from 41 cents/share to $6.74/share, etc.

Yet the stock is basically flat over the past 5 years and down 85% from peak 📉😅

(I understand why, I’m not saying it’s necessarily undeserved, just that you can be right about a business’s fundamentals doing great and still be wrong about the stock)
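To make the size of that fundamentals-vs-stock gap concrete, here is a small sketch that turns the Zoom figures quoted above into multiples. The numbers are the ones in the text; the `multiple` helper and the dictionary layout are just illustrative.

```python
def multiple(start: float, end: float) -> float:
    """How many times larger `end` is than `start`."""
    return end / start


# (start, end) pairs as quoted in the text: 2020 vs. the past twelve months.
figures = {
    "revenue ($m)": (622.0, 4800.0),
    "EBITDA margin (%)": (4.7, 25.7),
    "FCF per share ($)": (0.41, 6.74),
}

multiples = {name: multiple(start, end) for name, (start, end) in figures.items()}

for name, m in multiples.items():
    print(f"{name}: {m:.1f}x")
```

That works out to roughly 7.7x on revenue, 5.5x on EBITDA margin, and 16.4x on free cash flow per share, against a share price that went nowhere.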

⚡🔋🏡🔋⚡ Zach Dell: Why Utilities Are Incentivized NOT to Innovate + Batteries as Time Machines for Energy

I so enjoyed Ti Morse’s interview with the co-founders of Base Power that I found other interviews they did. My favorite is this one that Patrick O’Shaughnessy did with Zach Dell:

Here are some of my highlights. First, on the incentives facing utilities against innovation:

Zach Dell: The cost of moving power across the transmission grid and the distribution grid has gone up really significantly in the last couple decades, primarily because this infrastructure is really aging. The way that this infrastructure is built out and upgraded, in the regulated utility markets, is utilities will basically propose capex to their public utility commission which then gets added into their rate base.

What you have is this incentive for the utilities to build, not to innovate, because they’re actually incentivized not to innovate. If they show up to the PUC with some kind of new technology that’s unproven, which is kind of the definition of innovation, the PUC says, “Well, that’s not a very good use of dollars and that’s too risky and we’re not going to approve that.”

What you have is this incentive to build where you’re earning a rate of return, a regulated rate of return, return on equity on the CapEx that you deploy. The more CapEx you deploy, the more return you generate for the shareholders.

On how batteries move energy through time rather than through space:

Zach Dell: Our view is that batteries have fundamental value on the power grid. Yes, they’re very useful for energy arbitrage, but they’re very useful for other things too. I think of batteries as more akin to poles and wires than to wind and solar. Poles and wires move energy through space. Batteries move energy through time.

On energy abundance as a foundation for human prosperity:

Zach Dell: There’s a chart I imagine many people have seen which plots energy consumption per capita with GDP per capita. And it is one of the strongest correlations in economics. There is no such thing as an energy rich poor country. Energy abundance and human prosperity are just inextricably linked.

So we are currently in a regime of increasing electricity prices. And those prices have been going up really rapidly, particularly over the last two decades, which is quite concerning. If you look to China, I think we all understand the dynamic there with regards to the race towards AGI or ASI or however you want to define it. They’re building out incredible amounts of electricity infrastructure to drive the cost of power down, and that of course drives their cost of compute down. So if we don’t work maniacally to build out the infrastructure in this country to drive the cost of electricity down, we’re going to lose the race in AI, but we’re going to lose the race in quantum and in biology in the next couple areas of innovation that are inevitably energy consumptive. [...]

Also at the economic level, it unlocks new technology. So take AI, for example. In a world where power prices just continue to go up, the cost of compute continues to go up linearly with those increases. In a world where the cost of power goes down, the cost of compute goes down too. And that allows us to do things with these models that we wouldn’t be able to do if those costs were to only increase...

There’s more good stuff in the full interview. Check it out.

🏡 🏡🏡 A Housing Affordability Moonshot 🚀🌙

You know what I’d like to see?

A REAL nation-level effort on housing, similar to what we’re seeing on rare earths or semiconductor manufacturing.

It’s just as important for the future health of the U.S., yet I see mostly complaining, not much that is constructive or ambitious, or large-scale thinking about solutions.

Instead of something like DOGE, I wish someone with political capital would just put this issue on top of the priority list and systematically de-bottleneck new housing construction.

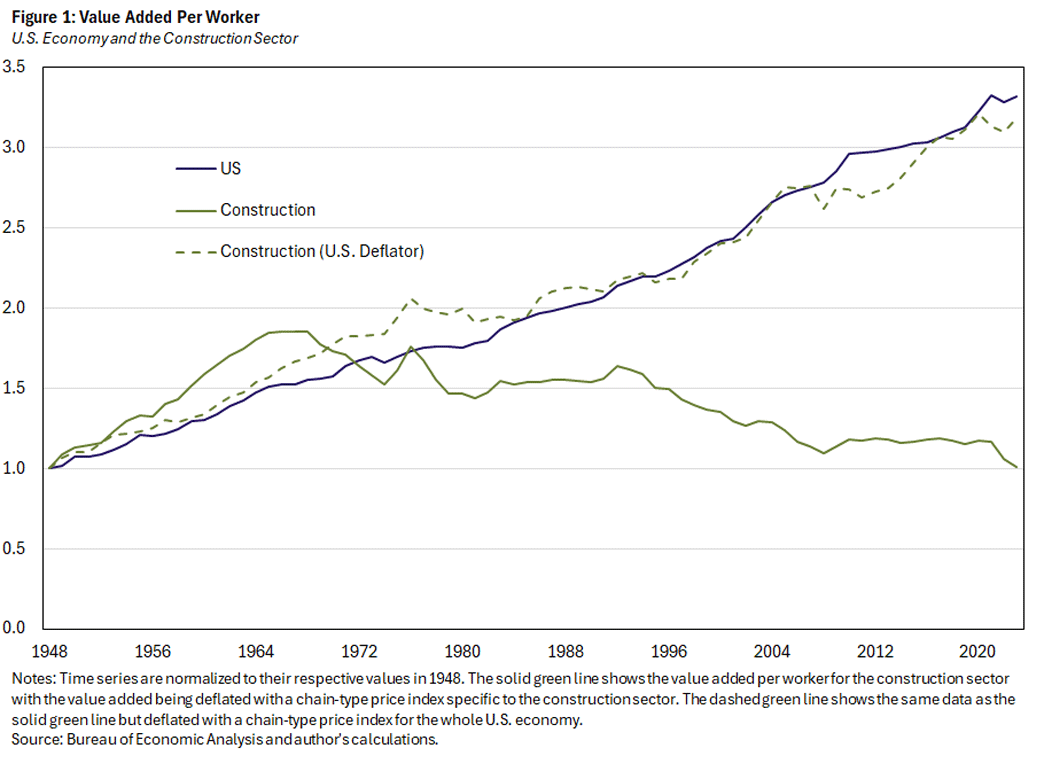

Act with urgency, shame the NIMBY blockers to new supply, reform NEPA, go after local red tape or supply issues (grid interconnects, water treatment, whatever), offer X-prizes for efficiency improvements in the industry (manufacturing has become way more productive over the past 50+ years while construction has largely stagnated or even declined*), create new incentives, learn from places that are doing it right, etc.

That’s something that large swathes of the population would get behind if it were explained properly and done right.

*Here are some stats about construction productivity:

A Federal Reserve (FEDS) paper reports average annual labor-productivity growth (1987–2019):

Construction: –0.4%/yr

Nondurable manufacturing: +1.7%/yr

Durable manufacturing: +2.9%/yr

Compounded over 1987–2019, that’s roughly:

Durable manufacturing: ~2.5× productivity level

Nondurable manufacturing: ~1.7×

Construction: ~0.88× (a decline)
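For anyone who wants to check the compounding, here is a minimal sketch. It assumes 32 annual steps (2019 minus 1987) and treats each average growth rate as constant every year, which is a simplification of mine rather than the paper's method.

```python
def compounded_level(annual_growth_pct: float, years: int) -> float:
    """Productivity level after `years` of constant annual growth,
    relative to a starting level of 1.0."""
    return (1.0 + annual_growth_pct / 100.0) ** years


YEARS = 2019 - 1987  # 32 annual steps (assumption: one step per calendar year)

# Average annual labor-productivity growth rates quoted above, in percent.
growth_rates = {
    "Durable manufacturing": 2.9,
    "Nondurable manufacturing": 1.7,
    "Construction": -0.4,
}

levels = {sector: compounded_level(rate, YEARS) for sector, rate in growth_rates.items()}

for sector, level in levels.items():
    print(f"{sector}: {level:.2f}x")
```

Small annual differences look harmless in isolation, but over three decades they open up a gap of almost 3x between durable manufacturing and construction.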

We shouldn’t accept this or settle for it. It doesn’t have to be this way! It’s not a law of nature! 🔨👷♂️🚧

🗣️ Todd Rose: From High School Dropout to Harvard Professor 🎓

Speaking of learning and the failings of the traditional education system, I really enjoyed this one. I’m also a fan of Todd’s books.

We need to ditch our ideas about what is ‘average’ and how humans are just one-size-fits-all cogs in the Taylor-era industrial machine.

Let’s bring back individuality and originality!

The story he tells about the woman who became Prince’s sound engineer on Purple Rain gives me chills. If you listen to nothing else, listen to that part.

🧪🔬 Liberty Labs 🧬 🔭

🗣️ Interview with Claude Code’s Creator 💾

This one is a few months old, but I really enjoyed it.

It really helps you understand how Anthropic thinks about products and experimentation, and how Claude Code (a big hit by any standard, generating meaningful revenue and growth for the lab) came into existence organically from an internal quick & dirty tool that spread inside the company.

🖼️🎨🤖 OpenAI Releases an Update to ChatGPT’s Image Model (v.1.5)

I don’t know if this was part of the ‘Code Red’ 🚨 push too, but considering how popular Google’s Nano Banana Pro has been, I wouldn’t be surprised if it accelerated timelines.

ChatGPT Image 1.5 aims to improve both the quality of the image and also how well it follows instructions:

It makes precise edits while keeping details intact, and generates images up to 4x faster.

Now, when you ask for edits to an uploaded image, the model adheres to your intent more reliably—down to the small details—changing only what you ask for while keeping elements like lighting, composition, and people’s appearance consistent across inputs, outputs, and subsequent edits.

The speed is a very welcome improvement, because their previous model was dog slow compared to Nano Banana 🍌

The model excels at different types of editing—including adding, subtracting, combining, blending, and transposing—so you get the changes you want without losing what makes the image special. [...]

The model follows instructions more reliably than our initial version. This enables more precise edits as well as more intricate original compositions, where relationships between elements are preserved as intended.

That’s a big claim.

So far in my tests, this is very hit & miss. Sometimes it’ll make a brilliant edit, doing exactly what I asked, and sometimes there’s no way at all to get it to do what I want, however many times I try ¯\_(ツ)_/¯

Text rendering seems like an improvement, but Nano Banana Pro still seems ahead, as far as I can tell.

So far, OpenAI’s new image model is doing really well on LMArena’s Text-to-Image and Image Edit leaderboards, and on Design Arena’s Image leaderboard, taking the top spot from Google in each, which is a bit surprising to me based on my early tests and comparisons.

Personally, I would still give the edge to Nano Banana Pro, even if the new OAI model is a big improvement over their previous one.

You can see the full announcement here, as well as a bunch of A/Bs showing images generated by the previous version and the new one side by side.

🧪🔬🤖 DeepMind Plans a Gemini-Integrated “Automated Lab” for Materials Discovery in the UK 🇬🇧

This is very cool, and could prove to be an important scientific milestone:

To help turbocharge scientific discovery, we will establish Google DeepMind’s first automated laboratory in the UK in 2026, specifically focused on materials science research.

“We think that AI systems are now ready to bridge the gap between digital and actually discovering new materials,” said Pushmeet Kohli, DeepMind’s vice-president for science and strategic initiatives.

A multidisciplinary team of researchers will oversee research in the lab, which will be built from the ground up to be fully integrated with Gemini. By directing world-class robotics to synthesize and characterize hundreds of materials per day, the team intends to significantly shorten the timeline for identifying transformative new materials.

Discovering new materials is one of the most important pursuits in science, offering the potential to reduce costs and enable entirely new technologies. For example, superconductors that operate at ambient temperature and pressure could allow for low cost medical imaging and reduce power loss in electrical grids. Other novel materials could help us tackle critical energy challenges by unlocking advanced batteries, next-generation solar cells and more efficient computer chips.

The first of many such labs, I suspect!

🚲 🧲 A Bicycle with a Magnetic ‘Maglev’ Suspension!

This is so cool. I wish I could ride it.

🎨 🎭 Liberty Studio 👩🎨 🎥

🗣️ Interview: Flea of the Red Hot Chili Peppers 🌶️🎶

I’m not even a huge Chili Peppers fan (in the rock/rap crossover genre, I used to listen to more Faith No More and Rage Against the Machine growing up), but this interview was excellent.

The more I listen to these in-depth interviews with musicians who have had longevity and success, the more I realize that there’s a lot more to them than meets the eye. They are omnivorous when it comes to music, and they really know their stuff, from bebop to R&B. They are rarely one-trick ponies, limited to one style, one genre, one technique.

There’s a very touching moment near the end when Flea talks about his musical insecurities, as someone who grew up surrounded by extremely proficient bebop musicians 🎷🎺

As one of the commenters on YouTube put it:

Flea inspires me to love everything more.

Questlove and Black Thought started performing together in high school. They never thought The Roots would make it because their classmates were Boyz II Men, Christian McBride (best jazz bassist of his generation), Joey DeFrancesco (best jazz organist of his generation), and Kurt Rosenwinkel (best jazz guitarist of his generation)