522: Broadcom Q2, AWS CEO, iPhone 16, Instagram Co-Founder + Anthropic, AlphaProteo & Chai-1, Alexa's SNAFU, TSMC, and Nick Cave

"the exhilaration of running as fast as you can"

If your boss demands loyalty, give him integrity. But if he demands integrity, then give him loyalty.

—USAF Col. John Boyd

[Check out his biography by Robert Coram, it’s a great book]

🛀💭🤖🤖🤖🤖 Does the wisdom of crowds work for AI?

The wisdom of crowds operates on the principle that when a large group of diverse individuals makes independent judgments, their collective answer tends to be more accurate than that of any single expert. This is because:

1. Individual errors and biases tend to cancel each other out

2. The aggregation of diverse perspectives provides a more comprehensive view

3. The collective knowledge of a group often surpasses that of any individual member

On average, do you get a better answer if you spin up 10,000 separate AIs (creating variation by using multiple different models and model sizes, each with a different meta prompt and fine-tuning, each doing its own online searches/RAG, etc.) and look at the collective decision or prediction?
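Here's a toy way to poke at that question. This is only a sketch under made-up assumptions: each "AI" is modeled as the true answer plus random noise, and the names `crowd_vs_individual`, `noise_sd`, and `shared_bias` are hypothetical. It compares the error of the crowd's average with the average error of a single member, first when errors are independent and then when every member shares the same bias (think: models trained on similar data).

```python
import random
import statistics


def crowd_vs_individual(n_agents, truth=100.0, noise_sd=10.0,
                        shared_bias=0.0, seed=0):
    """Return (error of the crowd average, average error of one member).

    Every agent's estimate is truth + shared_bias + its own Gaussian noise.
    All parameters are illustrative assumptions, not measurements.
    """
    rng = random.Random(seed)
    estimates = [truth + shared_bias + rng.gauss(0.0, noise_sd)
                 for _ in range(n_agents)]
    crowd_error = abs(statistics.fmean(estimates) - truth)
    individual_errors = [abs(e - truth) for e in estimates]
    return crowd_error, statistics.fmean(individual_errors)


# Independent errors: the noise largely cancels in the average.
independent = crowd_vs_individual(10_000)

# Shared bias: every agent is off in the same direction, so averaging
# removes the noise but not the bias.
correlated = crowd_vs_individual(10_000, shared_bias=5.0)

print("independent errors:", independent)
print("shared bias:       ", correlated)
```

The takeaway from the toy model: averaging helps a lot when mistakes are independent, and much less when the whole crowd leans the same way. So the diversity of models, prompts, and sources probably matters more than the raw headcount.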

🤖🗓️🗓️🗓️🗓️🗓️ Ethan Mollick writes:

We are absolutely at a place where, if AI development completely stopped, we would still have 5-10 years of rapid change absorbing the capabilities of current models and integrating them into organizations and social systems.

I don't think development is going to stop, though.

AI is a General Purpose Technology. Look for uneven & weird impacts, good and bad, on culture, society, education and work to play out in waves over time.

That’s my feeling too.

LLMs are such opaque black boxes that they may have capabilities we aren’t even aware of. Even the capabilities we do know will take a long time to go up the adoption curve and be turned into products that diffuse throughout various industries.

This tends to be the way with general-purpose innovations.

For example, think of spreadsheets, or transistors, or electricity, or plastics, or radio communication, etc. They all improved over time, but even if they hadn’t, there was enough juice in the original version for many years of absorption and impact.

🏃♂️🏃🏾🏃🏻♀️🏃♀️➡️🏃➡️🏃♂️➡️ When was the last time you sprinted?

It feels good.

I recently raced uphill at the park with my boys and it made me realize that the exhilaration of running as fast as you can is something that I don’t feel often enough. I suspect most people over, say, 25, basically never sprint again in their entire lives.

I should go do it again soon 🤔

🐦 Remember when Elon Musk bought Twitter, claiming it was crucial for social media platforms to remain politically neutral?

💚 🥃 🚢⚓️ You recognize that ideas are often undervalued, even among those whose job it is to make decisions, solve problems, or have the right idea at the right time.

Consider this: Even just one good idea per year can be INCREDIBLY valuable.

The impact of ideas extends far beyond the financial: Making better decisions with your spouse or about your kids or health can have huge lifelong positive effects. 💪

This newsletter provides exploration as a service. We can’t know in advance what will be useful, but we can have fun learning and thinking things through together.

Your next favorite thing might be just one newsletter away. Thank you for your support! 💡

🏦 💰 Business & Investing 💳 💴

🐜 💾 🔍 Broadcom Q2 Highlights 🤔

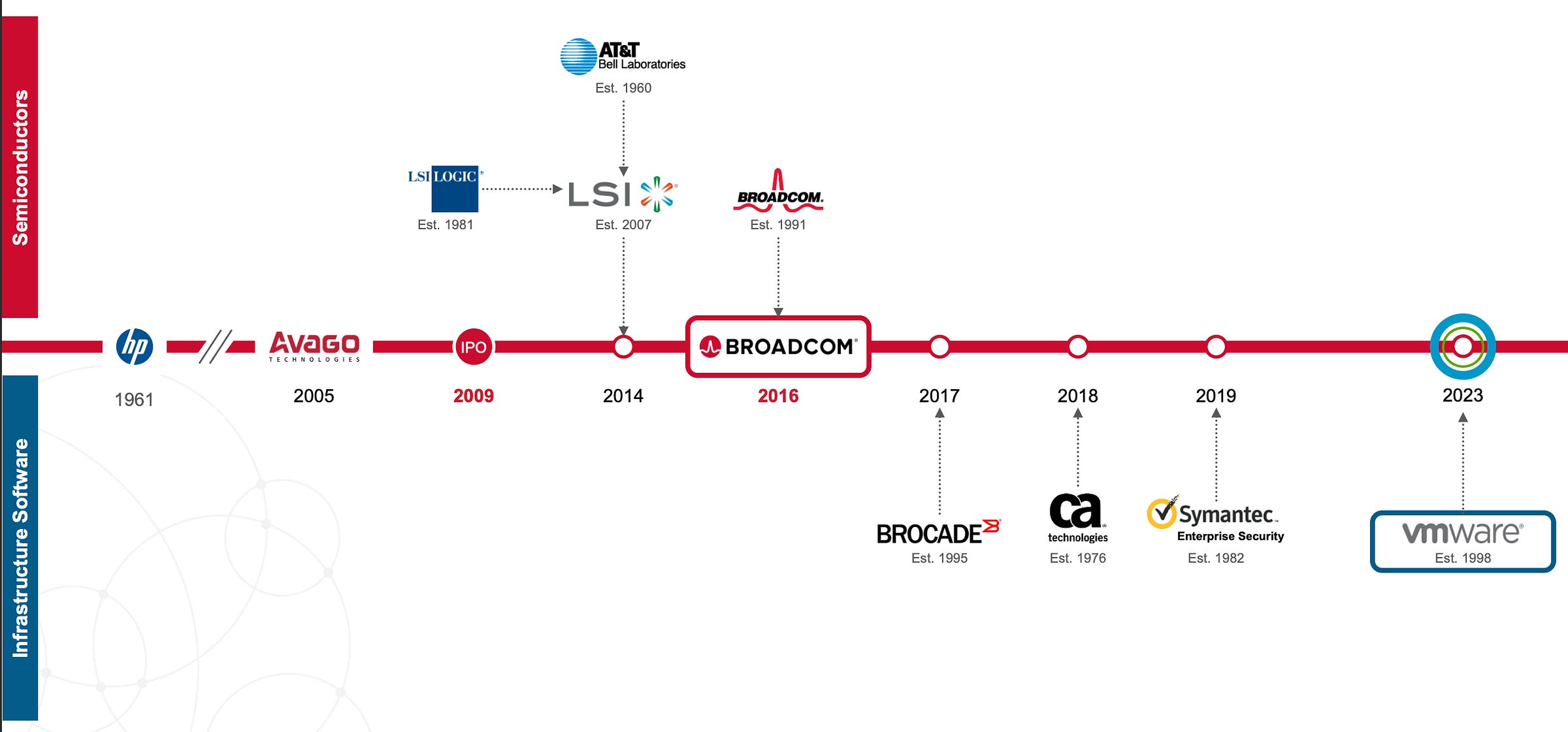

I thought it would be interesting to take a look together at Broadcom’s recent results and commentary, since they touch on so many interesting parts of the tech world.

Here are some highlights:

In our fiscal Q3 2024, consolidated net revenue of $13.1 billion was up 47% year-on-year, and operating profit was up 44% year-on-year.

As you can see above, software is now a really big part of Broadcom’s 🥧

The acquisition of VMware in 2023 for about $69bn (cue Elon Musk snickering) contributed a good chunk of that, and they’re still going through integration and optimization.

Starting with software. In Q3, infrastructure software segment revenue of $5.8 billion was up 200% year-on-year driven by $3.8 billion in revenue contribution from VMware. [...]

when we acquired VMware, our target was to deliver adjusted EBITDA of $8.5 billion, within 3 years of the acquisition. We are well on the path to achieving or even exceeding this EBITDA goal in the next fiscal '25.

On the semiconductors side:

In networking, Q3 revenue of $4 billion grew 43% year-on-year, representing 55% of semiconductor revenue.

AI is of course a big growth engine:

Custom AI accelerators grew 3.5x year-on-year.

Google’s TPUs are built with Broadcom’s IP and building blocks, so a lot of this revenue is likely coming from there.

While GPUs and TPU-like chips are sexier, networking is increasingly important since it’s what ties together these tens of thousands (now 100k with that xAI cluster, and going to millions in a few years) of chips.

In the fabric, Ethernet switching, driven by Tomahawk 5 and Jericho3-AI grew over 4x year-on-year, while our optical lasers and thin dies used in optical interconnects grew three-fold.

Meanwhile, PCI Express switches more than doubled, and we're shipping in volume our industry-leading 5-nanometer, 400-gigabit per second NICs and 800-gigabit per second DSPs.

It’s interesting the bifurcation in the market between AI-related things and the rest.

Look at this:

On to broadband. Q3 revenue declined 49% year-on-year to $557 million [...]

Q3 industrial resales of $164 million declined 31% year-on-year [...]

Moving on to wireless. Q3 wireless revenue of $1.7 billion grew 1% year-on-year, [...]

Our Q3 server storage connectivity revenue was $861 million, up 5% sequentially and down 25% year-on-year. [...]

In aggregate, we have reached bottom in our non-AI markets and we're expecting a recovery in Q4. AI demand remains strong and we expect, in Q4, AI revenue to grow sequentially 10% to over $3.5 billion. This will translate to AI revenue of $12 billion for fiscal '24, up from our prior guidance of over $11 billion.

But AI investments could trickle down to non-AI hardware and help the cycle turn, according to Hock Tan:

As AI permeates enterprises all across and digital natives, you need to upgrade servers. You need to upgrade storage. You need to upgrade networking, connectivity across the entire ecosystem.

And if anything else, we could be headed for up cycle, timing of precisely when, we're not sure, but an up cycle, that could even meet or even surpass what our previous up cycles would be, simply because the amount of bandwidth you need, the amount to manage, store, manage all these workloads that come out of AI would just the need to refresh and upgrade hardware. So that's my two cents' worth on where we're headed from this down cycle.

Hock Tan also had interesting commentary about custom AI accelerators (like the ones Google and Amazon are incorporating into their clouds, for example) and how he’s changed his mind about the winners between more general GPUs and more custom XPUs/ASIC accelerators:

large hyperscalers with very large platforms, huge consumer platform subscriber base, have the entire model predicated on running a lot of large language models, a lot of AI requirements, workloads out there. And it will drive, towards creating as much as possible their own compute silicon, their own custom accelerators, that's a matter of time. And we are in the midst of seeing that transition, which may take a few years for that to happen. [...]

I used to think that general-purpose merchant silicon [ie. Like Nvidia and AMD] will win at the end of the day. Well, based on history of semiconductors mostly so far, general purpose, small merchant silicon tends to win. But like you, I flipped in my view. And I did that, by the way, last quarter, maybe even 6 months ago. But nonetheless, catching up is good.

And I actually think so because I do think there are 2 markets here on AI accelerators. There's one market for enterprises of the world, and none of these enterprises are capable nor have the financial resources or interest to create the silicon, the custom silicon, nor the large language models and the software going maybe, to be able to run those AI workloads on custom silicon. It's too much and there's no return for them to do it because it's just too expensive to do it.

But there are those few cloud guys, hyperscalers with the scale of the platform and the financial wherewithal for them to make it totally economically rational to create their own custom accelerators because right now, I'm not trying to overemphasize it, it's all about compute engines. It's all about especially training those large language models and enabling it on your platform.

We’re getting to the point where compute > engineers:

It's all about constraint, to a large part, about GPUs. Seriously, it came to a point where GPUs are more important than engineers, these hyperscalers in terms of how they think. Those GPUs are much more -- or XPUs are much more important. And if that's the case, what better thing to do than bringing the control, control your their own destiny by creating your own custom silicon accelerators. And that's what I'm seeing all of them do.

It's just doing it at different rates and they're starting at different times.

But they all have started. And obviously, it takes time to get there. But a lot of them, there are a lot of learning in the process versus what the biggest guy of them had done longer, have been doing it for 7 years. Others are trying to catch up and it takes time. I'm not saying you'll take 7 years.

I think you'll be accelerated. But it will still take some time, step by step, to get there. But those few hyperscalers, platform guys, will create their own if they haven't already done it, and start to train them on their large language models. And yes, you're right, they will all go in that direction totally into ASIC or, as we call it, XPUs, custom silicon. Meanwhile, there's still a market for in enterprise for merchant silicon.

That makes a lot of sense. I don’t have the actual numbers, but I can see the napkin math in my head where past a certain scale and return on compute, it makes sense to develop your own even if you also continue buying from Nvidia too.

For the sake of illustration, let’s say it costs $1bn to develop a chip.

If you’re buying $100m worth of GPUs from Nvidia, there’s no way that it makes sense to invest that amount of money (not to mention the non-monetary barriers to entry, like the skilled engineers/chip designers, etc).

But if you’re buying $10bn of GPUs, you have to start thinking: Okay, if I stop paying Jensen a margin and go direct to TSMC with a design that I made in-house using a lot of IP from people like Broadcom and ARM and Cadence/Synopsys, etc, where’s my break-even and where does it become cheaper per unit of compute for me to roll my own?
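To make the napkin math concrete, here's a minimal sketch. The $1bn development cost is the illustrative figure from above; the 30% saving per dollar of GPU spend (`savings_fraction`) is purely my assumption for the sake of the example, not a real number, and the function names are hypothetical.

```python
def net_savings(gpu_spend, dev_cost=1e9, savings_fraction=0.30):
    """Dollars saved (negative means lost) by moving `gpu_spend` in-house.

    dev_cost: illustrative $1bn chip development cost.
    savings_fraction: ASSUMED share of each GPU dollar saved by going
    direct to the foundry with an in-house design.
    """
    return gpu_spend * savings_fraction - dev_cost


def break_even_spend(dev_cost=1e9, savings_fraction=0.30):
    """GPU spend at which the savings exactly pay for development."""
    return dev_cost / savings_fraction


for spend in (100e6, 10e9):
    print(f"spend ${spend / 1e9:.1f}bn -> net ${net_savings(spend) / 1e9:+.2f}bn")
print(f"break-even spend: ${break_even_spend() / 1e9:.2f}bn")
```

Under that assumed 30% saving, the $100m buyer is deep in the red, the $10bn buyer comes out well ahead, and the break-even sits at roughly $3.3bn of spend. Change the assumption and the threshold moves, but the shape of the argument stays the same.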

Nvidia’s supply constraints are also a factor, i.e. even if you keep buying from them, whenever they don’t have enough chips for everyone, having an alternative source of compute supply that isn’t available to competitors can be very valuable (AMD goes 🙋🏻♀️).

Additionally, customization can help improve efficiency — in other words, even if you aren’t as good as Nvidia at making the fastest GPU possible, by making a chip that is only optimized for your use cases rather than a more general chip that has to serve multiple masters, you can compensate and achieve very good performance per dollar on the software that matters to you.

☁️ AWS CEO Matt Garman: Notable Quotes & Highlights 🤖

AWS’ CEO was recently at the GS Communacopia conference. Here are some highlights:

we're about 18 years into the cloud now, and so it's a pretty well-established technology. And yet, there’s still the vast, vast, vast majority of workloads that have yet to move to the cloud. And so I think you all probably have lots of estimates as to how many workloads are still on-premise or move to the cloud, and you spend probably more time thinking about that than I do.

But I think you're hard pressed to find somebody that thinks it's more than 10% to 20% of the workloads out there. And so what that means is there is a massive set of workloads that are still running in on-prem data centers.

This feels a lot like what I was hearing 10 years ago.

It’s hard to believe that even after a decade of exponential growth, with AWS, Azure, and GCP having run rates in the 100s of billions range, there is still all this on-prem IT ‘dark matter’ out there, mostly up for grabs because most enterprises can’t compete with the hyperscalers (while others *are* doing much better managing their own stuff; there’s no one-size-fits-all).

A nice echo of what Hock Tan was talking about:

from 10 years ago, we went on a path to start innovating on our own custom silicon. We started on a path to innovate at the very base layers of hypervisors and virtualizations and networks and data centers and power infrastructure and supply chain. And across the board, these are non-glamorous things necessarily, but they're very differentiating.

It means that we have a very differentiated security posture than anyone else. It means we have a differentiated cost structure than anyone else. It means that we have custom-made processors where we can deliver outsized performance and better price performance gains than anyone else.

AWS started with networking and security, but now they also have their own ARM CPUs, AI accelerators for training and inference, etc. They’re not necessarily all best-of-breed, but they don’t need to be for big slices of the market.

On generative AI:

I am incredibly excited about this technology. It is a technology that over time is going to completely change almost every single industry that all of us focus on and think about and work on every single day to some level. And I really think that. And it's every single industry.

you talk to a pharmaceutical company that's using AI to actually invent new proteins and discover new proteins and new molecules that may be able to help cure cancer or cure other diseases and things like that and at a rate that's tens of thousands, hundreds of thousands more times than a person sitting there with a computer trying to guess what the next protein could look like to solve a particular disease. That is just a fundamentally different capability than ever existed before and has massive implications for health care.

More on AI’s potential to help with biotech and drug design and discovery later in this Edition.

🍎📱 Apple iPhone 16 Event 🥱

I thought I would be writing about the iPhone 16 event, but honestly, I don’t have much to say.

Why don’t we get huge surprises at these events anymore? Why aren’t they as exciting as when Steve Jobs was pulling the next iPod or iPhone out of his jeans pocket?

Products mature over time. The 16th generation of something is likely to see more iterative polish than massive changes; that’s just part of the lifecycle of a product. PCs are not nearly as exciting now as they were 20 years ago, but they are much, much better nonetheless.

Let’s not confuse boring events with bad products…

This is especially true since most people do not buy a new smartphone every year. If you wait 3-4 years between upgrades, the cumulative improvements will be pretty big even if the individual changes don’t make as many headlines as they used to.

The other factor is that Apple used to be able to keep things secret, but now, due to their scale and the fact that they are facing unprecedented industrial espionage from everyone, there are few surprises left. Things leak from the supply chain and Mark Gurman ends up publishing almost everything in advance. Past events would’ve been a lot less exciting too if we knew everything going in…

Even if I can’t hold these things against Apple, it still doesn’t make for a very exciting event. ¯\_(ツ)_/¯

🗣️ Interview: Instagram co-founder Mike Krieger, now head of products at Anthropic 🤖

I enjoyed this one, I think Mike’s a smart and thoughtful guy.

I thought his previous startup, Artifact, was interesting even though it probably couldn’t make it because the open web is in such bad shape (that product would’ve done much better 10-15 years ago).

I’ll be curious to see how he influences Anthropic’s product direction. They have great tech, but I feel like their products are behind OpenAI and even Perplexity.

🧪🔬 Science & Technology 🧬 🔭

🧬👩🔬 Chai-1 Foundational Model for Molecular Biology & Drug Discovery 🤖🧫🧪

Chai Discovery just unveiled their open weights model (the licensing is not entirely free, but the weights and inference code are being released as a software library, which is available for non-commercial use, while the web interface can be used for commercial purposes).

What jumped out at me is that while this is a new model by a young company, it is already doing slightly better than Google’s AlphaFold3 (at least in this benchmark; it remains to be seen how it compares in real-world use):

a 77% success rate on the PoseBusters benchmark (vs. 76% by AlphaFold3), as well as an Cα LDDT of 0.849 on the CASP15 protein monomer structure prediction set (vs. 0.801 by ESM3-98B).

It also can do things that others can’t:

Unlike many existing structure prediction tools which require multiple sequence alignments (MSAs), Chai-1 can also be run in single sequence mode without MSAs while preserving most of its performance. The model can fold multimers more accurately (69.8%) than the MSA-based AlphaFold-Multimer model (67.7%), as measured by the DockQ acceptable prediction rate. Chai-1 is the first model that’s able to predict multimer structures using single-sequences alone (without MSA search) at AlphaFold-Multimer level quality.

🧬📌 AlphaProteo: Protein Design & Binding In Silico

Speaking of computational biology, Google has a new model too:

Today, we introduce AlphaProteo, our first AI system for designing novel, high-strength protein binders to serve as building blocks for biological and health research. [...]

AlphaProteo can generate new protein binders for diverse target proteins, including VEGF-A, which is associated with cancer and complications from diabetes. This is the first time an AI tool has been able to design a successful protein binder for VEGF-A.

AlphaProteo also achieves higher experimental success rates and 3 to 300 times better binding affinities than the best existing methods on seven target proteins we tested.

🫣 ‘Alexa AI was riddled with technical and bureaucratic problems.’ 🤖

Ethan Mollick puts it well:

Amazon has spent between $20B & $43B on Alexa & has/had 10,000 people working on it.

It is all obsolete. To the extent that it is completely exceeded by the new Siri powered mostly by a tiny 3B LLM running on a phone.

What happened? This thread suggests organizational issues

Mihail Eric worked on Alexa and shared what he thinks went wrong in this Twitter post.

Here are some highlights:

We had all the resources, talent, and momentum to become the unequivocal market leader in conversational AI. But most of that tech never saw the light of day and never received any noteworthy press.

Why?

The reality is Alexa AI was riddled with technical and bureaucratic problems. [...]

It would take weeks to get access to any internal data for analysis or experiments. Data was poorly annotated. Documentation was either nonexistent or stale.

Experiments had to be run in resource-limited compute environments. Imagine trying to train a transformer model when all you can get a hold of is CPUs. Unacceptable for a company sitting on one of the largest collections of accelerated hardware in the world. [...]

Alexa’s org structure was decentralized by design meaning there were multiple small teams working on sometimes identical problems across geographic locales.

This introduced an almost Darwinian flavor to org dynamics where teams scrambled to get their work done to avoid getting reorged and subsumed into a competing team.

The consequence was an organization plagued by antagonistic mid-managers that had little interest in collaborating for the greater good of Alexa and only wanted to preserve their own fiefdoms.

Yikes 😬

I wonder if they will be able to ignore sunk costs and pivot the whole thing to LLMs as cleanly as possible (while maintaining legacy support for all the third party plug-ins, which is no easy feat).

🐜🇺🇸 TSMC’s Arizona Fab Gets Trial Yields Comparable to Taiwan Plants 🥳

That’s better, faster than I expected:

achieved production yields at its Arizona facility on par with established plants back home [...]

The Taiwanese chipmaker’s yield rate in trial production at its first advanced US plant is similar to comparable facilities in the southern Taiwanese city of Tainan [...]

TSMC had said it started engineering wafer production in April with advanced 4-nanometer process technology.

Of course, what will matter is scaling up volume and becoming more independent from the mothership in Taiwan over time (right now about half the employees at the AZ plant are deployed from Taiwan). But this is a good start.


🎨 🎭 The Arts & History 👩🎨 🎥

🎶🎤 Nick Cave & The Bad Seeds Live in 2013 — What is Charisma? 👩🏻🦰

🚨 First, be warned: This song is ‘Stagger Lee’ a ‘murder ballad’ full of expletives and homicidal characters. 🚨

Charisma has always been hard to define. It can take many forms.

I first saw this video years ago.

Recently, something reminded me of it. I think it’s a great study in the power of charisma. Cave isn’t exactly a ‘traditionally handsome’ fellow, but when he’s in character, he can be quite hypnotic, making crowds of thousands hang on to his every word and movement.

The interaction near the end of this song with the red-haired woman feels like a good real-world example of someone falling under a spell. 😵💫

I recommend the whole song because it’s a crescendo, but the moment I’m talking about starts at around 7:50 in the video.

I agree with the AWS CEO's assessment of the market and how little has shifted to cloud. I work in the IT industry and still see many applications that are running on-premises in company-owned or -leased datacenters. In my opinion, cloud technology is just that, a technology. As a service, it's terrible. It's still overly complex and convoluted to transition enterprise workloads to the cloud. Not to mention, most business managers are shocked at the bill received at the end of the month. Cloud services, as a whole, need to mature greatly before more adoption can occur. Presently, there are still plenty of workloads that are easier to maintain or cheaper to run on-premises for Fortune 500-sized companies.

The opportunity to grab more workloads is there for AWS and other GCPs but I believe the risk of losing workloads is also beginning to increase. Frustrations over complexity or unexpected expense increases are something I hear about more and more. I'm not a cloud "doomer", by any means, but the fact remains that there is still much innovation that needs to be done to enhance the customer's experience and reduce the friction of transitioning workloads.

Enjoyed your point about sprinting! Totally agree! I did Orangetheory 4-5 times a week for 3-4 years until the local studio shut down last year (sad). Every workout included all-outs on the treadmill where you would run as fast as you could for 30-45-60 seconds. It was pretty cool - tough to do but it made you feel like a boy even when you're 60+. Also it was a great feeling to push yourself physically, especially when you knew it was followed by a walking recovery of a minute before the next stressor.